Can we detect imagined speech from brain signals?

Published in Neuroscience

Speaking is a key function that, if impaired or lost, can severely reduce one’s quality of life. Yet millions of people live with speech disabilities, ranging from mild to severe. When other motor functions are also impaired, communication can become a real challenge. For instance, people with neurodegenerative diseases such as amyotrophic lateral sclerosis (ALS) gradually lose the ability to control their muscles (including the facial muscles) while their cognitive functions remain intact. In such cases, gazing at a screen and selecting one letter at a time becomes the only way to communicate, although it is extremely effortful and slow.

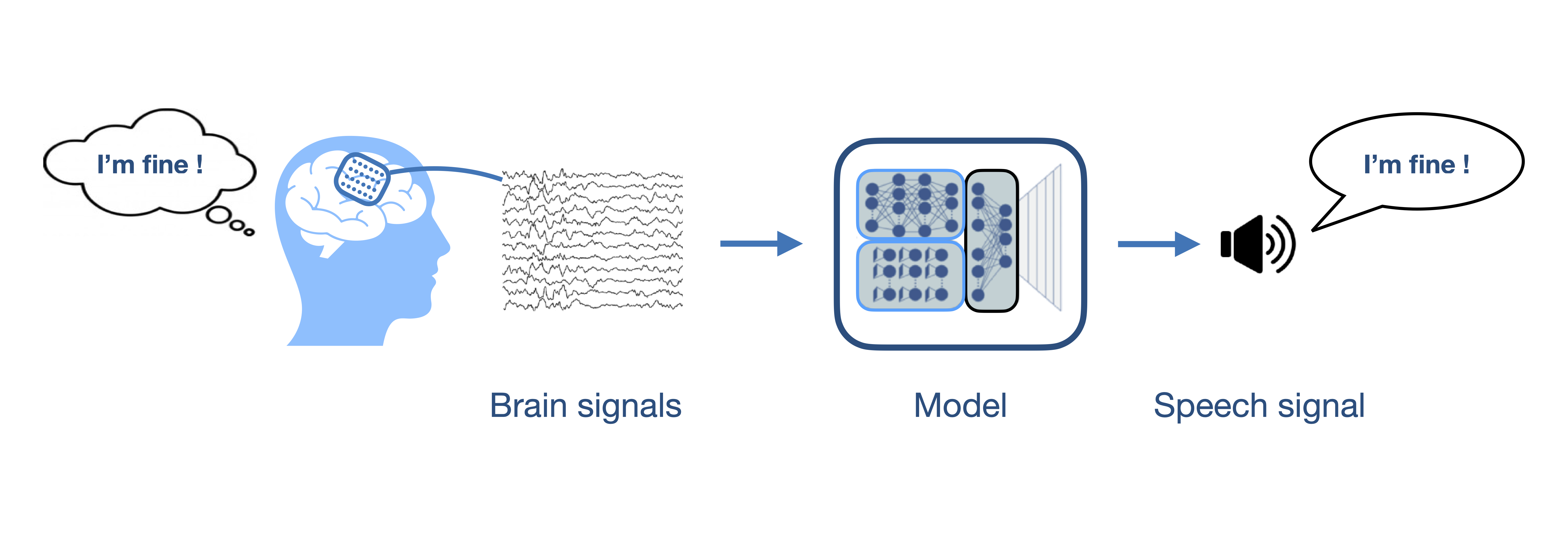

A speech neuroprosthesis is a device that would decode, directly from the brain, what a person intends to say. Electrodes placed on the surface of the brain record brain waves that are then translated into speech. Recent progress has been made in decoding speech from patients who attempt to speak [1,2,3]. These patients suffer from neurodegenerative diseases or the consequences of brainstem strokes. They are still able to vocalize some sounds, which are most of the time unintelligible.

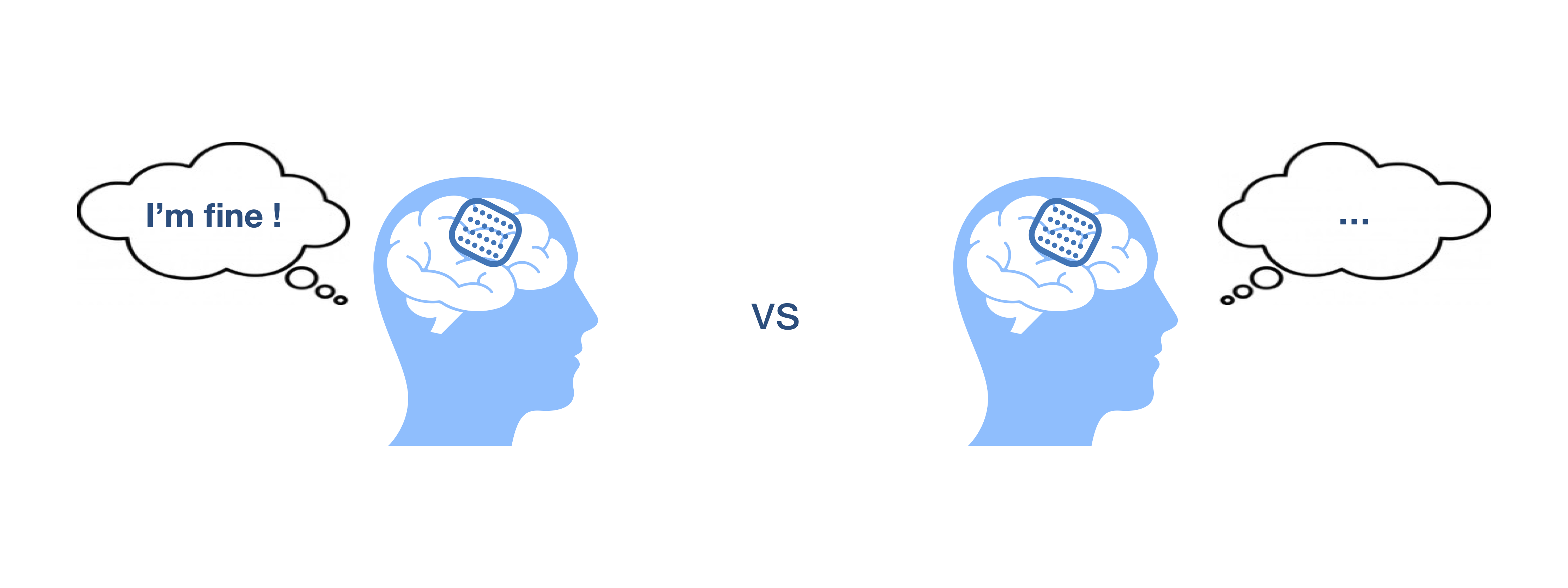

In this study, we focused on imagined speech: participants were asked to act as if they were speaking, but without moving the lips or any other articulator. Imagined speech is particularly interesting because it does not rely on residual movements that might disappear as a disease progresses. Furthermore, it requires less effort from patients, for whom attempting to vocalize can be tiring, slow and frustrating. Note that imagined speech should not be confused with simply thinking, which requires even less attention and effort.

A challenge with imagined speech is that nothing visible tells us when a person is imagining speaking. Therefore, the goal of this study was to develop a model that detects when a person is imagining speaking. This would make it possible to turn a speech neuroprosthesis on and off, and would help its implementation by highlighting the relevant brain signals to decode. It would also prevent the assistive device from producing undesired output when the patient has no intent to use it.
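To make the on/off idea concrete, here is a minimal sketch of how a detector's output could gate a neuroprosthesis. It assumes the detector emits one speech probability per analysis window; the function name, the thresholds and the example values are hypothetical and are not taken from the study. Two thresholds (hysteresis) are used so that the device does not flicker when the probability hovers around a single cut-off.

```python
def detect_speech_events(probabilities, on_threshold=0.7, off_threshold=0.3, min_windows=2):
    """Turn per-window speech probabilities into (start, end) events.

    The gate opens when a probability reaches on_threshold and closes when it
    drops to off_threshold. Events shorter than min_windows are discarded.
    The end index is exclusive.
    """
    events = []
    start = None
    for i, p in enumerate(probabilities):
        if start is None and p >= on_threshold:
            start = i  # gate opens
        elif start is not None and p <= off_threshold:
            if i - start >= min_windows:
                events.append((start, i))
            start = None  # gate closes
    # Close an event that is still open at the end of the recording.
    if start is not None and len(probabilities) - start >= min_windows:
        events.append((start, len(probabilities)))
    return events


# Hypothetical detector output, one probability per window.
probs = [0.1, 0.2, 0.8, 0.9, 0.6, 0.5, 0.2, 0.1, 0.75, 0.1, 0.1, 0.9, 0.95]
events = detect_speech_events(probs)
print(events)  # → [(2, 6), (11, 13)]
```

The isolated spike at window 8 is dropped because it lasts a single window, which is the kind of undesired output a gate like this is meant to suppress.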

Since implanting electrodes does not come without risks, we performed experiments with patients who had been implanted with electrocorticography electrodes for clinical purposes (epilepsy monitoring) but were still able to speak. These patients stay in a hospital bed for two or three weeks and participate in experiments on a voluntary basis. During our experiment, which lasted approximately one hour, participants were asked to speak, listen to, and imagine speaking a list of given sentences. This allowed us to compare how the brain processes the different speech modes.

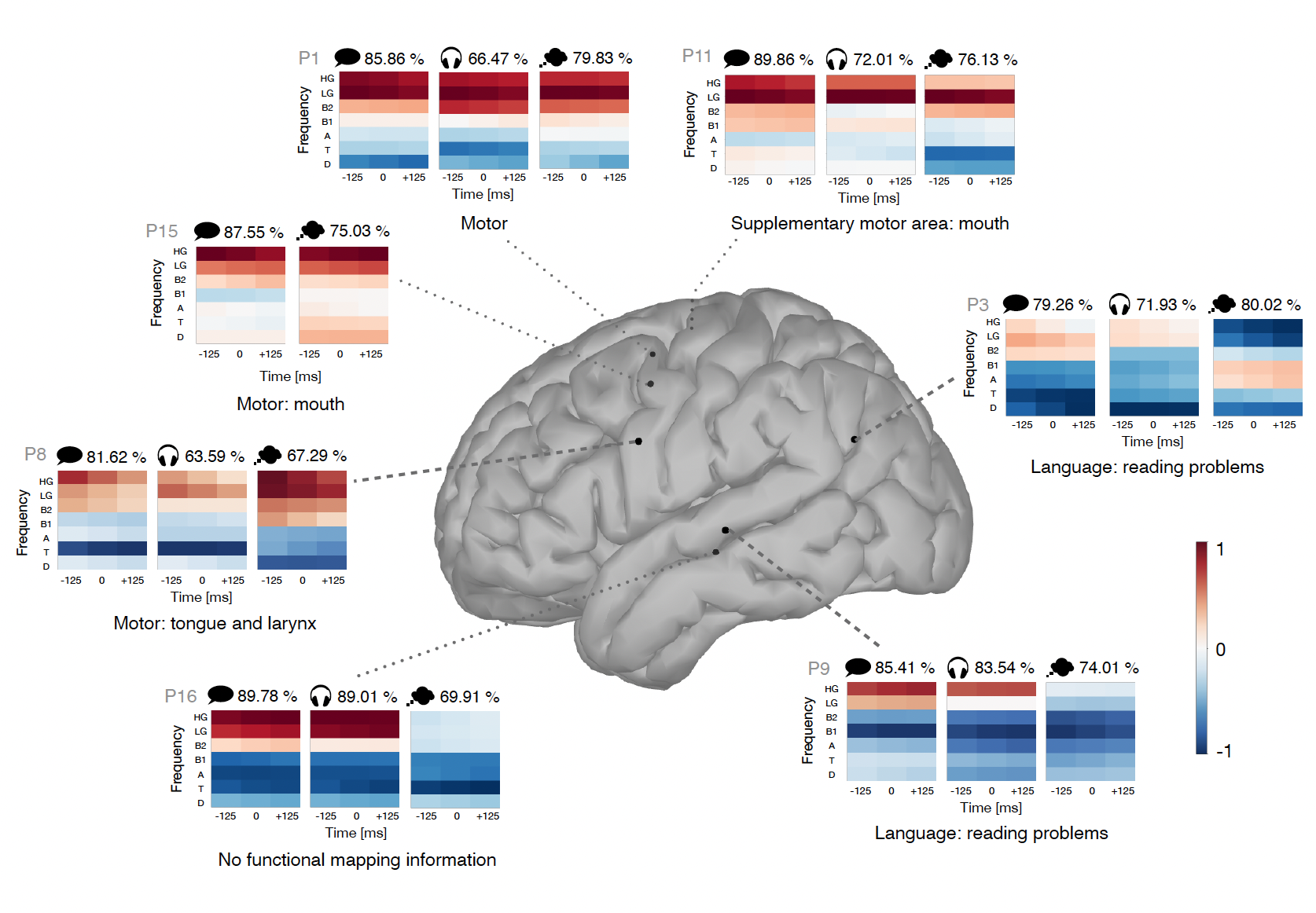

We recorded brain activity from sixteen participants with different implant locations. We were able to detect speech events from brain signals for thirteen participants in both the speaking and listening modes. Imagined speech was, as expected, more difficult to detect, but we could still detect events for ten participants. Failing to detect speech events can be due to limitations of the model, but also to the position of the implant, which might not be optimal for speech detection because it was chosen for clinical purposes and not for this experiment.

We analyzed how speech events manifest in the brain by studying the frequency bands and brain regions that made their detection possible. We observed that the motor cortex is activated during imagined speech although no actual movement is performed. We noticed strong similarities between actual and imagined speech in that brain region. The motor cortex was also activated while participants were listening, although to a lesser extent. Temporal regions were also active during imagined speech as well as during speaking and listening. However, the contribution of each frequency band in those regions differed during imagined speech compared to the other two speech modes.
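For readers unfamiliar with frequency-band analysis, the sketch below shows one simple way to measure how much of a signal's power falls in a given band. It is an illustration only: the band edges, the sampling rate and the synthetic signal are assumptions, and the study's actual feature extraction may differ. A plain discrete Fourier transform is used to keep the example dependency-free.

```python
import cmath
import math


def band_power(signal, sampling_rate, low_hz, high_hz):
    """Mean spectral power of `signal` between low_hz and high_hz (inclusive).

    Uses a direct discrete Fourier transform, which is fine for short
    windows but slow for long recordings.
    """
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        freq = k * sampling_rate / n
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers) if powers else 0.0


# Illustrative band edges in Hz (assumptions, not the bands used in the study).
BANDS = {"theta": (4, 8), "beta": (12, 30), "high_gamma": (70, 150)}

# Synthetic one-second window: a strong 20 Hz rhythm plus a weak 100 Hz component.
fs = 400
window = [math.sin(2 * math.pi * 20 * t / fs)
          + 0.2 * math.sin(2 * math.pi * 100 * t / fs)
          for t in range(fs)]

features = {name: band_power(window, fs, lo, hi) for name, (lo, hi) in BANDS.items()}
strongest = max(features, key=features.get)
print(strongest)  # → beta
```

Computing such features per electrode and per band gives a table of values that can be compared across brain regions and speech modes.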

Given the observed similarities between speech modes, we attempted to transfer models from one mode to another. We applied a model trained to detect one speech mode to detect another speech mode. We found that the transfer was possible, with some extra precautions in the temporal regions, given the observed differences in activity. Another interesting aspect is the transfer of models between participants, which was also shown to be possible for patients with implants in similar locations.
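The idea of transferring a model between speech modes can be sketched as follows: fit a classifier on windows from one mode, then evaluate it unchanged on windows from another. Everything here is hypothetical. The data are synthetic, the two features stand in for band-power values, the weaker imagined-speech response is an assumption made for illustration, and a nearest-centroid classifier is swapped in for whatever model the study used.

```python
import random


def centroid(rows):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def fit_nearest_centroid(features, labels):
    """Return one centroid per label (0 = rest, 1 = speech)."""
    return {
        label: centroid([f for f, lab in zip(features, labels) if lab == label])
        for label in set(labels)
    }


def predict(model, feature):
    """Assign the label whose centroid is closest in squared Euclidean distance."""
    return min(model, key=lambda label: sum((a - b) ** 2
                                            for a, b in zip(feature, model[label])))


def make_synthetic(rng, speech_mean, n=200, spread=0.4):
    """Synthetic two-feature windows: rest around 0, speech around speech_mean."""
    features, labels = [], []
    for i in range(n):
        label = i % 2
        mean = speech_mean if label else 0.0
        features.append([rng.gauss(mean, spread), rng.gauss(mean, spread)])
        labels.append(label)
    return features, labels


rng = random.Random(0)
# Assumption for illustration: imagined speech evokes a weaker response than overt speech.
speak_x, speak_y = make_synthetic(rng, speech_mean=3.0)
imag_x, imag_y = make_synthetic(rng, speech_mean=2.0)

model = fit_nearest_centroid(speak_x, speak_y)  # train on the speaking mode
hits = sum(predict(model, x) == y for x, y in zip(imag_x, imag_y))
transfer_accuracy = hits / len(imag_y)          # evaluate on the imagined mode
print(f"transfer accuracy: {transfer_accuracy:.2f}")
```

In this toy setting the transfer works because the two modes differ only in response strength. Where the modes differ in which features carry the signal, as reported for the temporal regions, a model trained on one mode would need extra care before being applied to another.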

Although we are not yet able to decode what a person imagines saying, this work deepened our understanding of how the brain processes speech, in particular imagined speech. The next step will be to decode the detected events.

We would like to extend a special thank you to the participants who took part in this study.

This research was conducted in the Computational Neuroscience group, KU Leuven. The data was collected at Ghent University Hospital.

You can find the full paper here: https://www.nature.com/articles/s42003-024-06518-6

References

1. Moses, D. A. et al. Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria. New England Journal of Medicine 385, 217–227 (2021).

2. Willett, F. R. et al. A high-performance speech neuroprosthesis. Nature 620, 1031–1036 (2023).

3. Metzger, S. L. et al. A high-performance neuroprosthesis for speech decoding and avatar control. Nature 620, 1037–1046 (2023).


Communications Biology

An open access journal from Nature Portfolio publishing high-quality research, reviews and commentary in all areas of the biological sciences, representing significant advances and bringing new biological insight to a specialized area of research.
