AI is transforming the practice of science and engineering at breakneck speed. Disruption and innovation in AI are daily occurrences, providing researchers with a fluid set of ideas, methods and tools that guide and elevate human insight into new, uncharted territory. How can we maximize the potential of AI and advanced computing to accelerate and sustain scientific discovery and innovation?

In a recently published paper in *Scientific Data*, *FAIR principles for AI models with a practical application for accelerated high energy diffraction microscopy*, we introduced a set of practical, concise, and measurable FAIR (Findable, Accessible, Interoperable and Reusable) principles for AI models, and described how FAIR AI models and datasets may be combined with a domain-agnostic computational framework to enable reproducible and reliable autonomous AI-driven discovery.

The definition of practical FAIR principles for AI models is inspired by the original FAIR guiding principles, which have recently been adapted to scientific datasets and research software. Two lessons stand out from these initiatives: FAIR principles for scientific datasets aim to automate data management so as to accelerate and sustain scientific discovery, while FAIR principles for research software seek to increase the transparency, reproducibility and reusability of research, and to support software reuse over redevelopment. Since AI models bring together disparate digital assets such as scientific datasets, research software and advanced computing, FAIR principles for AI models also require a computational framework in which they can be quantified. The process may be likened to chess: defining the rules (practical FAIR principles), crafting the pieces (AI models created by combining data, scientific software and modern computing environments), and building the board (the computing and scientific data fabric in which FAIR AI models and datasets are used to enable discovery).

These considerations are captured in our proposed definitions of FAIR principles for AI models. They also incorporate our personal experiences developing, sharing and using AI models in several disciplines, and encapsulate the insights of a broad cross section of scientists, ranging from undergraduate students to senior faculty. Our proposed principles aspire to streamline and facilitate the use, development and adoption of AI methodologies for a diverse ecosystem of scientists.
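To illustrate what "measurable" can mean in practice, here is a minimal sketch that scores a model's metadata against the four FAIR categories with one simple check each. It is an illustrative simplification, not the metrics defined in the paper: the `ModelRecord` fields, the `doi:` prefix convention, the set of accepted formats and the equal-weight scoring are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical metadata record; field names are illustrative, not a DLHub schema.
@dataclass
class ModelRecord:
    identifier: str = ""           # persistent ID; assumed to use a "doi:" prefix
    access_url: str = ""           # where the model can be retrieved or invoked
    model_format: str = ""         # serialization format of the model
    license: str = ""              # terms under which the model may be reused
    training_dataset_id: str = ""  # provenance: persistent ID of the training data
    keywords: list[str] = field(default_factory=list)  # searchable descriptors

# Assumed set of documented, portable serialization formats.
PORTABLE_FORMATS = {"onnx", "torchscript"}


def fair_report(rec: ModelRecord) -> dict[str, bool]:
    """Return one pass/fail flag per FAIR category (simplified, illustrative checks)."""
    return {
        # Findable: persistent identifier plus descriptive keywords
        "findable": rec.identifier.startswith("doi:") and len(rec.keywords) > 0,
        # Accessible: retrievable over a standard protocol
        "accessible": rec.access_url.startswith("https://"),
        # Interoperable: stored in a documented, portable format
        "interoperable": rec.model_format.lower() in PORTABLE_FORMATS,
        # Reusable: explicit license plus a provenance link to the training data
        "reusable": bool(rec.license) and bool(rec.training_dataset_id),
    }


def fair_score(rec: ModelRecord) -> float:
    """Fraction of FAIR categories that pass (each category weighted equally)."""
    report = fair_report(rec)
    return sum(report.values()) / len(report)


record = ModelRecord(
    identifier="doi:10.xxxx/example-model",             # placeholder, not a real DOI
    access_url="https://example.org/models/example",    # placeholder URL
    model_format="ONNX",
    license="",                                          # missing license -> not reusable
    training_dataset_id="doi:10.xxxx/example-dataset",  # placeholder, not a real DOI
    keywords=["HEDM", "Bragg peak"],
)
print(fair_report(record))
print(fair_score(record))  # 0.75
```

Returning a per-category report, not only a single number, makes it clear which principle a model fails and what metadata to add.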

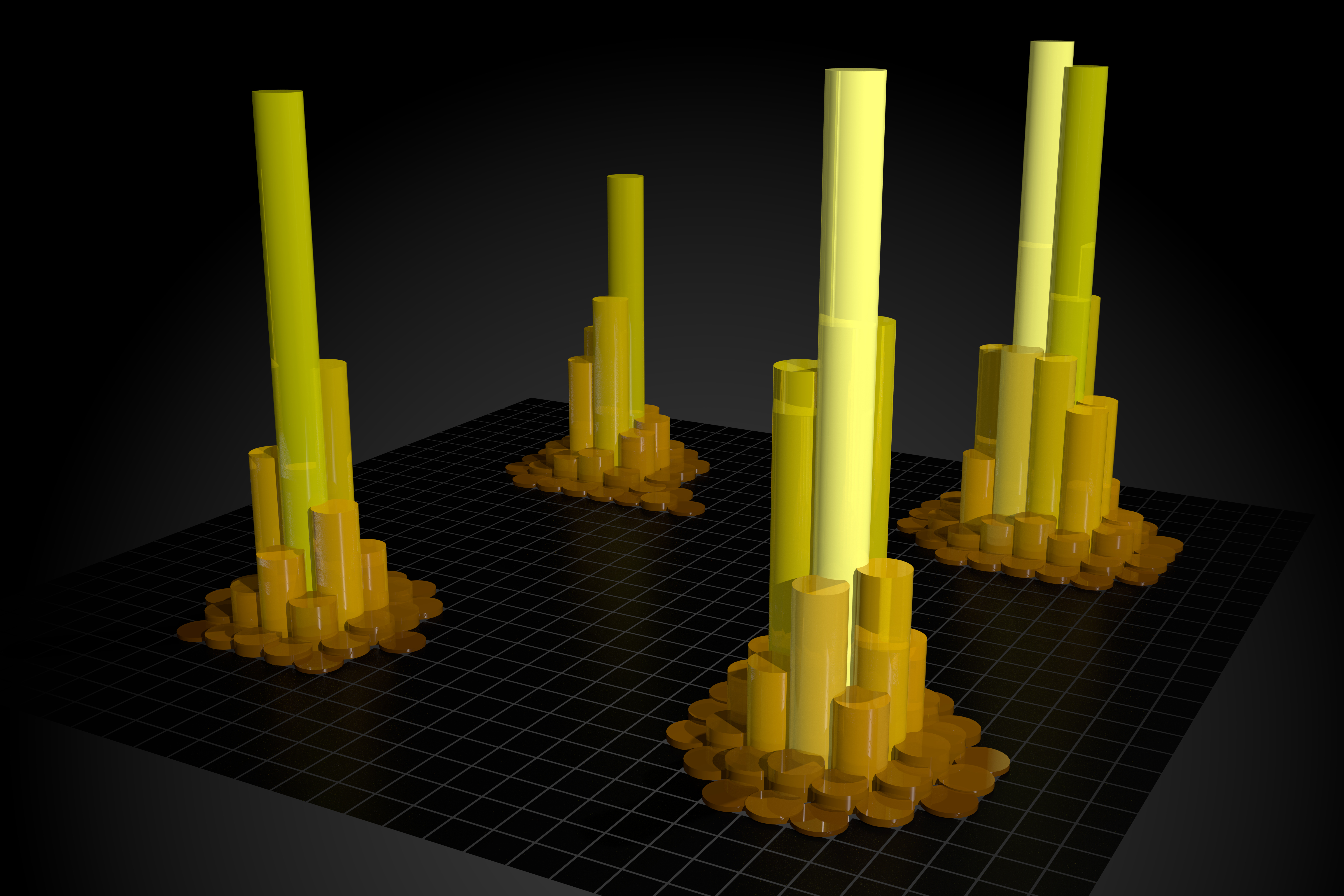

We selected high energy diffraction microscopy as a science driver to showcase the convergence of FAIR principles for data and AI models. In practice, we FAIRified an experimental dataset of Bragg diffraction peaks of an undeformed bi-crystal gold sample, produced at the Advanced Photon Source at Argonne National Laboratory, and published this FAIR and AI-ready dataset at the Materials Data Facility (MDF). We then used it to train three types of AI models at the Argonne Leadership Computing Facility (ALCF): a traditional PyTorch model and an NVIDIA TensorRT version of that model, both developed on the ThetaGPU supercomputer, and a model trained on the SambaNova DataScale® system at the ALCF AI-Testbed. All three models were published in the Data and Learning Hub for Science (DLHub) following our proposed FAIR principles for AI models.

From insights gathered across a broad ecosystem of AI users, we have learned that publishing FAIR AI models and datasets, while commendable, is not enough to accelerate the adoption and further development of novel AI tools. We therefore used funcX, a distributed Function as a Service (FaaS) platform, to connect FAIR AI models hosted at DLHub and FAIR and AI-ready datasets hosted at MDF with the ThetaGPU supercomputer at the ALCF to conduct reproducible AI-driven inference. The entire workflow is orchestrated and executed with Globus. In essence, these pieces create an environment in which end users with access to ALCF supercomputers can invoke AI models (hosted at DLHub) that are linked to datasets (hosted at MDF), and then leverage leadership-class supercomputers to perform AI inference, gaining new insights from complex datasets along with domain-informed metrics that clearly indicate when AI predictions are trustworthy.
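The shape of this inference workflow can be pictured with a small, self-contained sketch. In-memory dictionaries stand in for a model hub and a data facility, a standard-library thread pool stands in for a FaaS platform that fans out function calls, and, assuming for illustration that the task is locating peak centers in small detector patches, an intensity-weighted centroid stands in for a trained model. All names (`MODEL_HUB`, `DATA_FACILITY`, `publish_model`, `run_inference`, `is_trustworthy`) are hypothetical and are not the DLHub, MDF, funcX or Globus APIs. The trust check, which accepts a prediction within an assumed 0.5-pixel tolerance of a reference center, is only one possible example of a domain-informed metric.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory stand-ins; NOT the DLHub, MDF, funcX or Globus APIs.
MODEL_HUB = {}      # name -> callable model (role played by a model hub)
DATA_FACILITY = {}  # name -> list of samples (role played by a data facility)


def publish_model(name, fn):
    MODEL_HUB[name] = fn


def publish_dataset(name, samples):
    DATA_FACILITY[name] = samples


def centroid_model(patch):
    """Stand-in 'model': intensity-weighted centroid (row, col) of a 2-D patch."""
    total = sum(sum(row) for row in patch)
    if total == 0:
        raise ValueError("patch has no intensity")
    r = sum(i * v for i, row in enumerate(patch) for v in row) / total
    c = sum(j * v for row in patch for j, v in enumerate(row)) / total
    return (r, c)


def is_trustworthy(pred, ref, tol=0.5):
    """Assumed domain metric: accept if the pixel error vs. a reference is within tol."""
    return math.dist(pred, ref) <= tol


def run_inference(model_name, dataset_name, workers=4):
    """FaaS-style dispatch: resolve model and data by name, fan out calls, gather results."""
    model = MODEL_HUB[model_name]
    samples = DATA_FACILITY[dataset_name]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so predictions line up with samples
        preds = list(pool.map(model, [s["patch"] for s in samples]))
    return [(p, is_trustworthy(p, s["ref"])) for p, s in zip(preds, samples)]


# Toy 3x3 patches with made-up intensities, for illustration only.
publish_dataset("toy-peaks", [
    {"patch": [[0, 1, 0], [1, 4, 1], [0, 1, 0]], "ref": (1.0, 1.0)},
    {"patch": [[0, 0, 0], [0, 0, 0], [0, 0, 9]], "ref": (1.0, 1.0)},
])
publish_model("centroid", centroid_model)

for pred, ok in run_inference("centroid", "toy-peaks"):
    print(pred, ok)  # (1.0, 1.0) True, then (2.0, 2.0) False
```

Models and data are resolved by name rather than passed around as objects, mirroring how published assets are referenced by identifier; a real FaaS platform would run the same function on remote endpoints instead of local threads.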

We expect that our vision of integrating FAIR and AI-ready datasets with FAIR AI models and modern computing environments to enable autonomous AI-driven discovery will also catalyze the development of next-generation AI, as well as a rigorous approach that identifies foundational connections between data, AI models and high performance computing.
