A FAIR and AI-ready Higgs boson decay dataset - Scientific Data

Massive datasets from scientific experiments, and the new tools to extract knowledge from them, have led to new and unexpected results in our understanding of the universe. Large-scale scientific facilities such as CERN produce data at an unprecedented rate, and researchers now use tools originally developed to exploit large commercial digital assets, such as social media, to make sense of these data. This has triggered a paradigm shift in scientific computing and data analysis methodologies, driving the design and deployment of advanced computing systems, along with data benchmarks that quantify their performance on data-driven problems: high-performance computing has become high-performance data computing.

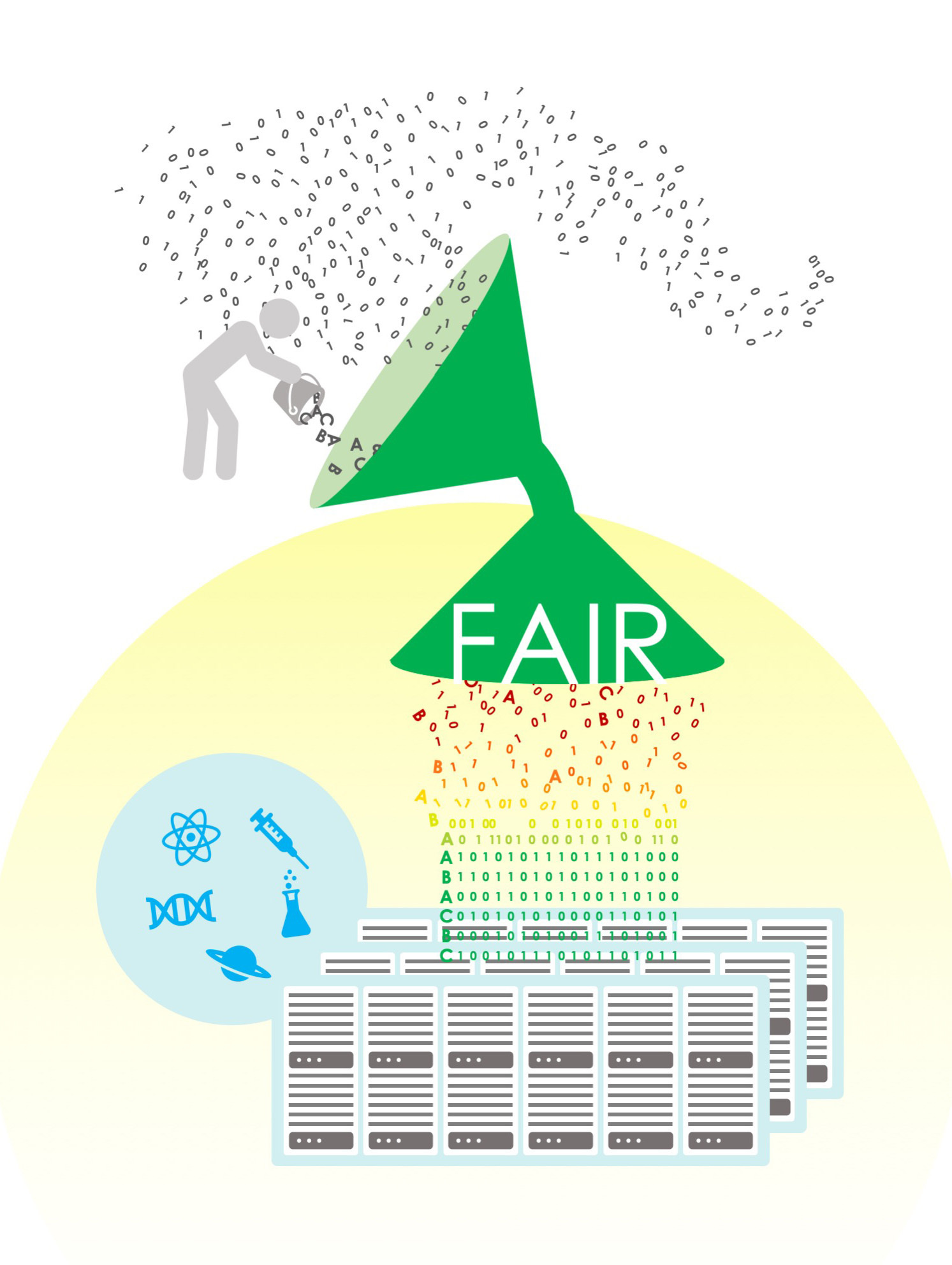

In an article we recently published in Scientific Data, we contribute to this new paradigm by describing the construction of a dataset that follows the FAIR (findability, accessibility, interoperability, and reusability) Data Principles and that was used with deep neural networks to characterize the Higgs boson, first observed at CERN in 2012. The Higgs boson is a fundamental particle associated with the mechanism through which other elementary particles, such as the W and Z bosons, quarks, and charged leptons, acquire mass. Since its discovery, researchers at the Large Hadron Collider have developed strategies to understand how it interacts with the other elementary particles, and whether there are anomalies that could indicate physics beyond our current understanding of nature. By releasing this dataset following the FAIR principles, we aim to encourage the exploration of novel deep learning techniques to better understand the Higgs boson, and to invite researchers to develop novel solutions that help us exploit the massive datasets already produced, as well as the even larger ones that will be produced in the years ahead.

This work is grounded in two themes. First, starting in the early 2010s, researchers and companies began combining innovative computing with artificial intelligence (AI). This combination sparked a revolution that is driving both increased scientific understanding and a multibillion-dollar market that touches every aspect of human behavior and social interaction. Early on, creating and adhering to best practices for data collection, curation, and processing was recognized as essential for accurately identifying and forecasting patterns. As data-driven pattern recognition evolved, AI models and data became two intertwined parts of the automation of knowledge production.

Second, starting in 2014, a number of stakeholders created the FAIR Data Principles to provide a vision for good data management and stewardship practice. The paradigm shift these principles introduced was to treat machines and humans as equally valid users of data, paving the way for automated data reuse.

Our group, the Findable, Accessible, Interoperable, and Reusable Frameworks for Physics-Inspired Artificial Intelligence in High Energy Physics (FAIR4HEP) project, responds to these changes and to the opportunities that automated data reuse offers. The project was funded by the US Department of Energy's Office of Advanced Scientific Computing Research through its FAIR Data and Models for Artificial Intelligence and Machine Learning call, with the goal of making publicly released datasets and models comply with the FAIR principles and of providing guidance to other researchers on how to do the same.

© Argonne Leadership Computing Facility Visualization and Data Analytics Group

We are working with multiple stakeholders in the US and Europe to understand and guide the adoption of FAIR principles in AI research, using high-energy physics as a science driver to harness and develop novel AI applications. Our article provides a guide that helps researchers create FAIR datasets and evaluate whether existing datasets adhere to the FAIR principles. The key motivation for producing FAIR and AI-ready datasets is to automate and streamline the creation of AI tools and approaches that leverage modern computing and scientific data infrastructure. In this picture, data should be a ready-to-use building block rather than a product that requires extensive preprocessing or feature engineering before it can be used for computation.

If we can come together as a community and agree on a set of best practices for creating FAIR and AI-ready experimental data, we can envision a future in which scientific facilities broadcast data to modern computing environments (HPC centers, cloud and edge computing, etc.) that seamlessly use these datasets to produce novel AI tools. These tools would provide trustworthy AI predictions that set robots in motion in an automated lab, order the next batch of HPC simulations, and boost human intelligence to enable groundbreaking discoveries in science, engineering, and mathematics, and to inform policy on disaster risk and vulnerability.

What are the additional advantages of such a program? Creating datasets, AI tools, and smart cyberinfrastructure that work together seamlessly will amplify the impact of each component. We will be able to understand fundamental connections between AI, data, software, and hardware, and to uncover unexpected links among disparate areas of research. Such an approach should lead to a rigorous AI framework for interdisciplinary discovery and innovation, with plentiful byproducts. If we understood the fundamental connections between data and AI, we could create minimal training datasets, design AI architectures with just the complexity the task at hand requires, and use commodity optimization schemes that reduce time-to-insight during training while delivering state-of-the-art performance with minimal computational resources.

Within the next decade, as the scientific community adopts FAIR AI models and data, we will be able to gradually bridge existing gaps between theory and experimental science. AI models that are currently trained with HPC simulations and approximate mathematical models will be refined to learn and describe actual experimental phenomena, identifying principles and patterns that go beyond existing theories. In time, AI will be capable of synthesizing knowledge from disparate disciplines to provide a holistic understanding of natural phenomena, unifying mathematics, physics, and scientific computing for the advancement of science and the well-being of our species.

Eliu Huerta for the FAIR4HEP team.
