Beyond the Kahoot! Music: Are We Enhancing Learning or Just Entertaining Students?

Published in Behavioural Sciences & Psychology and Education

Waking Up Students with a Start

Everyone who teaches in higher education knows the feeling. It's mid-afternoon, the projector light dulls the room, and class energy hits rock bottom. You notice you're losing your audience. So, you change the dynamic: you project a QR code, and that repetitive, catchy music starts playing.

Suddenly, the classroom comes back to life. Students pull out their phones, laugh, and compete. As teachers, we breathe a sigh of relief: "It works! They are motivated." But when the music stops and the class ends, a doubt arises: Have they learned more because of this, or have we simply turned the university into a TV game show?

This concern was the driving force behind our research published in Humanities & Social Sciences Communications. My colleagues and I decided to put anecdotes aside and search for the real scientific evidence behind audience response systems (ARS), analyzing ubiquitous tools like Kahoot!, Socrative, Mentimeter, or Wooclap.

Searching for Needles in a Digital Haystack

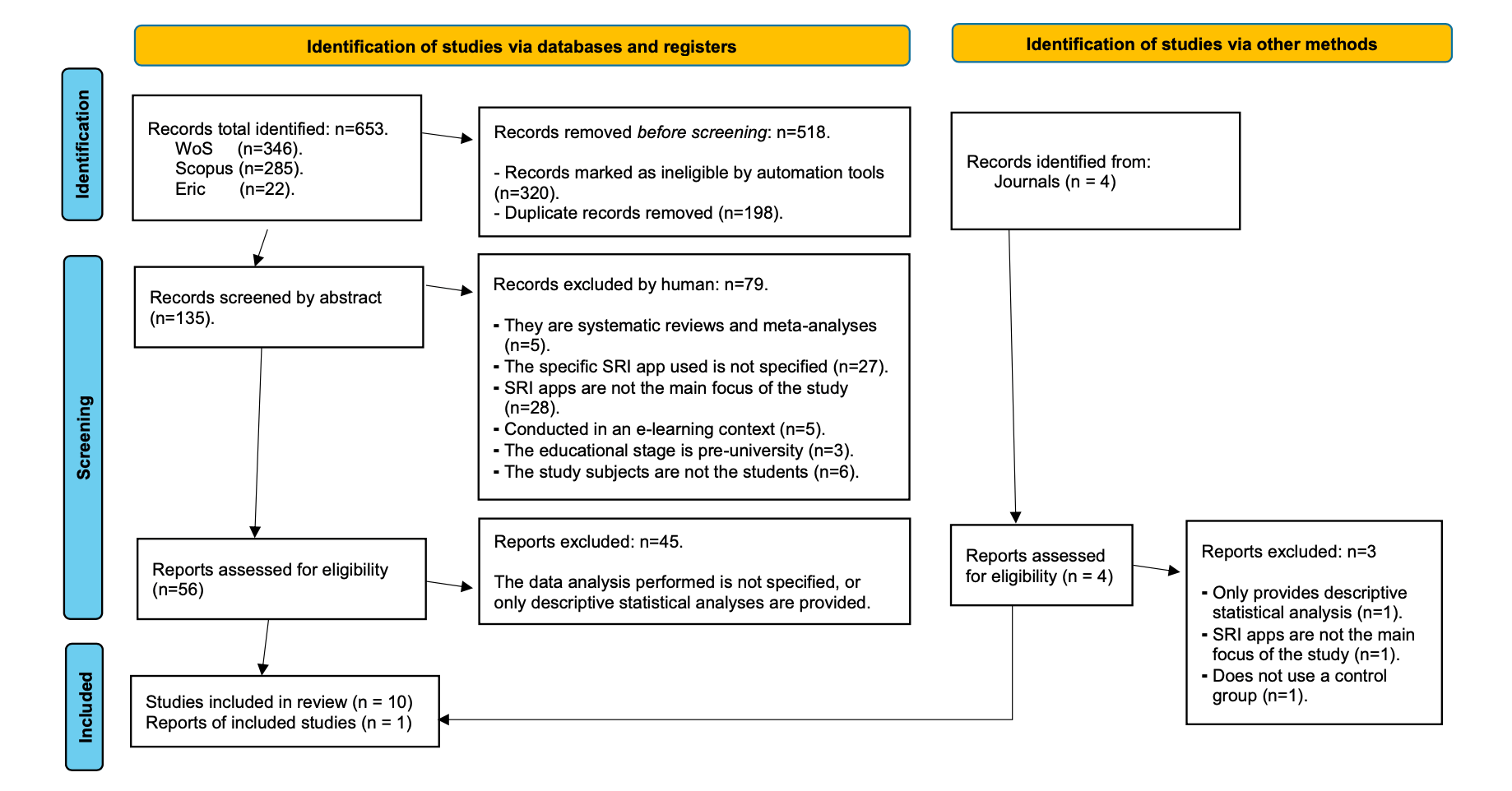

We conducted a systematic review under the PRISMA framework to answer a key question: Do these tools truly enhance learning and motivation in university settings?

What we found in the databases (Web of Science, Scopus, and ERIC) was revealing. We started with 653 records promising to analyze the impact of these apps. However, once we applied basic scientific quality filters (looking for studies with control groups and inferential statistical analyses, not just opinion surveys), the number plummeted.

Out of 653 initial studies, only 11 met sufficient methodological rigor criteria.

The evidence funnel: our selection process revealed that the vast majority of literature on ARS lacks robust experimental designs.

This fact confirms the existence of a "bubble" of educational technology publications driven by novelty, but often lacking the solidity needed to confirm real pedagogical benefits.
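To put the funnel in proportion, here is a minimal arithmetic sketch. It uses only the two figures reported above (653 records identified, 11 studies included); the variable names are illustrative, and the intermediate screening stages of the PRISMA flow are not modeled because they are not given here.

```python
# Figures taken from the review as reported in this post.
records_identified = 653  # records found in Web of Science, Scopus, and ERIC
studies_included = 11     # studies that met the methodological criteria

# Share of the initial records that survived the quality filters.
share_included = studies_included / records_identified
records_excluded = records_identified - studies_included

print(f"Included: {studies_included} of {records_identified} ({share_included:.1%})")
print(f"Excluded: {records_excluded} ({1 - share_included:.1%})")
```

In other words, fewer than 2 in every 100 records made it through the filters.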

The Novelty Trap and Kahoot!'s Dominance

Our analysis confirmed that Kahoot! is the undisputed king: it is the most used and most researched system. Its competitive game design (Game-Based Learning) seems to fit students' motivational needs.

But what do those 11 rigorous studies tell us about real learning? The results are mixed:

- Motivation: There is consensus that these tools improve participation and engagement in the short term.

- Performance: Evidence is inconclusive. Some studies show improvements in specific skills (like pronunciation or medical concepts), while others find no significant differences compared to a traditional class.

The Great Silence: Where is Long-Term Learning?

Perhaps the most critical finding is the "temporal silence." Almost no study evaluated whether what was learned was retained in the long term. Measurements are usually taken right after the "game," when emotion is high.

Without longitudinal studies, we run a risk explained by Cognitive Load Theory: if the interface and competition generate too much extraneous load, they might distract from deep learning, creating an illusion of competence that fades within a few days.

Furthermore, the "teacher effect" is rarely measured. Is it the app improving the class, or the skill of an innovative teacher? It is very likely that part of the improvements is due to teacher facilitation and not the software itself.

Why Does This Matter?

Models like the Technology Acceptance Model (TAM) suggest we adopt these tools for their ease of use. But popularity does not equal effectiveness. If we use Kahoot! only as a "defibrillator" for boring classes, we are applying a pedagogical band-aid.

Are we solving the root problem or just covering it up? Using ARS merely to wake up a class is a pedagogical band-aid.

Towards More Mature Research: 3 Recommendations

Based on our review, we propose three lines of action for the educational community:

- Beyond Satisfaction: Let's stop basing success on whether students "liked it." We need validated instruments to measure real learning.

- Longitudinality: Let's design studies that measure knowledge weeks after the intervention. We need to know what remains when the screen turns off.

- The Teacher's Role: We must investigate how the teacher's style moderates the tool's success. Technology doesn't teach alone; instructional design is key.

Conclusion: Technology with Purpose

Universities might have effective technological equipment, but we must be responsible. Audience response systems are powerful allies if we stop viewing them as magic toys and start analyzing them with rigor. I invite you to read the full article to delve into the details and help us build an education that is evidence-based, not trend-based.

Read the full paper here: Do audience response systems truly enhance learning and motivation in higher education? A systematic review
