Do people want empathy from AI?

Published in Neuroscience, Computational Sciences, and Behavioural Sciences & Psychology

Picture this: You recently submitted a paper for publication after hours of laborious data collection, analysis, writing, and wordsmithing. After this enormous effort and weeks of waiting, you are rewarded with a simple desk rejection. You feel devastated, and perhaps also somewhat embarrassed after praising this paper to your colleagues. You're hesitant to turn to these academic colleagues for support, but you also feel that your non-academic support system could not appreciate the weight of this rejection. So you turn to a constantly available and highly reliable source of support: ChatGPT. It validates your feelings and reassures you that "you're doing great by continuing to push forward, even when it's tough."

Recent advances in artificial intelligence (AI) have produced large language models that can generate expressions of emotional support that seem highly empathetic to human users, despite AI's fundamental inability to understand human emotional experience. These developments raise intriguing questions about how people seek out, receive, and perceive emotional support from both humans and AI.

Prior work has found that AI expressions of emotional support are consistently rated as higher in quality and more empathetic than human expressions of empathy, and that they make people feel more heard. However, when people learn that an empathetic message was generated by AI, it suffers an empathic penalty. In other words, people like AI-generated empathetic messages but dislike that they came from AI.

This push and pull of AI expressions of empathy led to our primary question: When given a free choice, would people choose to seek empathy from human or AI empathizers? Whereas past work has primarily assigned people experimentally to interact with human or AI empathizers, we sought to directly assess people's empathy-seeking preferences using a motivational approach. This question matters because both the perils and the potential of AI empathy hinge on people's willingness to turn to AI as a source of emotional support.

Across multiple studies, we gave participants a free choice between receiving empathy created by a human or an AI empathizer. Participants then received a real empathy response created by the source they chose, and rated how empathetic they found it and how heard they felt by the empathizer. We examined participants' choices both by asking them to imagine themselves in a variety of situations (e.g., a rejected marriage proposal, losing a friend) and by asking them to share real emotional situations they were dealing with, for which we created human and AI empathy responses tailored to their personal circumstances. These studies included hundreds of unique empathy responses created by both amateur and expert human empathizers (e.g., crisis responders).

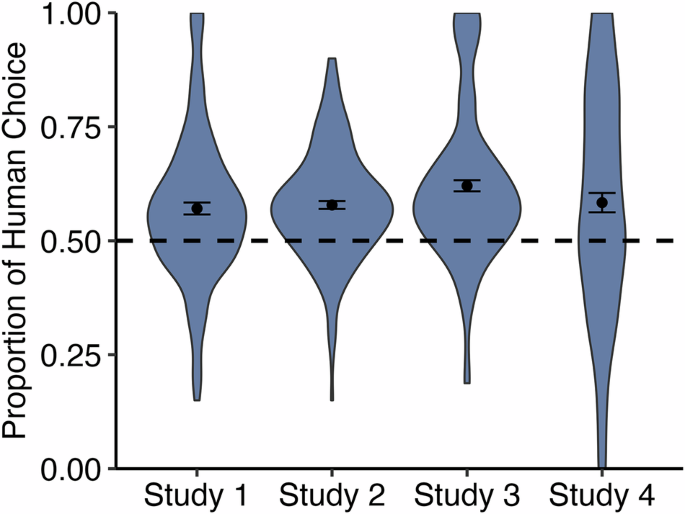

This series of studies revealed a striking paradox:

- People choose to receive human empathy over AI empathy.
- But when people do choose AI empathy, they rate it as more empathetic and report feeling more heard.

Despite avoiding AI empathy, people seem to benefit from it emotionally nonetheless. These results speak to AI's positive potential as a source of emotional support, while highlighting the importance of respecting people's preferences about where their emotional support comes from. This suggests potential applications for AI in supplementing, though not replacing, human empathy.


Communications Psychology

An open-access journal from Nature Portfolio publishing high-quality research, reviews and commentary. The scope of the journal includes all of the psychological sciences.
