Escaping the “Interpreter’s Trap”: When Explainable AI Fails to Protect Justice

Published in Computational Sciences, Law, Politics & International Studies, and Philosophy & Religion

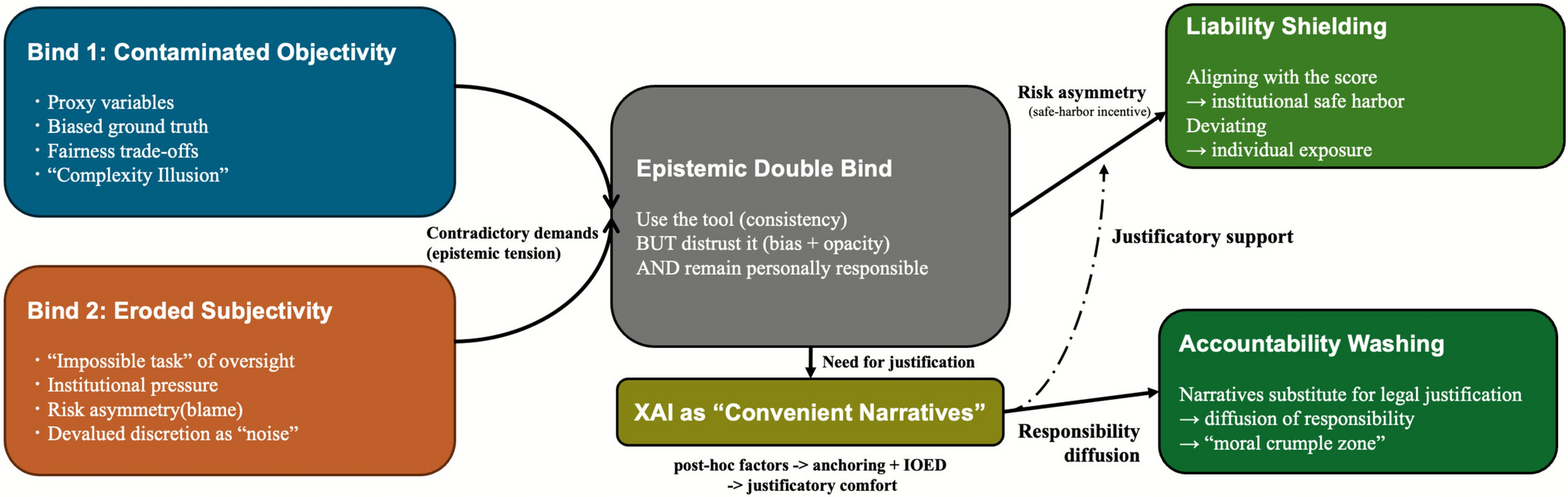

My investigation led me to the concept of "the Interpreter’s Trap." In studying risk assessment tools such as COMPAS, I realized that the problem was not merely technical opacity but a structural "institutional double bind." Decision-makers are often caught between "contaminated objectivity" (data carrying hidden historical biases and proxy variables) and "eroded subjectivity," in which their own professional discretion is devalued against the machine’s "evidence-based" output.

Crucially, my research suggests that this trap is sustained by a mechanism of "liability shielding." I found that decision-makers face an asymmetry of risk: aligning with a high-risk score provides a safe harbor of "scientific" justification, whereas deviating from the algorithm imposes a heavy personal burden of proof. In this high-stakes environment, post-hoc explainable AI (XAI) often fails to empower human oversight. Instead, it produces "convenient narratives," simplified rationales such as "prior arrests," that anchor the judge’s decision to the algorithm. In effect, these narratives convert uncertainty into institutionally defensible justifications, facilitating what I term "accountability washing."

As this was my first peer-reviewed article, the research process itself was a steep learning curve. While the initial drafts were unpolished, rigorous feedback from reviewers helped me refine my critique from simple technological skepticism into a robust socio-legal framework. This process taught me that the "trap" is not just about the algorithm, but about the specific legal and organizational structures that incentivize deference to machines.

Ultimately, I argue that we should aspire to a higher standard of system design. We must pierce the "Complexity Illusion": the false assumption that opaque, complex models are inherently more accurate. For high-stakes normative decisions, "explaining" a black box after the fact is often insufficient. I advocate a paradigm shift toward inherently interpretable "glass-box" models, that is, systems designed to be transparent from the ground up, where the logic is visible and contestable by default.

However, this is not only a technical challenge; it is also a legal and normative one. Transparency alone is meaningless if the human in the loop cannot act upon it. My paper argues that true "Human Oversight," as envisioned in frameworks such as the EU AI Act, requires more than receiving an explanation; it requires the effective power to disagree. We must couple interpretable models with a robust "Right to Contest," creating a socio-legal infrastructure in which algorithmic outputs are treated not as objective verdicts but as contestable evidence. Without this normative shift, even the most transparent model risks becoming another tool for bureaucratic validation rather than justice.

As an early-career researcher, I hope this paper contributes to the ongoing conversation about how we can build AI systems that support, rather than supplant, human ethical judgment.

Read the full paper: https://rdcu.be/e2MXo


AI & SOCIETY

This journal focuses on societal issues including the design, use, management, and policy of information, communications and new media technologies, with a particular emphasis on cultural, social, cognitive, economic, ethical, and philosophical implications.
