Behind the ChemBench Paper: Measuring AI's Chemistry Capabilities

Published in Social Sciences, Chemistry, and Computational Sciences

My journey into language models started with fine-tuning them for property prediction tasks. This connected me with Michael Pieler, and together we launched the ChemNLP project to train chemical foundation models in an open science setting.

The Challenge of Evaluation

When training a general-purpose model, evaluating its performance isn't straightforward. You can't test every possible task, so which ones should you prioritize? We searched for existing benchmarks but found nothing that suited our needs.

This gap coincided with me finishing my PhD and launching a new research group. In conversations with Philippe Schwaller, we began collecting chemistry questions. Soon, it became a team-building activity for my newly formed group: everyone contributed to collecting and reviewing questions daily, because the scale of the task demanded everyone's participation.

As we tested these questions with models, we discovered numerous technical challenges: questions requiring scientific notation, handling of Roman numerals, and other specialized notations. Edge case by edge case, we developed a powerful pipeline for formatting and parsing scientific questions and answers.
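To give a flavor of the edge cases involved, here is a minimal sketch of two such parsing steps: reading a number written in scientific notation and converting a Roman numeral (as in an oxidation state like Fe(III)) to an integer. The helper names `parse_number` and `roman_to_int` and the regular expression are assumptions made for illustration; this is not the actual ChemBench parsing code.

```python
import re
from typing import Optional

# Hypothetical helpers for illustration only; not the ChemBench pipeline itself.

# Matches forms such as "1.5e-3", "1.5 x 10^-3", "1.5 × 10^{-3}", or plain "42".
_SCI_PATTERN = re.compile(
    r"""
    (?P<mantissa>[-+]?\d+(?:\.\d+)?)      # leading number, e.g. 1.5
    (?:
        \s*(?:[xX×*]|\\times)\s*10\s*\^?\s*\{?(?P<exp10>[-+]?\d+)\}?
        |
        [eE](?P<expe>[-+]?\d+)            # compact form, e.g. e-3
    )?
    """,
    re.VERBOSE,
)

_ROMAN_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def parse_number(text: str) -> Optional[float]:
    """Return the first number found in text, or None if there is none."""
    match = _SCI_PATTERN.search(text)
    if match is None:
        return None
    mantissa = match.group("mantissa")
    exponent = match.group("exp10") or match.group("expe")
    if exponent is None:
        return float(mantissa)
    # Rebuild a canonical "1.5e-3" string and let float() do the conversion.
    return float(f"{mantissa}e{int(exponent)}")


def roman_to_int(numeral: str) -> int:
    """Convert a Roman numeral such as 'III' or 'iv' to an integer."""
    total = 0
    previous = 0
    # Read right to left: a smaller value before a larger one is subtracted.
    for char in reversed(numeral.upper()):
        if char not in _ROMAN_VALUES:
            raise ValueError(f"not a Roman numeral: {numeral!r}")
        value = _ROMAN_VALUES[char]
        if value < previous:
            total -= value
        else:
            total += value
            previous = value
    return total


if __name__ == "__main__":
    print(parse_number("1.5 x 10^-3 mol/L"))
    print(roman_to_int("III"))
```

A real pipeline has to cope with many more variants (units, ranges, Unicode minus signs, numerals embedded in compound names), which is why it grew edge case by edge case.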

Establishing a Baseline

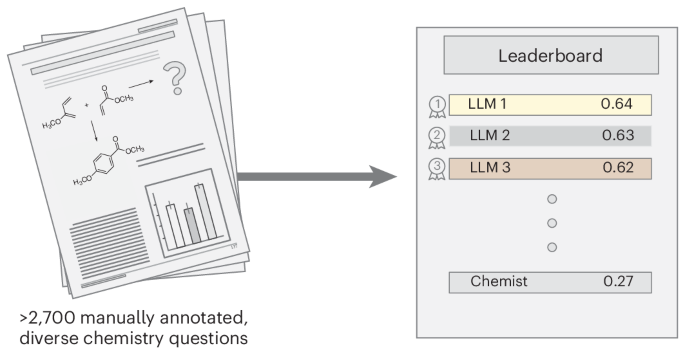

Despite our growing collection and parsing tools, we still lacked context for what constituted "good" performance. Would 50%, 80%, or 20% accuracy be impressive? To answer this, we needed to measure how human chemistry experts performed on the same tasks.

Working in a chemistry department gave us access to colleagues who could help. But this introduced a new problem: our questions were stored in specialized data formats with molecules as SMILES strings - not intuitive for most chemists. We built an entire web platform to properly render molecules, track progress, and maintain a leaderboard while addressing privacy concerns (chembench.org).

With the platform complete, we invited colleagues to participate - which led to significant management challenges.

The Science and Implications

The paper's central finding is striking: leading AI models already perform better than humans on significant portions of our benchmark. On these chemistry tests they are, in the literal sense, "superhuman", and many outperform the chemistry PhD students in our study.

As a chemistry group leader, two observations stand out:

- If a student performed twice as well as others on exams, I'd try everything to recruit them to my group. Perhaps we should be equally eager to incorporate these high-performing models into our research.

- If models excel at the same exams we give students, we need to reconsider what we're testing. What's the purpose of these questions if AI can solve them so easily?

These findings signal exciting shifts ahead in chemistry education and research. We face substantial discussions about the role of AI in science - discussions where these AI systems might eventually participate.

The entire project was an incredible team effort. I'm grateful to Adrian, Nawaf, Martino, Sreekanth, and many others from my research group and beyond who made ChemBench possible. Their collaboration opened pathways to new research projects like MaCBench.

We're actively using ChemBench for internal model evaluation, continually learning how to best measure and improve AI's understanding of chemistry. To support that improvement, we have also released other resources, such as the ChemPile, the largest and broadest chemical dataset. The benchmark is shaping up to be a valuable resource for the field, helping us navigate a future where AI and human chemists work increasingly together.

For more details, explore the full ChemBench paper and resources at chembench.lamalab.org

