I didn’t mean to write about peer review again. Peer review and impact factors are two things I never mean to write about, but being an editor gives me a privileged view of both, so I keep coming back to them. And here we go again.

This time the spur is a new paper in Scientific Reports that caught my eye, called “How Important Tasks are Performed: Peer Review”. I wrote last month about the psychology of setting deadlines for referees, so this line in the abstract made me sit up and take notice: “the time a referee takes to write a report on a scientific manuscript depends on the final verdict”. The phrase “a Deadline-effect” also appears in the abstract. My interest was piqued. Did the authors have real data? Were they going to conclude that setting deadlines of different lengths could change the decision on a manuscript?

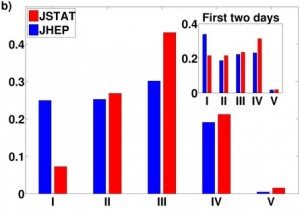

Fractions of the various decision classes: accepted (I), accepted with minor revision (II), to be revised (III), rejected (IV) and not appropriate (V), from all reports (inset: the same for short-duration processes). Adapted from Figure 2b of ref. 1.

The authors looked at data from two journals: the Journal of High Energy Physics (JHEP) and the Journal of Statistical Mechanics: Theory and Experiment (JSTAT). The journals themselves don’t really matter. What is important is that the authors had a record of every interaction these journals had with their referees: how long referees took to respond to review requests, how long they took to return their reports, and what the verdict on the paper was. From this they can plot plenty of distributions of decision times, which appear to follow Poisson-like statistics, and that makes the process amenable to mathematical modelling. They also find that reports that come back fast, within a day, contain a much higher proportion of clear-cut decisions: accept as is, or reject.
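To make the “fast reports are more clear-cut” comparison concrete, here is a minimal sketch of how one might compute it. The `Report` record, the `clear_cut_fraction` helper and the toy numbers are all hypothetical; I’m assuming each report carries its return time in days and one of the decision classes I to V from the figure caption, with I (accept as is) and IV (reject) counted as clear-cut.

```python
from dataclasses import dataclass

# Hypothetical record: not the authors' data format.
@dataclass
class Report:
    days_to_return: float
    decision: str  # one of "I".."V", as in the figure caption

# Assumed definition of a clear-cut verdict: accept as is (I) or reject (IV).
CLEAR_CUT = {"I", "IV"}


def clear_cut_fraction(reports, max_days=None):
    """Fraction of clear-cut verdicts, optionally only among reports
    returned within max_days."""
    selected = [r for r in reports
                if max_days is None or r.days_to_return <= max_days]
    if not selected:
        return 0.0
    return sum(r.decision in CLEAR_CUT for r in selected) / len(selected)


# Toy numbers for illustration only, not data from the paper.
reports = [Report(0.5, "I"), Report(0.8, "IV"), Report(10, "III"),
           Report(15, "II"), Report(20, "IV")]
print(clear_cut_fraction(reports, max_days=1))  # reports back within a day
print(clear_cut_fraction(reports))              # all reports
```

Comparing the two numbers for the real data is, in spirit, what the inset of the figure does.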

I don’t think either of those observations will surprise many people, but there is more. For a start, the two journals have different reviewing deadlines: JSTAT asks for reviews within 3 weeks, JHEP within 4. Both are slower than Nature Protocols, but then this is physics, a foreign country where they do things differently. Their data don’t support my comments on deadlines, though: the distribution of return times has the same shape for both journals, except that JHEP’s is stretched over a longer time scale.
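“Same shape, longer scale” is something you can check by rescaling. A minimal sketch, assuming (my assumption, not the paper’s claim) that the deadline sets the time scale: divide each journal’s return times by its own deadline and compare the quartiles. The helper name `rescaled_quartiles` and the toy numbers are hypothetical.

```python
from statistics import quantiles


def rescaled_quartiles(return_times_days, deadline_days):
    """Quartiles of referee return times, expressed as fractions of the deadline.

    If two journals' distributions differ only in time scale, and that scale
    is set by the deadline (an assumption), these quartiles should coincide.
    """
    scaled = [t / deadline_days for t in return_times_days]
    return quantiles(scaled, n=4)  # three cut points: Q1, median, Q3


# Toy numbers, not data from the paper: the 4-week journal's return
# times are exactly 4/3 those of the 3-week journal.
three_week = [6, 12, 18, 24]
four_week = [8, 16, 24, 32]
print(rescaled_quartiles(three_week, 21))
print(rescaled_quartiles(four_week, 28))
```

If the two curves collapse onto one another after rescaling, the deadline changes only *when* reports arrive, not the shape of the process.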

Because the distributions they get look Poisson-like, they can be modelled as a series of stochastic steps. Referees are treated as people who spend their days doing small pieces of work (subtasks), each taking about 10 minutes, which add up to whole tasks. Every 10 minutes or so, the referee chooses which subtask to do next based on the importance of the overall task it belongs to. If that were all, though, the distributions would not look as they do: power laws would appear, with a long tail of reports that take a very long time to complete. What prevents this is the ‘Deadline-effect’. Because the task has a deadline, referees know they must put a certain amount of time into the report before it falls due. The clear-cut reports come in more quickly not because they involve fewer subtasks, but because referees judge from the outset that these will be ‘easier’ subtasks to complete, and so pick them with higher probability. Always pick the low-hanging fruit first!
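To get a feel for how a deadline tames the waiting time, here is a toy simulation in the spirit of that description. It is not the authors’ model: the function `review_duration`, the single competing pool of “other work”, and the rule that the review’s priority grows like deadline divided by time left are all my own assumptions. Each step stands for one ~10-minute slot in which the referee picks either the review or something else, with probability proportional to priority.

```python
import random
from statistics import mean


def review_duration(n_subtasks, base_priority, deadline_steps,
                    other_priority=1.0, rng=None):
    """Toy model: number of ~10-minute steps until a review is finished.

    At each step the referee does one subtask, choosing between the review
    and all other work with probability proportional to their priorities.
    The review's priority is an ASSUMED function that grows as the deadline
    approaches (a stand-in for the 'Deadline-effect').
    """
    if rng is None:
        rng = random.Random()
    done, t = 0, 0
    while done < n_subtasks:
        remaining = max(deadline_steps - t, 1)  # steps left before the deadline
        # Hypothetical urgency rule: priority scales like deadline / time left,
        # equal to base_priority at t = 0 and at its maximum once overdue.
        priority = base_priority * deadline_steps / remaining
        if rng.random() < priority / (priority + other_priority):
            done += 1  # the referee spent this slot on the review
        t += 1
    return t


if __name__ == "__main__":
    rng = random.Random(1)
    deadline = 21 * 48  # 3 weeks, assuming 8 working hours/day in 10-min slots
    # 'Easy' (clear-cut) reviews get a higher base priority than hard ones;
    # both need the same number of subtasks.
    easy = [review_duration(12, 0.2, deadline, rng=rng) for _ in range(500)]
    hard = [review_duration(12, 0.01, deadline, rng=rng) for _ in range(500)]
    print(f"mean steps, easy: {mean(easy):.0f}, hard: {mean(hard):.0f}")
```

With the same number of subtasks, the higher-priority ‘easy’ reviews finish far sooner, and the rising urgency means even low-priority reviews rarely drift long past the deadline. Whether the real distributions follow this particular urgency rule is exactly what the paper’s fitting addresses; the sketch only shows the mechanism.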

I can’t quite decide what take-home message to draw from this paper. It certainly suggests that clear deadlines and ruthless chasing are needed to get reports from referees. Perhaps there is also a lesson for authors, though: if you want your paper reviewed swiftly, make it as easy as possible to review. That this paper took almost 3 months to pass through review might suggest the authors didn’t draw that conclusion for themselves.

1. Hartonen, T. & Alava, M. J. How important tasks are performed: peer review. Scientific Reports 3, 1679 (2013). doi:10.1038/srep01679
