LLMs and AI Agents: Transforming Scientific Forecasting

Published in Earth & Environment, Materials, and Physics

LLMs and AI Agents: Catalysts for Scientific Discovery

Advances in large language models (LLMs) from 2024 to 2025 have notably accelerated scientific discovery, particularly benefiting fields such as materials science and quantum computing. Leading models including Google DeepMind’s Gemini 2.5, Meta’s open-source LLaMA 4, and DeepSeek demonstrate remarkable multimodal capabilities, sophisticated contextual awareness, and enhanced scientific reasoning. The competition among these top-tier AI models is driving innovations that enable faster experimentation, improved data analysis, and deeper insights into complex scientific phenomena.

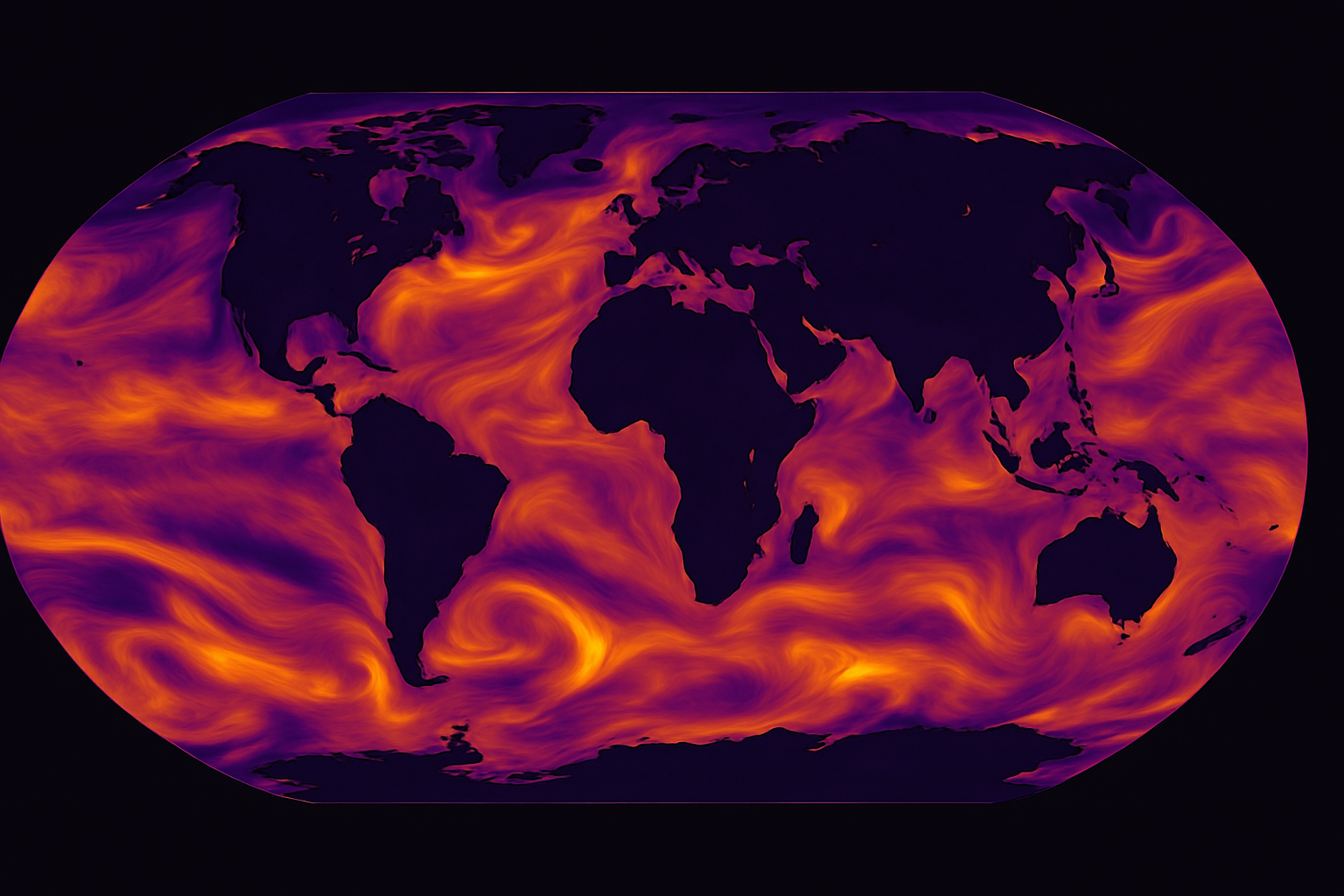

LLMs and AI agents are new frontiers for scientific forecasting, such as weather prediction with GraphCast

Multimodal Capabilities Enhancing Scientific Research

The rise of multimodal LLMs—capable of processing text, images, audio, and video—is reshaping scientific research workflows. Google's Gemini 2.5 excels in integrating diverse data inputs, from intricate scientific diagrams to extensive quantum simulation outputs, leveraging context windows exceeding 32,000 tokens (Google DeepMind, 2024). Its visual comprehension capabilities, such as interpreting spectroscopy data and quantum state visualizations, enable predictive modeling and data-driven insights essential to materials science.

Meta’s open-source LLaMA 4 has also shown robust performance in handling complex scientific literature and datasets. Its accessibility to the broader scientific community fosters collaborative research, accelerating advancements in quantum computing and advanced materials development (Meta AI, 2025).

Meanwhile, DeepSeek offers specialized processing capabilities ideal for analyzing intricate scientific data streams, enhancing real-time data interpretation and facilitating rapid hypothesis testing.

Autonomous Agents and Workflow Automation

Autonomous AI agents leveraging these advanced LLMs are transforming research methodologies. Agents built on Gemini 2.5, LLaMA 4, and DeepSeek autonomously manage complex experimental workflows, oversee extensive simulations, and dynamically interact with real-time data sources. For example, these AI agents can optimize quantum experimental parameters and interpret results autonomously, streamlining research processes and enabling faster iteration cycles.
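The propose, run, and observe cycle described above can be illustrated with a minimal closed-loop sketch. Everything in it is an assumption made for illustration: `run_experiment` is a hypothetical stand-in for a simulator or instrument, the optimum at 0.62 is arbitrary, and `propose` uses a random perturbation where an LLM-based agent would generate the next setting from the run history.

```python
import random


def run_experiment(amplitude: float) -> float:
    """Hypothetical stand-in for a simulator or instrument.

    Returns a fidelity-like score that peaks at amplitude = 0.62
    (an arbitrary value chosen for this illustration).
    """
    return 1.0 - (amplitude - 0.62) ** 2


def propose(current_best: float, step: float, rng: random.Random) -> float:
    """Stand-in for the agent's proposal step.

    An LLM-based agent would generate the next setting from the run
    history; a random perturbation keeps this sketch self-contained.
    """
    candidate = current_best + rng.uniform(-step, step)
    return min(1.0, max(0.0, candidate))  # stay inside the allowed range


def agent_loop(n_iters: int = 200, seed: int = 0):
    """Propose a setting, run it, record the result, keep the best one."""
    rng = random.Random(seed)
    best_x = 0.0
    best_score = run_experiment(best_x)
    history = [(best_x, best_score)]
    step = 0.25
    for _ in range(n_iters):
        candidate = propose(best_x, step, rng)
        score = run_experiment(candidate)
        history.append((candidate, score))
        if score > best_score:
            best_x, best_score = candidate, score
        else:
            step = max(0.01, step * 0.95)  # narrow the search after a miss
    return best_x, best_score, history


if __name__ == "__main__":
    best_x, best_score, history = agent_loop()
    print(f"best amplitude {best_x:.3f}, score {best_score:.4f}, runs {len(history)}")
```

Keeping the full `history` rather than only the best result reflects how such a loop is typically audited: a researcher can inspect every setting the agent tried, not just the one it settled on.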

Domain-Specific Fine-Tuning and Adaptation

Parameter-efficient fine-tuning methods such as Low-Rank Adaptation (LoRA) make it practical to adapt general-purpose LLMs to specialized scientific domains. LoRA freezes the pretrained weights and trains only a pair of small low-rank matrices per adapted layer, so adaptation costs a fraction of full fine-tuning. Through efficient fine-tuning, LLaMA 4 can achieve higher accuracy in predicting molecular properties and quantum computational outcomes (Mistral AI Team, 2023). Retrieval-augmented generation (RAG) further boosts model capabilities by retrieving recent scientific findings at query time and supplying them to the model as context, which helps keep answers current.
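As a rough illustration of the LoRA idea, the sketch below computes the effective weight W + (alpha / r) · B · A for a frozen weight matrix W and counts the trainable parameters. It uses plain Python lists rather than a deep learning framework, and the function names and the 4096 × 4096 layer size are assumptions chosen for illustration, not details of any particular model.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def lora_effective_weight(W, A, B, alpha):
    """Return W + (alpha / r) * B @ A.

    W is the frozen (d_out x d_in) weight, B is (d_out x r) and A is
    (r x d_in); only A and B would be trained.
    """
    r = len(A)  # the rank is the number of rows in A
    scale = alpha / r
    delta = matmul(B, A)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(W, delta)
    ]


def trainable_params(d_out, d_in, r):
    """Parameters in A and B combined."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0]]
    A = [[1.0, 1.0]]     # r = 1, d_in = 2
    B = [[0.0], [0.0]]   # B starts at zero, so the update is zero at first
    print(lora_effective_weight(W, A, B, alpha=2.0))

    # Illustrative layer size (an assumption, not a specific model's shape).
    full = 4096 * 4096
    lora = trainable_params(4096, 4096, r=8)
    print(f"full: {full} parameters, LoRA (r=8): {lora} parameters")
```

Because B is initialized to zero, the adapted layer starts out identical to the pretrained one, and training only moves it as far as the low-rank update allows.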

Advanced Scientific Reasoning and Extended Contextual Analysis

Enhanced reasoning capabilities and expanded context windows, exemplified by Claude 3.7 Sonnet's 200,000-token context window (Anthropic, 2023), enable comprehensive analysis of large-scale scientific datasets and theoretical frameworks. Techniques like structured chain-of-thought prompting and integration with computational tools let models delegate exact calculations to external code, supporting the detailed quantitative analysis and simulation management that materials research and quantum computing require.
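Integration with computational tools usually means the model emits a structured request and the host program performs the actual calculation. The sketch below shows that dispatch step under stated assumptions: the JSON shape (`tool` and `args` keys) and the tool names are hypothetical, not any vendor's API, and the model output is a hard-coded string rather than a real model response.

```python
import json
import statistics

# Registry of tools the host is willing to run on the model's behalf.
# The names and the JSON request shape are assumptions for this sketch.
TOOLS = {
    "mean": lambda values: statistics.fmean(values),
    "stdev": lambda values: statistics.stdev(values),
}


def dispatch(model_output: str) -> float:
    """Parse a tool request emitted by a model and execute it.

    Expects JSON of the form {"tool": <name>, "args": {...}}.
    """
    request = json.loads(model_output)
    name = request["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**request["args"])


if __name__ == "__main__":
    # Stand-in for text a model might produce when asked to summarize data.
    fake_model_output = '{"tool": "mean", "args": {"values": [1.0, 2.0, 3.0]}}'
    print(dispatch(fake_model_output))
```

Restricting execution to an explicit registry means the model can only request calculations the researcher has approved, and the numeric result comes from ordinary code rather than from generated text.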

Practical Applications in Advanced Scientific Domains

The synergy of multimodal capabilities, autonomous operations, fine-tuning strategies, and advanced reasoning is unlocking new applications across scientific domains:

- Materials Science Analysis: Interpreting spectroscopy data, modeling novel materials, and predicting material behaviors.
- Quantum Computing Research: Managing real-time simulations, interpreting quantum states, and optimizing quantum algorithms.
- Experimental Automation: Streamlining complex experimental protocols and dynamically adjusting research parameters.
- Collaborative Research: Democratizing cutting-edge analytical capabilities through open-source platforms, enhancing global scientific collaboration.

Conclusion and Future Outlook

The rapid evolution of LLMs such as Gemini 2.5, LLaMA 4, and DeepSeek signifies a transformative leap in scientific research capabilities. Fueled by competitive innovation, these AI systems continue to advance research efficiency, data interpretation accuracy, and predictive modeling sophistication. As these technologies mature, they are poised to become indispensable tools for addressing complex scientific challenges, driving breakthroughs in materials science, quantum computing, and beyond.

References

- Meta AI. (2025). The Llama 4 herd: The beginning of a new era of natively multimodal intelligence. Meta AI Blog.
- Mistral AI Team. (2023). Mistral 7B. arXiv preprint arXiv:2310.06825.
- Anthropic. (2023). How large is Claude’s Context Window? Anthropic Help Center.
- OpenAI. (2023). GPT-4 Technical Report. arXiv preprint arXiv:2303.08774.
- Google DeepMind. (2024). Introducing Gemini 2.0: Our new AI model for the agentic era. Google Blog.
