Digital health devices: More choice does not necessarily mean better choices

Published in Healthcare & Nursing

Measuring every aspect of human performance and behaviour is big business these days, and using digital devices to do so is even bigger business. Health and wellbeing devices, whether medical or consumer grade, are creating massive research and commercial opportunities. However, greater choice brings greater confusion for digital health professionals trying to select the right device. Which device is the most reliable, accurate, evidence-based and usable, and which one best fits my research study or the service I’m offering? Professionals face these questions, but unfortunately there is no standardized way to help them sift through the mass of available devices, evaluate them, and compare them against each other to determine which one is the most appropriate choice.

Without such a process, problems can arise. In our experience working in the digital health space, when a device was chosen and later proved problematic, the device itself was often not to blame. Rather, at the time of selection the device was not fit for purpose given the specific needs and requirements of the service provider and/or the user. For example, deploying a device with very small moving parts to a cohort of elderly people may lead to adoption problems, because such a device does not cater for people with poorer finger dexterity. Traditionally, however, such human factors issues are uncovered long after the decision to invest in one or more devices has been made. This underlines the importance of understanding and prioritizing the application requirements at the time of selection. We therefore suggest extending device evaluation to the pre-purchase phase, where the application requirements drive the device selection process.

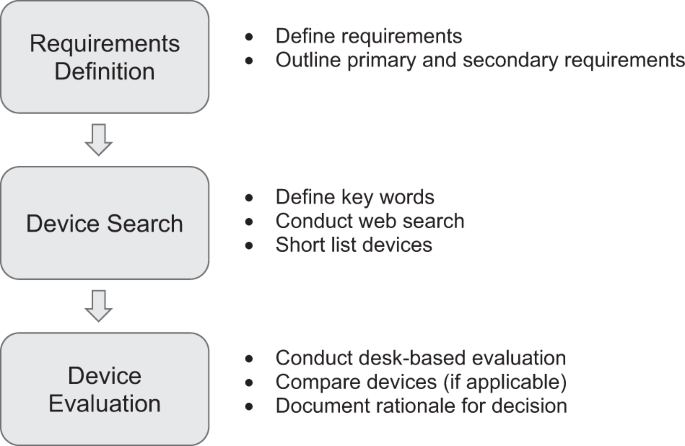

Our paper offers a framework for evaluating human performance technologies that aims to address the issues outlined above. The framework guides professionals through a systematic and rigorous three-step process: (1) Requirements Definition, (2) Device Search and (3) Device Evaluation. The aim is to help professionals avoid spending money on devices that are not fit for purpose, and to give them the best opportunity to choose a device that meets the needs of their end users. We are not suggesting that the approach recommended in our paper is the 'best' or only way to address this issue. Rather, we are proposing this evaluation framework to the digital health research community as one way of addressing it, and would love to receive feedback on how it could be refined or improved. Ultimately, not all sensors are created equal, but we hope that our framework for evaluating human performance devices can be a step towards resolving the confusion faced by those trying to identify the devices that best fit their needs.


