After the Paper: Scoping studies: Advancing the Methodology

Published in Healthcare & Nursing and Public Health

Here, we reflect on the conceptualization, writing and publication of Advancing the Methodology, and describe three lessons drawn from the experience that have influenced our career trajectories.

We wrote Advancing the Methodology as trainees (DL and HC were PhD students, and KKO was a postdoctoral fellow) in the School of Rehabilitation Science at McMaster University. Each of us had recently used Arksey & O'Malley's (2005; fondly referred to hereafter as A&O) pioneering methodological paper to undertake an (at the time!) novel method of knowledge synthesis: the scoping review. Their paper offered an excellent road map, but also illuminated new challenges. A&O deftly recognized the work to be done, stating:

“One of the purposes of the present paper is to stimulate discussion about the merits of scoping studies, and help develop appropriate methods for conducting such reviews … We look forward to seeing how the debate progresses.” (p. 31)

As trainees, we felt ourselves to be less than ideal candidates to answer this call, but a fortuitous occasion of sage mentorship from DL’s doctoral supervisor, Dr. Cheryl Missiuna, changed our minds. Dr. Missiuna suggested that our collective experiences (published by DL in Research in Developmental Disabilities, HC in the Canadian Journal of Occupational Therapy, and KKO in AIDS and Behavior) had value, and that the three of us partner to take on this challenge. Her encouragement gave us the confidence to undertake the work. She taught us Lesson #1: Look for ways to foster confidence in your trainees. She embodied this lesson by promoting our independence. Once the seed was planted to write a paper about our collective experiences, we were left to organize ourselves and do the work. Which we did! In addition to encouragement, Dr. Missiuna provided the funds to ensure the paper was open access. Her belief in our abilities, combined with the post-publication evidence of interest in the paper, nurtured our confidence as early career researchers, helping to establish us early on as methodologists in a rapidly developing field.

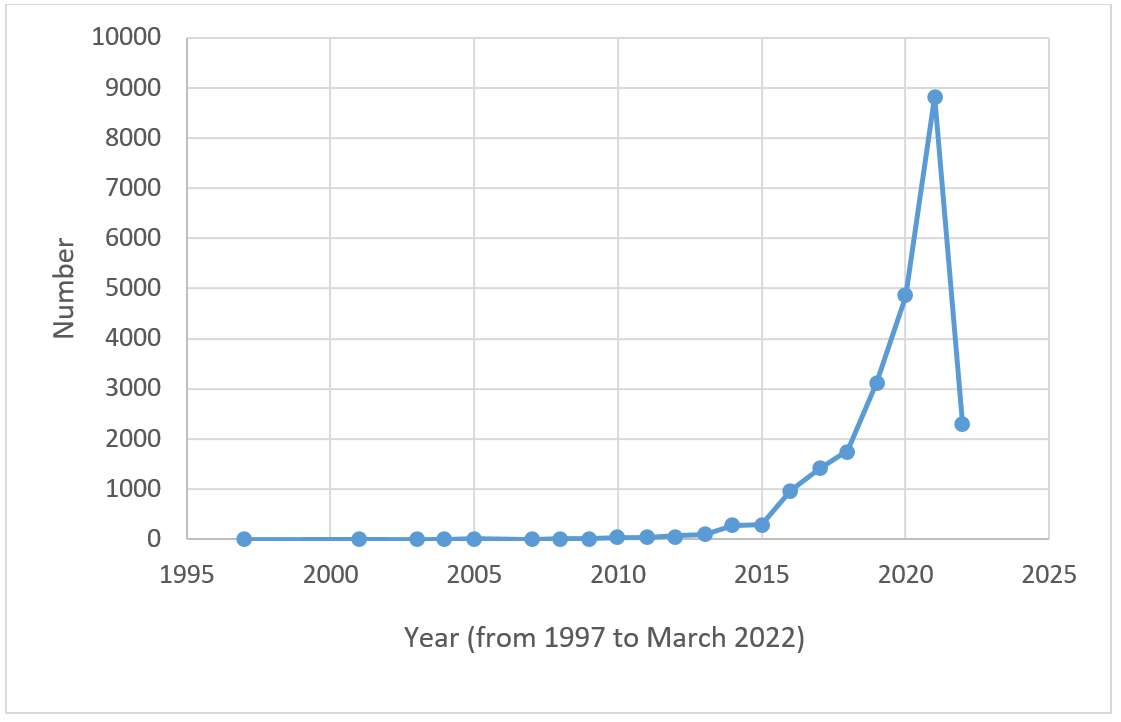

It helped that we were in the right place at the right time. Figure 1 illustrates the growth in scoping review (or scoping study) publications between 1997 and 2022, showing how the rate of scoping review publication was on the cusp of rapid acceleration following the 2005 A&O paper. However, we combined good luck with adequate preparation: we had each read widely, illuminating the gap that needed to be filled. In retrospect, we see this as Lesson #2: Question the status quo. We embody this lesson in nudging our students (and our colleagues!) to consider how advancing existing methodologies could be a component of their work.

For example, HC was an external examiner on a student’s comprehensive exam that occurred just a week before publication of the PRISMA scoping review reporting guidance. She asked the student to reflect on how the new guidance might influence their (already written in preparation for publication!) review. That question, along with sage encouragement from HC, spurred the student to write an editorial based on their experiences of altering their paper to reflect the new reporting guidance. We encourage our trainees to follow existing methodological guidance, but we also prompt them to continually reflect on how a methodology could be adapted to better address the research question. Over the years, Lesson #2 has motivated us to question other aspects of our field that could benefit from disrupting the status quo.

Figure 1: Number of scoping reviews by year

Note: Figure was created by conducting an electronic search for ‘scoping study’ or ‘scoping review’ from 1997 to March 2022 in Medline, EMBASE, CINAHL, and PsycINFO.

Importantly, we also benefited from mentors who believed in the value of collaboration. Combining our experiences into one paper made it stronger than if any one of us had written it alone, leading us to Lesson #3: Consider the process of doing the work to be as important as its outcome. This can be difficult to remember in academia, where outcomes are key to advancement, specifically those that can be tallied in an annual progress or impact report. Yet the impactful, collaborative process of co-ideating and co-writing gave us an early model to replicate and expand in our subsequent careers.

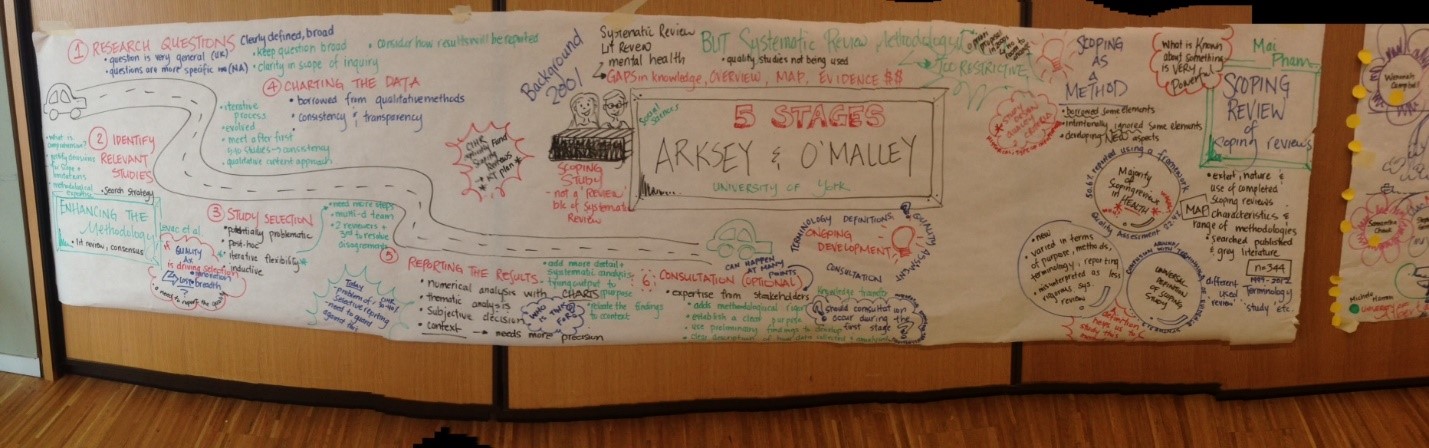

Indeed, Advancing the Methodology spurred new collaborations with researchers doing similar knowledge synthesis methodological work, formalized in a Canadian Institutes of Health Research Knowledge Dissemination grant in 2015 led by KKO. The grant brought together an international working group in Toronto to discuss scoping review methodological improvements. At this meeting, Dr. O’Malley herself presented the historical perspectives and origins of the scoping study (NB: A&O used the term scoping study). Figure 2 shows one of our brainstorming boards from this meeting – as you can see, a rich idea generation experience!

We joined forces with other groups working in this same area to continue collaborative efforts, including Drs S. Straus and A. Tricco's group at the University of Toronto, who had expertise in knowledge syntheses and developing reporting guidelines (including a scoping review published in 2016 in BMC Medical Research Methodology). This collaboration led to a 2014 editorial in the Journal of Clinical Epidemiology and the publication of the PRISMA extension for Scoping Reviews (PRISMA-ScR). Further collaborations expanded to include another team working on reporting guidance for scoping reviews: Micah Peters from the Joanna Briggs Institute (JBI).

Figure 2: Brainstorming board (graphic facilitation) from the Advancing the Field of Scoping Study Methodology International Meeting held in 2015 (funded by the Canadian Institutes of Health Research).

Amazingly, as of March 2022, Advancing the Methodology has been cited 6792 times (Google Scholar) and downloaded over 170,000 times. We never anticipated the attention the paper has received, nor the wide-ranging impacts of its publication on our careers. These impacts include the considerable time and energy spent on mentoring, advising, and presenting on scoping reviews, exposing us to a plethora of new research interests, perspectives, and worldviews.

Scoping reviews: Looking to the future

Many lively discussions have been held with the goal of debating the identity, conceptualization, definitions, and terminology related to scoping reviews. The most interesting were debates related to the value of quality assessment of included studies in a scoping review, the debate on terminology (is it a study, or is it a review?), and the pros and cons, from a scientific perspective, of the inherently iterative nature of the scoping process, as emphasized in the A&O paper. Interestingly, these issues remain relevant to this day. We also note emerging areas of debate such as how best to present scoping results for a wide range of stakeholders, how best to conduct a qualitative synthesis of data, and when to conduct the (optional) consultation exercise. We look forward to ongoing discussions!

We end with an expression of our collective gratitude for this experience. Publishing this paper had both measurable (the citations!) and immeasurable impacts on our career trajectories, influencing our dedication to the essential scientific pursuits of collaboration and mentorship. We would like to amplify A&O’s original call by encouraging others, at any stage of their careers, to join in methodological advancement efforts to benefit evidence-informed practice, research and policy.

Acknowledgements

The authors acknowledge the contributions of Sarah Zarshenas, Research Associate at University of Toronto, in gathering the data for and creating Figure 1. Kelly K. O’Brien (KKO) is supported by a Canada Research Chair in Episodic Disability and Rehabilitation from the Canada Research Chairs Program.

References

- Levac, D., Colquhoun, H. & O’Brien, K. K. Scoping studies: Advancing the methodology. Implement. Sci. 5, (2010).

- Arksey, H. & O’Malley, L. Scoping studies: towards a methodological framework. Int. J. Soc. Res. Methodol. 8, 19–32 (2005).

- Levac, D., Rivard, L. & Missiuna, C. Defining the active ingredients of interactive computer play interventions for children with neuromotor impairments: A scoping review. Res. Dev. Disabil. 33, (2012).

- Colquhoun, H. L., Letts, L. J., Law, M. C., MacDermid, J. C. & Missiuna, C. A. A scoping review of the use of theory in studies of knowledge translation. Can. J. Occup. Ther. 77, 270–279 (2010).

- O’Brien, K., Wilkins, A., Zack, E. & Solomon, P. Scoping the field: identifying key research priorities in HIV and rehabilitation. AIDS Behav. 14, 448–458 (2010).

- Tricco, A. C. et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 169, 467–473 (2018).

- Miller, E. & Colquhoun, H. The importance and value of reporting guidance for scoping reviews: A rehabilitation science example. Aust. J. Adv. Nurs. 37, (2020).

- O’Brien, K. K. et al. Advancing scoping study methodology: A web-based survey and consultation of perceptions on terminology, definition and methodological steps. BMC Health Serv. Res. 16, (2016).

- Tricco, A. C. et al. A scoping review on the conduct and reporting of scoping reviews. BMC Med. Res. Methodol. 16, (2016).

- Colquhoun, H. L. et al. Scoping reviews: Time for clarity in definition, methods, and reporting. J. Clin. Epidemiol. 67, (2014).

- Peters, M. D. J. et al. Scoping reviews: reinforcing and advancing the methodology and application. Syst. Rev. 10, 263 (2021).

