Behind the Paper: Teaching AI to See the Tracks Ahead — A Computer Vision Approach to Railway Safety

Published in Computational Sciences

The Problem That Sparked Our Research

Every day, railway systems around the world transport billions of passengers and countless tons of freight. It's one of the safest forms of transportation we have — but when accidents happen, they can be catastrophic. Derailments, collisions with obstacles, and track obstructions continue to pose significant threats to railway operations globally.

Traditional methods for detecting track obstructions rely heavily on two things: manual track inspections and the vigilance of locomotive operators. But here's the challenge: human attention has limits. Fatigue sets in during long shifts. Visibility drops in fog, rain, or at night. A split-second distraction can mean the difference between spotting an obstacle in time and missing it.

We asked ourselves: What if we could give train operators an AI co-pilot that never gets tired and never looks away?

Our Approach: Teaching Computers to "See" Like Train Drivers

Our research, published in Discover Computing, introduces a visual intelligence framework that uses deep learning and computer vision to segment railway tracks in real time and assess potential hazards based on their location.

Breaking Down the Track into Safety Zones

One of our key innovations was dividing the track area visible from the locomotive into four distinct regions, each representing a different threat level:

| Zone | Description | Threat Level |
|---|---|---|
| Major | The main track area directly in front of the locomotive | Highest (on-track, near field) |
| Front Minor | The track area further ahead along the path | Medium (on-track, far field) |
| Left Minor | The area immediately to the left of the track | Lower (off-track, near field) |
| Right Minor | The area immediately to the right of the track | Lower (off-track, near field) |

Figure: The four safety zones our system identifies in every frame

This classification scheme allows our system to not just detect that something is on or near the tracks, but to immediately assess how dangerous it is based on where it's located. An object in the "Major" zone requires immediate attention, while something in the "Left Minor" zone might be monitored but isn't an immediate threat.
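The published framework stops at segmentation, and obstacle detection is listed among the limitations below. The zone scheme still suggests how the two pieces could connect. The sketch below makes several assumptions that are not part of the published framework:

- A separate detector supplies each obstacle's bounding box in pixel coordinates.
- Each predicted zone mask has already been converted into one or more polygons.
- The bottom-centre of a box approximates where the object meets the ground.

The code tests that point against every zone with a ray-casting point-in-polygon check and returns the most urgent zone that contains it. The names `THREAT_RANK`, `point_in_polygon`, and `zone_for_box` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Polygon = List[Point]

# Hypothetical urgency ranking (lower = more urgent), mirroring the zone table.
THREAT_RANK = {"Major": 0, "Front Minor": 1, "Left Minor": 2, "Right Minor": 2}


def point_in_polygon(pt: Point, poly: Polygon) -> bool:
    """Ray casting: count how many polygon edges a rightward ray from pt crosses."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Only edges that straddle the ray's y-coordinate can be crossed
        # (this also guarantees y1 != y2, so the division below is safe).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zone_for_box(box: Tuple[float, float, float, float],
                 zones: Dict[str, List[Polygon]]) -> Optional[str]:
    """Return the most urgent zone containing the box's bottom-centre, or None."""
    x_min, _y_min, x_max, y_max = box
    # Assumption: the bottom-centre of the box is where the object touches the ground.
    foot = ((x_min + x_max) / 2, y_max)
    hits = [name for name, polys in zones.items()
            if any(point_in_polygon(foot, p) for p in polys)]
    if not hits:
        return None
    return min(hits, key=lambda name: THREAT_RANK[name])
```

The function ranks all matching zones instead of returning the first hit. As a result, overlapping or imprecise zone boundaries resolve toward the more dangerous zone, which errs on the side of caution.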

Building Our Own Dataset: When No One Has Done This Before

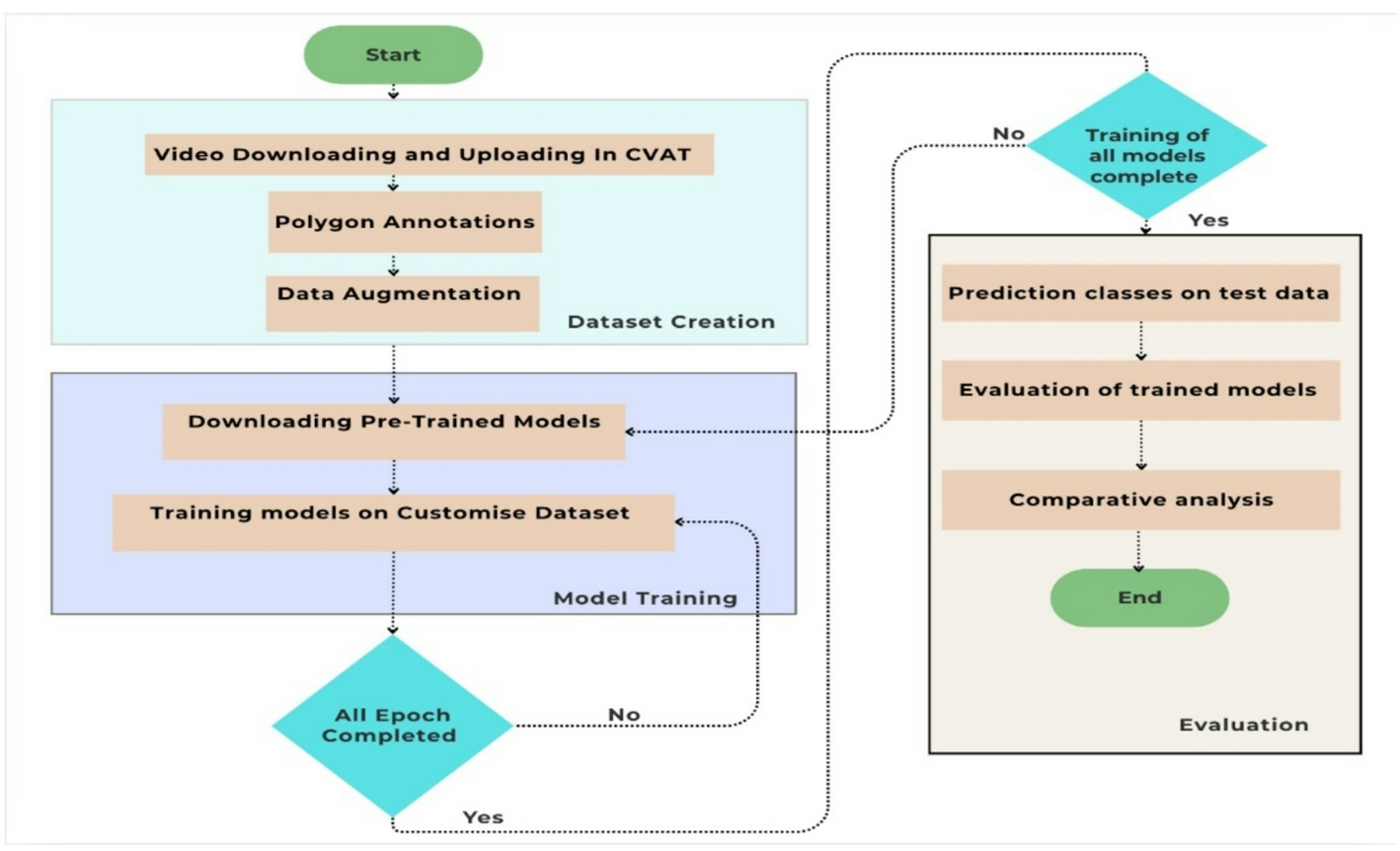

One of the biggest challenges we faced was the lack of existing datasets specifically designed for railway track segmentation. While there are plenty of datasets for autonomous vehicles on roads, railway environments present unique challenges — different track types, platform configurations, curves, and infrastructure elements.

So we built our own.

We extracted frames from publicly available videos filmed from locomotive cab views, capturing diverse scenarios:

- Straight tracks and curved tracks (both left and right curves)

- Stations with platforms on one side, both sides, or no platforms

- Various lighting conditions and infrastructure configurations

Each of the 1,638 images in our dataset was meticulously annotated using polygon shapes to define the exact boundaries of each safety zone. We then used data augmentation techniques, including mosaic composition, horizontal flipping, and multi-scale resizing, to make our models more robust to real-world variations.
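Horizontal flipping interacts with this label scheme in one specific way. Mirroring an image moves the Left Minor region to the right of the track, so a label-aware flip has to swap those two classes as well as mirror the x-coordinates. The post does not say how this was handled. The sketch below assumes YOLO-style segmentation labels: one polygon per line, written as `class_id x1 y1 ... xn yn`, with coordinates normalized to [0, 1]. The class order in `CLASS_NAMES` and the function names are hypothetical.

```python
# Hypothetical class indices; the paper's actual ordering is not stated.
CLASS_NAMES = ["Major", "Front Minor", "Left Minor", "Right Minor"]
# After a left-right mirror, Left Minor and Right Minor trade places.
FLIP_CLASS_MAP = {0: 0, 1: 1, 2: 3, 3: 2}


def flip_label_line(line: str) -> str:
    """Mirror one YOLO-style polygon label horizontally and swap the L/R classes."""
    parts = line.split()
    cls = int(parts[0])
    coords = [float(v) for v in parts[1:]]
    if len(coords) % 2:
        raise ValueError("polygon must have an even number of coordinates")
    flipped = []
    for i in range(0, len(coords), 2):
        x, y = coords[i], coords[i + 1]
        flipped.extend([1.0 - x, y])  # normalized x mirrors about the image centre
    body = " ".join(f"{v:.6f}" for v in flipped)
    return f"{FLIP_CLASS_MAP[cls]} {body}"


def flip_label_file(text: str) -> str:
    """Apply flip_label_line to every non-empty line of a label file."""
    return "\n".join(flip_label_line(ln) for ln in text.splitlines() if ln.strip())
```

If the augmentation pipeline flips images but leaves class ids untouched, the model would be trained on mirrored images whose left and right labels are reversed. A class swap like this one avoids that problem.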

The YOLO Family: Our Detection Champions

For our detection engine, we turned to the YOLO (You Only Look Once) family of algorithms — renowned for their speed and accuracy in real-time object detection. We evaluated six different YOLO variants across three generations:

- YOLOv8: YOLO-n8 (nano) and YOLO-m8 (medium)

- YOLOv9: YOLO-c9 (compact) and YOLO-e9 (enhanced)

- YOLOv11: YOLO-n11 (nano) and YOLO-m11 (medium)

Why so many? Because different deployment scenarios have different requirements. A system running on a powerful cloud server can handle larger, more accurate models, but a system deployed directly on a locomotive's embedded computer needs to be lightweight while remaining reliable.

The Results: High Accuracy Across All Safety Zones

After training each model for 70 epochs on an NVIDIA A100 GPU, we obtained the following results:

Best Performers: YOLO-m8 and YOLO-c9

- Mean Average Precision (mAP@0.5): 94%

- Precision: 97%

- Recall: 96%
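mAP@0.5 is computed in four steps:

1. A predicted mask counts as correct when its intersection-over-union (IoU) with a not-yet-matched ground-truth mask of the same class is at least 0.5.
2. Predictions are ranked by confidence.
3. A precision-recall curve is built from the running counts of hits and misses, and the area under it gives the class's average precision (AP).
4. The per-class APs are averaged across the zone classes.

The sketch below is a simplified, pure-Python illustration with these assumptions:

- Masks are sets of (row, col) pixels.
- Matching is greedy by confidence.
- AP uses all-point interpolation.

Established evaluators differ in interpolation and bookkeeping details, so this is not the exact computation behind the reported figures.

```python
from typing import Dict, FrozenSet, List, Tuple

Pixel = Tuple[int, int]
Mask = FrozenSet[Pixel]                 # pixels (row, col) covered by one instance
Prediction = Tuple[str, float, Mask]    # (image_id, confidence, mask)


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two pixel-set masks."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def average_precision(preds: List[Prediction],
                      gts: Dict[str, List[Mask]],
                      iou_thr: float = 0.5) -> float:
    """AP for one class: greedy matching by confidence, all-point interpolation."""
    n_gt = sum(len(masks) for masks in gts.values())
    if n_gt == 0:
        return 0.0  # simplification: some evaluators skip such classes instead
    matched = {img: [False] * len(masks) for img, masks in gts.items()}
    hits = []
    for img, _conf, mask in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            if not matched[img][j]:
                iou = mask_iou(mask, gt)
                if iou > best_iou:
                    best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[img][best_j] = True  # each ground truth can be claimed once
            hits.append(True)
        else:
            hits.append(False)           # false positive
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p  # area of each recall step under the envelope
        prev_r = r
    return ap


def mean_ap(preds_by_class: Dict[str, List[Prediction]],
            gts_by_class: Dict[str, Dict[str, List[Mask]]],
            iou_thr: float = 0.5) -> float:
    """Average the per-class AP over every class that appears in either input."""
    classes = set(preds_by_class) | set(gts_by_class)
    if not classes:
        return 0.0
    return sum(average_precision(preds_by_class.get(c, []), gts_by_class.get(c, {}), iou_thr)
               for c in classes) / len(classes)
```

Matching each ground-truth mask at most once is what makes duplicate predictions count as false positives. Without that rule, a model could inflate recall by predicting the same zone several times.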

Class-wise Accuracy (YOLO-c9):

- Major zone: 89.3%

- Front Minor: 87.9%

- Left Minor: 84.7%

- Right Minor: 83.5%

What's particularly encouraging is that the "Major" zone — representing the highest-threat area directly in the locomotive's path — achieved the highest accuracy. This is exactly where we need the system to perform best.

Watching the AI Learn

One of the fascinating aspects of our research was observing how the models improved over time. Early in training (around epoch 10), the models struggled with the "Major" class, achieving confidence scores as low as 0.76. But by epoch 70, this same class reached confidence scores of 0.97-0.98.

The learning curves told an interesting story: most models achieved about 90% of their final performance within just 20-30 epochs, with incremental refinements thereafter. This finding has practical implications — for rapid prototyping or resource-constrained deployments, shorter training periods might be acceptable.
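Checking the "90% within 20-30 epochs" observation on another training run only requires the per-epoch validation metric. The sketch below returns the first epoch at which a metric reaches a given fraction of its final value. The CSV loader assumes a per-epoch log with a header row. Its default column name, `metrics/mAP50(M)`, is an assumption modelled on Ultralytics-style segmentation logs, so adjust it to whatever the training log actually records.

```python
import csv
from typing import List, Optional, Sequence


def first_epoch_reaching(values: Sequence[float], fraction: float = 0.9) -> Optional[int]:
    """Return the 1-based epoch at which a metric first reaches `fraction` of its final value."""
    if not values:
        return None
    target = fraction * values[-1]
    for epoch, value in enumerate(values, start=1):
        if value >= target:
            return epoch
    return None


def load_metric(csv_path: str, column: str = "metrics/mAP50(M)") -> List[float]:
    """Read one per-epoch metric column from a CSV training log (column name is an assumption)."""
    values = []
    with open(csv_path, newline="") as f:
        for row in csv.DictReader(f):
            # Some logs pad header names with spaces, so compare stripped keys.
            clean = {k.strip(): v for k, v in row.items() if k is not None}
            values.append(float(clean[column]))
    return values
```

This measures against the final value rather than the best value, matching how the observation is phrased. If the metric peaks and then dips, comparing against `max(values)` may be the more informative variant.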

Connecting to India's Railway Modernization

Our work aligns with real-world railway safety initiatives, particularly India's Gati Shakti Project and the deployment of Kavach — an Automatic Train Protection (ATP) system designed to prevent collisions.

Current ATP systems like Kavach use radio-frequency communication for collision avoidance, but they do not yet fully leverage computer vision for enhanced situational awareness. Our framework provides a pathway for integrating visual intelligence into these existing systems, adding another layer of safety without replacing proven technologies.

Limitations and the Road Ahead

We're proud of our results, but we're also honest about our limitations:

- Geographic Scope: Our dataset primarily covers specific regions and track types. Expanding to include diverse international railway systems would improve generalizability.

- Weather Conditions: Further validation under adverse weather (heavy rain, fog, snow) and extreme lighting is needed.

- Complete System Integration: Our current framework focuses on track segmentation. A complete safety system would need integration with obstacle detection and classification modules.

- Field Testing: Extended trials under continuous operational conditions are required before real-world deployment.

What This Means for the Future of Railway Safety

Our research demonstrates that AI-powered visual intelligence can achieve the accuracy and speed needed for real-time railway safety applications. The ability to segment tracks into threat-level zones in milliseconds opens possibilities for:

- Real-time driver alerts when obstacles enter high-threat zones

- Reduced cognitive burden on locomotive operators during long shifts

- Complementary safety layer to existing ATP systems

- Data collection for improved track monitoring and maintenance

As railways continue to modernize and speeds increase, the need for intelligent, automated safety systems will only grow. We hope our framework provides a foundation for future developments in this critical area.

The Team Behind the Research

This research was conducted at the Center of Excellence in Signal and Image Processing at the Electronics and Telecommunication Department of COEP Technological University, Pune, India.

Authors:

- Yogesh Madhukar Gorane (Corresponding Author)

- Radhika D. Joshi

Published in Discover Computing (2025) 28:328

DOI: 10.1007/s10791-025-09872-z


Channel: Behind the Paper

Topics: #ComputerVision #MachineLearning #RailwaySafety #DeepLearning #YOLO #InstanceSegmentation #TransportationSafety #ArtificialIntelligence #IndianRailways #AutonomousSystems

The datasets generated during this study are available from the corresponding author upon reasonable request.

This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.




Original article: Visual intelligence framework for track division and obstruction risk estimation in rail transport (Discover Computing)