Blind Color Image Watermarking Using Deep Artificial Neural Network Using Statistical Features

Published in Electrical & Electronic Engineering, Protocols & Methods, and Computational Sciences

1. Introduction:

With the growing volume of digital content on the internet, it is important to secure that content so that the identity and integrity of the data are preserved. This matters especially for sensitive material such as healthcare data and electronic records, and for smart city scenarios in which most records and data are exchanged electronically. This paper presents a methodology for copyright protection and authorship verification of digital content in a smart city. To balance imperceptibility and robustness, a robust watermarking scheme is proposed that uses a deep artificial neural network (DANN) and the lifting wavelet transform (LWT) in the YIQ color domain. The YIQ color model is used to decompose the digital health images. Statistical features are extracted from the healthcare datasets to build the training and testing sets, principal component analysis (PCA) is used to reduce the feature set, and watermark extraction is treated as a binary classification problem. Ten standard images were used in the experiments. With a threshold value of 0.3, the scheme achieves an average imperceptibility (PSNR) of 51.08 dB and shows good robustness under various image attacks.
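The introduction combines two standard building blocks: the YIQ color transform and the lifting wavelet transform. The sketch below shows both under stated assumptions. It uses the NTSC RGB-to-YIQ coefficients, a one-level 2-D Haar lifting scheme (predict, then update), and the Y channel as the band to be watermarked. The post does not specify the wavelet, the decomposition level or the channel, so all of these are assumptions, and the function names are hypothetical.

```python
import numpy as np

# NTSC RGB -> YIQ coefficients (assumed; the post only says "YIQ color model").
RGB_TO_YIQ = np.array([
    [0.299, 0.587, 0.114],
    [0.596, -0.274, -0.322],
    [0.211, -0.523, 0.312],
])
YIQ_TO_RGB = np.linalg.inv(RGB_TO_YIQ)


def rgb_to_yiq(rgb):
    """H x W x 3 RGB array -> H x W x 3 YIQ array (pixel-wise matrix product)."""
    return rgb @ RGB_TO_YIQ.T


def yiq_to_rgb(yiq):
    return yiq @ YIQ_TO_RGB.T


def _lift(even, odd):
    """Haar lifting: predict the odd samples from the even ones, then update."""
    detail = odd - even             # predict step
    approx = even + detail / 2.0    # update step, equals (even + odd) / 2
    return approx, detail


def _unlift(approx, detail):
    even = approx - detail / 2.0
    return even, detail + even


def lwt2_haar(x):
    """One-level 2-D Haar LWT: rows first, then columns of each half."""
    s, d = _lift(x[:, 0::2], x[:, 1::2])
    LL, LH = _lift(s[0::2, :], s[1::2, :])
    HL, HH = _lift(d[0::2, :], d[1::2, :])
    return LL, LH, HL, HH


def ilwt2_haar(LL, LH, HL, HH):
    """Exact inverse of lwt2_haar."""
    def icols(a, dd):
        e, o = _unlift(a, dd)
        out = np.empty((2 * a.shape[0], a.shape[1]))
        out[0::2, :], out[1::2, :] = e, o
        return out

    s, d = icols(LL, LH), icols(HL, HH)
    even, odd = _unlift(s, d)
    x = np.empty((s.shape[0], 2 * s.shape[1]))
    x[:, 0::2], x[:, 1::2] = even, odd
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rgb = rng.random((512, 512, 3)) * 255      # stand-in for a 512 x 512 cover image
    y = rgb_to_yiq(rgb)[..., 0]                # luminance channel (assumed embedding band)
    LL, LH, HL, HH = lwt2_haar(y)
    print(LL.shape, np.allclose(ilwt2_haar(LL, LH, HL, HH), y))
```

With this Haar lifting, the LL band is exactly the mean of each 2 × 2 pixel block. The transform is also perfectly invertible, which is one reason lifting schemes are popular for watermarking.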

2. Contributions of the work:

The contributions of the proposed work are as follows:

• The scheme is blind: watermark extraction requires neither the original cover image nor the original watermark.

• Using the LWT yields high-quality watermarked images and provides considerable robustness against several image attacks.

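• The DANN provides a reasonable balance between imperceptibility, measured by the peak signal-to-noise ratio (PSNR), and robustness, measured by the normalized correlation (NC).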

• The system's security is increased by the use of secret seed keys for randomization at various levels.
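The last contribution relies on secret seed keys for randomization. The sketch below shows one common way such keys are used: a seed deterministically regenerates the reference watermark bits and a secret ordering (permutation) of bits or blocks. This is an assumption, because the post does not say what is randomized at each level. The key values and function names are hypothetical. Note that NumPy's default generator (PCG64) is not cryptographically secure, so a deployed system might prefer a cryptographic generator.

```python
import numpy as np


def reference_watermark(key: int, n_bits: int = 512) -> np.ndarray:
    """Pseudo-random reference watermark bits, reproducible from the secret key."""
    rng = np.random.default_rng(key)
    return rng.integers(0, 2, size=n_bits, dtype=np.uint8)


def keyed_permutation(key: int, n: int) -> np.ndarray:
    """Secret ordering of n items (e.g. watermark bits or coefficient blocks)."""
    return np.random.default_rng(key).permutation(n)


def scramble(bits: np.ndarray, key: int) -> np.ndarray:
    """Reorder bits with the keyed permutation."""
    return bits[keyed_permutation(key, bits.size)]


def unscramble(scrambled: np.ndarray, key: int) -> np.ndarray:
    """Invert scramble(): only a holder of the same key recovers the order."""
    perm = keyed_permutation(key, scrambled.size)
    out = np.empty_like(scrambled)
    out[perm] = scrambled
    return out


if __name__ == "__main__":
    ref = reference_watermark(key=2024)           # hypothetical key
    shuffled = scramble(ref, key=77)              # hypothetical second-level key
    print(np.array_equal(unscramble(shuffled, key=77), ref))
```

Because the extractor can regenerate everything from the keys, nothing but the keys needs to be stored. This matches the blind setting described above.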

3. Datasets:

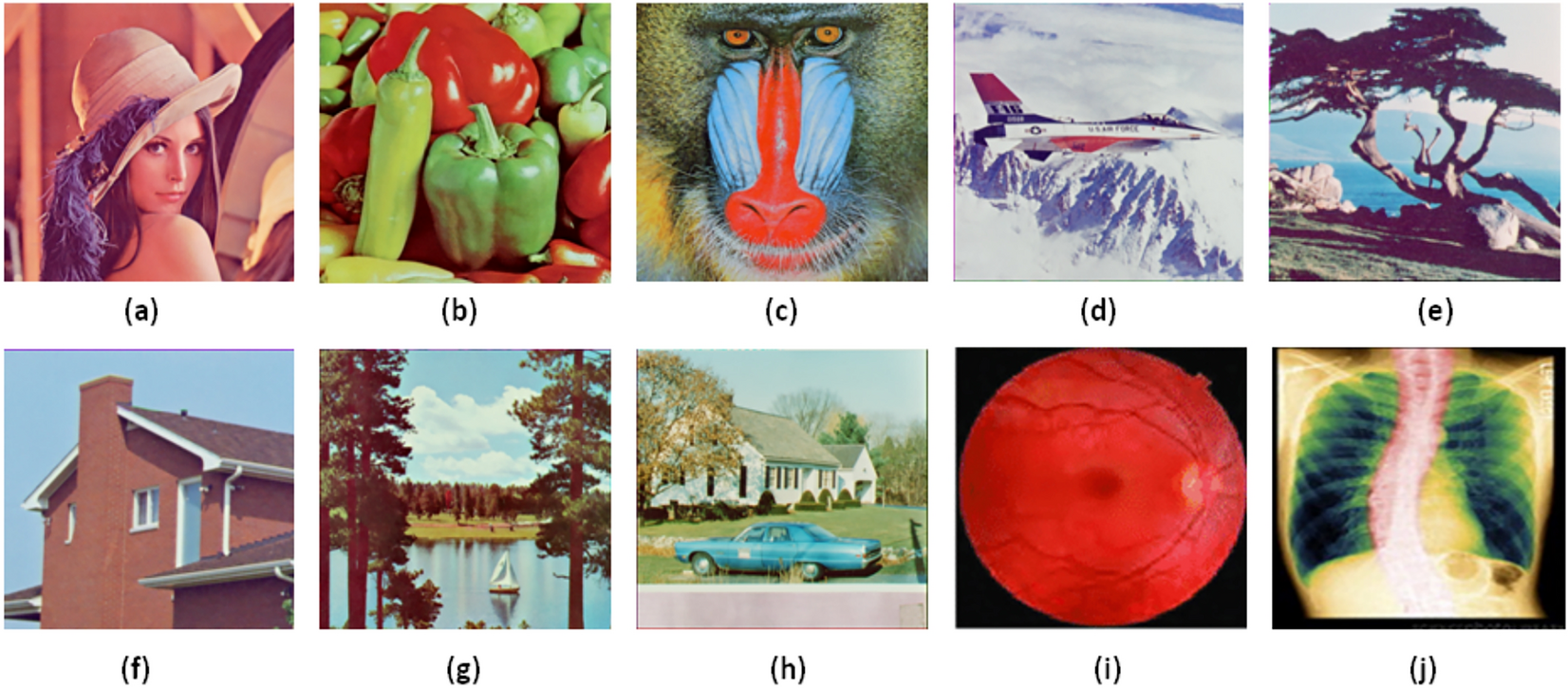

This section describes the dataset used for the proposed robust color image watermarking scheme. Ten color cover images were used in the experiments: Lena, Peppers, Mandrill, Jet, Tree, House, Sailboat, Car, Retina and X-ray. Each image is 512 × 512 pixels with 24 bits per pixel. A single binary watermark image, 'CSIT', of size 16 × 32 (512 bits) is used.

4. Proposed methodology:

This study proposes a blind color image watermarking method that uses a deep artificial neural network and extracts the watermark by binary classification, in order to protect digital healthcare data in the smart city scenario. Of the eighteen statistical features considered, ten were used for training and testing. With the aid of a secret key, a randomly generated reference watermark of 512 bits and the original signature watermark (SW) of 512 bits are combined into a 1024-bit sequence. This sequence is quantized into 1024 coefficient blocks of size 2 × 2 taken from the color cover image. The 1024 blocks are divided into two groups of 512: one group carries the randomly generated reference watermark bits and the other carries the signature watermark bits.
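The embedding and feature steps above can be sketched roughly as follows. The post does not give the quantization rule, the list of ten features or the block-selection order. This sketch therefore substitutes plain quantization index modulation (QIM) on the mean of each 2 × 2 block, with a hypothetical step size `STEP`. It also uses seven illustrative per-block statistics (not the paper's ten) and PCA via SVD. It further assumes that the reference-watermark blocks, whose bits the extractor can regenerate from the key, serve as the labelled training set for the binary classifier. The classifier itself (a DANN in the paper) is not shown.

```python
import numpy as np

STEP = 8.0  # hypothetical quantization step: larger = more robust, lower PSNR


def to_blocks(band, n_blocks):
    """First n_blocks non-overlapping 2x2 blocks of a 2-D band, in row-major order."""
    h, w = band.shape
    b = band[: h - h % 2, : w - w % 2].reshape(h // 2, 2, w // 2, 2).swapaxes(1, 2)
    return b.reshape(-1, 2, 2)[:n_blocks].copy()


def qim_embed(blocks, bits, step=STEP):
    """Shift each block so its mean lands on the quantization lattice for its bit."""
    means = blocks.mean(axis=(1, 2))
    offset = bits * step / 2.0                        # bit 0 -> k*step, bit 1 -> k*step + step/2
    target = np.round((means - offset) / step) * step + offset
    return blocks + (target - means)[:, None, None]


def qim_decode(blocks, step=STEP):
    """Nearest-lattice decoding: even multiples of step/2 -> 0, odd -> 1."""
    means = blocks.mean(axis=(1, 2))
    return (np.round(2.0 * means / step).astype(int) % 2).astype(np.uint8)


def block_features(blocks, step=STEP):
    """Seven illustrative per-block statistics (hypothetical feature set)."""
    flat = blocks.reshape(len(blocks), -1)
    mean, lo, hi = flat.mean(axis=1), flat.min(axis=1), flat.max(axis=1)
    return np.column_stack([
        mean, flat.std(axis=1), lo, hi, np.median(flat, axis=1), hi - lo,
        np.mod(mean, step) / step,                    # position of the mean inside a cell
    ])


def pca_reduce(X, n_components):
    """Project centred features onto the leading principal directions."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    band = rng.normal(0.0, 20.0, size=(64, 64))       # stand-in sub-band: 32 * 32 = 1024 blocks
    ref = rng.integers(0, 2, 512).astype(np.uint8)    # reference watermark (key-derived)
    sig = rng.integers(0, 2, 512).astype(np.uint8)    # signature watermark, e.g. flattened 16x32 logo
    payload = np.concatenate([ref, sig])              # first 512 blocks: reference, rest: signature
    marked = qim_embed(to_blocks(band, 1024), payload)
    X = pca_reduce(block_features(marked), 5)
    X_train, y_train = X[:512], ref                   # labelled training set for the classifier
    X_test = X[512:]                                  # classifier output would be the signature bits
    print(X_train.shape, X_test.shape, (qim_decode(marked) == payload).mean())
```

Plain QIM decoding is included only as an attack-free sanity check. In the described scheme, the trained classifier replaces it so that extraction can adapt to the distortions that attacks introduce.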

5. Results:

The following attacks were considered in the experiments: salt-and-pepper noise (SLP) with noise intensities 0.01 and 0.02; speckle noise (SPLN) with noise intensities 0.01 and 0.02; Gaussian noise (GN) with noise intensity 0.01; scaling (SCL) with factors 0.9, 1.5 and 2.0; rotation (RT) by 10°; average filtering (AVG) with a 3 × 3 window; cropping (CR) of … and 20%; histogram equalization (HE); and median filtering (MF) with a 3 × 3 window. The proposed method performs well against the SLP (0.01), SPLN (0.01) and SCL (2.0) attacks but less well against the GN (0.01) attack. A likely reason is that Gaussian noise strongly disturbs the relationships between neighboring pixels, on which the extracted statistical features depend.
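The evaluation above reports imperceptibility as PSNR and robustness as NC after each attack. The sketch below shows how three of the noise attacks and both metrics can be computed. The noise parameters are modelled loosely on the conventions of MATLAB's `imnoise`: SLP density, GN variance on intensities scaled to [0, 1], and multiplicative speckle with uniform noise of the given variance. This is an assumption, because the post does not say which tool or definitions were used. NC is shown in one common form, and all function names are hypothetical.

```python
import numpy as np


def psnr(original, distorted, peak=255.0):
    """Peak signal-to-noise ratio in dB (inf for identical images)."""
    mse = np.mean((original.astype(np.float64) - distorted.astype(np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def nc(w, w_extracted):
    """Normalized correlation between original and extracted watermark bits."""
    a = w.astype(np.float64).ravel()
    b = w_extracted.astype(np.float64).ravel()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.sum(a * b) / denom) if denom else 0.0


def salt_and_pepper(img, density, rng):
    """Set a fraction `density` of pixels to 0 or 255 with equal probability."""
    out = img.astype(np.float64)
    mask = rng.random(img.shape[:2]) < density
    salt = rng.random(img.shape[:2]) < 0.5
    out[mask & salt] = 255.0
    out[mask & ~salt] = 0.0
    return out


def gaussian_noise(img, variance, rng):
    """Additive zero-mean Gaussian noise; variance refers to intensities in [0, 1]."""
    x = img / 255.0 + rng.normal(0.0, np.sqrt(variance), img.shape)
    return np.clip(x * 255.0, 0.0, 255.0)


def speckle_noise(img, variance, rng):
    """Multiplicative noise I + n*I with n uniform, zero mean and the given variance."""
    x = img / 255.0
    a = np.sqrt(3.0 * variance)          # uniform on [-a, a] has variance a**2 / 3
    return np.clip((x + x * rng.uniform(-a, a, img.shape)) * 255.0, 0.0, 255.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cover = rng.integers(0, 256, size=(512, 512, 3)).astype(np.float64)
    for name, attacked in [("SLP 0.01", salt_and_pepper(cover, 0.01, rng)),
                           ("GN 0.01", gaussian_noise(cover, 0.01, rng)),
                           ("SPLN 0.01", speckle_noise(cover, 0.01, rng))]:
        print(name, round(psnr(cover, attacked), 2))
```

In a full evaluation, the watermarked image would be attacked, the watermark extracted, and `nc` computed against the original 16 × 32 'CSIT' bits.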

This article is part of my PhD work. As a new researcher, I invite the community to read it and to cite it if they find it useful. I welcome any requests and am also looking for a postdoctoral position abroad. The article is available at https://doi.org/10.1007/s42979-024-03030-w


SN Computer Science

SN Computer Science is a broad-based, hybrid, peer reviewed journal that publishes original research in all the disciplines of computer science including various inter-disciplinary aspects.
