Depth in focus: D component of RGB-D images and videos

Published in Electrical & Electronic Engineering

The Red Green Blue – Depth (RGB-D) format revolutionizes the way we perceive images and videos. While the RGB component, depicting color information, is widely understood; the D component, representing depth information, remains relatively less familiar to the broader public. This depth component, represented in the form of depth maps, adds a new dimension to visual data, enabling applications that go beyond traditional imaging techniques.

Depth maps

A RGB-D image is a combination of a RGB image and its corresponding depth image, better known as depth map. Depth maps are 2D grayscale images of the same size as the RGB images they are associated with. The gray level of each pixel of the depth map indicates the distance to the camera of its corresponding RGB pixel. In the depth map, each pixel sets the position in the Z-axis of the corresponding RGB pixel. The 0 value of the depth pixels corresponds to the farthest points of the 3D scene to the capture camera. The value 255 means that the pixels are located at the nearest planes.

RGB image (left) and its corresponding depth map (right)

Depth maps have been subject to promising and increasing interests as they:

- enable immersive 3D experiences and deliver lifelike stereoscopic displays to users, enhancing realism and engagement in visual content.

- provide the ability for users to interactively navigate and perceive any viewpoint in the video scene even if this viewpoint is not actually captured. Thanks to depth maps content, synthesis, i.e., reconstruction, of new views is possible. Indeed, depth maps are typically used for view synthesis and not themselves displayed.

Being different from RGB images, depth maps are distinguished by two intrinsic features:

- Their piecewise planar definition: Each plane corresponds to an object of the scene where intensities of its coplanar pixels smoothly vary (as pixels of the same object have almost the same distance to the camera). Each contour, placed at the edges of objects, reproduces a sharp depth discontinuity between objects in foreground and background.

- Impact of depth discontinuities on the quality of the synthesized views: Distortions, due to compression, filtering, noise, … at discontinuities and at pixels near them result in a highly visible degradation of the synthesized views. Nevertheless, it is not the case for smooth planes where distortions have a considerably less noticeable or even limited effect on view synthesis.

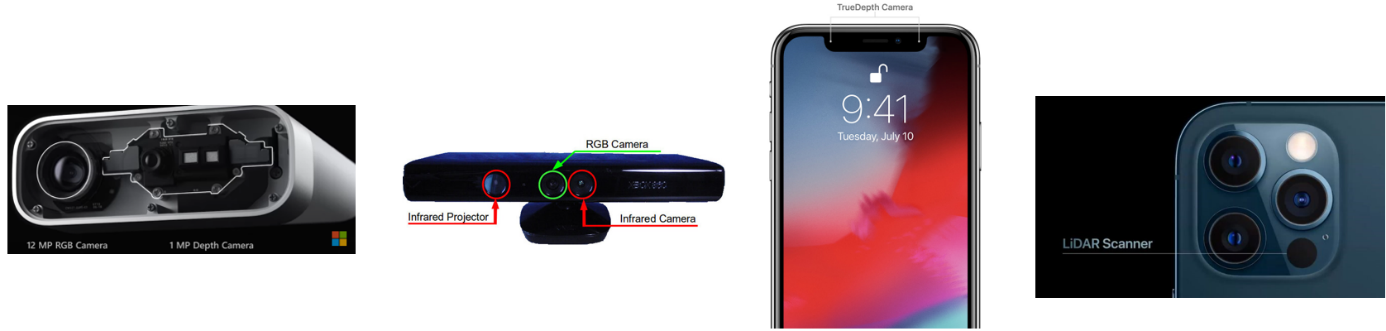

RGB-D sensors

RGB-D sensors are devices designed to capture not only traditional color information (RGB) but also depth data (D). There are primarily two types of RGB-D active sensors: time-of-flight (ToF) sensors and structured light sensors. Time-of-flight sensors, such as Azure Kinect, measure depth by measuring the time it takes for light to travel from the sensor to the object and back. Structured light sensors, like the Microsoft Kinect, project a known pattern onto the scene and analyze its deformation to calculate depth information.

In recent years, the integration of depth sensors into smartphones, such as the TrueDepth camera and the LiDAR scanner on some iPhone models, has brought this technology to a broader audience. These smartphones incorporate depth-sensing capabilities, making it more accessible for the general public to enjoy augmented reality experiences, improved portrait mode photography, and enhanced security features like facial recognition. As depth sensors continue to evolve and become standard in more devices, we can expect even greater innovation and new applications that leverage the power of RGB-D technology.

If specialized hardware is not available, it is possible to estimate depth maps from RGB images using advanced image processing algorithms. These approaches frequently rely on stereo vision techniques. Driven by matching algorithms, stereo vision is a field in computer vision dedicated to estimate depth maps from RGB images captured by multiple cameras or image pairs. These algorithms function by looking at how objects appear slightly different in two pictures taken from different angles. By using this information, they figure out how far away things are, much like how our eyes perceive depth by comparing what each eye sees. That is indeed how some old movies, originally filmed in 2D version, have been converted to 3D. This is the case of Jurassic Park (1993), The Lion King (1994), Titanic (1997), and Star Wars: Episode I - The Phantom Menace (1999).

RGB-D vision applications

Depth maps have gained a great deal of interest due to the geometrical information they include, and that provides a comprehensive understanding of the captured scene. Further, depth information, unlike the RGB data, is not influenced by the viewing angle, low light intensity, and objects texture and colors that may impact performances of many applications, including virtual/augmented reality and computer vision (gesture recognition, tracking and activity recognition, action recognition system, human detection, robotics navigation, …). Even for Internet of Things (IoT), the future is for 3D vision and depth-enabled IoT to provide machines, like robots, autonomous cars, and drones, with a deep perception that closely resembles that of human beings. Here are some potential RGB-D vision applications:

- Autonomous systems: With their capacity to enable real-time interactions and navigations in the actual world, depth maps hold enormous promise for expanding autonomy technologies into various fields. Enabling autonomous devices to accurately interpret their environments through providing essential information, they offer remarkable precision. By employing depth maps, self-driving cars enhance safety through improved detection capabilities. Drones equipped with depth sensors enhance crop management through precise measurement of crops and assessment of their health. Assembly process inspections are carried out by robots fitted with depth sensors. Thus, identification of flaws (such as surface blemishes, cracks, or deformation) via reference models enables maintenance of exceptionally high production quality. Depth maps also allow collaborative robots, i.e., cobots, to safely share space with humans by detecting their presence in real-time.

- Healthcare: Significant advancements have been made thanks to the leveraging of depth maps within the healthcare industry, leading to novel diagnostic and therapeutic approaches. By means of depth sensors, physicians and surgeons receive accurate 3D representations of their patients body parts, resulting in improved procedure accuracy. Depth maps enhance surgical precision by providing detailed information regarding internal tissue arrangements. Moreover, depth cameras help create tailored medical devices like prosthetics and orthotics via detailed body measurements that go beyond just imaging procedures. Depth maps also prove valuable when it comes to recognizing respiratory movement. Real-time monitoring enables accurate tracking of respiratory patterns through continual observation facilitated by depth-sensing technology. In radiation therapy, the capability to precisely locate tumors proves vital. Accurate placement of radiation upon cancer cells depends on the incorporation of depth which consider individual differences in respiratory habits or periodic changes. Through this method, there is less chance of damaging healthy cells, thus improving therapy results.

- Video surveillance and security: By incorporating depth perception into conventional video streams, more precise monitoring, categorization, and understanding of surroundings become possible. Practical implementation leads to substantial improvements in security measures. By leveraging depth maps, security personnel in crowded places like airports can identify irregular actions, ranging from forgotten suitcases to pedestrians going counter to the normal flow of foot traffic. Access control platforms may boost identification accuracy thanks to improved face detection capabilities brought about by RGB-D sensors working under challenging lighting circumstances. Also, the leverage of depth maps allows a reliable intruder detection near boundaries and improves perimeter security.

- Virtual and augmented reality: Depth maps play an essential role in enabling precise object location inside augmented reality. By leveraging depth maps, smartphone AR applications provide integrated and uninterrupted interactions. By preventing collisions with real-world objects, depth images enable a far more enjoyable and secure VR experience. Users can move virtual objects around naturally by using hand motions thanks to depth sensors. These sensors replicate how things behave physically in the world. By leveraging depth data, we might better simulate authentic illumination situations in virtual settings, while maneuvering shadows and optimizing ambient brightness.

With ongoing advancements in depth map acquisition and processing technologies, it is likely that many more applications will be developed in the future. To ensure efficient utilization of geometric information in the aforementioned applications and beyond, it is crucial to preserve compact, high-quality representations of depth maps during the compression process; ideally producing semantically coherent compressed maps even at low bit rates. Otherwise, unpleasant surprises can occur, leading to significant losses and damages, sometimes even irreversibly for certain use cases.

Depth maps compression

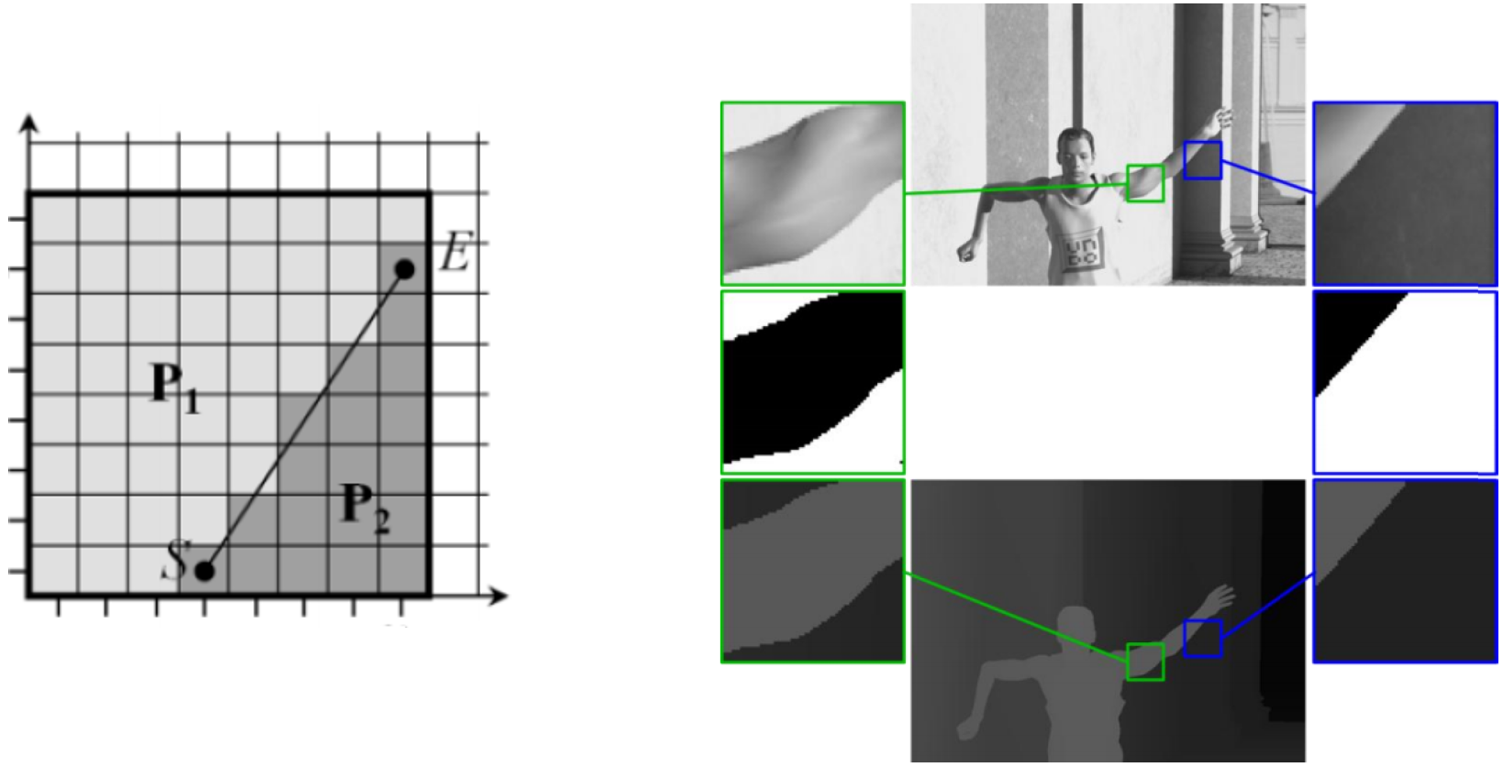

Compression modes included in texture image compression standards (e.g., Versatile Video Coding (VVC) and High Efficiency Video Coding (HEVC)) cannot be directly applied to depth map compression due to the specific characteristics of these latter compared to texture images. This is why the Joint Collaborative Team on 3D Video (JCT-3V) developed the 3D-High Efficiency Video Coding (3D-HEVC) standard. Finalized in 2015, it incorporates new prediction modes tailored for depth map compression. These modes, known as Depth Modeling Modes (DMM), were designed to preserve sharp discontinuities rather than the overall visual quality of the depth map. These DMMs primarily utilize wedgelet functions to capture depth discontinuities by placing a subdivision line along discontinuities. Each resulting sub-region from the depth block partition is estimated by a constant value. We distinguish between 2 DMMs:

- "Explicit wedgelet partitioning" mode: Although complex and resource-intensive, this mode aims to achieve the best wedgelet partition of a block among all possible partitions.

- "Inter-component-predicted contour partitioning" mode: unlike the first mode, the texture block is used to predict the wedgelet partition of the depth block as contours are redundant information between the texture and depth images. Also unlike the first mode, this does not involve a straight subdivision line but rather an arbitrary contour. Sub-regions in this case have arbitrary shapes so that to predict blocks that cannot be easily modeled by a straight line, as the wedgelet could.

Left: “Explicit wedgelet partitioning” mode, and Right: “Inter-component-predicted contour partitioning” mode (straight line (in blue) vs. contours (in green))

Datasets

Here are some datasets that cater to a wide range of computer vision tasks and real-world applications:

- TUM RGB-D: This dataset includes indoor and outdoor RGB-D sequences and is widely used for visual Simultaneous Localization and Mapping (SLAM) research in robotics.

- EuRoC MAV: Focused on Micro Aerial Vehicles (MAVs), this dataset provides RGB-D sequences for tasks such as visual odometry and 3D mapping.

- Colonoscopy CG: a synthetic dataset composed of endoscopic data of the colon, the predominant form of data containing depth maps within the medical field.

- SUN RGB-D: covers multiple scenarios, including indoor, outdoor, and robotic applications. They are suitable for semantic segmentation and object detection and pose.

- RGB-D Object: A collection of everyday objects captured with RGB-D sensors, often used for object recognition and manipulation tasks in robotics.

- Agricultural Robot: Designed for agricultural robotics, this dataset contains RGB-D data for crop detection, weed classification, and other agricultural tasks.

- NYU Hand Pose: contains 72757 training set and 8252 test set frames of RGB-D data for hand pose estimation.

- STanford AI Robot (STAIR) Actions: This dataset contains RGB-D videos of various human actions and activities in indoor environments, which can be valuable for healthcare applications involving activity recognition and monitoring.

- UTD Multimodal Human Action Dataset (UTD-MHAD): is used for research in Human Activity Recognition (HAR), particularly in the field of mobile and wearable computing. It is often used for activities related to healthcare and rehabilitation, including action recognition and fall detection.

- MobiAct: This dataset contains RGB-D videos for Human Physical Activity Recognition (HPAR), e.g., walking, exercising, cooking, drinking, phone conversation, brushing hair, using computer, … It can be applied to healthcare and fitness monitoring.

Please sign in or register for FREE

If you are a registered user on Research Communities by Springer Nature, please sign in