Enhancing Privacy-Preserving Learning for Biomedical Applications with Large-Scale Distributed Data

Published in Bioengineering & Biotechnology, Computational Sciences, and Biomedical Research

Federated learning (FL) is a technique that allows distributed data holders (e.g., hospitals) to collaboratively train an AI model without sharing their data. Departing from the conventional centralized learning model, FL provides a new avenue for keeping local data secure while still benefiting from external data sources.

The Gap Between Theory and Practice Needs to Be Filled

Existing FL research is mostly conducted on image and generic data in simulated environments. It rarely examines biomedical data under real-world implementation considerations such as model size, collaboration scale, and distribution shift. In real-world implementation, especially clinical deployment, these factors are critical even though they are often ignored. For example, hospitals may have different computing resources and infrastructure, which restricts the choice of model architecture and model size. Collaboration may take place in a small network or in large cohorts, which naturally creates a tradeoff between efficiency and performance. Different hospitals may collect data in different formats or from distinct demographic groups, leading to severe data heterogeneity. Blindly applying FL without considering these factors can waste resources, lower performance, or even end collaborations.

Instead of bringing the data to the model, FL brings the model to the data—training algorithms where the data resides.
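As a rough illustration of "bringing the model to the data", the sketch below runs a few rounds of federated averaging on a toy one-dimensional regression problem. It is a minimal sketch under stated assumptions, not the method used in [1] or [2]: the aggregation rule (sample-weighted averaging, often called FedAvg), the three synthetic "sites", and every function name here are hypothetical choices made for illustration. The point to notice is that only model parameters travel between the sites and the server; the raw data points never leave the list that holds them.

```python
import random

def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of SGD on one site's private data for the model y = w*x + b."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)

def fed_avg(client_weights, client_sizes):
    """Average site models, weighting each by its number of local samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def make_client_data(rng, n, x_low, x_high):
    """Hypothetical site data: y = 2x + 1 plus small noise, over a site-specific x range."""
    xs = [rng.uniform(x_low, x_high) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0, 0.05)) for x in xs]

def run_federation(rounds=50, seed=0):
    """Simulate a three-site federation; only (w, b) is exchanged each round."""
    rng = random.Random(seed)
    clients = [
        make_client_data(rng, 40, -1.0, 0.0),   # site A sees only negative x
        make_client_data(rng, 60, 0.0, 1.0),    # site B sees only positive x
        make_client_data(rng, 20, -0.5, 0.5),   # site C is small and centered
    ]
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each site starts from the current global model and trains locally.
        updates = [local_update(global_weights, data) for data in clients]
        # The server sees only the returned parameters and the sample counts.
        global_weights = fed_avg(updates, [len(d) for d in clients])
    return global_weights

if __name__ == "__main__":
    w, b = run_federation()
    print(f"global model: w={w:.2f}, b={b:.2f}")
```

Each simulated site covers a different range of `x`, which is a mild stand-in for the distribution shift discussed above. A real deployment would add secure communication, a proper model, and evaluation on held-out data at each site.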

Preliminary Works and Our Approach

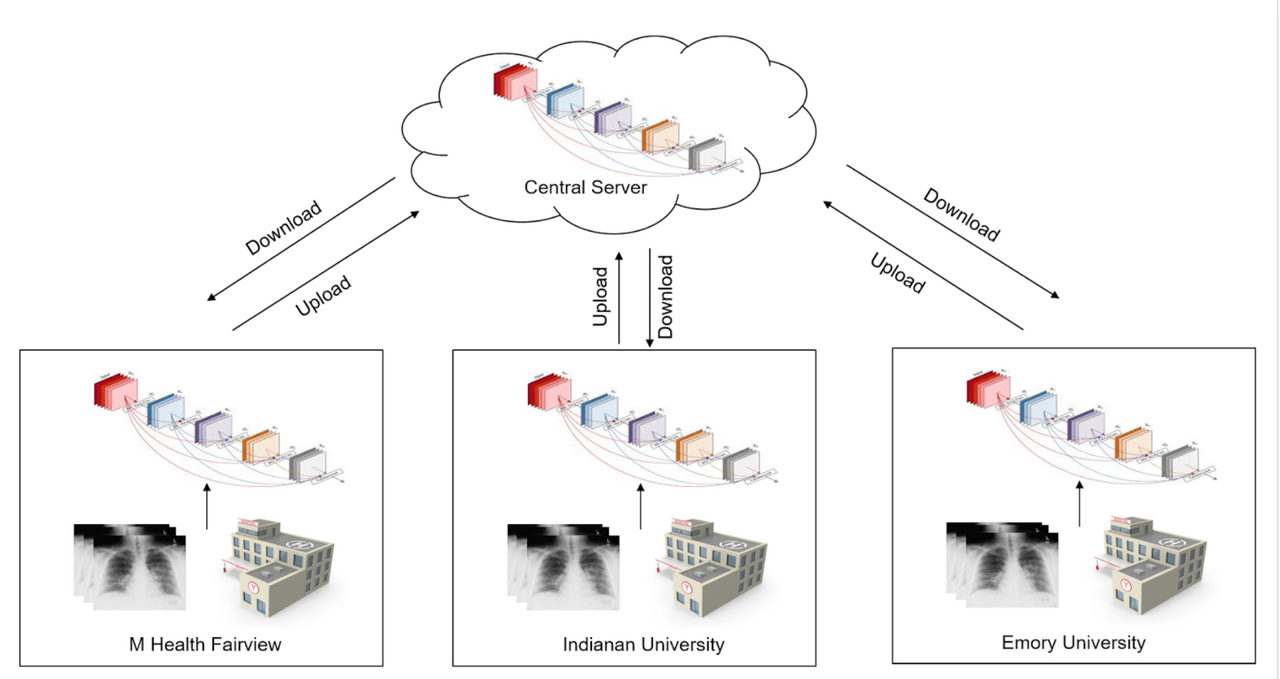

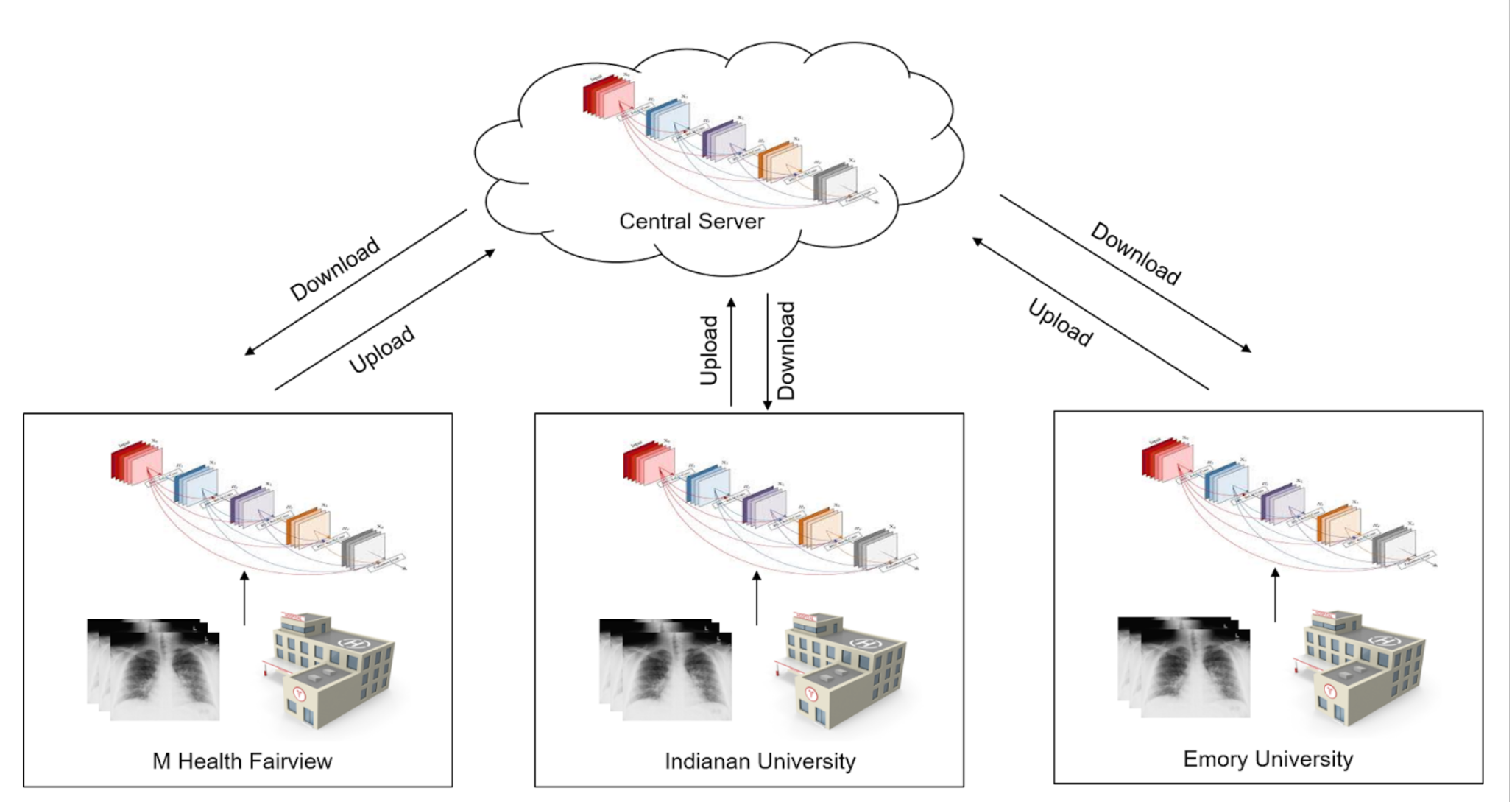

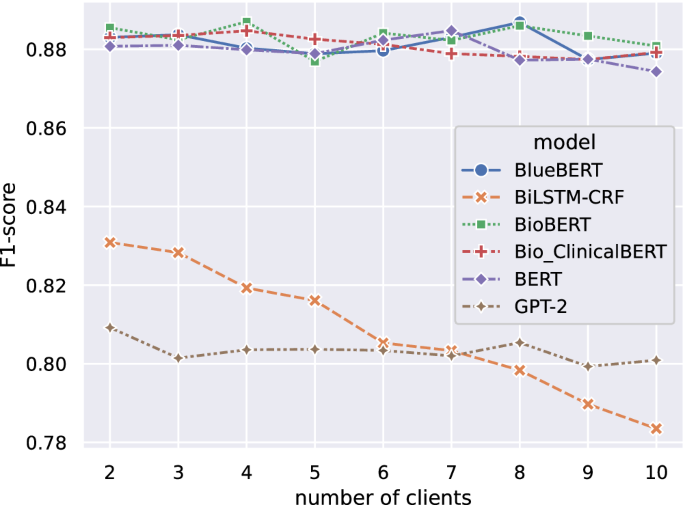

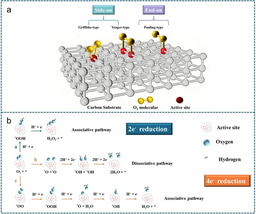

Our group is dedicated to bridging the gap between theory and practice by advancing FL for real-world deployments. In previous collaborative work with Indiana University, Emory University, and MHealth Fairview, we developed a COVID-19 diagnosis model from chest radiographs and deployed it into real-world healthcare systems across three sites, among the first such deployments nationwide (Fig. 1) [1]. During development, we encountered several practical challenges, including data and system heterogeneity, resource constraints, and high communication costs. This inspired us to investigate FL further from a practitioner's perspective. In our latest work [2], we studied two biomedical information extraction tasks and investigated FL under various real-world learning scenarios, such as varied federation scales (Fig. 2), different model architectures (Fig. 3), data heterogeneity, and comparisons with LLMs. Our results shed light on several key practical insights:

- FL is beneficial whether or not data heterogeneity is present

- Large pretrained LMs are more robust to changes in federation scale

- FL significantly outperforms LLMs with few-shot prompting
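To make the federation-scale and data-heterogeneity scenarios more concrete, the sketch below shows one common way such settings are simulated: splitting a labeled dataset across a chosen number of sites, with a Dirichlet concentration parameter controlling how skewed each site's label mix is. This is an illustrative assumption, not necessarily the protocol used in [2]; `partition_by_label`, `alpha`, and the toy labels are hypothetical names and values.

```python
import random
from collections import Counter

def dirichlet(rng, alpha, k):
    """Sample a symmetric Dirichlet(alpha) vector of length k via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]

def partition_by_label(labels, num_clients, alpha, seed=0):
    """Assign sample indices to sites; smaller alpha gives more skewed label mixes."""
    rng = random.Random(seed)
    clients = [[] for _ in range(num_clients)]
    for label in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == label]
        rng.shuffle(idx)
        shares = dirichlet(rng, alpha, num_clients)
        start, cum = 0, 0.0
        for c, share in enumerate(shares):
            cum += share
            # The last site takes whatever remains so every sample is assigned once.
            end = len(idx) if c == num_clients - 1 else round(cum * len(idx))
            clients[c].extend(idx[start:end])
            start = end
    return clients

if __name__ == "__main__":
    labels = [0] * 300 + [1] * 300 + [2] * 300   # toy three-class dataset
    for alpha in (0.5, 100.0):                   # skewed vs. nearly uniform
        parts = partition_by_label(labels, num_clients=5, alpha=alpha)
        print(f"alpha={alpha}")
        for site, part in enumerate(parts):
            print(f"  site {site}: {dict(Counter(labels[i] for i in part))}")
```

Varying `num_clients` changes the federation scale, and varying `alpha` changes how different the sites look from one another, so the same training loop can be rerun across both axes.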


Future Perspectives and Remarks

Our study is still restricted to applications in which all FL participants share the same tasks, which are tightly coupled to the target learning problems. A broader application of FL in healthcare could be less tightly coupled, with different sites pursuing different tasks on weakly correlated data. This may inspire future work on improving FL with multi-task learning or on training foundation models using FL. Potential research directions include accelerating FL to enable the training and fine-tuning of large foundation models and the handling of massive datasets. Additionally, addressing "free riding" and ensuring fairness among participants is crucial, especially when resources are unequal across participants.

References

[1] Peng, L., Luo, G., Walker, A., Zaiman, Z., Jones, E.K., Gupta, H., Kersten, K., Burns, J.L., Harle, C.A., Magoc, T. and Shickel, B. "Evaluation of federated learning variations for COVID-19 diagnosis using chest radiographs from 42 US and European hospitals." Journal of the American Medical Informatics Association (JAMIA), 30(1), pp. 54-63.

[2] Peng, L., Luo, G., Zhou, S., Chen, J., Xu, Z., Sun, J., Zhang, R. "An in-depth evaluation of federated learning on biomedical natural language processing for information extraction." npj Digital Medicine, 7.1 (2024): 127.
