“Learned Sensing” with Reconfigurable Metasurface Technology

Published in Electrical & Electronic Engineering

Humans and animals use light and sound to monitor their surroundings. Our eyes and ears are incredibly elaborate sensors for capturing optical and acoustic waves. Over the course of evolution, Nature has optimized these “sensors”, as well as the way in which our brain interprets the information they acquire. With the advent of “smart” devices that can, for instance, interact with humans by recognizing their gestures, engineers face the question of how to endow man-made devices with similar abilities to monitor their surroundings, that is, to gain “context-awareness”.

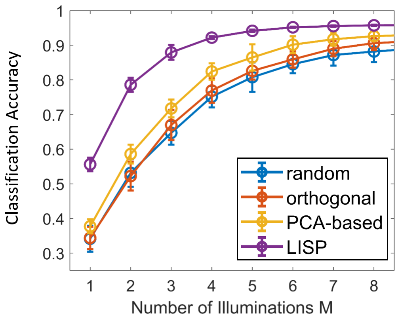

For many “smart” devices, it is important to reduce as much as possible the time required for both acquiring and processing data to answer a specific question, such as “what hand gesture is being displayed?” or “is there a pedestrian on the road ahead?”. For instance, autonomous vehicles must make decisions within tens of milliseconds. Ultimately, this means that each measurement should contain as much information as possible that is relevant to the sensor’s task. To date, however, state-of-the-art sensors are designed with hardware and software considered separately. Consequently, available knowledge about the expected scene, the task to be performed, and the measurement constraints is ignored, even though this a priori knowledge could help craft more efficient measurement procedures.

On the hardware side, the use of microwaves (rather than light or sound) for context-aware devices is emerging as a promising alternative because it enables operation through optically opaque materials and fog, because it is independent of external lighting and target color, and because it limits privacy infringements. Yet generating wave fronts with specific shapes to illuminate the scene is challenging in the microwave domain. Traditional solutions involving arrays of antennas or arrays of tunable reflectors are very costly and bulky, respectively. Most context-aware applications demand simple, cheap and flat solutions. An enticing alternative is the use of reconfigurable metasurface-based hardware: the waves inside a cavity or waveguide, originating from a single excitation, can be leaked to the scene via tiny metamaterial elements patterned into a wall of the structure. Thanks to the tunability of the metamaterial elements (in the simplest case, on/off), wave fronts with different shapes can be generated. More specifically, by carefully tailoring the settings of the metamaterial elements, the wave fronts can be programmed almost at will.
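To make the idea of programming a wave front with on/off elements concrete, here is a deliberately simplified sketch. It assumes each element acts as an independent scalar point source in free space, which ignores the cavity feed, the coupling between elements, and polarization; it is a toy picture, not the model used in our paper. All names (`element_contribution`, `radiated_field`, `binary_focus_mask`) and the chosen geometry are hypothetical.

```python
import cmath
import math


def element_contribution(x, point, wavelength):
    """Scalar free-space wave from a point source at (x, 0), seen at point = (px, pz)."""
    k = 2 * math.pi / wavelength
    r = math.hypot(point[0] - x, point[1])
    return cmath.exp(1j * k * r) / r


def radiated_field(mask, element_x, point, wavelength):
    """Sum the contributions of all elements whose mask entry is 'on' (truthy)."""
    return sum(
        (element_contribution(x, point, wavelength)
         for on, x in zip(mask, element_x) if on),
        0j,
    )


def binary_focus_mask(element_x, point, wavelength):
    """Switch on only the elements that add constructively (non-negative real part)."""
    return [
        1 if element_contribution(x, point, wavelength).real >= 0 else 0
        for x in element_x
    ]


# Assumed toy geometry: 17 elements at half-wavelength spacing, target 0.3 m away.
wavelength = 0.03
element_x = [(j - 8) * wavelength / 2 for j in range(17)]
target = (0.0, 0.3)

all_on = [1] * len(element_x)
focus = binary_focus_mask(element_x, target, wavelength)

field_all = radiated_field(all_on, element_x, target, wavelength)
field_focus = radiated_field(focus, element_x, target, wavelength)

print("mask:", focus)
print("intensity, all on :", abs(field_all) ** 2)
print("intensity, focused:", abs(field_focus) ** 2)
```

Changing the mask changes which contributions interfere at a given point in the scene, so each on/off configuration produces a differently shaped illumination. The hand-crafted focusing rule here is only one possible choice of mask.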

On the software side, machine-learning (ML) tools like artificial neural networks, inspired by the way in which our brain processes information, are gaining traction for analyzing scene images. ML allows one to perform complicated tasks for which one cannot write down analytical instructions, provided one is given a large data set of examples. In a nutshell, the ML “black box” has tunable knobs, and given enough training data these knobs can be adjusted so that the “black box” performs a desired complicated task. A widespread belief is that it is first necessary to generate an image of the scene before one can process the image to answer a specific question. However, this intermediate imaging step requires additional information that is irrelevant to the specific question, and hence it wastes resources.

In this project, we sought to reap the benefits of new ML techniques on the hardware level as well, by jointly optimizing the measurement settings and the processing algorithm for a given sensing task. The goal was to make use of all available a priori knowledge in order to create scene illuminations that extract as much relevant information per measurement as possible, and hence to reduce the number of necessary measurements. The key idea to this end was to interpret the reconfigurable measurement process as an additional trainable layer that could simply be added to the ML pipeline: the tunable metamaterial elements constitute the knobs of this physical layer. In our paper, we refer to this ML architecture containing both physical and digital layers as the “Learned Integrated Sensing Pipeline” (LISP).

Our project is a perfect example of how it takes more than one area of expertise to tackle a challenging problem. The project involved a team from three different groups: the Institut de Physique de Nice in France (where I am currently based), the Metamaterials Group led by Prof. David Smith at Duke University, and Prof. Roarke Horstmeyer from the Computational Optics Lab, also at Duke. I had been pondering how to identify specific settings of the dynamic metasurface transceivers in order to program certain properties of the radiated wave fronts, as opposed to the hitherto conventional use of random settings. When my co-author Dr. Mohammadreza Imani mentioned that this could be useful to help classify gestures, I immediately thought of a recent work by Roarke in a very different context: learning how to illuminate cells in a microscope to decide if they are malaria-infected. I was very excited by the prospect of applying this idea to microwave sensing with metasurface hardware because of its potential to drastically reduce latency and computational burden.

Writing the ML code for this “learned integrated sensing pipeline” was a twofold challenge. On the one hand, I had to familiarize myself with the coupled-dipole model that had been developed over years in the Metamaterials Group to analytically describe the wave fields radiated and captured by the dynamic metasurface transceivers. Mohammadreza's help in grasping all of its subtleties over countless discussions was crucial. On the other hand, learning how to use TensorFlow (an open-source ML library), and in particular its low-level programming interface to code custom layers corresponding to this analytical forward model, allowed me to discover the world of ML. Roarke was a valuable guide in this process, for instance by pointing out that a ‘temperature parameter’ trick allows one to end up with binary weights, which is required for the on/off tunable metamaterial elements we considered in this work.
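The text above does not spell out the exact form of the ‘temperature parameter’ trick, so the following is only a minimal sketch of one common variant, under the assumption that each on/off element is represented by a continuous trainable weight passed through a sigmoid whose sharpness is set by a temperature. At high temperature the mask is soft and differentiable; as the temperature is lowered the mask approaches strictly binary values. The function names and the example weights are hypothetical.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relaxed_mask(weights, temperature):
    """Soft on/off states in (0, 1); they sharpen toward {0, 1} as temperature -> 0."""
    return [sigmoid(w / temperature) for w in weights]


# Hypothetical continuous weights, one per metamaterial element.
weights = [-1.2, -0.3, 0.4, 2.0]

# Lowering the temperature (e.g. gradually during training) hardens the mask.
for temperature in (1.0, 0.1, 0.01):
    mask = relaxed_mask(weights, temperature)
    print(temperature, [round(m, 4) for m in mask])
```

In a training loop, one would typically keep the temperature high at first so that gradients can flow through the soft mask, then lower it step by step so that the final weights can be rounded to on/off states with negligible change in the output.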

Interestingly, in our work an idea was transposed from optics to the microwave domain. In the past, I had seen many examples of ideas first being explored and deployed with lower-frequency wave phenomena like acoustics and microwaves (where experiments are in general easier due to the larger wavelengths) before eventually being transposed to optics. But ML is clearly finding its way into the wave engineering community via the optical domain, probably because computer vision traditionally uses optical images.

At a point when our project had already made considerable progress, a related study was published by a different group: “Machine-Learning Reprogrammable Metasurface Imager”. At first sight, this title appeared to ruin the impact of our work. However, we quickly realized that this study only made use of a priori knowledge of the scene and did not consider a jointly optimized hardware-software pipeline. The existence of this study motivated us to go further than initially intended by benchmarking our method against the one used in this study. Ultimately, I believe that this benchmarking made our paper stronger and more convincing.

Looking ahead, our main goal now is to demonstrate our principle in experiments with real-life tasks. Given the widespread need for microwave sensing, our work is poised to impact a wide range of highly topical areas of our modern society, such as health care (ambient-assisted living), security (concealed-threat detection), mobility (autonomous vehicles) and human-device interaction. Besides its role in “context-awareness”, we would also like our work to contribute to the proliferation of the “learned sensing” paradigm in other areas of engineering. In fact, any reconfigurable measurement procedure used to answer a specific question can benefit from “learned sensing”. We are now talking to researchers in different fields who could potentially benefit from it.

Our work contributes to making smart devices a little smarter: with the processing task “at the back of their mind”, sensors can now discriminate between relevant and irrelevant information already during data acquisition. Yet there is still a lot of inspiration to be taken from Nature: for instance, our brain makes on-the-fly decisions about how to guide our eyes and visual attention, based on what we have just seen. In a similar spirit, it may be possible to upgrade our method using recurrent architectures.

Reference:

Philipp del Hougne, Mohammadreza F. Imani, Aaron V. Diebold, Roarke Horstmeyer, David R. Smith, “Learned Integrated Sensing Pipeline: Reconfigurable Metasurface Transceivers as Trainable Physical Layer in an Artificial Neural Network”, Advanced Science 1901913 (2019).
