Mobile phones may bring machine learning technology to resource-limited settings

Published in Healthcare & Nursing

Chest X-rays (CXRs) are a fundamental investigation in clinical practice. In Europe, CXRs are the diagnostic investigation most frequently requested by general practitioners, and in the United States an estimated 110 million CXRs are performed annually.1,2 Given how ubiquitous CXRs are in Western countries, it is difficult to imagine caring for patients without access to this seemingly simple diagnostic test. Lower respiratory tract infections are the second leading cause of death in low-income countries and the leading cause of child mortality worldwide.3-5 Despite this, access to CXRs and to radiologists is extremely limited in low- and middle-income countries (LMICs).6,7 Sub-Saharan Africa is reported to have 0.9 radiologists per 1 million population (Zambia, for example, has two radiologists for a population of almost 12 million people), compared with 104.4 radiologists per 1 million population in the United States.8 Correcting this inequitable access to radiologists may be an extremely effective intervention in resource-limited settings, particularly given the high burden of respiratory disease in these locations.3 While developing radiology training programs in LMICs likely represents the ideal solution, machine learning has been identified as a potential strategy to improve patient care in the interim.8
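The density figures above can be cross-checked with a few lines of arithmetic. Taking the Zambian example at face value (and rounding "almost 12 million" up to 12 million, an assumption made only for this calculation), two radiologists corresponds to roughly 0.17 per million, well under the regional average of 0.9, while the US figure is about 116 times the Sub-Saharan African one. The helper name `per_million` is purely illustrative:

```python
def per_million(radiologists: int, population: float) -> float:
    """Radiologists per 1 million population (illustrative helper)."""
    return radiologists / population * 1_000_000


SSA_PER_MILLION = 0.9   # Sub-Saharan Africa, as quoted in the text
US_PER_MILLION = 104.4  # United States, as quoted in the text

# Zambian example from the text; "almost 12 million" is rounded up to 12 million here.
zambia = per_million(2, 12_000_000)
gap = US_PER_MILLION / SSA_PER_MILLION

print(f"Zambia: {zambia:.2f} radiologists per million")  # 0.17
print(f"US vs Sub-Saharan Africa: {gap:.0f}-fold")        # 116
```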

As machine learning and artificial intelligence have emerged in medicine, the interpretation of radiological images has proven a popular application for the technology.9 Convolutional neural networks have been used to develop algorithms that interpret CXRs with accuracy rivalling that of expert radiologists.10-15 While such diagnostic tools could be extremely useful in LMICs, the infrastructure and resources they require may limit their feasibility.16,17 However, the increasingly widespread availability of mobile phones and internet connectivity may allow those in resource-limited settings to access machine learning technology.18,19 The most practical workflow would involve using a mobile phone to photograph a CXR displayed on a monitor and having that photograph analysed by a machine learning algorithm, provided such photographs can be analysed reliably.

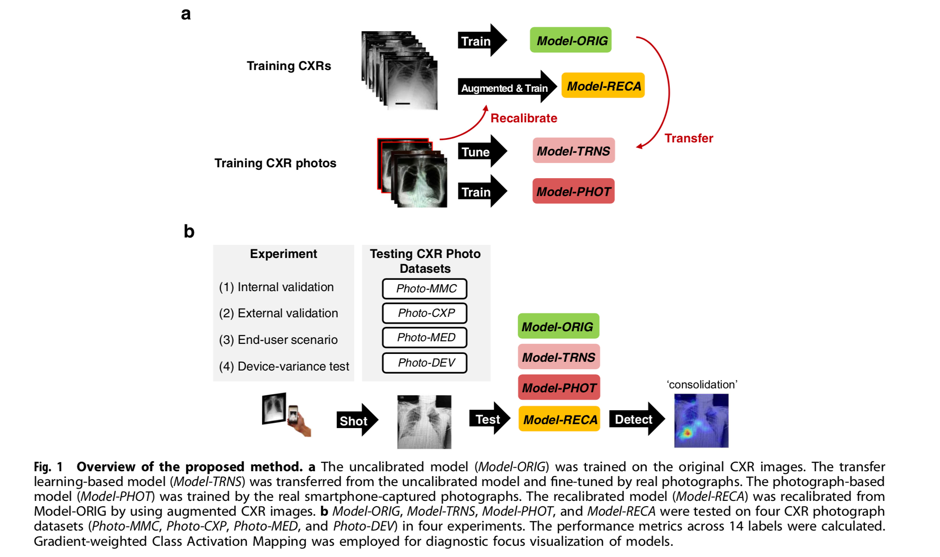

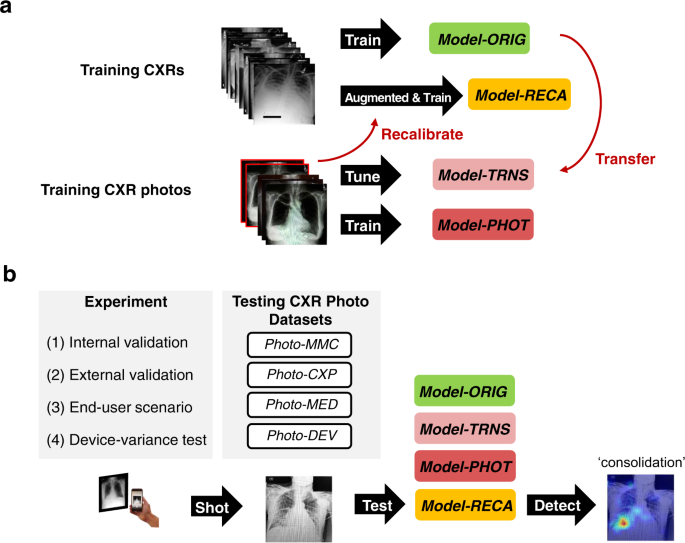

In their article “Recalibration of deep learning models for abnormality detection in smartphone-captured chest radiograph”, Kuo et al outline a method through which machine learning models can be recalibrated to analyse photographs of CXRs taken on mobile phones.20 First, deep learning models were developed using 1,759 photographs from the MIMIC-CXR dataset.21 One of these models (Model-RECA) was then recalibrated using simulated “photographic-like” CXR images, generated specifically to contain the noise expected in photographs taken with mobile phones. External validation was performed using 1,337 photographs from the CheXpert CXR dataset.22 The models were subsequently tested in an ‘end-user scenario’, in which a group of medical residents photographed CXRs using their own mobile phones and computer monitors. Finally, a device-variance test was performed, in which a single physician photographed the same set of CheXpert CXRs ten times using different combinations of smartphones and computer monitors (Figure 1).
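The article does not spell out how the “photographic-like” images were generated. As a minimal sketch, assuming a simple set of degradations (random gamma, slight blur, moiré-like stripes and Gaussian sensor noise) chosen here for illustration rather than taken from Kuo et al, such images could be simulated from a normalised digital CXR with NumPy. The function `simulate_photo` and all of its parameters are hypothetical:

```python
import numpy as np


def simulate_photo(
    cxr: np.ndarray,
    rng: np.random.Generator,
    noise_sd: float = 0.03,
    moire_amp: float = 0.02,
    gamma_range: tuple[float, float] = (0.8, 1.2),
) -> np.ndarray:
    """Degrade a 2-D CXR (floats in [0, 1]) to look more like a phone photo of a monitor.

    Hypothetical, illustrative degradations only; not the procedure used by Kuo et al.
    """
    img = np.clip(cxr.astype(np.float64), 0.0, 1.0)
    h, w = img.shape

    # 1. Random gamma: mimics differences in monitor and camera brightness response.
    img = img ** rng.uniform(*gamma_range)

    # 2. 3x3 box blur (edge-padded): mimics slight defocus or hand movement.
    padded = np.pad(img, 1, mode="edge")
    img = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0

    # 3. Sinusoidal stripes with random angle and frequency: a crude stand-in for moire
    #    patterns from the screen's pixel grid interfering with the camera sensor.
    yy, xx = np.mgrid[0:h, 0:w]
    theta = rng.uniform(0.0, np.pi)
    freq = rng.uniform(0.05, 0.2)  # cycles per pixel
    img = img + moire_amp * np.sin(2 * np.pi * freq * (xx * np.cos(theta) + yy * np.sin(theta)))

    # 4. Additive Gaussian sensor noise, then clip back to the valid intensity range.
    img = img + rng.normal(0.0, noise_sd, size=img.shape)
    return np.clip(img, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    digital_cxr = rng.random((64, 64))  # placeholder for a real, normalised CXR
    photo_like = simulate_photo(digital_cxr, rng)
    print(photo_like.shape, float(photo_like.min()), float(photo_like.max()))
```

Images produced this way would then feed a recalibration step (for example, fine-tuning an already-trained model); that training loop depends on the deep learning framework and is not shown. Passing the random generator in explicitly keeps the augmentation reproducible.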

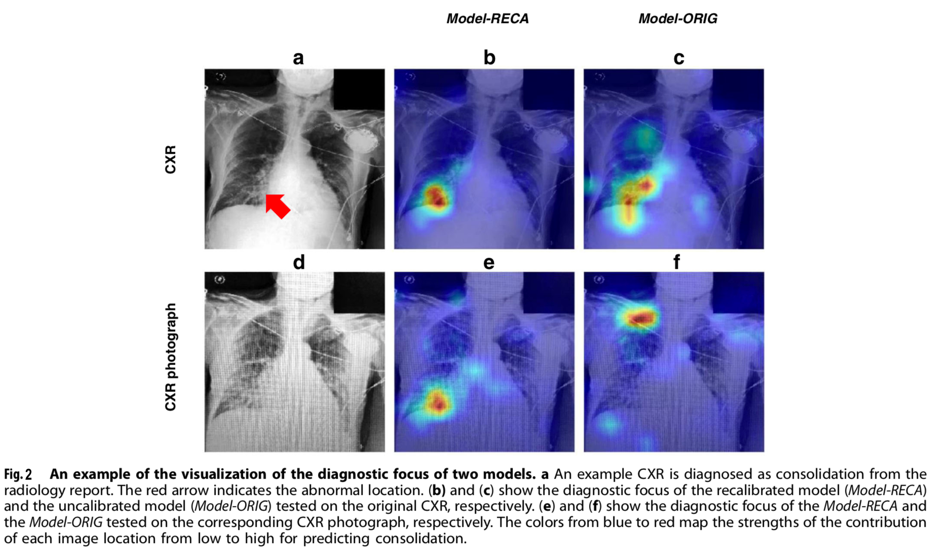

Kuo et al demonstrated that deep learning models trained on standard digital CXRs perform poorly when applied to photographs of CXRs. However, by recalibrating the model using a small number of photographs of CXRs from publicly available datasets, most of the lost performance could be recovered. Furthermore, in the ‘end-user’ scenario the recalibrated model performed best of the models tested, matching the comparison reference (area under the receiver operating characteristic curve, AUROC = 0.75). Performance also did not vary significantly across devices or operators. Figure 2 shows an example visualisation of the original and recalibrated models localising a diagnostic focus on a CXR and on a CXR photograph.
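AUROC can be read as the probability that a model scores a randomly chosen abnormal image higher than a randomly chosen normal one. A minimal pure-Python sketch of that definition (the Mann-Whitney formulation, with ties counted as half) is shown below, together with a hypothetical helper that summarises how AUROC spreads across phone/monitor combinations, in the spirit of the device-variance test. The function names and input format are assumptions, the toy data are made up, and the spread summary is descriptive only, not a test of statistical significance:

```python
import statistics


def auroc(labels: list[int], scores: list[float]) -> float:
    """AUROC as P(score of a random positive > score of a random negative), ties counted as 0.5.

    Mann-Whitney formulation: compares every positive-negative pair, which is fine for
    small evaluation sets but quadratic in the number of cases.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def device_spread(results: dict[str, tuple[list[int], list[float]]]) -> dict[str, float]:
    """Descriptive spread of AUROC across device combinations (hypothetical input format).

    `results` maps a phone/monitor combination name to (labels, scores) for the same CXRs.
    """
    aucs = [auroc(labels, scores) for labels, scores in results.values()]
    return {
        "mean": statistics.mean(aucs),
        "sd": statistics.stdev(aucs) if len(aucs) > 1 else 0.0,
        "min": min(aucs),
        "max": max(aucs),
    }


if __name__ == "__main__":
    labels = [0, 0, 0, 1, 1]            # toy data: 1 = abnormal
    scores = [0.2, 0.6, 0.3, 0.5, 0.9]  # toy model outputs
    print(auroc(labels, scores))  # 5 of the 6 positive-negative pairs ranked correctly, i.e. 5/6
```

Libraries such as scikit-learn compute the same quantity more efficiently; the explicit pairwise loop is used here only because it mirrors the definition directly.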

While the results are promising, the authors acknowledge several limitations of their work. They explain that all CXRs used in the study were from patients treated in the United States. As previous work has shown, machine learning models developed in one population may not generalise to populations in LMICs and may contain inherent biases.23,24 Consequently, before implementation in a resource-limited setting, the model should be recalibrated using CXRs and photographs from the local population, as radiological findings may differ between populations. Additionally, the relative importance of model performance metrics depends on the clinical situation in which the tool is used. As the authors explain, while the study reported AUROC as a measure of model discrimination, clinicians may weigh precision and sensitivity differently depending on the purpose of the tool (e.g. sensitivity may be the priority for screening). Furthermore, the performance of all models varied substantially across pathologies, including cardiomegaly, edema, consolidation, atelectasis, pneumothorax and pleural effusion. Clinicians using this tool would need to be aware of such variations and account for the possibility of false-negative and false-positive results in their clinical practice, as with any other diagnostic investigation.
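Because AUROC summarises performance across all possible thresholds, deploying such a tool still requires choosing an operating threshold for each finding. A minimal sketch, assuming the model outputs one score per image and that a screening use case sets a target sensitivity (the 0.95 default is an illustrative assumption, not a value from the study), shows how sensitivity, specificity and precision trade off and how a threshold could be chosen. The function names are hypothetical; in practice the selection would be run separately for each pathology, where differing performance would show up as different thresholds and specificities:

```python
import math


def metrics_at(labels: list[int], scores: list[float], threshold: float) -> dict[str, float]:
    """Sensitivity, specificity and precision when scores >= threshold are called abnormal."""
    tp = fp = fn = tn = 0
    for y, s in zip(labels, scores):
        predicted_positive = s >= threshold
        if y == 1 and predicted_positive:
            tp += 1
        elif y == 1:
            fn += 1
        elif predicted_positive:
            fp += 1
        else:
            tn += 1

    def ratio(num: int, den: int) -> float:
        return num / den if den else math.nan  # undefined when the denominator is zero

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "precision": ratio(tp, tp + fp),
    }


def screening_threshold(labels: list[int], scores: list[float],
                        target_sensitivity: float = 0.95) -> float:
    """Highest threshold that still reaches the target sensitivity.

    Sensitivity can only fall as the threshold rises, so scanning candidate thresholds from
    high to low and stopping at the first one that meets the target gives the most specific
    operating point that satisfies the sensitivity requirement.
    """
    for t in sorted(set(scores), reverse=True):
        if metrics_at(labels, scores, t)["sensitivity"] >= target_sensitivity:
            return t
    # Reached only if there are no positive cases (or the target exceeds 1).
    return min(scores)


if __name__ == "__main__":
    labels = [0, 0, 0, 1, 1]            # toy data: 1 = abnormal
    scores = [0.2, 0.6, 0.3, 0.5, 0.9]  # toy model outputs
    t = screening_threshold(labels, scores)
    print(t, metrics_at(labels, scores, t))
```

Lowering the target sensitivity moves the threshold up, which raises specificity and precision at the cost of more missed abnormalities; that trade-off is the judgement clinicians would need to make for each intended use.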

In summary, Kuo et al envision that their work may assist clinicians in resource-limited settings, where radiologists may not be available to interpret CXRs. They outline a process through which mobile phones may be used to access machine learning technology, together with a method for recalibrating deep learning models to account for the loss of image quality when a CXR is photographed on a mobile phone. While this does not replace the need to train radiologists in resource-limited settings, such a tool, if validated, could help clinicians at the bedside.

References

1. Mettler Jr, F.A., et al. Patient exposure from radiologic and nuclear medicine procedures in the United States: procedure volume and effective dose for the period 2006–2016. Radiology 295, 418-427 (2020).

2. Speets, A., et al. Chest radiography and pneumonia in primary care: diagnostic yield and consequences for patient management. European Respiratory Journal 28, 933-938 (2006).

3. World Health Organization. The top 10 causes of death. https://www.who.int/news-room/fact-sheets/detail/the-top-10-causes-of-death (2020).

4. Bryce, J., Boschi-Pinto, C., Shibuya, K., Black, R.E. & WHO Child Health Epidemiology Reference Group. WHO estimates of the causes of death in children. The Lancet 365, 1147-1152 (2005).

5. Chen, K.-C., et al. Diagnosis of common pulmonary diseases in children by X-ray images and deep learning. Scientific Reports 10, 1-9 (2020).

6. Maru, D.S.-R., et al. Turning a blind eye: the mobilization of radiology services in resource-poor regions. Globalization and Health 6, 1-8 (2010).

7. Silverstein, J. Most of the world doesn't have access to X-rays. The Atlantic, https://www.theatlantic.com/health/archive/2016/09/radiology-gap/501803/ (2016).

8. Rosman, D.A., Bamporiki, J., Stein-Wexler, R. & Harris, R.D. Developing diagnostic radiology training in low resource countries. Current Radiology Reports 7, 1-7 (2019).

9. Reardon, S. Rise of robot radiologists. Nature 576, S54-S54 (2019).

10. Rajpurkar, P., et al. CheXNet: radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv preprint arXiv:1711.05225 (2017).

11. Rajpurkar, P., et al. Deep learning for chest radiograph diagnosis: a retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Medicine 15, e1002686 (2018).

12. Wang, X., et al. ChestX-ray8: hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2097-2106 (2017).

13. Lakhani, P. & Sundaram, B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 284, 574-582 (2017).

14. Tang, Y.-X., et al. Automated abnormality classification of chest radiographs using deep convolutional neural networks. npj Digital Medicine 3, 1-8 (2020).

15. Guendel, S., et al. Learning to recognize abnormalities in chest X-rays with location-aware dense networks. In Iberoamerican Congress on Pattern Recognition, 757-765 (Springer, 2018).

16. Wahl, B., Cossy-Gantner, A., Germann, S. & Schwalbe, N.R. Artificial intelligence (AI) and global health: how can AI contribute to health in resource-poor settings? BMJ Global Health 3, e000798 (2018).

17. Reddy, C.L., Mitra, S., Meara, J.G., Atun, R. & Afshar, S. Artificial intelligence and its role in surgical care in low-income and middle-income countries. The Lancet Digital Health 1, e384-e386 (2019).

18. Arie, S. Can mobile phones transform healthcare in low and middle income countries? BMJ 350 (2015).

19. Aranda-Jan, C.B., Mohutsiwa-Dibe, N. & Loukanova, S. Systematic review on what works, what does not work and why of implementation of mobile health (mHealth) projects in Africa. BMC Public Health 14, 1-15 (2014).

20. Kuo, P.-C., et al. Recalibration of deep learning models for abnormality detection in smartphone-captured chest radiograph. npj Digital Medicine 4, 1-10 (2021).

21. Johnson, A.E., et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Scientific Data 6, 1-8 (2019).

22. Irvin, J., et al. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33, 590-597 (2019).

23. Wright, J. & Verity, A. Artificial Intelligence Principles for Vulnerable Populations in Humanitarian Contexts. Digital Humanitarian Network (2020).

24. McCoy, L.G., Banja, J.D., Ghassemi, M. & Celi, L.A. Ensuring machine learning for healthcare works for all. BMJ Health & Care Informatics 27 (2020).
