Plenty of Reviewers Worldwide, Just Don't Use Google Scholar to Find Them

Published in Healthcare & Nursing, Astronomy, and Social Sciences

Back in 2023, Scholarly Kitchen’s Rick Anderson identified the shrinking reviewer pool as one of the most pressing issues for the future of peer review. Anecdotally, horror stories floating around editorial circles tell of as many as 50 reviewer invitations for a single manuscript.

Last year’s MIT Access to Science and Scholarship report points out that growing publication volume is one of the main culprits:

The growth in scholarly literature produced every year is also causing the number of peer review requests to skyrocket, raising concerns that the scientific community cannot sustain the pressure of increased requests to peer review publications and that continued increases in peer review requests could potentially erode the overall quality of peer review.

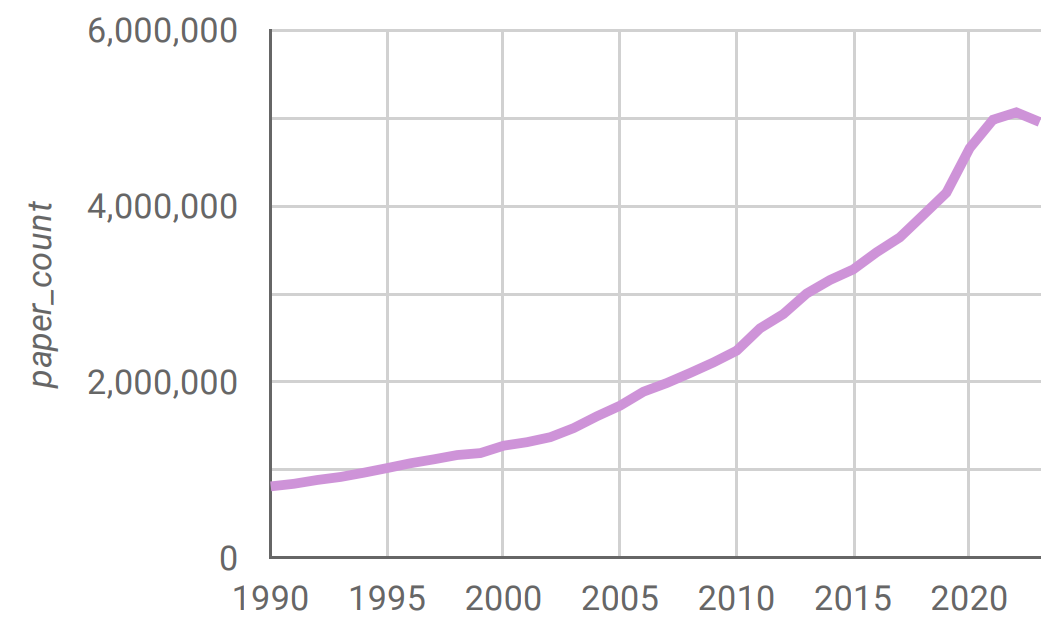

Indeed, yearly publication volume has been hovering around 5M articles since 2021. This is an increase of more than 290% over the last 20 years, driven both by the expansion of existing publishers’ portfolios and by the emergence of new born-OA titles.
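To put those two numbers in perspective, here is a back-of-the-envelope sketch. It takes the "around 5M" and "more than 290%" figures at face value and assumes smooth compound growth over the 20 years, which is a simplification; the variable names are purely illustrative.

```python
# Figures quoted in the text (290% is a lower bound there).
current_volume = 5_000_000   # articles per year, "around 5M"
increase_pct = 290           # percent increase over the period
years = 20

# A 290% increase means today's volume is 3.9x the starting volume.
growth_factor = 1 + increase_pct / 100

# Implied volume 20 years ago and implied average annual growth,
# assuming (for illustration only) steady compound growth.
baseline_volume = current_volume / growth_factor
annual_growth = growth_factor ** (1 / years) - 1

print(f"Implied baseline: {baseline_volume:,.0f} articles per year")
print(f"Implied average annual growth: {annual_growth:.1%}")
```

Under these assumptions the starting point is roughly 1.3M articles a year, and the average growth rate is about 7% a year.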

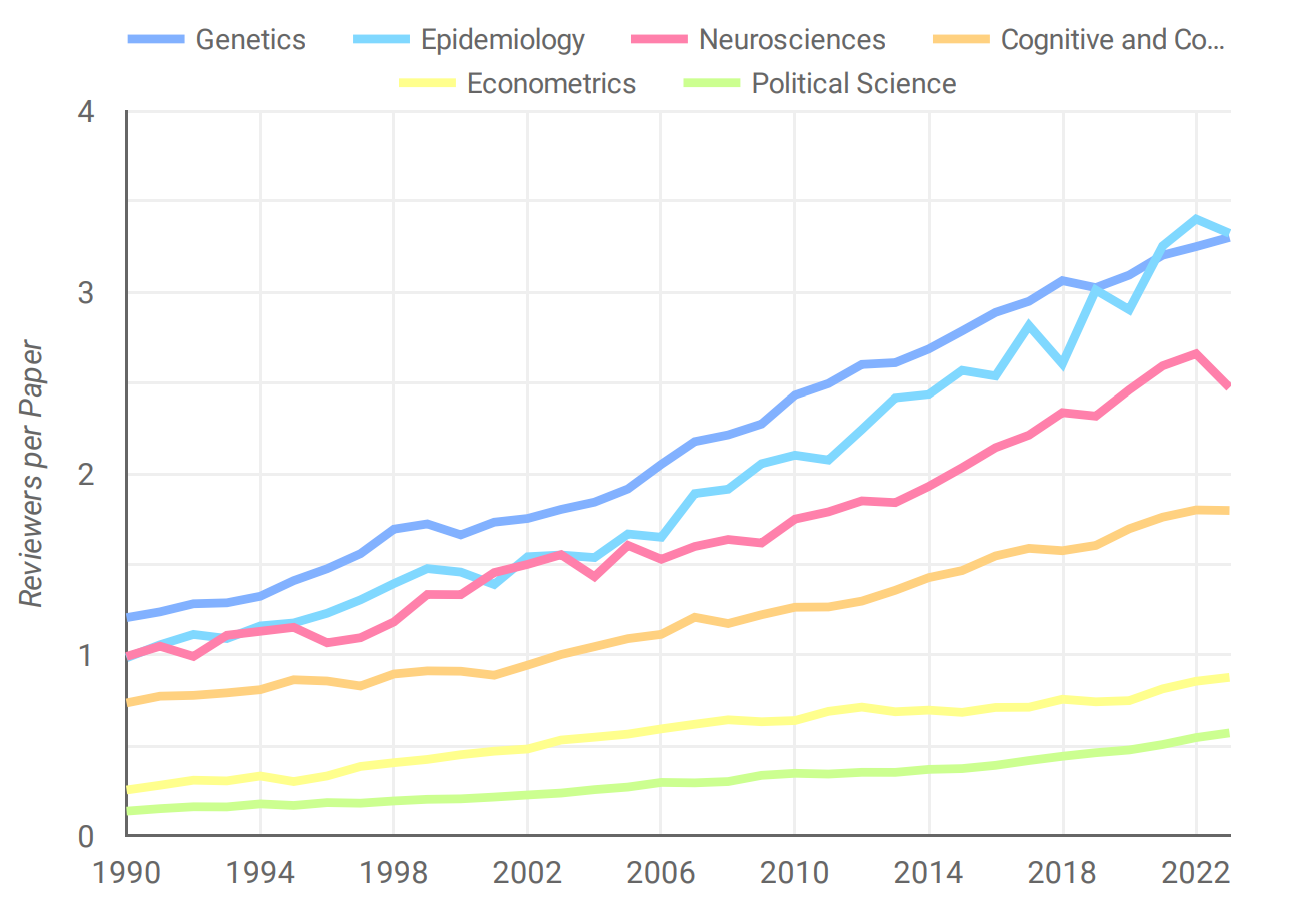

But what this data trend does not show is how the eligible reviewer pool has changed in the same time period. With a few lazy assumptions about who qualifies as a potential reviewer*, I calculated the relative size of the global reviewer pool for a number of fields.

The trend is widespread: across the majority of fields, we now have more reviewers than we did 10 years ago, and there are no signs that the growth is slowing. While authors publish more, they also publish in larger teams, as Wuchty et al. showed in 2007. This means that the number of unique authors per paper is increasing.

If the overall reviewer pool is growing faster than publication output in most fields, why is it so difficult to get a paper reviewed these days? The reasons are likely multiple and complex. Increasing workloads of existing reviewers, frustration with commercial publishers, lack of good incentives, and poor thematic match all likely play a role.

But above all, the reviewer list that many selective journals use is a limited and non-representative subset of the global knowledge pool. The same reviewers tend to be invited repeatedly, because of the editor’s familiarity with their work or their perceived importance in the field. Others are left out completely. As a result, more and more invitations get declined. Turnaround times get longer and editorial workloads heavier.

So to more effectively use the global reviewer pool, academic editors and publishers could consider the following strategies.

Don’t use Google Scholar

For many editors, Google Scholar is a go-to resource for all search tasks. Checking if the findings are new? Google Scholar. Researching a topic? Google Scholar. Reviewer search? You guessed it. Journals even have dedicated DIY tools and search string generators for GS-based conflict-of-interest checks.

But Google Scholar is not a reviewer finding tool. We don’t really know what the algorithm behind GS search does. Or which outputs it decides to serve first and which ones are way down the list. The results are biased, likely in a way that excludes a large part of the global reviewer pool. The results are not reproducible and the data are externally owned. It is easily manipulable. It is slow to work with, and requires additional time to locate reviewer contact details (again, much easier for reviewers already in the system, which is part of the problem).

Instead, develop and use tools designed for the purpose, built around smart and transparent matching, featuring automated suggestions, frequent refreshes of the underlying data, and good global coverage. Integrate them into existing editorial systems, and track global invitation/acceptance rates. Use filters that exclude over-invited reviewers and Western institutions.
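As a minimal sketch of what tracking invitation/acceptance rates and filtering out over-invited reviewers could look like, consider the snippet below. Everything in it is hypothetical: the `Reviewer` record, the `max_invitations` cap of 5, and the sample data are assumptions for illustration, not a description of any existing editorial system.

```python
from dataclasses import dataclass


@dataclass
class Reviewer:
    """Hypothetical reviewer record kept by an editorial system."""
    name: str
    invitations: int   # invitations sent within some tracking window
    acceptances: int   # invitations accepted within the same window


def acceptance_rate(r: Reviewer) -> float:
    """Share of invitations accepted (0.0 if the reviewer was never invited)."""
    return r.acceptances / r.invitations if r.invitations else 0.0


def not_over_invited(pool: list[Reviewer], max_invitations: int = 5) -> list[Reviewer]:
    """Drop reviewers above the (assumed) invitation cap; least-invited first."""
    eligible = [r for r in pool if r.invitations <= max_invitations]
    return sorted(eligible, key=lambda r: r.invitations)


# Made-up sample data.
pool = [
    Reviewer("A", invitations=12, acceptances=3),
    Reviewer("B", invitations=2, acceptances=1),
    Reviewer("C", invitations=0, acceptances=0),
]

shortlist = not_over_invited(pool)
print([r.name for r in shortlist])
print({r.name: acceptance_rate(r) for r in pool})
```

Sorting by invitation count is just one possible design choice; it pushes never-invited researchers to the top of the shortlist, which is the opposite of what familiarity-driven selection tends to do.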

Showcase value for junior reviewers

Consider relying much more on junior faculty and postdocs as expert reviewers. In many cases, postdocs are the best and most attentive referees, going above and beyond what their senior peers contribute.

But in today’s academic environment, with its increasing resentment towards commercial publishers, first-time reviewers need to see the added value of participating in peer review more clearly. Editors need to show that there is a good alignment between what journals want (publishing good quality papers) and what early-career researchers (ECRs) want (career development, networking, and recognition). So consider offering workshops, masterclasses, reviewer-only networking events, or personal invitations to write. Perhaps send a thank-you postcard once a year.

Embrace non-human reviewers

Part of the reason peer review is considered a waste of time by many is that a lot of it consists of drone tasks. Things like checking stats reporting, significance levels, statements regarding research ethics or consent, data availability, code, and so on.

These tasks can be partially automated using old-fashioned algorithms, but LLMs make it easy. Aside from the upfront investment in infrastructure and the general AI anxiety, I see no good reason why LLM-based checks would not become the norm in scholarly publishing within the next couple of years, alleviating a large part of the current reviewer workload.

These review assistants should seamlessly integrate with whatever editorial system the journal uses. Whenever a human reviewer sees the paper for the first time, it should already come with an initial pre-review report.

Pay them

This is a highly controversial discussion, I know, and something that has been talked about publicly, mostly by reviewers themselves (who see the need) and less so by publishers (who would presumably organize the transaction) and science funders (who would probably foot most of the final bill).

People have raised concerns related to ethics and potential inequalities around financial incentives in this space, but I don’t believe they are valid. I mostly agree with what Stanford’s Mario Malicki said in his recent talk on The Future of Peer Review and Reproducible Science at DeSci Foundation: paying for the service also allows you to demand a high quality product.

Paying for stats review gives you the right to demand a full and comprehensive evaluation; similarly, paying for code review means you can actually ask that the referee run the code and check its validity. Rather than compromising research integrity, this would actually increase the quality of research outputs, another widely cited problem in today’s peer review ecosystem.

Then there is the issue of cost, which could fall on funders or be integrated into more equitable OA models that do not necessarily cut into current margins.

For a large selective OA journal such as Nature Communications, compensating peer reviewers could add an extra expenditure of $800 per peer-reviewed paper. This translates into a roughly $2,000 increase in APC ($6,790 currently), but only because accepted papers effectively subsidize peer review services provided to rejected manuscripts.
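The gap between the $800 and the $2,000 figures can be unpacked with a little arithmetic. The sketch below assumes (my reading of the numbers, not something stated explicitly) that every manuscript sent out for review costs $800 in reviewer compensation and that the full cost is recovered only through the APCs of accepted papers.

```python
# Figures quoted in the text (USD).
review_cost_per_reviewed_paper = 800
apc_increase = 2_000

# If accepted papers carry the review costs of all reviewed manuscripts:
#   apc_increase = review_cost_per_reviewed_paper / acceptance_rate
# so the quoted figures imply the following acceptance rate among
# peer-reviewed manuscripts (an inferred value, not a reported statistic).
implied_acceptance_rate = review_cost_per_reviewed_paper / apc_increase

# Equivalently: how many reviewed manuscripts each accepted paper pays for.
reviewed_per_accepted = 1 / implied_acceptance_rate

print(f"Implied acceptance rate after review: {implied_acceptance_rate:.0%}")
print(f"Reviewed manuscripts paid for per accepted paper: {reviewed_per_accepted:.1f}")
```

In other words, under these assumptions each accepted paper pays for about two and a half rounds of review, its own plus those of manuscripts rejected after review.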

Alternative unbundled fee-for-service models without cross-paper subsidies could distribute review costs more equitably, ultimately reducing APCs for accepted manuscripts. Science funders already pay for grant reviews, so the extension to reviewing the final outcomes would seem natural. This can create other beneficial by-products, such as incentives to get a paper reviewed only once or to submit to a journal where the paper is more likely to succeed.

*I assumed that an author can be considered an eligible reviewer in year X if they are actively publishing and have an above-threshold cumulative citation count. For the figure above, that threshold is 20.
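For readers who prefer code, the eligibility rule above could be sketched roughly as follows. The footnote does not define "actively publishing", so the three-year `active_window` is a placeholder of my choosing for this sketch, as are the function name, the data layout, and the strict "greater than" comparison against the threshold.

```python
def is_eligible(pub_years: list[int], citations_by_year: dict[int, int],
                year: int, threshold: int = 20, active_window: int = 3) -> bool:
    """Return True if an author counts as an eligible reviewer in `year`.

    Assumptions (not from the original analysis):
    - "actively publishing" = at least one paper in the last `active_window`
      years, including `year` itself;
    - citations are cumulative up to and including `year`;
    - "above-threshold" is read as strictly greater than `threshold`.
    """
    active = any(year - active_window < y <= year for y in pub_years)
    cumulative = sum(c for y, c in citations_by_year.items() if y <= year)
    return active and cumulative > threshold


# Made-up author: papers in 2015 and 2019, citations received per year.
pubs = [2015, 2019]
cites = {2016: 5, 2018: 10, 2019: 8}

print(is_eligible(pubs, cites, 2018))  # no recent paper within the window
print(is_eligible(pubs, cites, 2020))  # recent paper and 23 cumulative citations
```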
