RoboMNIST: A Multimodal Dataset for Multi-Robot Activity Recognition Using WiFi Sensing, Video, and Audio

Published in Electrical & Electronic Engineering and Mathematical & Computational Engineering Applications

Robots need to perceive their surroundings accurately, and traditional computer vision, for all its breakthroughs, can fall short in real-world settings where lighting is poor or objects block the view. RoboMNIST addresses these challenges by combining three types of sensor data (WiFi sensing, video, and audio), offering a more complete picture of robot activity.

Reimagining a Classic Benchmark

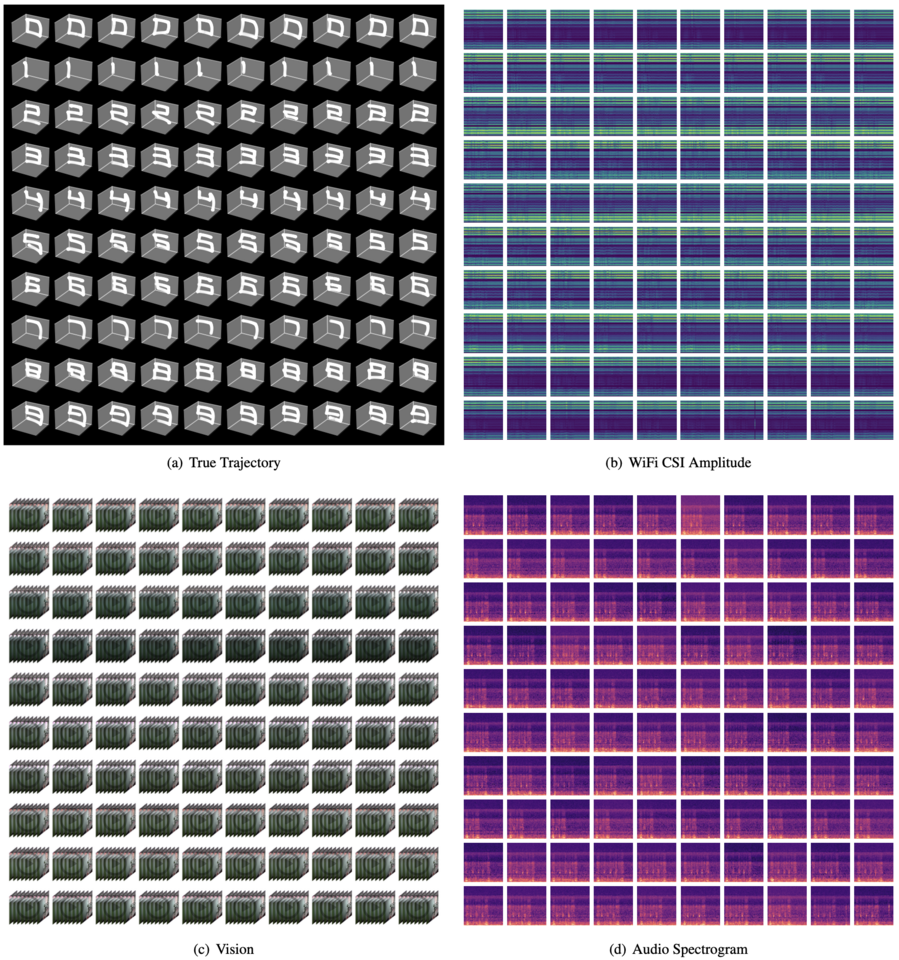

For many years, the MNIST dataset has been a key resource in the field of deep learning. It provided a simple yet effective challenge of recognizing handwritten digits. Inspired by this legacy, we created RoboMNIST—not to recognize ink on paper, but to capture the motion of robotic arms as they “write” digits in space. This shift from static images to dynamic movement opens up new possibilities for studying and improving how robots perceive and interact with their environments.

The Strength of Combining Multiple Sensors

In real-world environments, relying on just one type of sensor can lead to problems. Poor lighting, obstructions, or noise can limit a camera’s ability to capture important details. RoboMNIST overcomes these limitations by fusing data from three sources:

-

WiFi Signals:

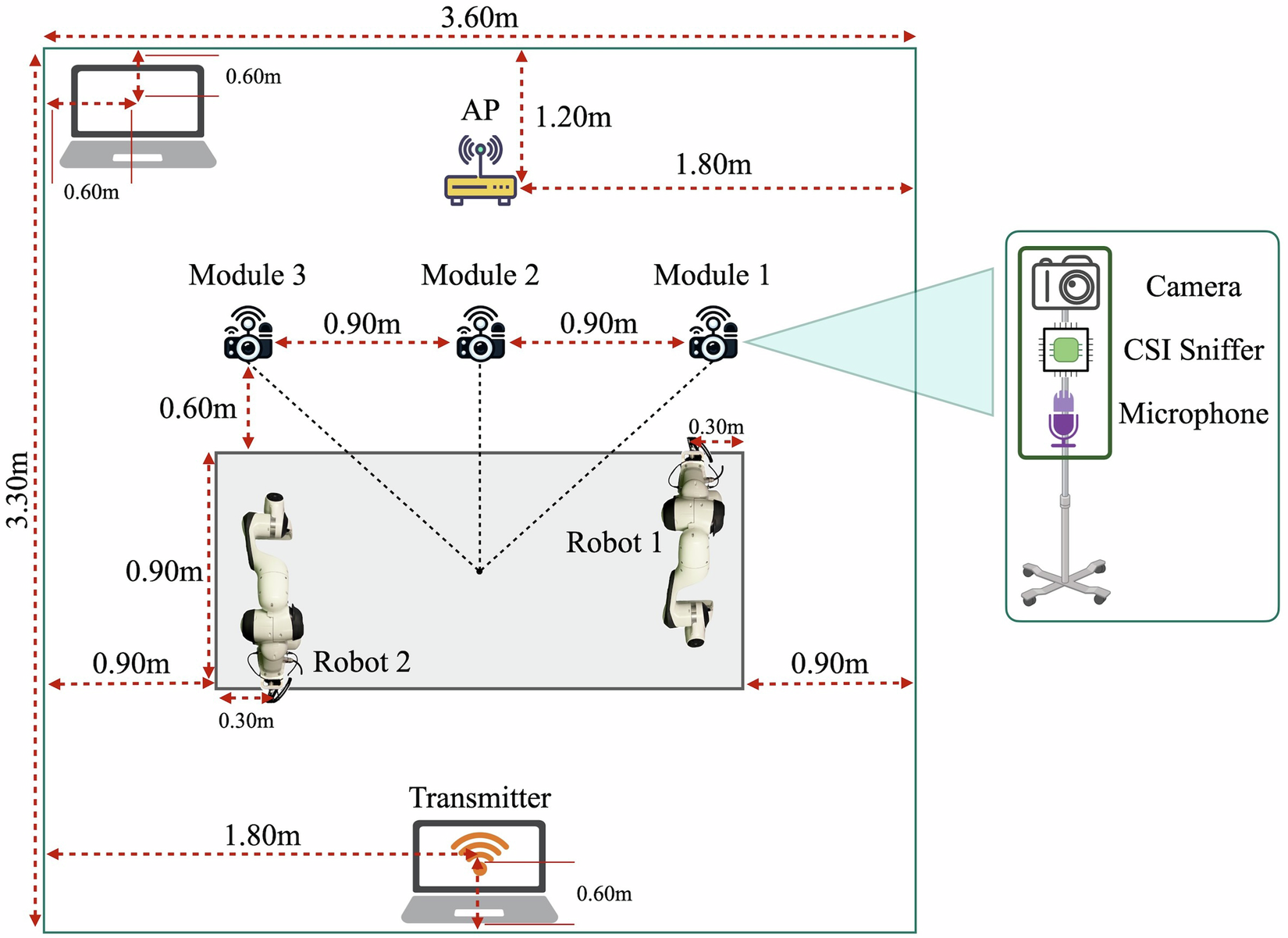

By recording subtle changes in WiFi signals with three sensors, we can detect movements even when visual information is lacking. This approach works well in conditions where cameras might struggle. -

Video:

Three cameras capture detailed images of the robotic arms as they move, providing clear spatial and temporal information about the digit-writing process. -

Audio:

Three microphones record the sounds produced by the robotic movements. These audio cues add another layer of detail that can help verify and complement the visual and WiFi data.

This blend of sensors helps the dataset remain useful even in challenging conditions: when one modality is degraded, the other two can still carry information about the activity.
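To make the idea of combining modalities concrete, here is a minimal sketch of late fusion: each modality produces its own class probabilities, and the available ones are averaged. This is an illustration only, not the method used by the RoboMNIST authors. The function name `late_fusion`, the modality keys, and the example probabilities are all assumptions made up for this example.

```python
from typing import Dict, List, Optional


def late_fusion(probs_by_modality: Dict[str, Optional[List[float]]]) -> List[float]:
    """Average class probabilities over the modalities that are available.

    A modality mapped to None is treated as missing (for example, a camera
    whose view is blocked) and is simply left out of the average.
    """
    available = [p for p in probs_by_modality.values() if p is not None]
    if not available:
        raise ValueError("at least one modality must provide probabilities")

    n_classes = len(available[0])
    if any(len(p) != n_classes for p in available):
        raise ValueError("all modalities must score the same set of classes")

    # Unweighted mean per class across the available modalities.
    return [sum(p[c] for p in available) / len(available) for c in range(n_classes)]


# Hypothetical scores over three classes; video is missing in this example.
fused = late_fusion({
    "wifi": [0.1, 0.7, 0.2],
    "video": None,
    "audio": [0.3, 0.5, 0.2],
})
predicted_class = max(range(len(fused)), key=lambda c: fused[c])
```

In this example the prediction is still made from WiFi and audio alone, which is the practical benefit of having more than one sensor type. Weighted averaging or a learned fusion model would be natural next steps.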

How RoboMNIST Was Built

To create RoboMNIST, we used two Franka Emika robotic arms programmed to “write” the digits 0 through 9. The process was designed to be both precise and varied, capturing the natural differences that can occur during repetitive tasks. Here’s how we did it:

- Diverse Activity Combinations: Each of the 10 digits was written by each of the two robots at three different speeds. This resulted in 10 × 2 × 3 = 60 primary combinations of movements, reflecting a range of possible variations in real-world operations.

- Multiple Repetitions: We recorded each activity 32 times. These repetitions help ensure that the data is robust and can support thorough analysis.

- Synchronized Recordings: All three sensor types (WiFi, video, and audio) were recorded in sync with the robots' actual movements. This careful coordination means that the dataset provides a complete and accurate record of each activity.
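The counts above can be checked with a short enumeration. This is a sketch under stated assumptions: the robot identifiers and speed labels below are hypothetical placeholders, not the names used in the dataset files, and the total assumes every combination has exactly 32 repetitions.

```python
from itertools import product

DIGITS = range(10)                   # digits 0 through 9
ROBOTS = ("robot_1", "robot_2")      # hypothetical identifiers for the two arms
SPEEDS = ("slow", "medium", "fast")  # hypothetical labels for the three speeds
REPETITIONS = 32                     # each activity was recorded 32 times

# Every (digit, robot, speed) triple is one primary combination.
combinations = list(product(DIGITS, ROBOTS, SPEEDS))

# Expanding each combination by its repetitions gives one entry per recording.
recordings = [
    (digit, robot, speed, rep)
    for (digit, robot, speed) in combinations
    for rep in range(REPETITIONS)
]

print(len(combinations))  # 60
print(len(recordings))    # 1920
```

An index like `recordings` is also a convenient starting point for building train and test splits, for example by holding out some repetitions of every combination.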

Applications and Impact

RoboMNIST is designed to support a wide range of research and practical applications. By offering a complete view of robotic activity through multiple sensors, it provides a strong foundation for several exciting areas:

- Industrial Automation and Logistics: In environments like factories or warehouses, having reliable sensor data is essential for smooth operations. RoboMNIST can help improve coordination among robots, making processes more efficient.

- Human-Robot Interaction: In settings such as healthcare or service industries, robots that can interpret multimodal signals can interact more naturally and safely with people. The dataset offers a way to test and refine these interactions.

- Smart Environments: Whether in smart homes or public spaces, the ability to recognize and respond to human activities is a growing area of interest. RoboMNIST’s integration of common signals like WiFi makes it a cost-effective tool for developing such technologies.

A Step Toward More Accessible Robotics Research

One of our main goals with RoboMNIST was to create a resource that is both powerful and accessible. High-end sensors can be expensive, and systems that rely on them may not be practical for every research group or application. By leveraging commonly available WiFi signals along with standard video and audio recordings, we have developed a dataset that anyone can use to explore and improve robot perception.

This approach not only reduces costs but also opens the door for more widespread experimentation and innovation in the field of robotics.

Getting Started with RoboMNIST

We invite researchers, developers, and robotics enthusiasts to explore RoboMNIST and see how it can support their work. The dataset and its accompanying code are available for public use, allowing anyone to dive into the data and start experimenting.

- Watch the Robot Movement in Action: See for yourself how the robotic arms execute the digit-writing tasks.

- Access the Dataset: Download RoboMNIST on Figshare. Explore the complete dataset, along with detailed documentation and ground truth annotations.

- Review the Code: Explore the RoboMNIST GitHub Repository. Check out our implementation and start developing your own applications.

Looking Ahead

The field of robotics continues to evolve, and the need for systems that can accurately perceive and understand their environment will only grow. RoboMNIST is our contribution to this ongoing effort—a tool designed to help build robots that are more adaptable, reliable, and capable in a variety of settings.

By merging data from multiple sources, we offer a clearer and more comprehensive view of robot activity. This not only helps improve current technologies but also paves the way for new innovations in autonomous systems and sensor integration.

Join Us in Shaping the Future of Robotics

RoboMNIST is more than just a dataset—it’s an opportunity to join a growing community dedicated to advancing the state of the art in robotics. We encourage you to explore the dataset, share your findings, and contribute to a collaborative effort that spans academia and industry.

Together, we can build smarter, more capable robots that enhance everyday life and transform the way we interact with technology.

