Sex and gender differences and biases in artificial intelligence for biomedicine and healthcare

Published in Healthcare & Nursing

Precision Medicine is radically changing the way we do biomedical research and deliver health care to patients, by pursuing a person-centered approach to prevention, diagnosis, prognosis and treatment. Artificial Intelligence (AI) systems, which can perform complex tasks in support of human activities, including those in the medical field, are playing a central role in its advancement.

However, several hurdles must be overcome before AI can be successfully integrated into Precision Medicine. One of the most crucial is the gap in addressing sex and gender differences in health and disease. Such differences have been reported in chronic diseases such as diabetes, cardiovascular disorders, neurological diseases, mental health disorders and cancer, yet many health areas remain unexplored.

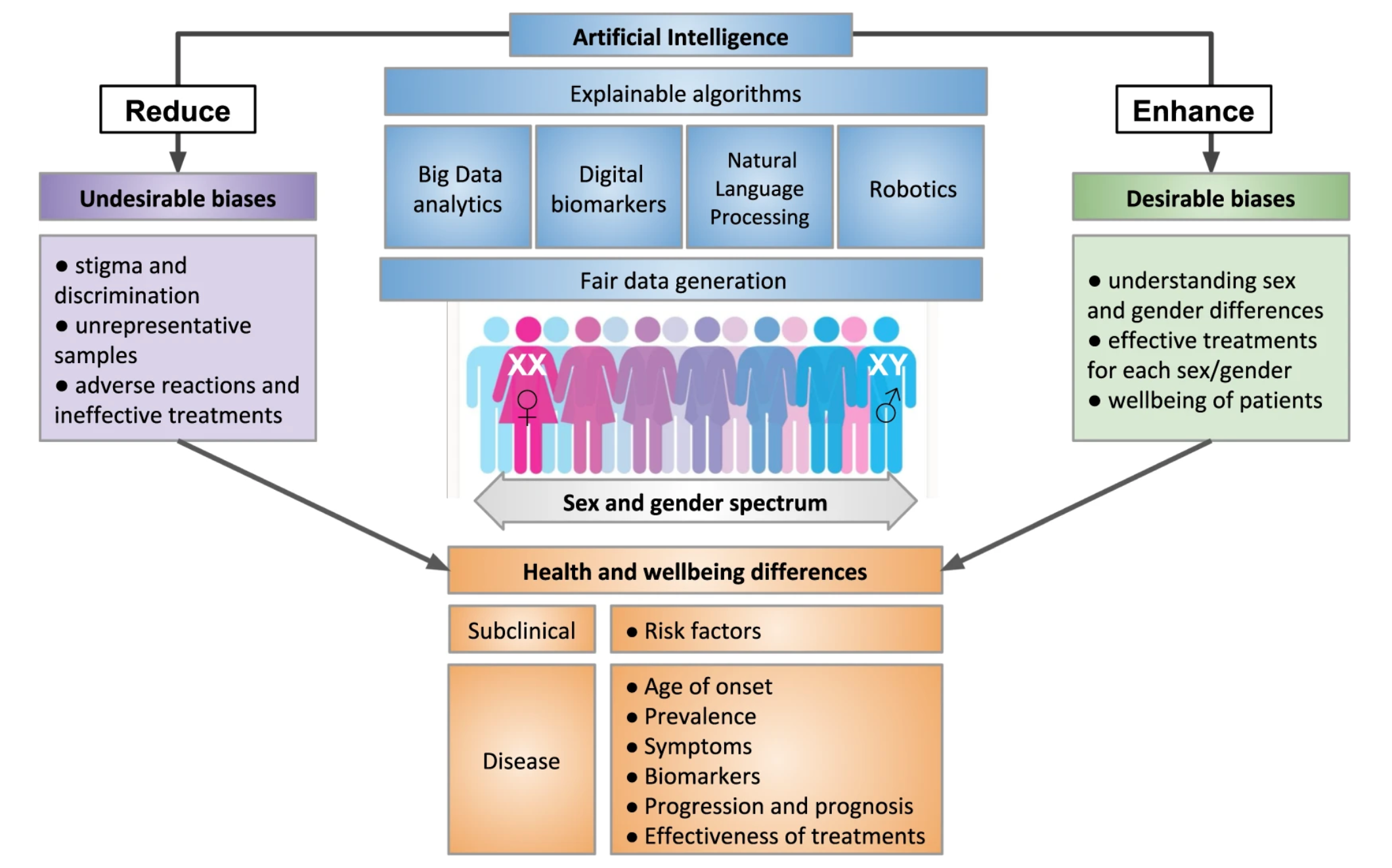

Neglecting such differences in both the generation of health data and the development of AI for Precision Medicine will lead not only to suboptimal health practices but also to discriminatory outcomes. In this regard, AI can act as a double-edged sword. On one hand, if developed without removing biases and confounding factors, it can magnify and perpetuate existing sex and gender inequalities. On the other hand, if designed properly, AI has the potential to mitigate inequalities by accounting for sex and gender differences in disease and using this information for more accurate diagnosis and treatment.

Our work focuses on the existing sex and gender biases in the generation of biomedical, clinical and digital health data, as well as on AI-based technological areas that are highly exposed to the risk of incorporating undesirable sex and gender biases, namely Big Data analytics, Natural Language Processing (NLP), and Robotics.

Access to large volumes of clinical and patient-generated information, collectively called biomedical Big Data, poses an exceptional challenge for Precision Medicine. This challenge is embodied in the development of AI solutions that combine heterogeneous data, ranging from social media information to genomics data. Thanks to the continuing improvement of sequencing techniques and platforms, genomics data represents one of the largest biomedical Big Data sets. To be unbiased and to allow sex and gender differences in disease to be identified, these data sets should ensure equal representation of women and men as well as other groups across the gender spectrum.
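As a rough illustration of what checking representation could look like in practice, the sketch below counts how often each group appears in a data set and flags groups that fall below a chosen minimum share. The field name `sex`, the `min_share` threshold and the function name are assumptions made for this example; they do not come from the paper, and a real audit would need categories and thresholds suited to the study.

```python
from collections import Counter


def representation_report(records, field="sex", min_share=0.3):
    """Summarise how each group is represented in a list of records.

    `field` and `min_share` are hypothetical choices for illustration:
    any group whose share of the records is below `min_share` is flagged.
    """
    # Records that lack the field are counted under "unknown".
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "count": n,
            "share": share,
            "flagged": share < min_share,
        }
    return report


# Made-up cohort: 20 records labelled female, 80 labelled male.
cohort = [{"sex": "female"}] * 20 + [{"sex": "male"}] * 80
for group, stats in representation_report(cohort).items():
    print(group, stats)
```

A check like this only describes who is in the data. It does not by itself correct for bias in how the data were collected or labelled.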

Health-related documents and texts, such as medical notes in electronic health records, are processed with NLP to extract and exploit biological knowledge. Several notable examples of sex and gender biases in NLP, such as the gendered semantic context in which non-definitional words (e.g. babysitter) appear, provide plain evidence of sex and gender biases residing in the textual data that is used to train NLP algorithms.
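One common way to make this kind of gendered context visible is to compare a word's vector with a "she minus he" direction in a word-embedding space. The sketch below does this with cosine similarity. The two-dimensional vectors are invented purely for illustration and are not taken from any real embedding model or from the paper; the function names are likewise hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def gender_association(word_vec, she_vec, he_vec):
    """Positive values lean towards 'she', negative values towards 'he'."""
    direction = [s - h for s, h in zip(she_vec, he_vec)]
    return cosine(word_vec, direction)


# Toy vectors, invented for this example only.
she = [1.0, 0.0]
he = [0.0, 1.0]
babysitter = [0.9, 0.1]  # hypothetical: placed close to "she"
neutral = [0.5, 0.5]     # hypothetical: equidistant from both

print(gender_association(babysitter, she, he))
print(gender_association(neutral, she, he))
```

With real embeddings, a non-definitional word that scores far from zero would be a candidate for closer inspection before the vectors are used in a clinical NLP pipeline.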

Likewise, robotics is subject to sex and gender biases. This is particularly the case when a robot’s gendered appearance does not serve a functional purpose and thus only strengthens stereotypes.

One effective way to avoid undesired biases and identify sex and gender differences in disease, which we recommend, is to pursue explainability in AI. Explainability techniques can uncover why and how a certain outcome or prediction is generated, reducing the complexity of machine decisions that would otherwise be unintelligible to humans.

Finally, we advocate increasing awareness of sex and gender differences and biases by incorporating policy regulations and ethical considerations at every stage of data generation and AI development, to ensure that these systems maximize the wellbeing and health of the population.

This work is written on behalf of the Women’s Brain Project (WBP) (www.womensbrainproject.com), an international organization advocating for women’s brain and mental health through scientific research, debate and public engagement.
