The Forest Is No Longer a Hiding Place: How an Intelligent Drone Swarm Sees Through the Canopy

Published in Electrical & Electronic Engineering and Computational Sciences

Finding a person or object hidden beneath a dense forest canopy has long been a nearly impossible task for aerial surveillance. The leaves and branches create a visual shield that defeats conventional cameras and even advanced AI. But what if, instead of one drone, you had a team of them working together as a single, intelligent unit? Researchers at Johannes Kepler University (JKU) and the German Aerospace Center (DLR) have now developed a fully autonomous drone swarm that does exactly that. It combines biology-inspired coordination with a computational imaging technique that lets it, in effect, see through the trees.

No time? See video abstract.

More time? See previous work.

The Core Problem: The Chaos of Occlusion

The main challenge in forested environments is occlusion. From above, the canopy is a random, shifting pattern of light and shadow. A person on the ground might be visible for a split second through a tiny gap from one specific angle, but completely invisible from just a meter away. Traditional object-detection AI, trained on clear images of cars or people, fails miserably here. These algorithms cannot generalize to the randomness of the occlusion: they haven't been trained on every possible pattern of leaves and branches. It's like trying to recognize a friend in an image when you can see only a handful of randomly shifting pixels every few seconds.

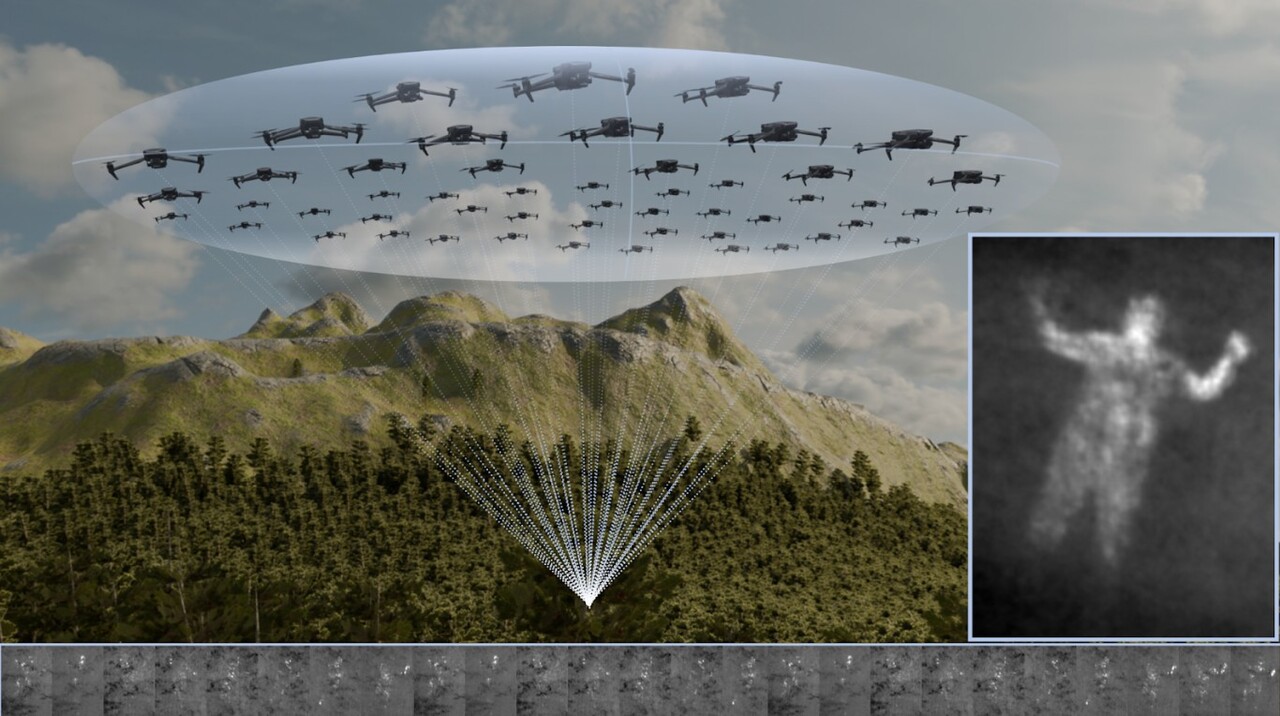

The Solution Part 1: Creating a Super-Lens in the Sky

The breakthrough comes from a technique called synthetic aperture sensing. The key idea is to combine the views from multiple drones to create a single, virtual "super-lens" with a massive aperture. Think of it like a giant, floating eyeglass lens the size of the entire swarm's flight path.

- A single drone has a small lens, which means a large depth of field. Everything from the treetops to the ground is in focus, resulting in a cluttered image full of leaves and branches.

- The drone swarm, when its images are computationally combined, mimics a lens so large that it has an extremely shallow depth of field. The authors' approach focuses this virtual lens on a specific plane: the forest floor. Everything in front of or behind this plane (i.e., the foliage) is smeared out across the combined image and largely averages away. What remains in focus is the target on the ground.

This is similar to how astronomers link radio telescopes across the globe to create a virtual dish the size of the Earth, achieving unparalleled resolution.
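Simple pinhole-camera geometry shows why focusing on the forest floor suppresses the canopy. The hypothetical sketch below is an illustration, not the authors' code. It projects a point onto the ground plane as seen from two drones. A point on the ground lands in the same spot from every drone. A point at height h, seen by drones at altitude H that are a baseline b apart, lands b·h/(H − h) apart. The altitude, baseline, and leaf height are assumed values.

```python
import numpy as np


def project_to_ground(drone_xy, drone_alt, point_xy, point_height):
    """Intersect the ray from a drone camera through a point with the ground plane z = 0."""
    drone_xy = np.asarray(drone_xy, dtype=float)
    point_xy = np.asarray(point_xy, dtype=float)
    # Similar triangles: the ray drops drone_alt metres in total, and
    # (drone_alt - point_height) metres between the camera and the point.
    scale = drone_alt / (drone_alt - point_height)
    return drone_xy + scale * (point_xy - drone_xy)


def ground_spread(drone_positions, drone_alt, point_xy, point_height):
    """Largest distance between the ground projections of one point seen from several drones."""
    proj = np.array([project_to_ground(p, drone_alt, point_xy, point_height)
                     for p in drone_positions])
    diffs = proj[:, None, :] - proj[None, :, :]
    return float(np.max(np.linalg.norm(diffs, axis=-1)))


# Hypothetical setup: two drones 10 m apart, flying 35 m above the ground.
drones = [(0.0, 0.0), (10.0, 0.0)]
print(ground_spread(drones, 35.0, (5.0, 0.0), 0.0))   # target on the ground: 0.0
print(ground_spread(drones, 35.0, (5.0, 0.0), 15.0))  # leaf 15 m above ground: 7.5
```

In this toy setup, the leaf is smeared over 7.5 m in the integrated image, while the ground target stays sharp. A wider swarm (larger b) blurs the canopy even more.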

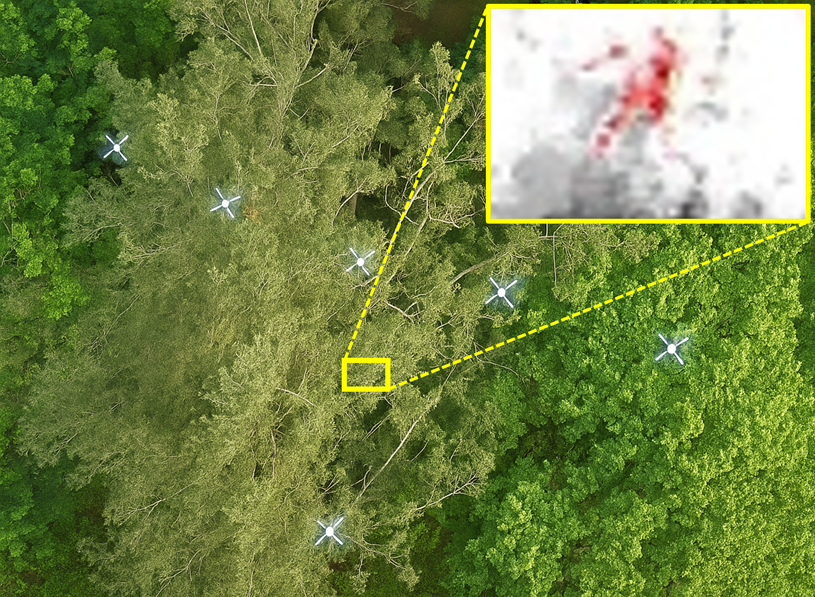

The Solution Part 2: Looking for the Unusual, Not the Known

Instead of asking "Is this a car?" or "Is this a person?"—questions that fail under occlusion—the swarm asks a simpler, more powerful question: "What here looks abnormal?"

This process, called anomaly detection, scans each drone's video feed for pixels that stand out from their immediate surroundings. This could be an unusual color (like the red of a jacket against green and brown), or a distinct heat signature (a human body against cool ground). These "anomalous" pixels are marked in each drone's view.
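The post does not specify which anomaly detector each drone runs. As a stand-in, the sketch below uses a simple local z-score on a single-channel frame, such as a thermal image. Each pixel is scored by how many standard deviations it lies from the mean of its neighbourhood. The window size and threshold are assumed values. A colour-based detector would typically compare multi-channel pixel vectors instead, for example with a Mahalanobis distance.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_anomaly_mask(frame, window=7, threshold=3.0):
    """Flag pixels that deviate strongly from their local neighbourhood.

    frame: 2-D array, e.g. one thermal image; window: odd neighbourhood size.
    Returns a boolean mask with the same shape as frame.
    """
    img = np.asarray(frame, dtype=float)
    r = window // 2
    padded = np.pad(img, r, mode="reflect")
    # One window x window neighbourhood per pixel: shape (H, W, window, window).
    neighbourhoods = sliding_window_view(padded, (window, window))
    mean = neighbourhoods.mean(axis=(-2, -1))
    std = neighbourhoods.std(axis=(-2, -1))
    score = np.abs(img - mean) / (std + 1e-6)  # local z-score; epsilon avoids division by zero
    return score > threshold


# Toy thermal frame: uniform 20 degC ground with one warm 36 degC pixel.
frame = np.full((21, 21), 20.0)
frame[10, 10] = 36.0
mask = local_anomaly_mask(frame)
print(np.argwhere(mask))  # [[10 10]]
```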

The synthetic aperture process then integrates these anomaly maps, not the full-color or thermal images. The random foliage, which is the "normal" background, gets suppressed, while the consistent anomaly of the target on the ground shines through, forming a bright, visible cluster in the synthetic aperture image. This method requires no pre-training and can detect anything unexpected, making it incredibly versatile.
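A toy simulation can illustrate this reinforcement effect. The sketch assumes the per-view anomaly masks are already registered to the ground plane. In each view, the canopy independently hides the target (it is visible with probability p_visible) and produces scattered false anomalies elsewhere (at rate false_rate). These probabilities, the image size, and the number of frames are all hypothetical. Averaging the masks should leave the target as the brightest pixel, even though it is anomalous in only a minority of views.

```python
import numpy as np


def integrate_anomaly_masks(masks):
    """Average registered per-view boolean anomaly masks into one synthetic-aperture map."""
    return np.asarray(masks, dtype=float).mean(axis=0)


def simulate_views(n_views=200, shape=(32, 32), target=(12, 20),
                   p_visible=0.3, false_rate=0.02, seed=0):
    """Toy occlusion model with hypothetical parameters.

    The target pixel is anomalous only in views where a canopy gap exposes it;
    foliage produces false anomalies at random pixels in every view.
    """
    rng = np.random.default_rng(seed)
    masks = rng.random((n_views, *shape)) < false_rate
    masks[:, target[0], target[1]] = rng.random(n_views) < p_visible
    return masks


masks = simulate_views()
integral = integrate_anomaly_masks(masks)
peak = np.unravel_index(np.argmax(integral), integral.shape)
print("brightest pixel:", tuple(int(i) for i in peak))
print(f"target: {integral[12, 20]:.2f}, average pixel: {integral.mean():.3f}")
```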

The Solution Part 3: An Adaptive, Intelligent Swarm

This isn't a swarm that just flies in a pre-set pattern. It’s adaptive and intelligent, using a method inspired by the flocking behavior of birds and insects, known as Particle Swarm Optimization (PSO).
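The core of Particle Swarm Optimization is compact. Below is the textbook global-best PSO update, with inertia weight w and cognitive and social coefficients c1 and c2, applied to a toy quadratic objective. The post does not state the objective the drones optimize, the coefficient values, or how flight dynamics limit velocity. In a drone swarm, each particle would presumably correspond to a drone, and the objective to some measure of how well the target is seen. Everything here is therefore an assumption for illustration.

```python
import numpy as np


def pso_step(pos, vel, pbest, gbest, rng, w=0.7, c1=1.5, c2=1.5):
    """One global-best PSO update: inertia + pull toward personal best + pull toward swarm best."""
    r1 = rng.random(pos.shape)
    r2 = rng.random(pos.shape)
    vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
    return pos + vel, vel


def pso_minimize(f, n_particles=10, dim=2, iters=200, bounds=(-10.0, 10.0), seed=0):
    """Minimize f with a plain global-best PSO (hypothetical default parameters)."""
    rng = np.random.default_rng(seed)
    pos = rng.uniform(bounds[0], bounds[1], size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()
    pbest_val = np.array([f(p) for p in pos])
    gbest = pbest[np.argmin(pbest_val)].copy()
    for _ in range(iters):
        pos, vel = pso_step(pos, vel, pbest, gbest, rng)
        vals = np.array([f(p) for p in pos])
        improved = vals < pbest_val  # update each particle's personal best
        pbest[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        gbest = pbest[np.argmin(pbest_val)].copy()  # update the swarm-wide best
    return gbest, float(pbest_val.min())


# Toy objective with its minimum at (3, -2), standing in for "how well is the target seen".
goal = np.array([3.0, -2.0])
best, value = pso_minimize(lambda p: float(np.sum((p - goal) ** 2)))
print(best, value)
```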

The swarm has two main modes that let the super-lens in the sky change its shape adaptively in response to the forest vegetation it sees:

- Explore Mode: When no target is found, the drones spread out into a wide formation and sweep across the area like a search party, systematically scanning for any anomalies.

- Exploit/Track Mode: The moment a target is detected, the swarm's behavior changes dramatically. The drones stop scanning and dynamically converge on the target's location, continuously adjusting their positions like a pack of wolves circling prey. They autonomously optimize their formation to collectively get the clearest possible view, adapting to the local density of the trees and the target's movement.
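At its simplest, the mode change is a switch on whether a detection exists. The sketch below is a deliberately simplified, hypothetical controller. Fixed geometric formations stand in for the PSO-driven formation optimization the post describes: a line-abreast sweep in explore mode, and an evenly spaced ring around the detection in exploit mode. The spacing, step, and ring radius are made-up values.

```python
import numpy as np


def next_waypoints(positions, detection, sweep_dir=(1.0, 0.0),
                   spacing=10.0, step=5.0, radius=8.0):
    """Return (mode, waypoints) for one planning step of a toy explore/exploit controller.

    positions: (n, 2) current drone positions in metres.
    detection: (x, y) estimated target position, or None if nothing was detected.
    """
    pos = np.asarray(positions, dtype=float)
    n = len(pos)
    if detection is None:
        # Explore: line-abreast formation centred on the swarm, advancing along sweep_dir.
        d = np.asarray(sweep_dir, dtype=float)
        d = d / np.linalg.norm(d)
        across = np.array([-d[1], d[0]])  # unit vector perpendicular to the sweep
        offsets = (np.arange(n) - (n - 1) / 2.0) * spacing
        centre = pos.mean(axis=0) + step * d
        return "explore", centre + offsets[:, None] * across
    # Exploit/track: spread the drones evenly on a ring around the detection.
    angles = 2.0 * np.pi * np.arange(n) / n
    ring = np.stack([np.cos(angles), np.sin(angles)], axis=1)
    return "exploit", np.asarray(detection, dtype=float) + radius * ring


drones = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
explore_mode, explore_wp = next_waypoints(drones, detection=None)
track_mode, track_wp = next_waypoints(drones, detection=(50.0, 50.0))
print(explore_mode, explore_wp.round(2).tolist())
print(track_mode, track_wp.round(2).tolist())
```

In a real swarm, the exploit branch would keep re-optimizing positions as the target moves, rather than snapping to a fixed ring.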

The Solution Part 4: Turning Weakness into Strength

One of the biggest practical hurdles was sensor noise. The compasses in consumer-grade drones, for instance, are notoriously imprecise, drifting over time. A miscalculation of just a few degrees would cause the images from different drones to misalign, ruining the synthetic aperture effect.

Instead of trying to solve this with computationally expensive corrections, the researchers found an elegant workaround: they embraced the uncertainty. The system doesn't search for one perfect alignment. Instead, it generates multiple synthetic aperture images, each with slightly different sensor corrections for the drones, and then combines them. Where the alignment is correct, the target is reinforced and appears bright; where it is incorrect, the target is blurred and suppressed. This clever trick turns a major hardware limitation into a solvable software problem.
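This idea can be written down as a toy model. The sketch makes two hypothetical assumptions: each drone sees a single anomalous point (the target), and its compass error simply rotates the observed bearing about the drone's position. For every combination of candidate heading corrections, it builds one small "integral" grid that counts where the drones place the target, then sums all the grids. Summation as the combination rule, the candidate set, and the error values are illustrative assumptions, not details from the post. Only the correct correction puts a drone's estimate on the true position, so that cell collects the most votes. Wrong corrections scatter their votes over different cells.

```python
import itertools
import numpy as np


def rotate(vec, deg):
    """Rotate a 2-D vector counter-clockwise by deg degrees."""
    a = np.deg2rad(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([c * vec[0] - s * vec[1], s * vec[0] + c * vec[1]])


def combined_integral(drones, target, heading_errors, candidates, cell=0.5, size=120):
    """Sum toy integral grids over every combination of per-drone heading corrections."""
    total = np.zeros((size, size))
    for combo in itertools.product(candidates, repeat=len(drones)):
        integral = np.zeros((size, size))
        for p, err, corr in zip(drones, heading_errors, combo):
            # Compass error rotates the bearing; the candidate correction undoes part or all of it.
            est = p + rotate(target - p, err - corr)
            i, j = np.floor(est / cell).astype(int)
            if 0 <= i < size and 0 <= j < size:
                integral[i, j] += 1
        total += integral
    return total


# Hypothetical scene: three drones 25 m from a target, with unknown compass errors (degrees).
target = np.array([30.25, 30.25])
drones = [target + 25.0 * np.array([np.cos(a), np.sin(a)])
          for a in np.deg2rad([0.0, 120.0, 240.0])]
grid = combined_integral(drones, target, heading_errors=[2.0, -4.0, 0.0],
                         candidates=[-4.0, -2.0, 0.0, 2.0, 4.0])
peak = np.unravel_index(np.argmax(grid), grid.shape)
print(tuple(int(i) for i in peak), grid.max())  # (60, 60) 75.0
```

Here the true errors were chosen to coincide with candidate values, so the peak is exact. With continuous real-world errors, the nearest candidates would instead produce a peak spread over a few neighbouring cells.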

Finally: Proven in the Field

The system was put to the test in real-world experiments:

- In a sparse forest, it successfully tracked a moving vehicle with high accuracy.

- In a dense forest at dawn, it located the heat signatures of both a lying and a standing person who were invisible to the naked eye from above.

- It autonomously detected and tracked people walking through thick woodland, achieving an impressive average positional accuracy of 0.39 meters with 93.2% precision.
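The post does not say exactly how the 0.39 m and 93.2% figures were computed. As a hedged illustration of what such numbers usually mean, the sketch below computes two metrics. The first is the mean positional error over matched estimates. The second is precision, the fraction of reported positions that are correct (TP / (TP + FP)). The per-frame pairing, the 1 m match radius, and the example positions are all hypothetical.

```python
import numpy as np


def tracking_metrics(estimates, ground_truth, match_radius=1.0):
    """Return (mean positional error of matched estimates, precision).

    estimates, ground_truth: (n, 2) positions in metres, one pair per frame.
    An estimate counts as a true positive if it lies within match_radius of the
    ground truth, otherwise as a false positive; precision = TP / (TP + FP).
    """
    est = np.asarray(estimates, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    errors = np.linalg.norm(est - gt, axis=1)
    matched = errors <= match_radius
    precision = matched.sum() / len(est)
    mean_error = errors[matched].mean() if matched.any() else float("nan")
    return float(mean_error), float(precision)


# Hypothetical four-frame example (not data from the study).
estimates = [(0.3, 0.0), (0.0, 0.4), (5.0, 0.0), (0.3, 0.4)]
truth = [(0.0, 0.0)] * 4
print(tracking_metrics(estimates, truth))
```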

This work marks a significant step from earlier simulations of the approach to real-world operation. By mimicking natural swarm behavior and leveraging synthetic aperture sensing, this autonomous drone swarm comes close to turning the once-impenetrable forest into a transparent environment. That opens new frontiers for search and rescue, wildlife conservation, surveillance, and environmental monitoring.

Follow the Topic

Communications Engineering

A selective open access journal from Nature Portfolio publishing high-quality research, reviews and commentary in all areas of engineering.