The Future of Alzheimer's Diagnosis: Unlocking Insights with Multi-modal Imaging Models

Published in Neuroscience, Computational Sciences, and Mathematics

Introduction

Dementia affects approximately 55 million individuals worldwide, with Alzheimer’s disease (AD) being the predominant type, accounting for 60-70% of all cases. Despite its staggering prevalence, a cure remains elusive, highlighting the crucial need for early diagnosis, as it enables timely and optimal management strategies that significantly benefit patients, families, and caregivers alike.

Artificial Intelligence (AI), particularly Deep Learning, shows promise in transforming AD diagnosis. Neuroimaging techniques like Magnetic Resonance Imaging (MRI) and amyloid Positron Emission Tomography (PET) scans offer valuable biomarkers. Previous research has primarily focused on uni-modal approaches, which rely on a single imaging technique for diagnosis. Multi-modal neuroimaging, by contrast, remains underexplored: few studies have tried to maximize performance by combining the strengths of different imaging modalities.

We addressed the gap by proposing and evaluating Convolutional Neural Network (CNN) models using 2D and 3D MRI and PET scans in both uni-modal and multi-modal configurations. Additionally, the incorporation of eXplainable Artificial Intelligence (XAI) enhances transparency in decision-making for AD diagnosis.

Our Research

Our models use CNNs as the foundational architecture, tailored to the specific characteristics of the input scans, whether in dimensionality or modality. CNNs are a type of artificial neural network that learns to recognize patterns and features in images by breaking them down into smaller pieces. This enables CNNs to identify objects or patterns in a way similar to how our brain processes visual information. We selected CNNs for their proven capability and efficiency in handling image data, particularly in medical imaging and diagnostics, where they excel at automatically detecting critical features.
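To make the "smaller pieces" idea concrete, here is a minimal sketch of the sliding-window operation a convolutional layer performs (strictly a cross-correlation, which is what CNN layers compute in practice). It is an illustration only, not the architecture used in the study: the function name `conv2d_valid`, the toy image, and the hand-picked kernel are all assumptions, and a trained CNN would learn its kernel values from data rather than have them written by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the small patch currently under the kernel.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Toy 4x4 "image": dark left half, bright right half (hypothetical data).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, edge_kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the column where the kernel straddles the edge, which is the sense in which a convolutional filter "detects" a local feature. A 3D convolution works the same way with one extra spatial axis.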

Our study systematically explored several variations of the proposed CNN architecture, tailored to different imaging modalities and dimensionalities. We experimented with several 2D and 3D approaches using MRIs and PET scans.

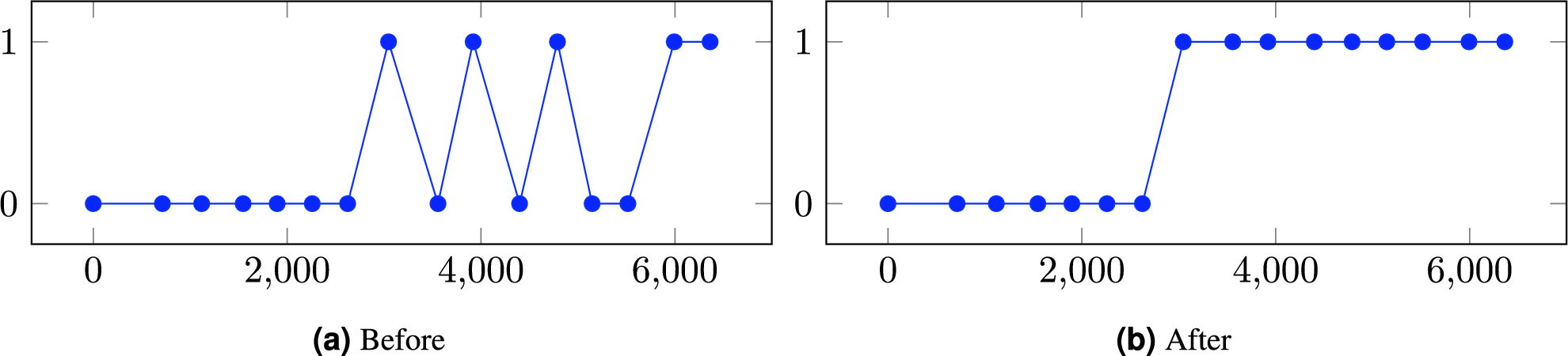

The results are promising: all models reached diagnostic accuracies between 70% and 95%. Notably, models using three-dimensional inputs outperformed their two-dimensional counterparts. Our analysis also showed that MRI scans, in either 2D or 3D format, consistently gave better results than amyloid PET scans, with an accuracy difference of approximately 8–10%. Combining 3D MRIs and 3D amyloid PET scans in a “fusion” model allowed us to reach an accuracy of 95%. This suggests that MRI and PET scans play complementary roles in disease prediction, while MRI proves crucial in uni-modal scenarios. The fusion model’s high sensitivity (its ability to avoid false negatives) is particularly advantageous for disease detection.
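For readers less familiar with these metrics, the sketch below shows how accuracy and sensitivity are computed from binary predictions. The labels are made up for illustration and are not the study's data; `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(y_true, y_pred):
    """Return accuracy, sensitivity and specificity for 0/1 labels (1 = AD positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Sensitivity: share of true positives that the model catches.
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        # Specificity: share of true negatives correctly ruled out.
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


# Made-up labels: 4 positive and 6 negative subjects.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In this toy example one positive case is missed, so sensitivity is 3/4 even though overall accuracy is 8/10. In a screening setting, a missed positive (false negative) is usually the costlier error, which is why sensitivity is reported alongside accuracy.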

The Need for Explainability

As Machine Learning and AI play increasingly vital roles across diverse applications, there is a growing interest in XAI to shed light on the hidden AI decision-making process. The need for transparency is particularly crucial in medicine, where opaque algorithms’ ethical and safety implications cannot be overlooked.

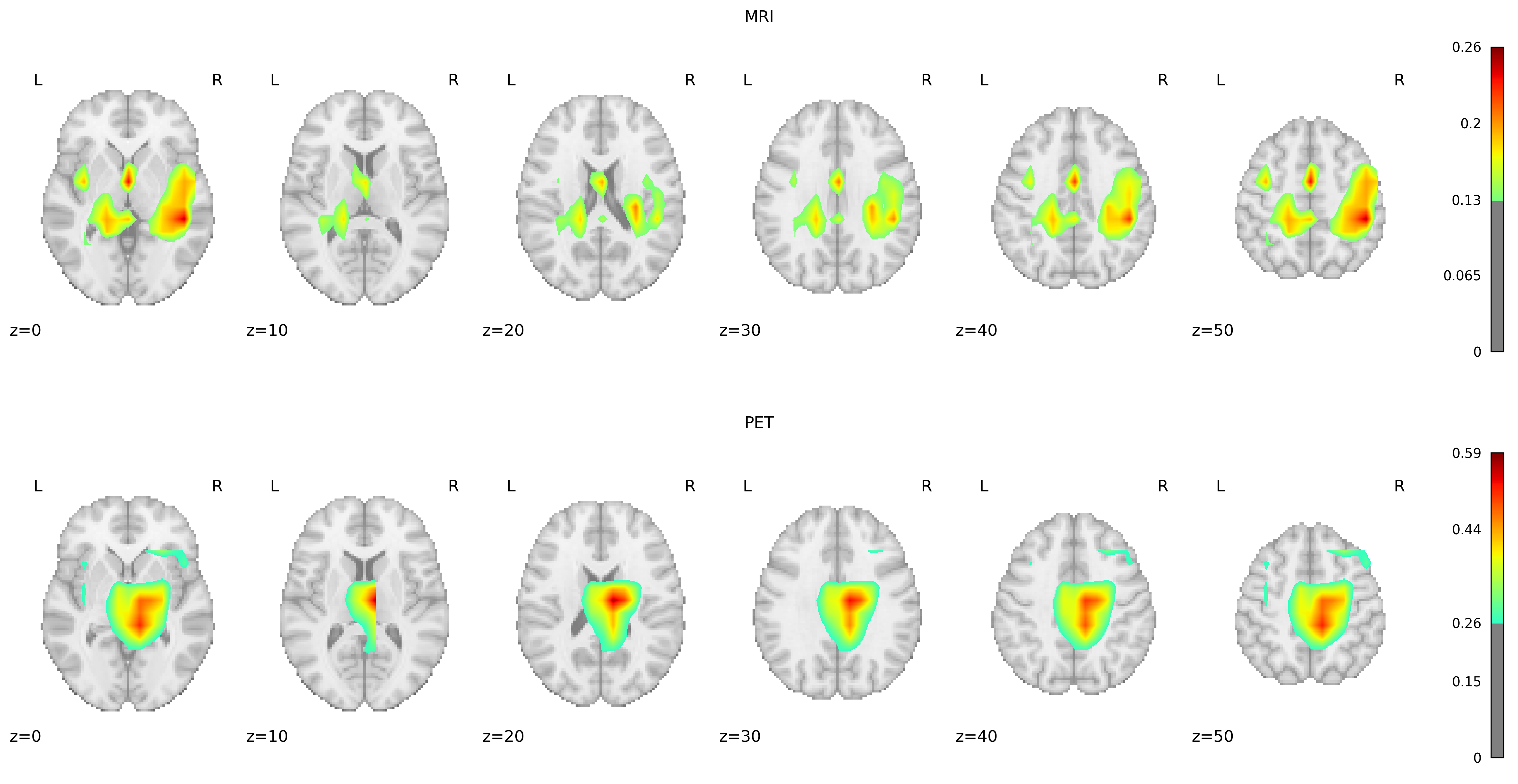

Our models are inherently “black-box” in nature, as they do not offer interpretable or explainable outcomes without further analysis. To bridge this gap, we applied a state-of-the-art technique, Gradient-weighted Class Activation Mapping (Grad-CAM), to generate maps that emphasize the regions within the original image most influential in predicting the disease or its absence.
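The core Grad-CAM computation can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the study's implementation: it takes the feature maps of the last convolutional layer and the gradients of the class score with respect to them as plain nested lists (in practice both come from a deep-learning framework's automatic differentiation), and it uses tiny hand-written 2D arrays. The function name `grad_cam` is hypothetical.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from feature maps and class-score gradients.

    Both arguments are indexed as [channel][row][col].
    """
    n_ch = len(activations)
    h, w = len(activations[0]), len(activations[0][0])

    # Channel weight = gradient averaged over all spatial positions.
    weights = [
        sum(sum(row) for row in gradients[k]) / (h * w) for k in range(n_ch)
    ]

    # Weighted sum of feature maps, followed by ReLU to keep only
    # locations that push the class score up.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j] for k in range(n_ch)))
            for j in range(w)
        ]
        for i in range(h)
    ]

    # Rescale to [0, 1] so the map can be overlaid on the scan.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return weights, cam


# Toy input: 2 channels of 2x2 feature maps (hypothetical values).
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]

weights, cam = grad_cam(activations, gradients)
print(weights)
print(cam)
```

The second channel gets a negative weight, so locations where it is active are suppressed by the ReLU, while locations driven by the first channel remain highlighted. For 3D scans the same steps apply with one additional spatial axis, and the resulting map is typically upsampled to the resolution of the input volume.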

Understanding the Model’s Insights

Overlaying Grad-CAM heatmaps onto the AAL2 atlas, a detailed anatomical guide encompassing 120 brain regions, allowed us to precisely identify the key areas influencing the model’s classifications. We focused on the regions whose mean Grad-CAM values ranked highest (at the 90th percentile and above), since these play the most critical role in the model’s decision-making process and are therefore potentially relevant to Alzheimer’s disease pathology.
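The region-level analysis can be sketched as follows. This is a minimal illustration with assumptions stated up front: the heatmap and atlas are flattened to equal-length lists, label 0 is treated as background, only five made-up regions are used instead of the 120 in AAL2, and the cut-off is taken as the 90th percentile of the per-region means with linear interpolation. The helper names are hypothetical, and the study's exact thresholding may differ.

```python
from collections import defaultdict


def region_means(heatmap, atlas_labels, background=0):
    """Average heatmap value per atlas label, ignoring background voxels."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for value, label in zip(heatmap, atlas_labels):
        if label == background:
            continue
        sums[label] += value
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in [0, 100])."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def top_regions(means, q=90):
    """Labels whose mean heatmap value is at or above the q-th percentile."""
    threshold = percentile(list(means.values()), q)
    return sorted(label for label, m in means.items() if m >= threshold)


# Flattened toy data: one atlas label and one Grad-CAM value per voxel.
atlas_labels = [0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5]
heatmap = [0.9, 0.1, 0.3, 0.8, 1.0, 0.2, 0.2, 0.5, 0.7, 0.0, 0.2]

means = region_means(heatmap, atlas_labels)
selected = top_regions(means, q=90)
print(means)
print(selected)
```

Here only region 2 clears the threshold; the background voxel is ignored even though its heatmap value is high, because it lies outside every atlas region. With a real atlas, the selected labels would then be mapped back to anatomical names.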

For MRI scans, a notable consistency exists in the regions highlighted across both groups, with the Temporal Lobe emerging as the most critical area for classification. This observation aligns with established research indicating Temporal Lobe atrophy as a crucial predictor of AD and other dementia forms, particularly emphasizing the significance of the Medial Temporal Lobe. Interestingly, the Middle Cingulate Gyrus and the Left Inferior Parietal Gyrus were identified as unique indicators for the negative and positive groups. Additionally, the Precentral Gyrus and Precuneus, both associated with AD pathology, were identified as relevant, supporting their roles as early biomarkers of AD.

PET scans revealed a substantial overlap in significant regions between the positive and negative groups, similar to MRI findings. Apart from the Middle Temporal Gyrus, Precentral Gyrus, and Precuneus, the Frontal Gyrus (encompassing the superior, middle, and inferior triangular parts) was markedly significant in AD detection. The overlap of significant regions across both groups, for MRI and PET, suggests that our model consistently focuses on the same areas for discrimination.

Conclusion

In our research, we worked on creating a multi-modal diagnostic model for Alzheimer’s Disease using both 3D MRI and amyloid PET imaging. We discovered that these modalities give us different but helpful views, making our AD prediction models better. Our experimental results show that our new methods not only match but might even beat the best methods we have now. Furthermore, by gaining insights into the regions of the images the AI models were focusing on, we highlighted critical areas in the brain related to AD, agreeing with what other researchers are finding nowadays. For additional details on the methods and results of our study, we invite readers to explore the open-access paper linked at the start of this post.
