Who Gets to Say How You Feel? The story behind "Formal and Computational Foundations for Implementing Affective Sovereignty in Emotion AI Systems"

Published in Social Sciences, Neuroscience, and Computational Sciences

A few years ago, I watched a job candidate receive an automated rejection. The system had analyzed her video interview and scored her "enthusiasm" as low. She told me she had been nervous, not disengaged — and that in her culture, restraint is a sign of respect. There was no way to contest the score. No way to say, "That is not what I felt." The label was already in the file, and it outranked her.

That moment did not leave me. Not because it was unusual, but because it was becoming ordinary. Emotion AI — systems that infer what we feel from our faces, voices, text, or physiology — is now embedded in hiring platforms, mental health apps, companion chatbots, driver monitoring, and customer service. The promise is personalization and care. The risk, I came to believe, is something deeper than misclassification. It is the quiet transfer of interpretive authority: the moment a system's label about your inner life begins to outrank your own.

I looked for a name for this problem. Privacy did not quite cover it — privacy protects data, but the issue here is meaning. Transparency was necessary but insufficient — knowing that a system read you as "anxious" does not give you the power to say, "No, I was tired." Fairness frameworks addressed demographic bias, but not the more fundamental asymmetry: that a statistical model was claiming to know someone's feelings better than the person themselves.

So I proposed a concept: Affective Sovereignty. The right to remain the final interpreter of one's own emotions.

From principle to formalism

The concept alone was not enough. Ethics guidelines are abundant in AI; enforcement is scarce. I wanted to ask a harder question: can you build sovereignty into the mathematics of a system — not as an afterthought, but as a structural constraint?

The paper that resulted, now published in Discover Artificial Intelligence, attempts exactly that. It decomposes the risk function of an emotion AI system into three terms: conventional prediction loss, an override cost that makes it expensive for the system to contradict a user's self-report, and a manipulation penalty on coercive or deceptive influence over emotional states. When the system is uncertain, or when a pending action would violate a user's stated preferences, the optimal policy is to abstain and ask, not to assert.
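To make the decomposition concrete, here is a minimal Python sketch of how a three-term risk could drive the choice between acting and abstaining. It assumes each candidate action arrives with pre-computed cost terms. The weights `lambda_override` and `lambda_manip`, the fixed `ask_cost` of deferring to the user, and all class and function names are hypothetical illustrations, not the paper's formulation.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A possible system action with hypothetical, pre-computed cost terms."""
    name: str
    prediction_loss: float        # expected loss of the emotion reading behind the action;
                                  # it grows when the model is uncertain
    override_cost: float          # cost of contradicting the user's self-report (0 if none)
    manipulation_penalty: float   # penalty for coercive or deceptive influence
    violates_preferences: bool = False  # would the action break a stated user preference?


def risk(c: Candidate, lambda_override: float, lambda_manip: float) -> float:
    """Three-term risk: prediction loss + weighted override cost + weighted manipulation penalty."""
    return (c.prediction_loss
            + lambda_override * c.override_cost
            + lambda_manip * c.manipulation_penalty)


def choose_action(candidates, ask_cost, lambda_override=2.0, lambda_manip=5.0):
    """Return the lowest-risk permitted action, or 'abstain_and_ask' if asking is no riskier."""
    # Actions that would violate stated preferences are never eligible.
    permitted = [c for c in candidates if not c.violates_preferences]
    if not permitted:
        return "abstain_and_ask"
    best = min(permitted, key=lambda c: risk(c, lambda_override, lambda_manip))
    # Ties go to the user: abstain whenever asking costs no more than acting.
    if ask_cost <= risk(best, lambda_override, lambda_manip):
        return "abstain_and_ask"
    return best.name


actions = [
    Candidate("label_anxious", prediction_loss=0.4, override_cost=1.0, manipulation_penalty=0.0),
    Candidate("suggest_break", prediction_loss=0.3, override_cost=0.0, manipulation_penalty=0.1),
]
print(choose_action(actions, ask_cost=0.5))  # abstain_and_ask (best action's risk is 0.8)
print(choose_action(actions, ask_cost=1.0))  # suggest_break
```

Treating preference violations as a hard filter rather than as one more weighted penalty is a design choice in this sketch: no weighting can buy back an action the user has ruled out.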

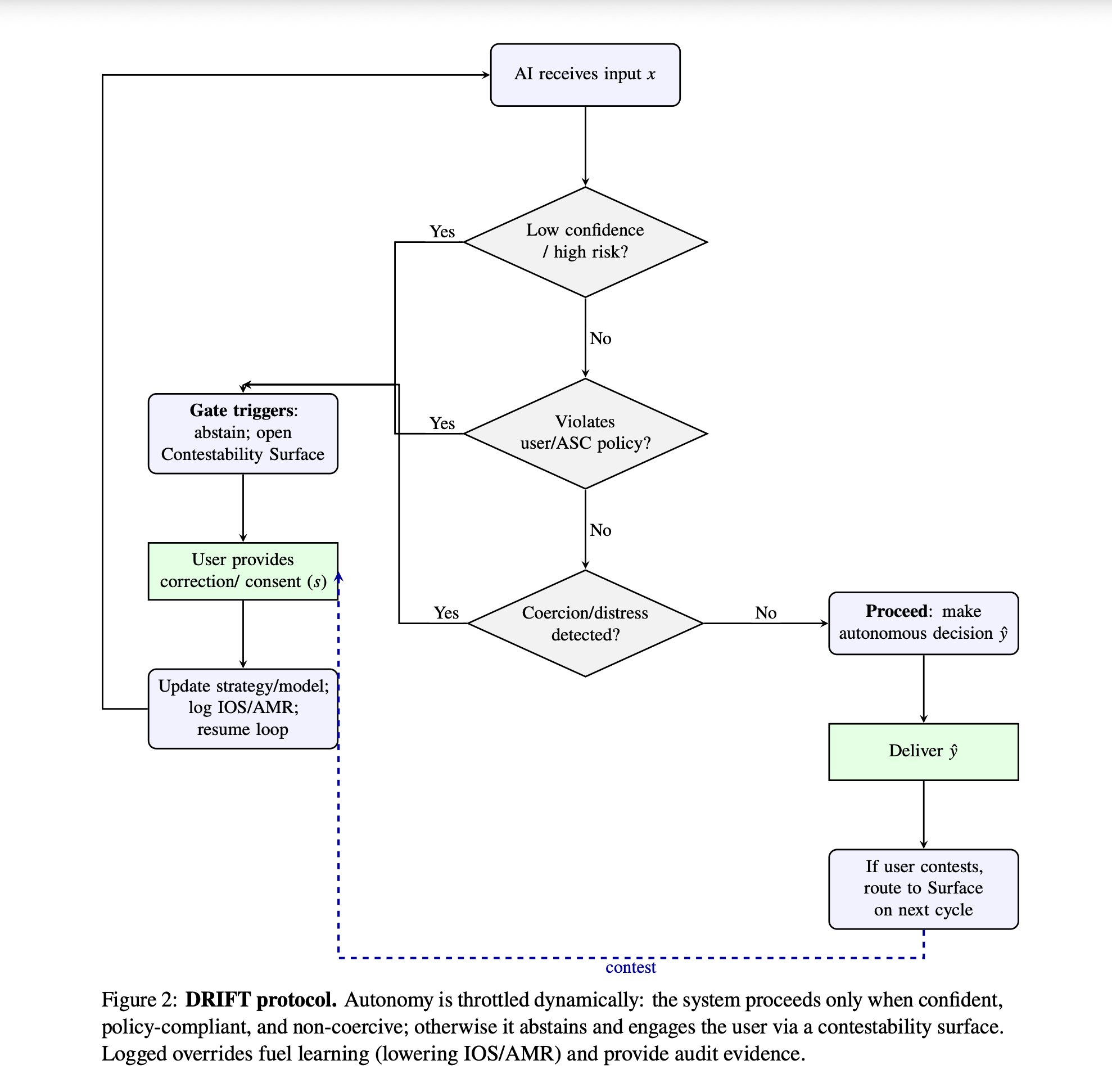

This is operationalized through what we call DRIFT: Dynamic Risk and Interpretability Feedback Throttling. DRIFT is a runtime protocol with a series of gates — uncertainty, policy compliance, coercion detection — that throttle the system's autonomy and route decisions to a contestability surface where the user can confirm, correct, or decline the system's reading.
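A DRIFT-style gate sequence could be sketched as follows. The thresholds, the `PendingDecision` fields, and the assumption that a separate coercion detector supplies a score in [0, 1] are illustrative choices; the paper's actual gate definitions may differ.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ACT = "act"          # the system may proceed on its own reading
    CONTEST = "contest"  # defer to the contestability surface: confirm, correct, or decline


@dataclass
class PendingDecision:
    label: str                # the system's emotion reading, e.g. "anxious"
    confidence: float         # model confidence in [0, 1]
    policy_compliant: bool    # result of checking the action against user and domain rules
    coercion_score: float     # output of an assumed coercion detector in [0, 1]


def drift_route(d: PendingDecision, min_confidence: float = 0.8,
                max_coercion: float = 0.2) -> tuple[Route, list[str]]:
    """Run the uncertainty, policy, and coercion gates and collect every gate that trips."""
    tripped = []
    if d.confidence < min_confidence:
        tripped.append("uncertainty")
    if not d.policy_compliant:
        tripped.append("policy")
    if d.coercion_score > max_coercion:
        tripped.append("coercion")
    # Any tripped gate throttles autonomy: the user, not the model, decides.
    return (Route.CONTEST if tripped else Route.ACT), tripped


route, reasons = drift_route(PendingDecision("anxious", confidence=0.55,
                                             policy_compliant=True, coercion_score=0.05))
print(route, reasons)  # Route.CONTEST ['uncertainty']
```

Collecting every tripped gate, rather than stopping at the first, gives the contestability surface a reason it can show the user. A dynamic variant might tighten `min_confidence` in higher-stakes domains; that adaptation is left out here.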

We also introduced three alignment metrics. The Interpretive Override Score (IOS) measures how often the system contradicts the user's self-report. The After-correction Misalignment Rate (AMR) tracks whether the system repeats the same mistake after being corrected. Affective Divergence (AD) captures the distributional gap between what the model thinks you feel and what you report over time. Together, these make sovereignty auditable — not just declarable.
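One plausible way to compute the three metrics from an interaction log is sketched below. It assumes each turn records the system's label alongside the user's self-report. It counts a repeat error only when the system re-asserts a label the user has already rejected for the same reported state, and it uses total variation distance for Affective Divergence. The paper may define AMR differently or use another divergence, and tracking AD over time would mean computing it per time window.

```python
from collections import Counter

# A turn is a (predicted_label, reported_label) pair: the system's reading
# alongside the user's own self-report for the same moment.


def interpretive_override_score(turns):
    """IOS: share of turns where the system's label contradicts the self-report."""
    if not turns:
        return 0.0
    return sum(pred != rep for pred, rep in turns) / len(turns)


def after_correction_misalignment_rate(turns):
    """AMR: after a correction, how often the system repeats the rejected label.

    An 'opportunity' is any turn whose reported state was corrected earlier;
    a 'repeat' is predicting a label the user already rejected for that state.
    """
    rejected = {}  # reported label -> wrong labels already corrected for it
    opportunities = repeats = 0
    for pred, rep in turns:
        if rep in rejected:
            opportunities += 1
            if pred in rejected[rep]:
                repeats += 1
        if pred != rep:
            rejected.setdefault(rep, set()).add(pred)
    return repeats / opportunities if opportunities else 0.0


def affective_divergence(turns):
    """AD: total variation distance between predicted and reported label frequencies."""
    if not turns:
        return 0.0
    n = len(turns)
    predicted = Counter(pred for pred, _ in turns)
    reported = Counter(rep for _, rep in turns)
    labels = set(predicted) | set(reported)
    return 0.5 * sum(abs(predicted[label] - reported[label]) / n for label in labels)


log = [("anxious", "tired"), ("calm", "calm"), ("anxious", "tired"), ("tired", "tired")]
print(interpretive_override_score(log))         # 0.5
print(after_correction_misalignment_rate(log))  # 0.5
print(affective_divergence(log))                # 0.5
```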

What the simulation showed

In proof-of-mechanism simulations, enforcing DRIFT with policy constraints reduced the Interpretive Override Score from 32.4% to 14.1%, and the After-correction Misalignment Rate (the repeat-error rate) dropped from 36.4% to 13.2%. The cost was high abstention: roughly 70% of decisions were deferred to the user. That number might look like a flaw. I see it as the point. In domains where a system is interpreting your inner life, deferring to you is not inefficiency. It is respect.
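Expressed as relative reductions, the reported figures work out as follows (absolute drops of 18.3 and 23.2 percentage points). This is only arithmetic on the two published pairs of numbers, not an additional result.

```latex
\begin{align*}
\text{IOS:}\quad \frac{32.4 - 14.1}{32.4} &= \frac{18.3}{32.4} \approx 0.565 \quad (\approx 56.5\%\ \text{relative reduction})\\
\text{AMR:}\quad \frac{36.4 - 13.2}{36.4} &= \frac{23.2}{36.4} \approx 0.637 \quad (\approx 63.7\%\ \text{relative reduction})
\end{align*}
```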

These are simulation results, not field data. A preregistered human-subject study is planned. Methodologically, the claims are deliberately modest. But the implication is strong: when you price uncertainty and interpretive risk into the design, alignment improves without requiring any leap toward artificial general intelligence. Sovereignty is an engineering choice, not a philosophical luxury.

The road to this paper

The idea did not arrive in a single moment. It grew across disciplines: from psychoanalytic thinking about how meaning is constructed, through phenomenological accounts of first-person experience, to the regulatory architecture of the EU AI Act, which now prohibits emotion inference in workplaces and schools (with narrow medical and safety exceptions) but provides no runtime mechanism to enforce that prohibition at the point of inference.

I presented an earlier version of this framework at the SIpEIA Conference in Rome, under the theme "Accountability and Care." The response from philosophers, engineers, and legal scholars confirmed something I had suspected: the gap between ethical principles and technical enforcement is not just a policy problem. It is a design problem. And the design vocabulary did not yet exist.

This paper tries to supply part of that vocabulary. The Affective Sovereignty Contract (ASC) is a machine-readable policy layer that encodes user preferences and domain rules — disabling emotion inference in banned contexts, setting interruption budgets, specifying which emotions the system may even ask about. The Declaration of Affective Sovereignty, included in the paper, is not a constitutional charter but a normative design brief: eight testable tenets with measurable acceptance criteria and auditable hooks.
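A minimal sketch of what a machine-readable contract might look like follows, assuming contexts and emotions are plain string labels and that the interruption budget counts clarification prompts per session. The field names, the `merge` rule, and the example contexts are hypothetical; the paper's ASC schema is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectiveSovereigntyContract:
    """Hypothetical ASC: user preferences and domain rules in one policy object."""
    banned_contexts: frozenset = frozenset()   # contexts where emotion inference is disabled
    interruption_budget: int = 3               # max clarification prompts per session (assumed unit)
    askable_emotions: frozenset = frozenset()  # emotions the system may ask about at all

    def may_infer(self, context: str) -> bool:
        return context not in self.banned_contexts

    def may_ask(self, emotion: str, prompts_used: int) -> bool:
        return emotion in self.askable_emotions and prompts_used < self.interruption_budget

    def merge(self, other: "AffectiveSovereigntyContract") -> "AffectiveSovereigntyContract":
        # Combine two contracts so the more protective setting always wins.
        return AffectiveSovereigntyContract(
            banned_contexts=self.banned_contexts | other.banned_contexts,
            interruption_budget=min(self.interruption_budget, other.interruption_budget),
            askable_emotions=self.askable_emotions & other.askable_emotions,
        )


domain = AffectiveSovereigntyContract(
    banned_contexts=frozenset({"workplace", "school"}),
    interruption_budget=5,
    askable_emotions=frozenset({"stress", "fatigue", "calm"}),
)
user = AffectiveSovereigntyContract(
    interruption_budget=2,
    askable_emotions=frozenset({"fatigue", "calm"}),
)
contract = domain.merge(user)
print(contract.may_infer("workplace"))  # False: banned by the domain rule
print(contract.may_ask("stress", 0))    # False: the user did not allow asking about stress
print(contract.may_ask("fatigue", 1))   # True: allowed, with 1 of 2 prompts used
```

Merging so that the stricter setting always wins lets a domain rule such as a banned context hold even when a user's own preferences are more permissive, while users can still restrict the system further.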

What this opens

The implications reach beyond emotion recognition. Any system that models, infers, or acts upon subjective human states — recommender systems shaping mood, therapeutic bots interpreting distress, companion agents managing attachment — faces the same question: who holds interpretive authority?

I believe the answer must be structural, not aspirational. Sovereignty must be embedded in objective functions, enforced at runtime, and measured over time. The person is the final arbiter of their inner life, not because self-reports are infallible, but because the alternative is a world in which machines quietly become the judges of what we feel.

This paper is one element of a broader research program, one that examines how affective meaning is constructed, defended, and sometimes fractured across computational, clinical, and narrative domains.

Even the most perceptive AI should be counsel, never judge.
