Reading True Emotions: Subject-independent multi-channel voting for EEG-based emotion recognition using wavelet scattering deep network and advanced signal metrics

Published in Bioengineering & Biotechnology and Computational Sciences

Emotion recognition using Electroencephalography (EEG) is a rapidly growing field with applications ranging from healthcare to autonomous systems. However, EEG signals are notoriously complex, non-stationary, and noisy.

This study introduces a novel, hand-crafted feature extraction framework that avoids the computational cost of traditional Deep Learning (DL) models while achieving state-of-the-art accuracy in subject-independent tasks.

🧠 Key Innovations

1. Wavelet Scattering Transform (WST) over CNNs

Instead of using Convolutional Neural Networks (CNNs) that require extensive training to learn filters, this approach utilizes the Wavelet Scattering Transform (WST).

- Predefined Filters: WST uses mathematically predefined wavelets (Morlet wavelet) rather than data-driven filters, eliminating the need for learning parameters.

- Stability: This method produces signal representations that are stable against noise and deformation and invariant to time shifts.

- Architecture: The model uses a two-layer WST network (J=6, Q=100) to capture both temporal and frequency components without static segmentation.
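For readers unfamiliar with the transform, the standard two-layer scattering coefficients can be written as below. This is the usual textbook formulation, not notation taken from the study: $x$ is one EEG channel, $\psi_{\lambda}$ is a Morlet wavelet centered at frequency $\lambda$, and $\phi_J$ is a low-pass averaging filter at scale $2^J$. In the common convention, J sets the largest averaging scale and Q sets the number of wavelets per octave.

```latex
\begin{aligned}
S_0 x(t) &= (x * \phi_J)(t) \\
S_1 x(t, \lambda_1) &= \bigl(\lvert x * \psi_{\lambda_1} \rvert * \phi_J\bigr)(t) \\
S_2 x(t, \lambda_1, \lambda_2) &= \bigl(\bigl\lvert \lvert x * \psi_{\lambda_1} \rvert * \psi_{\lambda_2} \bigr\rvert * \phi_J\bigr)(t)
\end{aligned}
```

The modulus removes phase, and the final averaging by $\phi_J$ is what yields invariance to time shifts smaller than roughly $2^J$ samples. No term in these expressions is learned from data.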

2. The Comprehensive Feature Set

The researchers did not stop at raw WST coefficients. They engineered a robust vector of 17 features extracted from both the raw EEG data and the 1st/2nd order WST coefficients.

- Statistical Metrics: Mean, Median, Standard Deviation, Skewness, Kurtosis, etc.

- Advanced Metrics: Hjorth parameters, Information Entropy, and Higuchi Fractal Dimension.

- Result: A 9877-dimensional feature vector that captures the intrinsic properties of the signal, which is then reduced using Linear Discriminant Analysis (LDA).
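As an illustration of one of the advanced metrics listed above, here is a minimal sketch of the three Hjorth parameters (activity, mobility, complexity) using their standard definitions. The function names, the 128 Hz sampling rate, and the synthetic 10 Hz test signal are assumptions for the example, not details from the study.

```python
import math

def variance(values):
    """Population variance of a sequence of floats."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def diff(values):
    """First-order difference, a discrete stand-in for the derivative."""
    return [b - a for a, b in zip(values, values[1:])]

def hjorth_parameters(signal):
    """Return (activity, mobility, complexity) for a 1-D signal."""
    d1 = diff(signal)   # first derivative
    d2 = diff(d1)       # second derivative
    var0, var1, var2 = variance(signal), variance(d1), variance(d2)
    activity = var0                                   # signal power
    mobility = math.sqrt(var1 / var0)                 # mean-frequency proxy
    complexity = math.sqrt(var2 / var1) / mobility    # deviation from a pure sine
    return activity, mobility, complexity

# Illustrative input: 4 seconds of a 10 Hz sine at an assumed 128 Hz sampling rate.
fs = 128
signal = [math.sin(2 * math.pi * 10 * n / fs) for n in range(fs * 4)]
activity, mobility, complexity = hjorth_parameters(signal)
```

For a pure sine wave the complexity is close to 1, which makes it a convenient sanity check; real EEG segments typically give larger values.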

3. Multi-Channel Majority Voting

Perhaps the most significant contribution to robustness is the majority voting scheme.

- The Problem: Individual EEG channels often produce variable predictions due to noise or artifacts.

- The Solution: Instead of averaging features, the model classifies the emotion for each channel independently using K-Nearest Neighbors (KNN). The final decision is determined by the mode (most frequent prediction) across all channels.

- The Impact: Channels whose predictions disagree with the consensus are outvoted, which sharply improves accuracy. On GAMEEMO, for example, accuracy rises from 79.02% without voting to 100% with it.
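The voting step itself is simple and can be sketched as follows. This assumes each channel has already been classified by its own KNN model; the function name, the example labels, and the tie-breaking rule (the first label seen wins) are assumptions for illustration, since the post does not say how ties are handled.

```python
from collections import Counter

def majority_vote(channel_predictions):
    """Return the most frequent label among per-channel predictions.

    Ties go to the label that appears first in the input; this
    tie-break is an assumption, not something the study specifies.
    """
    if not channel_predictions:
        raise ValueError("need at least one channel prediction")
    counts = Counter(channel_predictions)
    label, _ = counts.most_common(1)[0]
    return label

# Hypothetical per-channel predictions for a single trial.
predictions = ["calm", "calm", "funny", "calm", "boring", "calm", "horror"]
final_label = majority_vote(predictions)
```

Here three channels disagree with the rest, yet the trial is still labeled by the four-channel consensus.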

📊 Performance Benchmarks

The model was tested using Leave-One-Subject-Out (LOSO) validation to ensure true subject independence.
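A LOSO split can be sketched in a few lines. This is a generic illustration of the protocol rather than the authors' code, and the function name and subject IDs are made up for the example.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject.

    subject_ids[i] is the subject that sample i belongs to. In each fold,
    every sample from one subject is held out for testing, so the classifier
    never sees data from the person it is evaluated on.
    """
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test

# Hypothetical dataset: six samples from three subjects.
subject_ids = [1, 1, 2, 2, 3, 3]
folds = list(leave_one_subject_out(subject_ids))
```

Each subject serves as the test set exactly once, and the reported accuracy is then aggregated over the folds.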

| Dataset | Task | Accuracy (No Voting) | Accuracy (With Voting) |

| GAMEEMO | 4-Classes (Horror, Boring, Calm, Funny) |

79.02% |

100% |

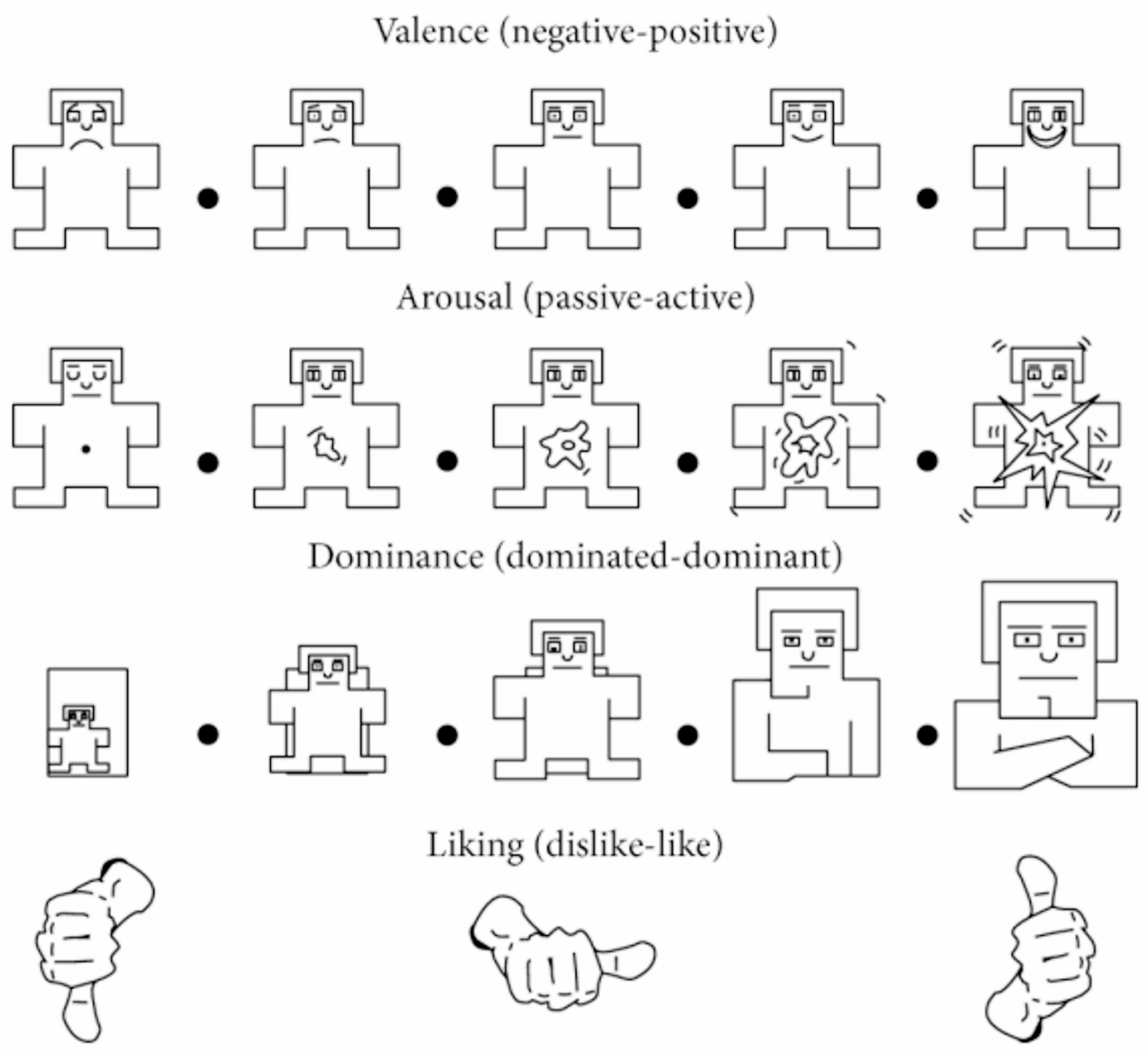

| DEAP | 2-Classes (Valence) |

77.83% |

98.44% |

| DEAP | 2-Classes (Arousal) |

79.20% |

99.22% |

| DEAP | 4-Classes (Happy, Relaxed, Angry, Sad) |

65.68% |

97.58% |

"Note: The ablation study revealed that removing the majority voting mechanism caused the most significant drop in performance after the removal of WST features themselves, highlighting its critical role."

💡 Why This Matters

- Computational Efficiency: By using predefined filters (WST) and simple classifiers (LDA + KNN), this model is far less computationally expensive than deep learning counterparts, making it ideal for real-time applications like wearable devices.

- Neuroscience Alignment: Heatmap analysis showed that the model correctly identified activations in the frontal, parietal, and occipital regions, aligning with established affective neuroscience.

- Robustness: The method proved stable even when artificial deformations (sine wave disturbances) were introduced to the signals.

