Why do women hold back on sharing dissatisfaction online?

Published in Social Sciences, Protocols & Methods, and Arts & Humanities

Imagine that you buy something, visit a restaurant, or watch a movie. Would you then post an online review about it? And are you more likely to do so when you are happy with your experience than when you are dissatisfied? New research, published in Nature Human Behaviour, suggests that the posting of online reviews, and the resulting ratings, differ between men and women.

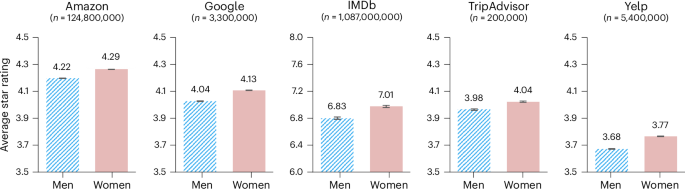

The authors call this phenomenon the "gender rating gap": women's online reviews tend to come with a higher star rating than men's. They found it in a dataset of more than 1.2 billion reviews covering a wide range of products and services on several online review platforms, including Amazon, Google, IMDb, TripAdvisor and Yelp.

A logical next question is why. After considering various explanations, the authors identify one key reason. Across several studies, the researchers asked men and women to share their genuine attitudes towards products, and then asked whether they wanted to post an online review about them. When dissatisfied, women were less likely than men to share their opinion online. When satisfied, however, there was no evidence of a difference between the two genders.

So why might women be less likely to share their dissatisfaction online? The findings show a higher fear of negative evaluation among women, which can explain the different propensities to share negative feedback and, ultimately, the gender rating gap.

Why should we care? Well, even on the relatively anonymous Internet, perhaps surprisingly, users tend to conform to societal expectations and gender roles. This raises concerns about how well the information millions of users rely on daily actually reflects reality. So, next time you read an online review, remember that it might not be as representative as you think.

But the implications go beyond online reviews. Think back to the last time you asked ChatGPT for help. Where do large language models (LLMs) like ChatGPT get their input? From the internet! The authors of this study demonstrate that content appearing online, specifically in online reviews, exhibits gender-based bias: there is less critical content from users whom the authors (and thus probably also an artificial intelligence [AI]) would infer to be female. This skewed representation can shape the outputs of AI models, potentially reinforcing or amplifying gender stereotypes. When AI relies on data from sources with underlying biases, it may reflect these biases in its responses and recommendations, affecting how people engage with AI-driven tools. This research highlights the importance of understanding the data feeding into AI systems, so that they produce fair and balanced insights that are valuable for users of any gender.

The full paper contains further analysis, such as how the gender rating gap varies across countries, categories and user age. You can find the full paper, published in Nature Human Behaviour, here:

Bayerl, A., Dover, Y., Riemer, H. et al. Gender rating gap in online reviews. Nat Hum Behav (2024). https://doi.org/10.1038/s41562-024-02003-6
