Seeing other perspectives: Evaluating the use of virtual and augmented reality to simulate visual impairments (OpenVisSim)

Published in Healthcare & Nursing

For the past 8 years I have worked to design more child-friendly vision tests, and a question I often get asked is "What does my child see?". This is a simple question, but a difficult one to answer, because modern vision tests produce reams of complex numbers in strange units, many of which require a medical degree to understand. “Well sir, their logMAR is within normal bounds, but their pattern standard deviation looks a few decibels off...”

My solution was to create a digital simulator to convert all of the numbers produced by various eye tests into a visual representation, so parents could experience directly what their child sees using Virtual/Augmented Reality.

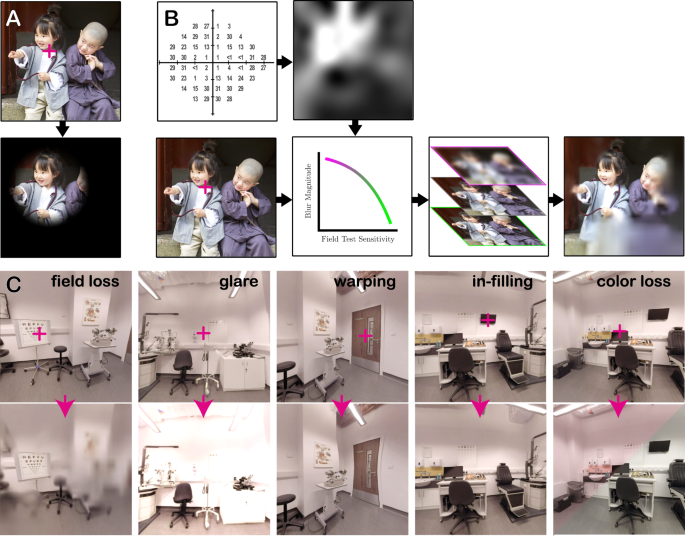

Like many simple ideas, however, this one proved complex to implement. Despite what you find if you google an eye condition, it is not enough to simply draw black blobs over some parts of the screen. Most eye disease is nothing like that. Instead, eye disease often involves all sorts of complex image manipulations, such as blurring, fading, or warping. And because eye disease often affects only some parts of the eye, the location of the loss on the screen moves depending on where you’re looking.

What followed was several years of technical work, in which I developed the code to convert raw eye-test data into various ‘gaze-contingent’ image manipulations (code which, as part of the present publication, I’ve made freely available online for others to use or develop). The result, ‘OpenVisSim’, is a series of stackable, data-driven digital effects (or ‘shaders’), which can be applied to an image in real time. The nice thing is that the image itself can come from anywhere. It could be a static photo, but it could also be live video from a camera (augmented reality), or be computer-generated (virtual reality). So the wearer is still able to perform everyday tasks, such as walking around or making a cup of tea, while wearing the VR/AR equipment.
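To make the idea of stackable, gaze-contingent effects more concrete, here is a minimal Python sketch. It is not the OpenVisSim code, which runs as real-time shaders; it is a toy illustration on a tiny grayscale image held in plain lists. Every name in it (`inferior_loss`, `make_blur`, `make_fade`, `stack`) is hypothetical, and the all-or-nothing "loss below fixation" map is an assumption standing in for real eye-test data.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]            # grayscale pixels in [0, 1], indexed [row][col]
Gaze = Tuple[int, int]               # (row, col) of the current fixation point
LossMap = Callable[[int, int], float]  # gaze-relative (d_row, d_col) -> loss in [0, 1]
Effect = Callable[[Image, Gaze], Image]


def inferior_loss(d_row: int, d_col: int) -> float:
    """Hypothetical loss map: total loss below fixation, none at or above it."""
    return 1.0 if d_row > 0 else 0.0


def make_blur(loss_map: LossMap) -> Effect:
    """Blend each pixel with its 3x3 neighbourhood mean, weighted by the loss map."""
    def blur(image: Image, gaze: Gaze) -> Image:
        g_row, g_col = gaze
        height, width = len(image), len(image[0])
        out = []
        for r in range(height):
            new_row = []
            for c in range(width):
                neighbours = [
                    image[rr][cc]
                    for rr in range(max(0, r - 1), min(height, r + 2))
                    for cc in range(max(0, c - 1), min(width, c + 2))
                ]
                mean = sum(neighbours) / len(neighbours)
                a = loss_map(r - g_row, c - g_col)
                new_row.append((1 - a) * image[r][c] + a * mean)
            out.append(new_row)
        return out
    return blur


def make_fade(loss_map: LossMap, strength: float, grey: float = 0.5) -> Effect:
    """Pull pixels towards mid-grey (reduced contrast), weighted by the loss map."""
    def fade(image: Image, gaze: Gaze) -> Image:
        g_row, g_col = gaze
        out = []
        for r, row in enumerate(image):
            new_row = []
            for c, px in enumerate(row):
                w = strength * loss_map(r - g_row, c - g_col)
                new_row.append((1 - w) * px + w * grey)
            out.append(new_row)
        return out
    return fade


def stack(*effects: Effect) -> Effect:
    """Chain effects so each one receives the previous effect's output."""
    def apply(image: Image, gaze: Gaze) -> Image:
        for effect in effects:
            image = effect(image, gaze)
        return image
    return apply


# A 4x4 checkerboard standing in for one video frame.
image = [[float((r + c) % 2) for c in range(4)] for r in range(4)]
simulate = stack(make_blur(inferior_loss), make_fade(inferior_loss, strength=0.8))

frame_a = simulate(image, (1, 0))  # fixating on row 1: rows 2 and 3 are degraded
frame_b = simulate(image, (2, 0))  # fixating on row 2: only row 3 is degraded
```

Because the loss map is expressed relative to the fixation point, the same two effects degrade a different part of the frame each time the gaze moves, which is what "gaze-contingent" means here. In a real system the loss map would be built from an individual's test results rather than hard-coded.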

The next step was to validate the system. We will never know exactly what someone else sees, so a perfect simulation is impossible. But any simulator should, at the very least, be able to recreate in normally sighted wearers the sorts of everyday difficulties and behaviors that real patients exhibit. We know, for example, that people with glaucoma are slower at finding objects in cluttered environments, and have issues with mobility. And we know that these problems are exacerbated when the sight loss is inferior (i.e., affects vision below the current point of fixation), versus when the vision loss is superior (i.e., affects vision above the current point of fixation).

Our data showed that healthy controls behaved in a similar manner when wearing our simulator. Much like real patients, people experiencing simulated sight loss were slower to find a smartphone around a house (virtual reality), and were slower in real life to navigate a physical, human-sized ‘mouse maze’ (augmented reality). Moreover, as with patients, our participants were particularly slow when the simulated sight loss was inferior. Finally, using sensors embedded in the VR/AR headset, we were also able to show that slower task performance was accompanied by increases in the amount that people turned their head and moved their eyes: compensatory behaviors that have again been observed previously in real glaucoma patients.

These findings are encouraging, as they suggest that digital simulators such as ours are able to provide a ‘functional approximation’ of real sight loss. The hope is that such simulators could be used in future to provide insights into different sorts of eye disease, or to understand the everyday challenges a particular individual is likely to face.

While conducting this research, it has also become apparent that the technology has broad applications beyond children and parents. I am now working with architects to explore whether sight-loss simulators can be used to design more accessible buildings, and with colleagues at City, University of London, to see whether simulators can be used to enhance the teaching of new undergraduate optometrists.

I would like to end by saying a special thank you to Moorfields Eye Charity and Fight for Sight (UK). Attracting funding for the development of new technologies is never easy, and without the support of these eye charities and their benefactors, none of this work would have been possible.

