Robust AI for Mental Health Prediction in Social Media

Published in Healthcare & Nursing

Did you know that Facebook has a “suicide prevention AI”? The popular social media website uses behavioral and linguistic patterns to predict whether someone may harm themselves. NPR reports that this AI identifies about 10 people every day, after which Facebook can intervene and potentially save a life. Facebook is not the only one using social media data to make predictions about people’s well-being. Researchers have been investigating this for nearly a decade, and these systems hold great promise for lifesaving and cost-reducing applications.

A crucial part of this work is evaluating mental health from social media data in a trustworthy way. Without a doctor to make a diagnosis or a screener to conduct an assessment, how can we make sure that social media signals measure what we hope they measure? If these predictions are wrong and are acted on without human oversight, we risk making life-altering mistakes. We may direct resources to a person who is not in distress and be overbearing, or, conversely, miss a person who desperately needs help. As researchers who conduct this work, we were curious about practices in the broader research community. In particular, we wanted to understand how methods and datasets are chosen when fields such as machine learning and clinical psychiatry work together. We set out to answer these questions.

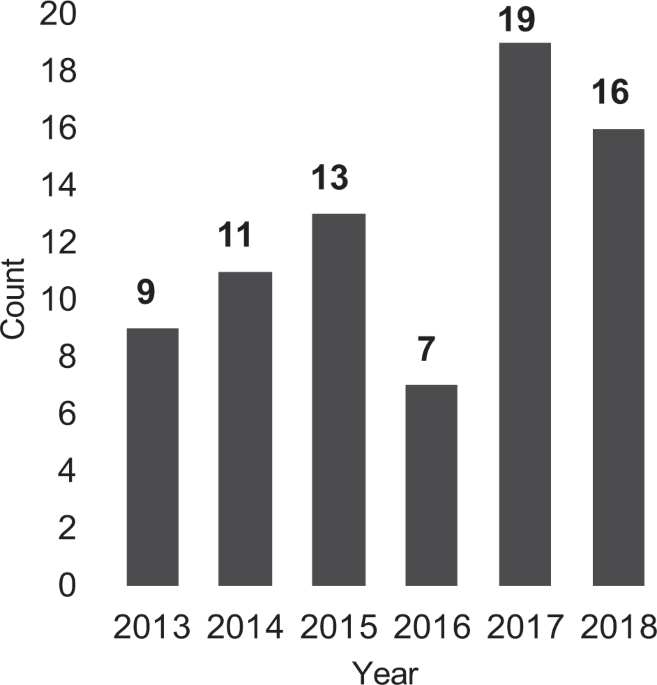

In our paper, we reviewed 75 scientific papers that use machine learning and social media data to predict mental illness, representing the state of the art in this space over the last five years. We found that most papers have large gaps in scientific reporting and in the strategies used to validate “clinical” assessments, both of which are essential to making trustworthy predictions. Failing to validate one’s data can lead to the risks we mentioned earlier, such as misallocating already limited monetary and human resources. Failing to follow reporting standards can make it difficult, and sometimes nearly impossible, for fellow scientists to independently evaluate and reproduce a study. Either gap alone can undermine accurate research; together, they pose a major barrier to addressing the pressing challenges of applying AI to mental health.

In light of these results, we are hopeful that the field can improve in these areas. In the paper, we propose reporting standards that specify what such papers should include. We also discuss promising opportunities to collaborate with medical researchers to align proxy signals with clinical findings. Both are crucial for developing trustworthy and accurate predictions that lead to better research and, eventually, better tools that can help address the pressing challenge of diagnosing and treating mental illness.

Read the full paper in npj Digital Medicine: https://www.nature.com/articles/s41746-020-0233-7
