A compressed representation of confidence guides choices in sequential decisions

Published in Social Sciences

Imagine looking at the data from your new experiment and concluding that they support a certain hypothesis. This is a decision about what to believe given the evidence gathered. The next decision, whether to share your results or design another experiment to gather more evidence, should depend on your confidence that the finding is robust (e.g. that there are no hidden confounds or methodological flaws).

This example illustrates two points. First, many of our daily activities (in academia and beyond) involve sequences of interdependent decisions: no decision is an island. Second, the outcomes of previous decisions are often unknown, so to guide the next decision we have to rely on our confidence, a subjective estimate of the probability that previous decisions were valid and correct.

These considerations provide the rationale for our study, in which we investigated confidence through sequences of interdependent decisions, a departure from the more popular approach that relies on self-report rating scales. These ideas date back to when I was working with Andrei Gorea, then Director of the Laboratoire Psychologie de la Perception at the Université Paris Descartes. Andrei, who passed away in 2019, was a colourful, brilliant, and funny person. We had many long and rather argumentative discussions. These were particularly productive during our coffee breaks on the balcony, where we were joined by Gianluigi Mongillo, who would contribute many great ideas and become a co-author. In these balcony meetings, the idea for this research was born, via a detour through the field of time perception.

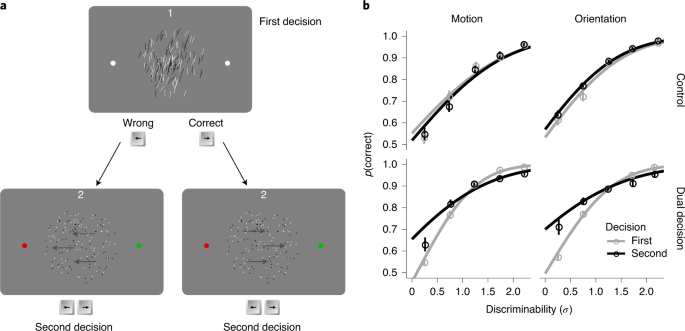

In an approach pioneered by Jazayeri & Shadlen¹, people were found to behave in a Bayesian-like way when reproducing time intervals: their decisions balance noisy information about the current interval with prior expectations based on the distribution of intervals presented so far. However, an optimal Bayesian decision-maker should also be able to adjust prior expectations flexibly as needed, on a decision-by-decision basis. Andrei, Gianluigi and I wanted to develop an experimental protocol that could test whether human observers can do this. We came up with a design in which participants made two duration judgments on each trial, with the confidence in the first judgment forming the prior for the second. This dual-decision approach provided a different way to manipulate duration expectations flexibly on a trial-by-trial basis, without requiring observers to learn a task-specific distribution of temporal intervals. Soon, however, we realised that applying this paradigm across different domains could also provide a strong and general test of the normative theory that equates confidence with Bayesian probability².
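To make the idea of "balancing" noisy sensory information against prior expectations concrete, here is a minimal sketch that assumes a Gaussian prior over durations and Gaussian measurement noise. This is a textbook simplification chosen for illustration, not the exact model of Jazayeri & Shadlen or of our study; the function name and parameters are hypothetical. Under these assumptions the Bayesian estimate is a weighted average of the measurement and the prior mean, and passing different prior parameters on each call mimics the trial-by-trial adjustment of expectations discussed above.

```python
def bayesian_estimate(measurement, prior_mean, prior_sd, noise_sd):
    """Posterior mean of a duration (ms), assuming a Gaussian prior and Gaussian noise.

    Hypothetical illustration: prior ~ N(prior_mean, prior_sd**2) and
    measurement ~ N(true_duration, noise_sd**2).
    """
    prior_var = prior_sd ** 2
    noise_var = noise_sd ** 2
    # Weight on the measurement: close to 1 when sensory noise is small
    # relative to the spread of the prior, close to 0 when it is large.
    w = prior_var / (prior_var + noise_var)
    return w * measurement + (1 - w) * prior_mean


if __name__ == "__main__":
    # Equal prior and noise spread: the estimate lies halfway between them.
    print(bayesian_estimate(900, 700, 100, 100))  # → 800.0
    # Noisier measurement: the estimate is pulled further towards the prior mean.
    print(bayesian_estimate(900, 700, 100, 200))
```

The weighted-average form is what makes this kind of model "Bayesian-like": as measurement noise grows, reproduced durations regress more strongly towards the prior mean.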

In an experiment testing this hypothesis, we found that people can use their confidence in a first decision to improve a second decision on a different task. However, their confidence appeared to be compressed into a few discrete levels rather than reflecting the continuous function prescribed by the normative theory. Our modelling suggested that 1 bit of information (high vs. low confidence) was sufficient to account for participants’ use of confidence in our dual-decision task. We believe these findings are exciting, because they provide new insight into the limits of humans’ intuitive understanding of uncertainty and probability, and offer a new way of probing metacognition without the need to resort to self-report methods.
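The difference between a continuous and a 1-bit confidence read-out can be pictured with a toy example. The sketch below is illustrative only and is not the model fitted in our study. It assumes a first decision in which evidence x is drawn from N(+d, 1) or N(-d, 1) and the choice is the sign of x, so the ideal confidence is P(correct | x) = 1 / (1 + exp(-2d|x|)). It also assumes that confidence sets the prior probability of one of the two options in the second decision. The threshold (0.75) and the two confidence levels (0.6 and 0.9) are hypothetical values chosen for illustration.

```python
import math


def ideal_confidence(x, d):
    """P(first choice correct | x), if x ~ N(+d, 1) or N(-d, 1) and choice = sign(x)."""
    return 1.0 / (1.0 + math.exp(-2.0 * d * abs(x)))


def one_bit_confidence(x, d, threshold=0.75, low=0.6, high=0.9):
    """Hypothetical compressed read-out: only 'high' vs 'low' survives.

    The ideal confidence is thresholded, and each of the two states is
    mapped to a fixed probability level.
    """
    return high if ideal_confidence(x, d) > threshold else low


def second_choice(confidence, evidence_llr):
    """Return 1 (option A) if prior log-odds plus new evidence favour A, else 0.

    Assumes option A has prior probability `confidence` (0 < confidence < 1)
    and `evidence_llr` is the log-likelihood ratio of the new evidence for A.
    """
    prior_log_odds = math.log(confidence / (1.0 - confidence))
    return 1 if prior_log_odds + evidence_llr > 0 else 0


if __name__ == "__main__":
    print(one_bit_confidence(0.1, 1.0))  # → 0.6
    print(one_bit_confidence(2.0, 1.0))  # → 0.9
    # Same first-decision evidence, same new evidence, different second choices:
    print(second_choice(ideal_confidence(0.3, 1.0), -0.5))    # → 1
    print(second_choice(one_bit_confidence(0.3, 1.0), -0.5))  # → 0
```

In this toy setting, the compressed observer discards the fine-grained information in |x|, so on some trials its second choice differs from that of the ideal observer even though both receive identical evidence.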

Being confident about our findings, we sought to publish them. It was only during late-stage revisions, when preparing data and code for sharing in a public repository, that we noticed a flaw that required further data collection, work we carried out with Tessa Dekker and Georgia Milne. This anecdote not only demonstrates (yet again) that subjective confidence can be misleading but also highlights the importance of open science practices: it was the final sanity check before publicly sharing code and data that revealed an error that would otherwise have gone unnoticed.

Unfortunately, our time with Andrei was cut short. While we were finishing the paper, Andrei was diagnosed with cancer and passed away, to the great sadness of many friends and colleagues. The workshop that had been organised in honour of his career became a tribute to his memory (https://sites.google.com/view/andrei-gorea-tribute). The findings of our study align with his long-standing belief that higher-order decision-making is unlikely to have access to full probability distributions. Indeed, our results suggest that confidence in perceptual decisions uses only a summary of the sensory representation, and that capacity limits in the metacognitive read-out of decision variables constrain the precision and calibration of subjective confidence.

Andrei Gorea, photo by J. Kevin O’Regan.

1. Jazayeri, M. & Shadlen, M. N. Temporal context calibrates interval timing. Nature Neuroscience 13, 1020–1026 (2010).

2. Meyniel, F., Sigman, M. & Mainen, Z. F. Confidence as Bayesian Probability: From Neural Origins to Behavior. Neuron 88, 78–92 (2015).


Nature Human Behaviour


Very interesting article! I wonder how confident researchers are in their ability to measure such confidence?