A Multimodal Dataset for Mixed Emotion Recognition

A Multimodal Dataset for Mixed Emotion Recognition - Scientific Data

Background

Affective computing plays an increasingly important role, especially in the era of Emotional Intelligence1,2. Existing datasets have greatly advanced emotion recognition research, but they focus either on discrete emotion classification or on classification in valence-arousal (VA) space. Mixed emotions are an important topic in emotion analysis and have received increasing attention3,4,5, so a dataset dedicated to mixed emotion research is needed. In this paper, we established a multimodal dataset that contains physiological and face video data from 73 participants. To the best of our knowledge, it is currently the only available dataset for mixed emotion recognition, and it may help advance research in mixed emotion analysis. The dataset is available on Zenodo6 upon request.

Methods

Upon arrival, each participant was given a written informed consent form and asked to read and voluntarily sign it. Participants were then briefed on the experiment content, the experiment protocol, the meaning of the affective scales, and how to complete the self-assessment form. After placing and checking the sensors, the experimenter ran the main program, which first presented an on-screen form to collect the participant’s name, age, gender, and other basic information. The experiment started when the participant pressed the ‘OK’ button.

The experiment consisted of a practice stage, a baseline recording stage, and 4 blocks. Figure 1 shows the timing diagram of the experiment. It began with a practice stage containing a single practice trial, so that participants became familiar with the procedure of one trial. After practice, the participant was asked to look at a black screen and remain relaxed while a three-minute resting-state recording was collected. Next, 32 film clips were presented in 4 blocks of 8 trials each, with one clip per trial. The film clips were grouped into blocks according to their original emotion labels (i.e., positive, negative, mixed), so that all clips within a block shared the same label. A set of arithmetic operations and a 1-minute break were placed between consecutive blocks to minimize carryover effects from the previous block. The presentation order of the blocks followed a Latin square design to counterbalance any influence of block order. Each trial consisted of the following steps:

- The display of one video clip for about 20-30 seconds.

- Self-report on emotional adjectives, using the 10-item short form of the Positive and Negative Affect Schedule (PANAS)7, which covers positive affect (PA) and negative affect (NA).

- Self-report for arousal, valence, and dominance.

- Self-report for two discrete emotions, amusement and disgust. These two ratings were collected because the positive and negative emotions in the Stanford film library8 mainly correspond to amusement and disgust.

- A 5-second break before the next trial.
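
The Latin square ordering of the 4 blocks described above can be illustrated with a minimal sketch. The source does not say which Latin square was used or how participants were assigned to its rows, so the cyclic construction, the round-robin assignment, and the block names below are all assumptions made for illustration.

```python
def latin_square_orders(blocks):
    """Build a cyclic Latin square: row i is one possible block order."""
    n = len(blocks)
    return [[blocks[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for_participant(participant_index, blocks):
    """Pick a row of the square by cycling through rows (assumed assignment rule)."""
    orders = latin_square_orders(blocks)
    return orders[participant_index % len(orders)]


# Hypothetical block names; the source only states that there are 4 blocks.
BLOCKS = ["block_A", "block_B", "block_C", "block_D"]

for row in latin_square_orders(BLOCKS):
    print(row)
```

In such a square every block appears exactly once in each presentation position across the rows. A plain cyclic square does not balance which block immediately precedes which, so a design that also needs first-order carryover balance would use a different construction.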

Technical Validation

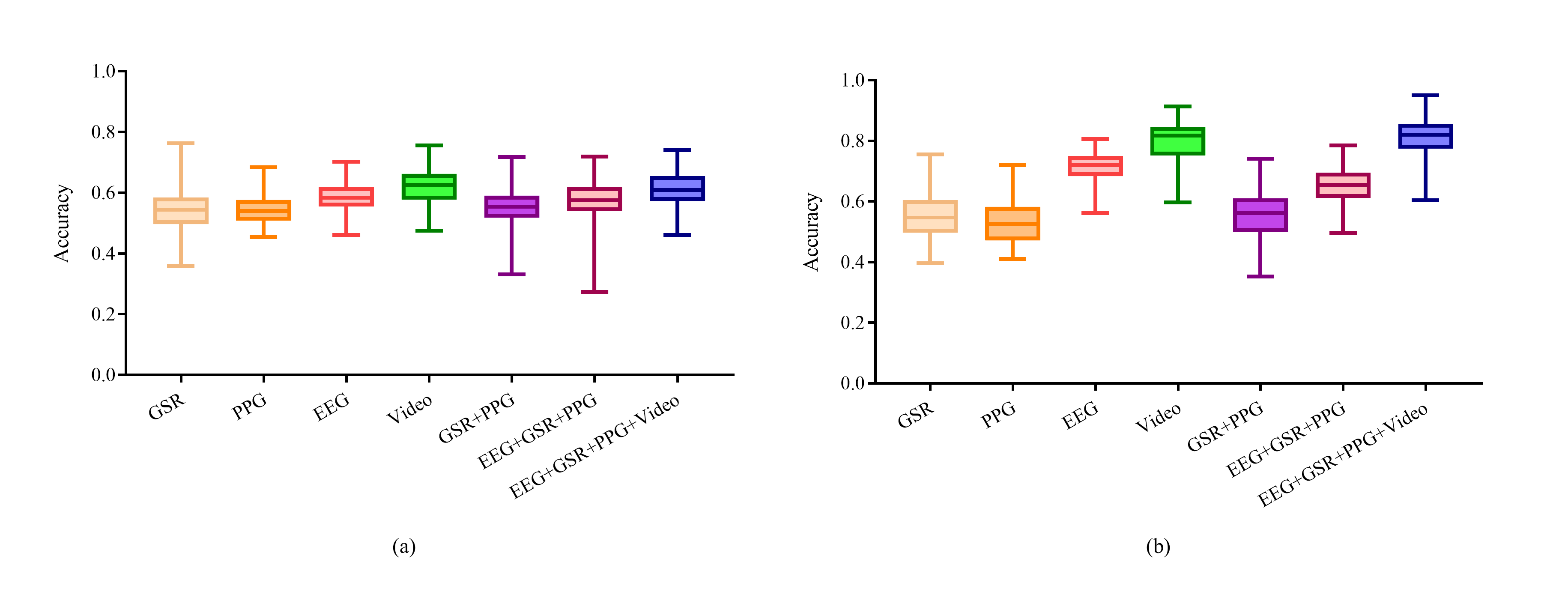

To verify the feasibility of mixed emotion classification from physiological signals and face videos, we ran experiments with two typical classifiers, a support vector machine (SVM) and a random forest (RF), on a three-class task: positive, negative, and mixed emotion. Classification performance was evaluated under a participant-dependent protocol. The physiological signals and face video of each trial were split into two parts at a ratio of 4:1; the first parts of all trials formed the training set and the second parts formed the test set.
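
The within-trial 4:1 split can be sketched as follows. This is an illustration only: it assumes each trial is a sequence of samples ordered in time and that the cut is made by sample index, which the text does not spell out. The function names and toy data are hypothetical.

```python
def split_trial(samples, train_parts=4, test_parts=1):
    """Cut one trial at the 4:1 point: first part for training, second for testing."""
    cut = len(samples) * train_parts // (train_parts + test_parts)
    return samples[:cut], samples[cut:]


def build_sets(trials):
    """trials: list of (samples, label). Returns train and test lists of (segment, label)."""
    train, test = [], []
    for samples, label in trials:
        first, second = split_trial(samples)
        train.append((first, label))
        test.append((second, label))
    return train, test


# Toy data: two trials of 10 placeholder samples each (not real signals).
trials = [(list(range(10)), "mixed"), (list(range(100, 110)), "positive")]
train, test = build_sets(trials)
print(train[0])  # ([0, 1, 2, 3, 4, 5, 6, 7], 'mixed')
print(test[0])   # ([8, 9], 'mixed')
```

Because every trial contributes to both sets, the same film clips appear in training and testing, which is consistent with a participant-dependent protocol.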

The experimental results are presented in Fig. 2. We tested seven feature combinations: four single-modality feature sets (EEG, GSR, PPG, Video) and three multimodal combinations (GSR+PPG, GSR+PPG+EEG, GSR+PPG+EEG+Video). SVM with all features (EEG+GSR+PPG+Video) obtained the best accuracy. In addition, EEG performed better than the other physiological signals, achieving both higher classification accuracy and a smaller standard deviation.
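
As a rough sketch of how the seven feature combinations might be assembled and summarized, the snippet below concatenates per-modality feature vectors and reports the mean and standard deviation of accuracy. Two points are assumptions rather than details from the text: that fusion is done by simple concatenation, and that the standard deviation is taken over per-participant accuracies. All feature values are made-up placeholders.

```python
from statistics import mean, stdev

# The seven feature combinations listed in the text.
COMBINATIONS = [
    ("EEG",), ("GSR",), ("PPG",), ("Video",),
    ("GSR", "PPG"),
    ("GSR", "PPG", "EEG"),
    ("GSR", "PPG", "EEG", "Video"),
]


def fuse(features, combo):
    """Concatenate per-modality feature vectors (an assumed fusion scheme)."""
    fused = []
    for modality in combo:
        fused.extend(features[modality])
    return fused


def summarize(accuracies):
    """Mean and sample standard deviation of a list of accuracies."""
    return mean(accuracies), stdev(accuracies)


# Placeholder feature vectors for one segment; sizes and values are invented.
features = {"EEG": [0.1, 0.2, 0.3], "GSR": [1.0], "PPG": [2.0, 2.5], "Video": [3.0, 3.1]}
for combo in COMBINATIONS:
    print("+".join(combo), len(fuse(features, combo)))
```

Each fused vector would then be passed to a classifier such as an SVM or RF, and `summarize` applied to the resulting accuracies for each combination.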

References

1. Salovey, P., Mayer, J. & Caruso, D. Emotional intelligence: Theory, findings, and implications. Psychological Inquiry 15, 197–215 (2004).

2. Seyitoğlu, F. & Ivanov, S. Robots and emotional intelligence: A thematic analysis. Technology in Society 77, 102512 (2024).

3. Larsen, J. T. & McGraw, A. P. Further evidence for mixed emotions. Journal of Personality and Social Psychology 100, 1095 (2011).

4. Oh, V. Y. & Tong, E. M. Specificity in the study of mixed emotions: A theoretical framework. Personality and Social Psychology Review 26, 283–314 (2022).

5. Zhou, K., Sisman, B., Rana, R., Schuller, B. W. & Li, H. Speech synthesis with mixed emotions. IEEE Transactions on Affective Computing (2022).

6. Yang, P. et al. A multimodal dataset for mixed emotion recognition. Zenodo https://doi.org/10.5281/zenodo.8002281 (2022).

7. Mackinnon, A. et al. A short form of the positive and negative affect schedule: Evaluation of factorial validity and invariance across demographic variables in a community sample. Personality and Individual Differences 27, 405–416 (1999).

8. Samson, A. C., Kreibig, S. D., Soderstrom, B., Wade, A. A. & Gross, J. J. Eliciting positive, negative and mixed emotional states: A film library for affective scientists. Cognition and Emotion 30, 827–856 (2016).
