After the Paper | Rolling the dice again… a look back at ‘Anthropogenic biases in chemical reactions hinder exploratory inorganic synthesis’

Published in Chemistry

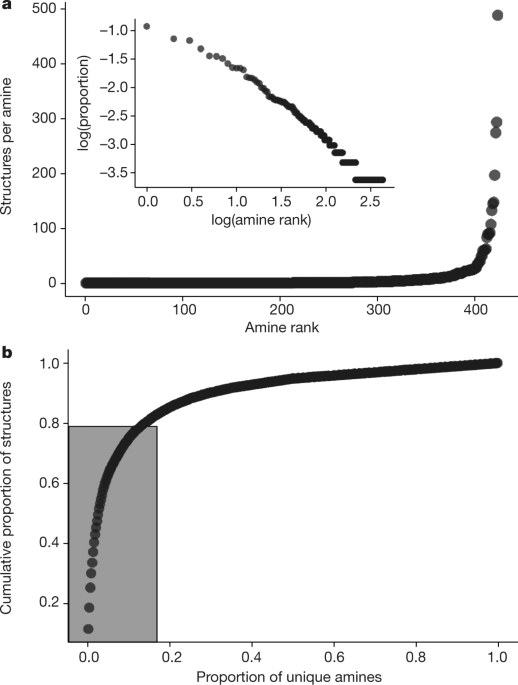

Much of our work during the last few years has involved the use of machine learning approaches to better understand chemical syntheses. We’ve been specifically focusing on organic-inorganic metal oxides. In 2016 (Nature, 2016, 533, 73) we showed that including experimental “failures” was important for training models for chemical synthesis. However, the chemical literature is “biased” in that it largely contains only reports of success. Models trained on reactions that nearly all succeed won’t be able to predict failure conditions. The dearth of failed experiments in the literature can represent a true challenge in predictive syntheses. That work led us to wonder if other biases existed in our syntheses. Nearly every decision that humans make contains inherent biases. In the lab, this ranges from the selection of the reaction components we use to the specific experimental parameters that we employ. Essentially, we’re creatures of habit. We go back to the same reagents and the same narrow band of conditions again and again. In the active learning literature, there’s a continuum between exploration and exploitation. Explorations typically have high uncertainties, while exploitations have high predicted outcomes. All too often, we exploit what we’ve done in the past and marginally tweak the conditions in an attempt to replicate old successes. Make no mistake, there’s a place for such reactions in chemistry, but we’re exploratory chemists! To paraphrase John Muir, the chemical space is calling, and I must go.

Much of what we do is based upon searching unexplored or underexplored chemical space to find new compounds with unexpected compositions, structures and properties. But returning to the same ‘magic’ recipes again and again inherently limits our ability to explore. Last fall, we showed that we’d been doing the wrong reactions for years (Nature 2019, 573, 251). We relied on the ‘tried and true’ approach of designing experiments such that a single variable is changed, allowing us to ascribe any differences in outcome to the variations we introduced. When a single variable exists, that approach is great. But what happens when a synthetic space exists in 10 dimensions? What about 15 dimensions? A linear march just does not work in high-dimensional space, and that route becomes fantastically time- and resource-intensive. Instead, we showed that using probability density functions to randomize reaction parameters was far more efficient. Moreover, the machine learning models that were created from our random reactions were stronger and more useful. Our models were far better suited to finding new areas for exploration and new synthetic windows in which we could be successful. We found that our models showed an optimism that is critical in a domain where failed experiments are the norm. This is not to say that choosing random reactions is the best way to generate datasets for machine learning (many interesting experimental sampling strategies have been developed), but merely that it is better than relying on humans.
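To make the contrast concrete, here is a minimal sketch of the two ways of planning reactions. It is an illustration only and not the code from the paper: the parameter names, the ranges, and the choice of a uniform density for every parameter are all assumptions made for the example.

```python
import random

# Hypothetical reaction parameters and ranges (illustrative, not from the paper).
PARAMETER_RANGES = {
    "temperature_C": (90.0, 180.0),
    "pH": (1.0, 8.0),
    "amine_mmol": (0.5, 10.0),
    "reaction_time_h": (12.0, 72.0),
}


def one_variable_at_a_time(baseline, ranges, steps):
    """Vary each parameter in turn while holding all others at the baseline."""
    plans = []
    for name, (low, high) in ranges.items():
        for i in range(steps):
            plan = dict(baseline)
            plan[name] = low + (high - low) * i / (steps - 1)
            plans.append(plan)
    return plans


def random_sampling(ranges, n, rng):
    """Draw every parameter independently from a density over its range.

    A uniform density is assumed here; any other density could be swapped in.
    """
    return [
        {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
        for _ in range(n)
    ]


def count_varied(plan, baseline):
    """Number of parameters in a plan that differ from the baseline."""
    return sum(1 for name in plan if plan[name] != baseline[name])


# Use the midpoint of each range as the 'tried and true' baseline recipe.
baseline = {name: (low + high) / 2 for name, (low, high) in PARAMETER_RANGES.items()}

ovat_plans = one_variable_at_a_time(baseline, PARAMETER_RANGES, steps=5)
random_plans = random_sampling(PARAMETER_RANGES, n=len(ovat_plans), rng=random.Random(0))

# Compare how many parameters each strategy changes per reaction.
print("one-variable-at-a-time:", max(count_varied(p, baseline) for p in ovat_plans))
print("random sampling:", min(count_varied(p, baseline) for p in random_plans))
```

For the same number of reactions, the one-variable-at-a-time plans all sit on lines through the baseline recipe, while the random plans change every parameter at once and so spread across the interior of the space. That gap widens as dimensions are added.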

So how has this paper affected our careers? What challenges and what opportunities have arisen? In short, we’ve been forced by our own work to question our instincts and to abandon the tools that we’ve used for far too long. We’ve had to face the fact that our old tricks are no longer good enough. So what new tricks have we learned?

New exploration campaigns. The system that we discussed in our paper used historical data, reactions that our students had run in the past. Using these data, coupled with new experiments and machine learning work, we were able, we hope, to show a better approach. So now we need to walk the walk. We’ve launched a series of new experimental campaigns, investigations into systems that we’ve never touched using these techniques. It is a challenge, as these initial experiments are not really designed to isolate large single crystals but to define the working limits of each experimental variable. Having to say ‘we’ll come back to really optimize that reaction later’ is hard, but that’s the approach we’ve taken.

High-throughput experimentation. If you can’t work smarter, work harder. Better yet, let the robot do the work! Sparse data represent a real challenge. As we’ve discussed above, we’re changing the way we choose which reactions to perform. But what if we didn’t have to choose? What if we could just do all the reactions? For the past few years, we’ve been using high-throughput techniques to avoid the challenges of sparse data. In a recent paper, we described a system we call RAPID (Robot Accelerated Perovskite Investigation & Discovery) that does this for closely related metal halide perovskite systems.

Database work. We’re doing a better job, hopefully, of using publicly accessible data in responsible ways. As we showed in our paper, biases in the data will absolutely affect the utility of the model, so it’s important to use the data to ask the right questions. To quote Donald Rumsfeld, we can avoid the ‘known unknowns’, so that’s what we’re doing. The ‘unknown unknowns’ just might rear their ugly heads, and so we’re as careful and purposeful as we can possibly be.

Better yet, let’s create our own database! The data in lab notebooks tend to be rather coarse. We observe less than we should, write down less than we observe, and introduce bias in everything that we write. Certainly lab shutdowns over the past year have given many people time to reconsider and curate their past work, and we’ve developed some recommendations for chemists who want to start digitizing their work.

There are, of course, ways to capture more fine-grained descriptions of experiments. We’ve been working on a platform for just this task. We call it ESCALATE, for Experiment Specification, Capture, and Laboratory Automation Technology. So far, we’ve applied this to automated workflows like our RAPID system and the SolTrain perovskite thin-film system at MIT, but we’ve also demonstrated how the underlying data model can be useful for manual hydrothermal reactions.

So the moral of this story is really just the story of science. There’s always a better way to do things; we just need to be open and willing to question what we do.

Joshua Schrier and Alex Norquist
