AI chatbots help users understand IPCC reports

Published in Social Sciences, Earth & Environment, and Sustainability

Climate change is a complex issue for policymakers, who must navigate vast amounts of information, including fake news and a rapidly expanding body of research. Global Environmental Assessments (GEAs), such as the reports of the Intergovernmental Panel on Climate Change (IPCC), are comprehensive, peer-reviewed summaries of that research and can provide valuable insights. However, the IPCC Sixth Assessment Report (AR6) is over 3,900 pages long and can overwhelm even experts.

To address this issue, we developed a proof of concept for an expert-vetted chatbot called ChatClimate (chatclimate.ai). The chatbot is designed to answer questions about climate change and to point to the relevant passages in the IPCC reports. It takes a domain-specific approach, augmenting large language models (LLMs) such as GPT-4 with information from the Sixth Assessment Report.

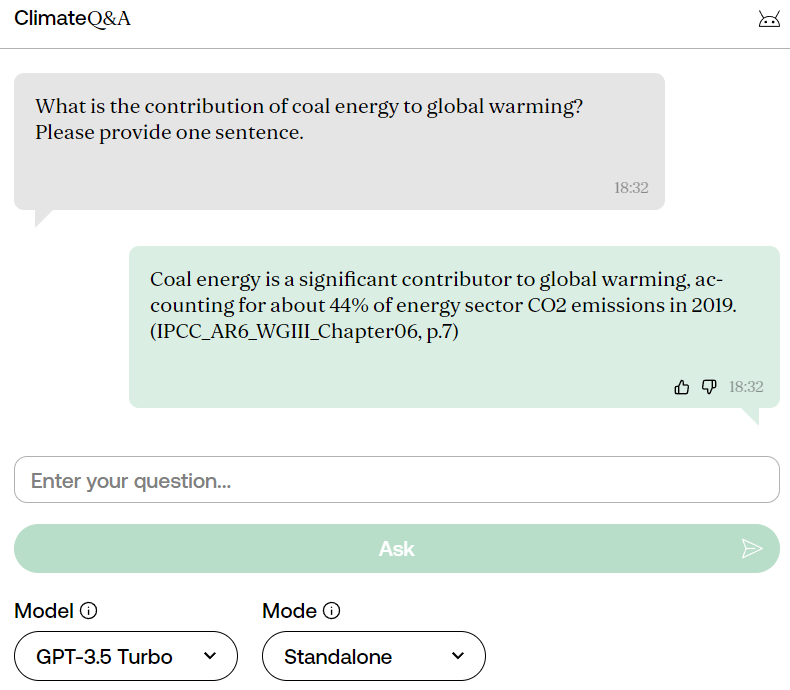

ChatClimate can provide exact references from IPCC reports and answer climate-related questions. It is an efficient tool that can help policymakers and experts quickly access the information they need to make informed decisions about climate change. Figure 1 shows an example question and answer.

Figure 1: Screenshot from chatclimate.ai

LLMs need supervision.

LLMs such as ChatGPT are trained on vast datasets, which allows them to extract relevant information and generate fluent, often accurate responses. However, once training is complete, these models face two significant challenges: hallucinations and outdated information. A chatbot may therefore give incorrect answers, such as claiming that there are glaciers in Scotland, or rely on outdated sources, such as emission figures from two decades ago.

To address these problems, we combined three complementary approaches. First, we used prompt engineering to instruct the model on how to handle each question. Second, we gave the language models access to scientifically reliable external information sources. Finally, we involved experts to verify the accuracy of the answers.

Include additional data and prompt engineering.

Prompting is a technique for guiding LLMs toward desired outputs. It involves writing input prompts that help the language model understand the context and the intended result. In our scenario, a prompt that could precede every question asked to the chatbot would be:

“As a Q&A bot, you answer user questions using information provided by the user and your in-house knowledge.”
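As a rough illustration of how such a prompt can precede every question, the Python sketch below prepends it as a fixed "system" message. The role/content message layout is an assumption modeled on common chat-completion interfaces, and `build_messages` is a hypothetical helper; no model is actually called.

```python
# Minimal sketch: prepend a fixed instruction (system prompt) to every user question.
# The role/content layout mirrors common chat-completion APIs; nothing is sent anywhere.
SYSTEM_PROMPT = (
    "As a Q&A bot, you answer user questions using information provided "
    "by the user and your in-house knowledge."
)


def build_messages(question: str) -> list[dict[str, str]]:
    """Return the (hypothetical) message list that would be sent to the LLM."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},  # same instruction every time
        {"role": "user", "content": question},         # the user's actual question
    ]


if __name__ == "__main__":
    for msg in build_messages("Is it still possible to limit warming to 1.5°C?"):
        print(f"{msg['role']}: {msg['content']}")
```

Keeping the instruction in a separate message, rather than pasting it into the question text, makes it easy to change the prompt for all questions at once.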

In addition to prompting, we implemented Retrieval-Augmented Generation (RAG) to extend ChatClimate's external knowledge with the IPCC AR6 reports. Whenever a user asks a question, the chatbot is automatically prompted to search this database and retrieve relevant passages, and it then generates an answer based on them. A key advantage of RAG is that when the chatbot does not find the information the user is looking for, it can say so explicitly. With this approach, the prompt above is expanded as follows:

“As a Q&A bot, you answer user questions using information provided by the user and your in-house knowledge. You indicate which part of your answer is from IPCC AR6 or your in-house knowledge. You'll let the user know if you can't find the information.”
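The retrieve-then-generate loop can be sketched in a few lines of Python. This is a minimal illustration, not the ChatClimate implementation: the corpus `CHUNKS` contains made-up placeholder passages rather than IPCC text, and simple word-overlap (cosine) similarity stands in for the embedding-based search a real RAG system would typically use. The names `retrieve` and `build_rag_prompt` and the `min_score` threshold are hypothetical. Each retrieved passage is tagged with its ID so the model can cite it, and an empty result is stated explicitly so the model can report that it found nothing.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus: made-up placeholder passages standing in for chunks of
# IPCC AR6. A real system would split the reports into chunks and index them with
# text embeddings rather than word counts.
CHUNKS = {
    "doc-A": "Hypothetical passage: emission pathways that limit warming to 1.5°C.",
    "doc-B": "Hypothetical passage: sea level rise and coastal flooding.",
    "doc-C": "Hypothetical passage: glacier retreat in mountain regions.",
}

# The expanded prompt quoted in the text.
RAG_PROMPT = (
    "As a Q&A bot, you answer user questions using information provided by the user "
    "and your in-house knowledge. You indicate which part of your answer is from "
    "IPCC AR6 or your in-house knowledge. You'll let the user know if you can't "
    "find the information."
)


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words representation of a text."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2, min_score: float = 0.1) -> list[tuple[str, str]]:
    """Return up to k (chunk_id, text) pairs whose similarity reaches min_score."""
    q = tokenize(question)
    scored = sorted(
        ((cosine(q, tokenize(text)), cid, text) for cid, text in CHUNKS.items()),
        reverse=True,
    )
    return [(cid, text) for score, cid, text in scored[:k] if score >= min_score]


def build_rag_prompt(question: str) -> str:
    """Combine instructions, retrieved passages (tagged with IDs) and the question."""
    hits = retrieve(question)
    if hits:
        context = "\n".join(f"[{cid}] {text}" for cid, text in hits)
    else:
        # Nothing relevant found: the prompt instructs the model to say so.
        context = "(no relevant passages retrieved)"
    return f"{RAG_PROMPT}\n\nContext:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_rag_prompt("How can we limit warming to 1.5°C?"))
```

Raising `min_score` makes the bot more likely to admit that it found nothing rather than answer from weakly related passages; the value used here is arbitrary.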

We used these supervision techniques to build two ChatClimate variants. The first, hybrid ChatClimate, combines external memory from the IPCC reports with the in-house knowledge the chatbot already has, although its prompt prioritizes the external information. The second, standalone ChatClimate, relies solely on external information. We used standard GPT-4 as a benchmark for comparison.
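One way to picture the difference between the three systems is as two switches: whether the IPCC database is searched, and whether the model may add in-house knowledge. The sketch below encodes that assumption. The `BotConfig` class, the instruction wording, and the choice to give the GPT-4 baseline no extra instructions are all hypothetical and not taken from the ChatClimate code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotConfig:
    name: str
    use_retrieval: bool   # search the IPCC AR6 database before answering?
    allow_in_house: bool  # may the model also draw on knowledge from pre-training?


CONFIGS = [
    BotConfig("hybrid ChatClimate", use_retrieval=True, allow_in_house=True),
    BotConfig("standalone ChatClimate", use_retrieval=True, allow_in_house=False),
    BotConfig("GPT-4 baseline", use_retrieval=False, allow_in_house=True),
]


def instructions(cfg: BotConfig) -> str:
    """Hypothetical instruction text for each variant (wording is illustrative)."""
    if not cfg.use_retrieval:
        # Baseline: the question is passed to the model without extra instructions.
        return ""
    if cfg.allow_in_house:
        # Hybrid: IPCC excerpts take priority; in-house knowledge may supplement them.
        return (
            "Answer primarily from the IPCC AR6 excerpts provided. You may add in-house "
            "knowledge, but indicate which parts come from IPCC AR6 and which from "
            "in-house knowledge."
        )
    # Standalone: answer only from the retrieved excerpts.
    return (
        "Answer only from the IPCC AR6 excerpts provided. If they do not contain the "
        "answer, say that you cannot find the information."
    )


if __name__ == "__main__":
    for cfg in CONFIGS:
        print(f"{cfg.name}: {instructions(cfg) or '(no extra instructions)'}")
```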

Experts review the chatbot by asking specific questions.

Next, we evaluated how well the different chatbots performed. We asked 13 questions to hybrid ChatClimate, standalone ChatClimate, and GPT-4. Our team, which included several IPCC AR6 authors, then assessed the accuracy of the answers.

One question was: "Is it still possible to limit warming to 1.5°C?" Both ChatClimate variants explicitly stated the necessary greenhouse gas emission reductions and the time horizon, whereas GPT-4's answer was more general. To the question "When will we reach 1.5°C?", all chatbots gave a range of 2030 to 2052. The hybrid chatbot and GPT-4 added that reaching 1.5°C depends on the emission pathway.

To verify the accuracy of the responses generated by the ChatClimate bots, we cross-checked the references that both bots provided. Both ChatClimate variants consistently cited sources for their statements, which is essential for verifying the integrity of the answers. Overall, hybrid ChatClimate gave more accurate answers than standalone ChatClimate and GPT-4.
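Part of this reference check can be automated before experts read the answers. The sketch below assumes, hypothetically, that answers cite passages with bracketed IDs such as `[doc-A]`; it flags citations to sources that were never retrieved and sentences that cite nothing. It is a pre-screening aid under those assumptions, not the procedure used in the study, and it cannot judge whether a cited passage actually supports the claim.

```python
import re

# Hypothetical citation format: a bracketed source ID such as "[doc-A]".
CITATION = re.compile(r"\[([^\]]+)\]")


def check_citations(answer: str, retrieved_ids: set[str]) -> dict[str, list[str]]:
    """Flag citations to unknown sources and sentences that cite nothing."""
    cited = CITATION.findall(answer)
    unknown = sorted({c for c in cited if c not in retrieved_ids})
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    uncited = [s for s in sentences if not CITATION.search(s)]
    return {"unknown_sources": unknown, "uncited_sentences": uncited}


if __name__ == "__main__":
    # Made-up example answer, for illustration only.
    example = (
        "Limiting warming requires rapid emission cuts [doc-A]. "
        "Coastal risks increase [doc-X]. "
        "This sentence has no source."
    )
    print(check_citations(example, {"doc-A", "doc-B"}))
```

Anything flagged here still goes to a human reviewer; the script only narrows down where to look.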

Conclusion

Large language models can save authors the tedious, time-consuming task of searching and reviewing numerous sources, giving them more time to focus on synthesis, such as compiling and evaluating evidence. Given how rapidly these technologies are evolving, it is more critical than ever for Global Environmental Assessments to recognize the value of AI.

However, AI should not replace the rigorous work that only a community of experts can provide. Scientists and the AI community should collaborate to minimize the risk of misinformation. Ethical procedures for using AI in this context should also be carefully crafted, with appropriate disclaimers attached. Additionally, LLMs require massive amounts of data and energy to train and run, which can result in a high carbon footprint.

Finally, an AI-powered chatbot cannot replace our own critical thinking, which is essential for asking relevant questions and interpreting the answers in the right context. The chatbot is designed to assist and support human judgment, not to replace it. But with proper supervision, domain-specific chatbots like ChatClimate can make expert knowledge more accessible and support policymakers in their efforts to combat climate change.
