AI vs. the Olympiad: Can Multimodal LLMs Truly 'See' Chemistry?

Multimodal large language models are often seen as emerging scientific assistants. Using chemistry Olympiad exams as a real-world benchmark, we study how well these models reason over diagrams, tables, and text, and where their limitations still lie.

Published in Chemistry, Computational Sciences, and Education

When we think of the U.S. National Chemistry Olympiad (USNCO), we think of deep spatial reasoning and careful problem-solving. As AI researchers, we were led to a simple question: if large language models are becoming scientific assistants, how do they handle the real-world setting of a chemistry exam?

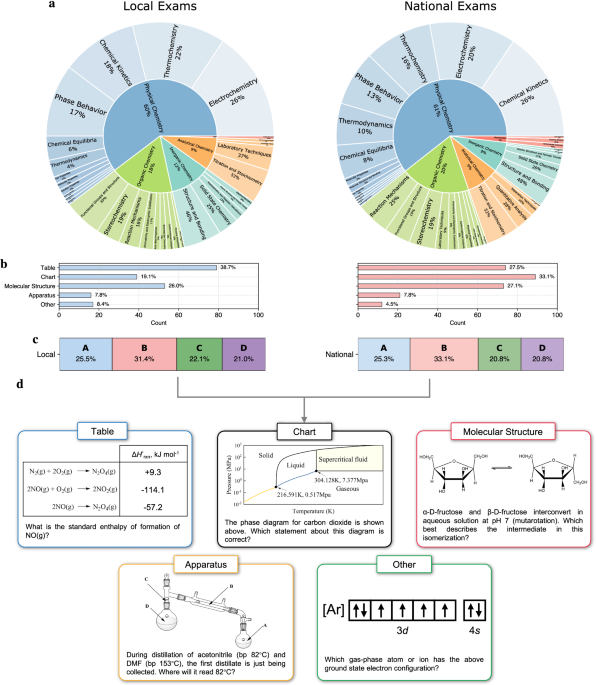

In this work, we curate a real-world chemistry benchmark from over two decades of USNCO exams and use it to evaluate how well current multimodal models reason over text, diagrams, tables, and chemical structures. Because these problems were designed for top human students rather than machines, they offer a natural stress test for AI systems in realistic chemistry reasoning settings.

The Search for a Real-World Benchmark

Many existing AI benchmarks in science are self-constructed or automatically annotated. They are useful, but they often miss the subtlety and care that go into problems written by human experts. We chose the USNCO precisely because it serves as a genuine “blind test” for AI. It was designed not for machines but to challenge some of the best young chemistry students in the United States.

By evaluating models such as GPT-5 and Gemini on USNCO questions, we wanted to see whether they could reason through the same diagrams, constraints, and logic that a top student faces under exam conditions.

A Surprise: Scale Over Specialization

One of the more unexpected findings, sharpened by helpful feedback from our peer reviewers, was that chemistry-specialized models do not consistently outperform general-purpose ones.

Specialized models are very strong at focused tasks such as SMILES conversion or property prediction, but they often struggle when broader reasoning is required. In contrast, large general models benefit from diverse pretraining and scale, which appear to matter more for scientific reasoning than domain-specific finetuning alone. From a chemist’s perspective, this suggests that the most capable AI assistant may not be the one trained only on molecules, but the one exposed to the widest range of human knowledge.

The "Aha!" Moment: When Less is More

Perhaps the most counter-intuitive result emerged from what we describe as a vision-language alignment gap. In earlier work on machine reading comprehension, we had noticed a familiar phenomenon: sometimes, removing part of the input actually improves performance.

We observed the same pattern here. Smaller models often rely on spurious visual cues in diagrams and become distracted by irrelevant details. In some cases, performance improved when images were removed entirely. This highlights a key limitation of current multimodal systems. While larger models are beginning to balance textual and visual information more effectively, many still struggle to truly interpret molecular structures or experimental apparatus in a human-like way.
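To make the comparison concrete, here is a minimal sketch of how such an image-ablation gap could be computed for one model: score the same questions once with the diagram shown and once with text only, then subtract the two accuracies. The `Result` record, the function names, and the toy data are all assumptions made for illustration; they are not the evaluation code or the results from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """One model answer to one question under one input condition (hypothetical schema)."""
    question_id: str
    with_image: bool   # True if the diagram was shown to the model
    correct: bool      # True if the model's answer matched the answer key


def accuracy(results, with_image):
    """Fraction of correct answers for the given input condition."""
    subset = [r for r in results if r.with_image == with_image]
    if not subset:
        raise ValueError("no results for this condition")
    return sum(r.correct for r in subset) / len(subset)


def image_ablation_gap(results):
    """Accuracy with images minus accuracy without them.

    A negative value means the model did better once images were removed.
    """
    return accuracy(results, True) - accuracy(results, False)


# Made-up toy records, for illustration only (not results from the paper).
toy_results = [
    Result("q1", True, False), Result("q1", False, True),
    Result("q2", True, True),  Result("q2", False, True),
    Result("q3", True, False), Result("q3", False, False),
    Result("q4", True, True),  Result("q4", False, True),
]

gap = image_ablation_gap(toy_results)
print(f"with images: {accuracy(toy_results, True):.2f}")
print(f"text only:   {accuracy(toy_results, False):.2f}")
print(f"gap:         {gap:+.2f}")
```

In this toy data the gap is negative, which is the pattern described above: the hypothetical model answers more questions correctly when the images are withheld.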

What This Means for the Chemistry Community

Taken together, these results remind us that truly multimodal scientific AI is still at an early stage. For chemists using these tools today, it may be helpful to think of current models as brilliant but visually imperfect collaborators. They are strong at chemical logic and text-based reasoning, yet their visual understanding often lags behind.

As the field moves toward more autonomous AI systems for science, closing this vision-language gap and developing benchmarks that reflect real human problem-solving will be important steps forward for the AI for Science community.

We hope this benchmark can serve as a useful reference point for future work, and we welcome discussion on how multimodal models can better align visual perception with chemical reasoning.

Read More

Cui, Y., Yao, X., Qin, Y. et al. Evaluating large language models on multimodal chemistry olympiad exams. Commun Chem (2025). https://www.nature.com/articles/s42004-025-01782-x

Communications Chemistry

An open access journal from Nature Portfolio publishing high-quality research, reviews and commentary in all areas of the chemical sciences.
