Audit experiment exposes challenges and possible bias in scientific data sharing

Published in Social Sciences

Scientific progress hinges on open data sharing, but many scientists are reluctant to share their data despite prior commitments to do so. This reluctance ultimately hampers the knowledge accumulation that drives scientific advancement.

We conducted a pre-registered audit experiment to examine possible ethnic, gender, country, and affiliation-status bias in data sharing. Previous research documents biases in science related to gender, ethnicity, and affiliation status, and we aimed to determine whether these biases also manifest in data sharing. Guided by extant research, we expected such bias to arise from scientists’ stereotypical beliefs about data requestors’ characteristics. Because knowledge transfer is more likely to occur in high-trust situations, the perceived status and competence of the data requestor may be crucial factors in whether data is ultimately shared.

Experimental setting

In our study, we contacted authors of articles published in the Proceedings of the National Academy of Sciences (PNAS) and Nature portfolio journals, which adhere to the FAIR data principles. Our goal was to obtain the data sets associated with these articles.

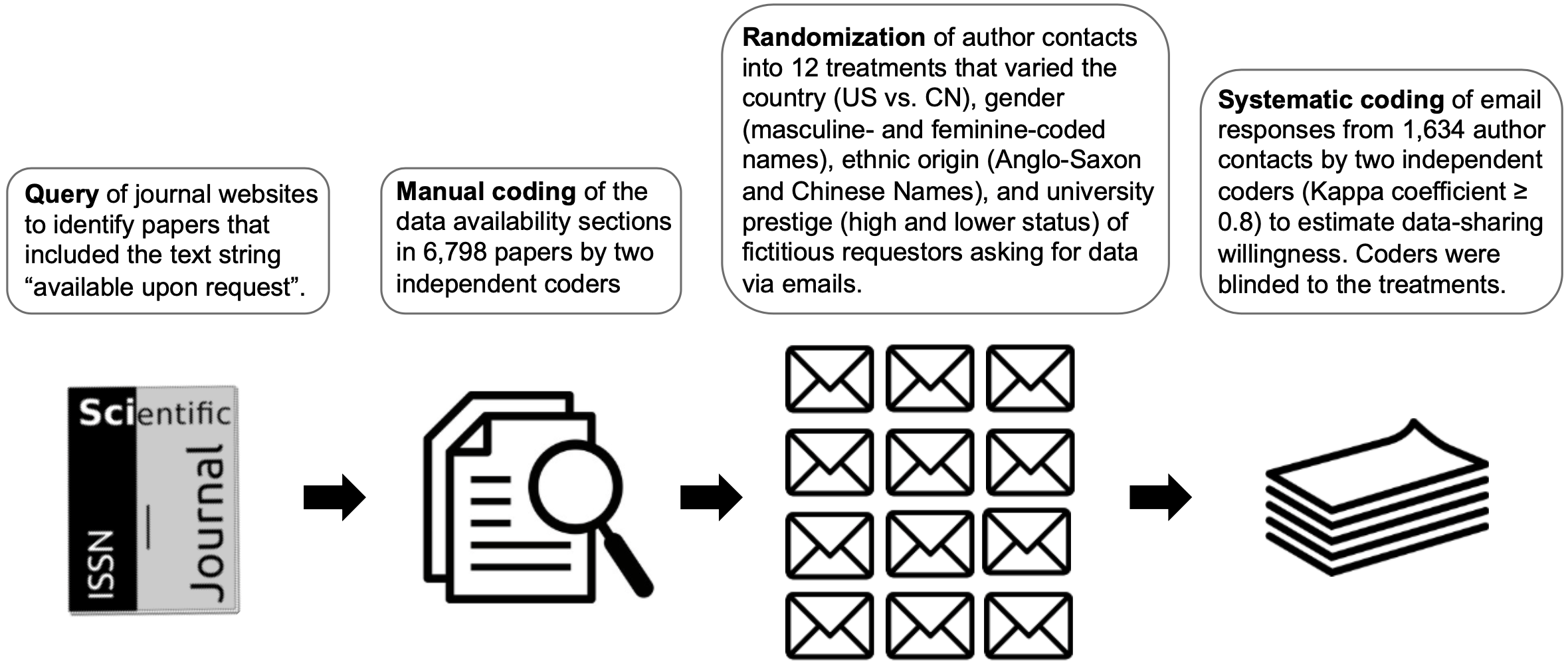

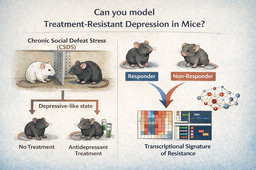

Figure 1| The design of the experiment

Our sample covered 1,634 authors of papers in diverse scientific fields who had indicated that their data was available upon request. We contacted all participants via email, using fictional personas of students about to begin a PhD to request data related to a specific publication. To test for possible biases, we varied the identity of the data requestor on four factors: (1) country of residence (China vs. United States), (2) institutional prestige (high-status vs. lower-status university), (3) ethnicity (putatively Chinese vs. putatively Anglo-Saxon), and (4) gender (masculine-coded vs. feminine-coded name). Together, these manipulations signaled the fictitious requestor’s country of residence, university affiliation, perceived ethnicity, and gender.
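If the four two-level factors are fully crossed (our assumption; the post lists the factors but not the exact allocation scheme), the design has 2 × 2 × 2 × 2 = 16 requestor profiles. The minimal sketch below enumerates those profiles and spreads 1,634 participants as evenly as possible across them. The balanced-shuffle allocation, the function names `build_conditions` and `assign_balanced`, and the seed are hypothetical illustrations, not the procedure used in the study.

```python
import itertools
import random
from collections import Counter

# Two levels for each of the four manipulated factors described above.
FACTORS = {
    "country": ("China", "United States"),
    "prestige": ("high-status", "lower-status"),
    "ethnicity": ("putatively Chinese", "putatively Anglo-Saxon"),
    "gender": ("masculine-coded", "feminine-coded"),
}


def build_conditions(factors):
    """Return every combination of factor levels (a full factorial design)."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in itertools.product(*factors.values())]


def assign_balanced(n_participants, n_conditions, seed=None):
    """Hypothetical balanced randomization.

    Condition indices are repeated in order until every participant has one,
    then shuffled, so each cell receives floor(n/k) or ceil(n/k) participants.
    """
    rng = random.Random(seed)
    slots = [i % n_conditions for i in range(n_participants)]
    rng.shuffle(slots)
    return slots


conditions = build_conditions(FACTORS)
assignment = assign_balanced(1634, len(conditions), seed=2022)
cell_sizes = Counter(assignment)

print(len(conditions))                                     # 16
print(min(cell_sizes.values()), max(cell_sizes.values()))  # 102 103
```

Cycling through the cells before shuffling guarantees near-equal cell sizes (here 102 or 103 authors per profile), which independent random draws per participant would not; `conditions[assignment[0]]` gives the profile for the first participant.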

Estimating biases

We expected that scientists would be less willing to share data when a requestor (i) was from China (compared to the US); (ii) was affiliated with a lower-status university (compared to a higher-status university); (iii) had a Chinese-sounding name (compared to a typical Anglo-Saxon name); and (iv) had a feminine-coded name (compared to a masculine-coded name). China has emerged as the world’s largest producer of scientific articles, and Chinese nationals form the largest group of foreign graduate students at US universities [1–3]. Given these statistics, investigating the potential biases facing Chinese nationals and people of Chinese descent seemed timely.

We found that scientists are hesitant to share data, or even to respond to data requests. Nearly half (46%) of the authors in our sample did not respond to the data requests, and only 14% shared, or indicated willingness to share, some or all of the requested data. Moreover, we found suggestive evidence that response rates and willingness to share may differ depending on who is asking for the data.

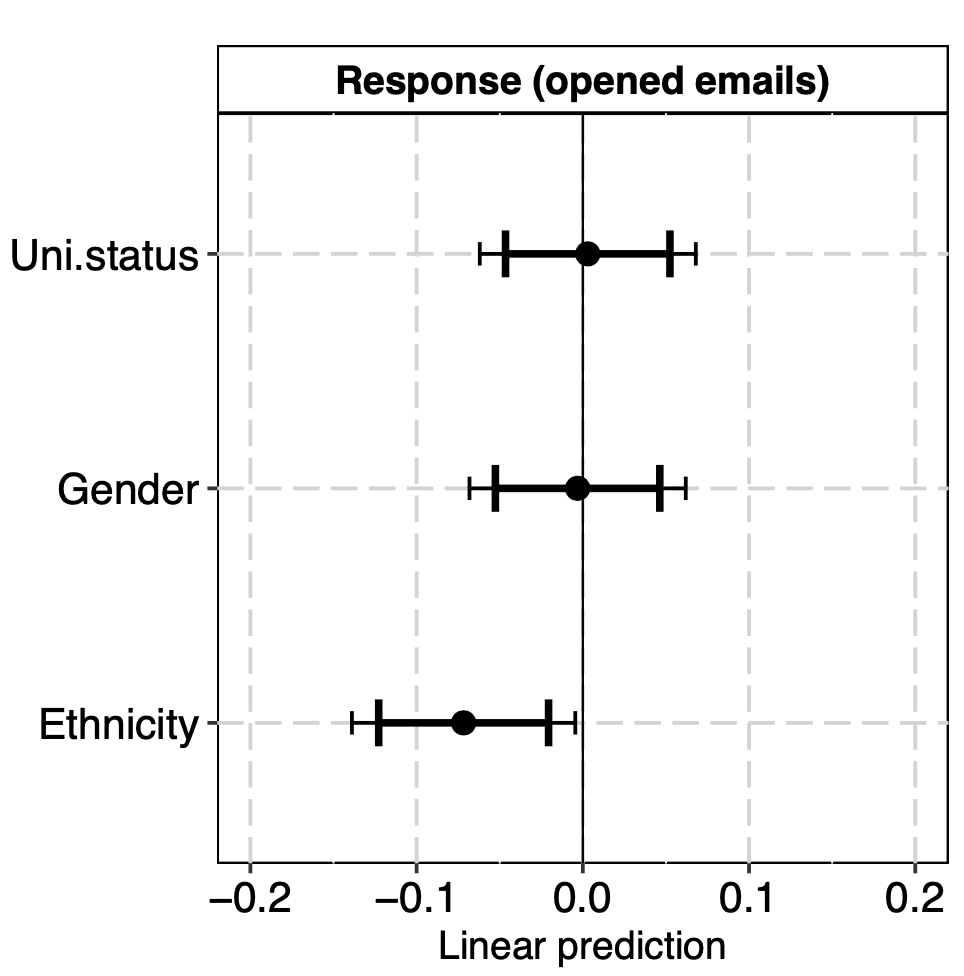

Tests for differences in scientists’ responsiveness to data requests indicated that putatively Chinese treatments were slightly less likely to receive a response than their Anglo-Saxon counterparts. In contrast, we found no evidence that university status or gender affected participants’ likelihood of responding to data requests from our fictitious treatments. As shown in Figure 2, treatments with Chinese-sounding names had a 7-percentage-point lower response rate than treatments with Anglo-Saxon names. This corresponds to an odds ratio of 0.67, or 33% lower odds of a response for putatively Chinese treatments than for putatively Anglo-Saxon ones. This gap may indicate that implicit attitudes perpetuate discriminatory behavior in data sharing, as previously demonstrated in other areas [4,5].

This type of ethnic bias may be more likely to occur at the initial stage of a data exchange, when scientists make rapid, unreflective judgments about whether to engage with a requestor. By comparison, we found only weak and statistically inconclusive evidence of ethnic bias in scientists’ willingness to share their data (inferred from systematic coding of participants’ email responses).

Figure 2| How university status, gender and ethnicity influence data sharing.
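An odds ratio and a percentage-point difference are on different scales, which is worth keeping in mind when reading the figures above. The minimal sketch below shows the two conversions involved: turning an odds ratio into a percentage change in odds (0.67 becomes roughly 33% lower odds), and how one and the same 7-point gap maps to different odds ratios depending on the baseline response rate. The baseline rates in the loop are illustrative values, not data from the study, and a regression-based odds ratio may also adjust for other variables, so the reported 0.67 cannot be recomputed from the percentage-point gap alone.

```python
def odds(p):
    """Convert a probability (e.g. a response rate) to odds."""
    return p / (1 - p)


def odds_ratio(p_treatment, p_reference):
    """Odds in the treatment group divided by odds in the reference group."""
    return odds(p_treatment) / odds(p_reference)


def pct_change_in_odds(or_value):
    """Express an odds ratio as a percentage change in odds (negative = lower)."""
    return (or_value - 1) * 100


# An odds ratio of 0.67 corresponds to roughly 33% lower odds.
print(round(pct_change_in_odds(0.67)))  # -33

# The same 7-point gap implies different odds ratios at different baselines
# (illustrative response rates only, not the study's data).
for p_ref in (0.30, 0.55, 0.80):
    p_treat = p_ref - 0.07
    print(p_ref, round(odds_ratio(p_treat, p_ref), 2))
```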

How gender and ethnicity intersect

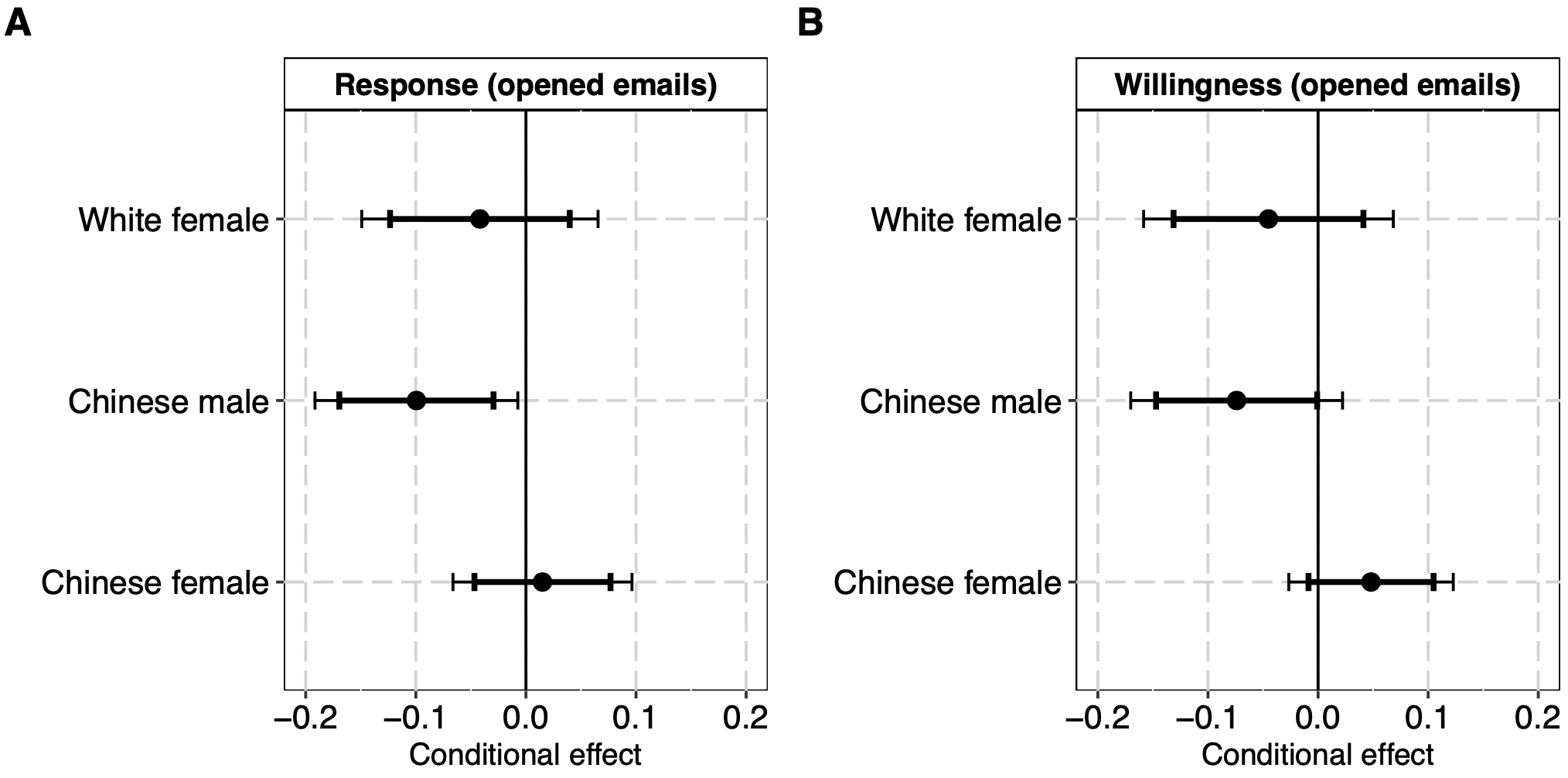

As shown in Figure 3, an exploratory interaction analysis further revealed evidence of a gender-specific ethnic disadvantage for male Chinese data requestors. Male requestors with Chinese names experienced slight but consistent disadvantages in both responsiveness and data-sharing willingness compared to the male Anglo-Saxon treatment. We did not observe such disadvantages for the female treatments, even when the name sounded Chinese.

These findings are consistent with an underlying pattern of male-specific ethnic discrimination that may have been particularly prominent during the data collection period in 2022, given the ongoing discussions in the US and Europe at the time about China’s alleged intellectual property theft [6] and the rise of prejudice and discrimination against Asians in the wake of COVID-19 [7,8].

Figure 3| Conditional effects of ethnicity and gender on responsiveness and data-sharing willingness. The two panels display the effects for White female, Chinese male, and Chinese female requestors compared to White male requestors.
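The conditional effects in Figure 3 compare three groups with a White male reference. In a logistic regression with an ethnicity × gender interaction, the Chinese male contrast is the ethnicity coefficient alone, while the Chinese female contrast adds the gender and interaction coefficients. The minimal sketch below shows that bookkeeping. It assumes a logistic specification, which the post does not spell out, and the coefficients in `COEFS` are made-up placeholders rather than the study’s estimates.

```python
import math

# Hypothetical logistic model with an ethnicity x gender interaction:
#   logit(p) = b0 + b_chinese*chinese + b_female*female + b_inter*chinese*female
# Placeholder values for illustration only; these are NOT the study's estimates.
COEFS = {"b0": 0.2, "b_chinese": -0.3, "b_female": 0.05, "b_inter": 0.3}

GROUPS = {  # (chinese, female) indicators; White male (0, 0) is the reference
    "White female": (0, 1),
    "Chinese male": (1, 0),
    "Chinese female": (1, 1),
}


def linear_predictor(chinese, female, c=COEFS):
    """Log-odds of a response for one requestor profile."""
    return (c["b0"] + c["b_chinese"] * chinese + c["b_female"] * female
            + c["b_inter"] * chinese * female)


def inv_logit(x):
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def conditional_effects(c=COEFS):
    """Odds ratio and percentage-point gap of each group versus White male."""
    ref = linear_predictor(0, 0, c)
    effects = {}
    for name, (chinese, female) in GROUPS.items():
        lp = linear_predictor(chinese, female, c)
        effects[name] = {
            "odds_ratio": math.exp(lp - ref),
            "pp_diff": 100 * (inv_logit(lp) - inv_logit(ref)),
        }
    return effects


for name, eff in conditional_effects().items():
    print(f"{name}: OR = {eff['odds_ratio']:.2f}, difference = {eff['pp_diff']:+.1f} pp")
```

With these placeholder values only the Chinese male contrast falls below an odds ratio of 1, which mirrors the qualitative pattern described above; the interaction term is what allows the ethnicity effect to differ between male and female requestors.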

Implications for open science policies

In sum, our study exposes potential inequalities in who can benefit from data sharing when disclosure decisions are left to the discretion of individual scientists [9]. We show that data requests often require more than trivial effort on the part of the requestor, and that male Chinese data requestors may face particular challenges in such interactions.

The observed differences in data sharing likely arise from stereotypical beliefs about specific requestors’ trustworthiness and deservingness. Such beliefs may undermine the core principle of mutual accountability in science and impede scientific progress by preventing the free circulation of knowledge.

While some participants in our study may have had good reasons not to share their data, this behavior conflicts with the FAIR principles adopted by PNAS and Nature portfolio journals. In our experience, many authors who cannot share their data for practical or ethical reasons appear to indicate that their data is available upon request in order to circumvent a journal’s data-sharing requirements. Providing the necessary storage space, or easy-to-use methods for sharing data on sensitive populations such as synthetic datasets [10], could help mitigate these problems.

1. Tollefson, J. China declared world’s largest producer of scientific articles. Nature 553, 390–391 (2018).
2. Brumfiel, G. Chinese students in the US: Taking a stand. Nature 438, 278–280 (2005).
3. Bartlett, T. & Fischer, K. The China Conundrum. The New York Times (2011). Retrieved October 2022.
4. Bhati, A. Does Implicit Color Bias Reduce Giving? Learnings from Fundraising Survey Using Implicit Association Test (IAT). Volunt. Int. J. Volunt. Nonprofit Organ. 32, 340–350 (2021).
5. Stepanikova, I., Triplett, J. & Simpson, B. Implicit racial bias and prosocial behavior. Soc. Sci. Res. 40, 1186–1195 (2011).
6. Guo, E., Aloe, J. & Hao, K. The US crackdown on Chinese economic espionage is a mess. We have the data to show it. MIT Technology Review (2021).
7. Lu, Y., Kaushal, N., Huang, X. & Gaddis, S. M. Priming COVID-19 salience increases prejudice and discriminatory intent against Asians and Hispanics. Proc. Natl. Acad. Sci. 118, e2105125118 (2021).
8. Cao, A., Lindo, J. M. & Zhong, J. Can Social Media Rhetoric Incite Hate Incidents? Evidence from Trump’s “Chinese Virus” Tweets. (2022).
9. Andreoli-Versbach, P. & Mueller-Langer, F. Open access to data: An ideal professed but not practised. Res. Policy 43 (2014).
10. Quintana, D. S. A synthetic dataset primer for the biobehavioural sciences to promote reproducibility and hypothesis generation. Elife 9, e53275 (2020).

Scientific Data

A peer-reviewed, open-access journal for descriptions of datasets, and research that advances the sharing and reuse of scientific data.