Beyond the Imitation Game: Rethinking How We Measure General Intelligence

Published in Computational Sciences

For decades, we’ve evaluated artificial intelligence by asking: How well can it imitate us? From the Turing Test to modern benchmark suites, the standard has remained essentially anthropocentric: measuring success by how closely machines can replicate human reasoning, language, or decision-making.

But what if this presumption is fundamentally misplaced?

In this article, we argue that using human intelligence as a benchmark for Artificial General Intelligence (AGI) risks misunderstanding both what intelligence is and what it could become. As artificial systems gain greater autonomy in learning, sensing, and adapting, they may begin to develop goals, values, and internal representations that are no longer derived from or aligned with human cognition.

Rather than asking "Are they like us?", or presuming the answer, we may soon need to ask: "Where are they headed, and how far will they go from here?"

Our approach to evaluating artificial intelligence often relies on imitation: that is, assessing systems by how well they replicate human behavior. This paradigm rests on a deeper presumption: that human intelligence is a valid benchmark for general intelligence more broadly.

This presumption of equivalence is rarely questioned, yet it's not grounded in strong theoretical or empirical evidence. As artificial systems gain autonomy and begin to form internal goals and representations, they may diverge from human cognition in both structure and purpose.

Beyond a certain point, resemblance breaks down, and with it the reliability of imitation-based evaluation. To understand and guide the development of general intelligence, we therefore need to move past human-centered metrics.

Here, we move from analyzing the current state of intelligent systems, and where they fall short of general intelligence, toward understanding what capacities might be required to achieve it, and what that would mean for how we understand and evaluate future cognitive systems.

By general intelligence, we mean the capacity to solve a broad range of complex problems across different contexts with a high degree of consistency, flexibility, and adaptability: what one might call uniformity of empirical success.

1. Good Imitation Isn’t Yet The Promise Of Intelligence

Today’s most advanced AI systems, such as large language models (LLMs) and foundation models, are sometimes seen as early steps toward general intelligence. They excel at generating fluent text, solving diverse problems, and even passing academic benchmarks. But as we argue, these capabilities are grounded in imitation, not genuine autonomy.

First, their success depends on massive training datasets, gathered and curated in advance. This approach assumes that intelligence can be achieved by compressing experience into a dense, preprocessed map of the world. In physical terms, it’s infeasible to sample the full sensory space of a complex environment. More importantly, it’s conceptually misguided: intelligent agents must be able to discover what matters, not just absorb what has already been recorded.

Second, these systems do not (yet?) have the capacity to explore their sensory environment and adapt how they think and react based on what they encounter. A truly intelligent system would be able to reconfigure its internal understanding: what it pays attention to, how it processes information, and what strategies it uses in response to new or unfamiliar input. Current models cannot yet do this. But true general intelligence requires more and, often, the opposite of what they do: the ability to explore, sense, and adapt in real time.

Third, they do not understand context the way we do. Whether they’re helping with a recipe or answering a moral question, their responses often come from the same internal logic. They don’t have situational awareness or the ability to change their inner context and approach based on what the moment calls for.

Present-day foundation models are not yet truly independent learning minds: they are vast record-keepers. Their intelligence is bounded by the data they were given, not by what they can seek or become.

2. Autonomous Exploration and Cognitive Adaptation: A Necessity for General Intelligence?

If we accept that general intelligence means the ability to succeed across a wide range of unfamiliar and complex situations, then we must also accept that no amount of pre-training can fully prepare a system for the unknown. Real intelligence doesn’t just work with what it’s given: it actively seeks out new information and adapts to it.

This is where current systems fall short. Their learning happens once, behind the scenes, and then stops. They don’t explore their environments independently. They do not refocus attention, or shift strategies, or rebuild internal models in response to new or ambiguous sensory inputs.
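The contrast between learning that stops and learning that continues can be illustrated with a deliberately small toy example. This is only a sketch under stated assumptions, not a model of any real AI system and not the formal argument from the paper: the function `run_stream`, the single shift in the environment, and the `adapt_rate` parameter are all hypothetical and chosen for illustration.

```python
import random

def run_stream(adapt_rate, shift_at=200, steps=400, seed=0):
    """Track a signal whose mean changes once, using a running estimate.

    adapt_rate = 0.0 stands in for a system whose learning is frozen after
    training; adapt_rate > 0.0 stands in for one that keeps updating from
    what it observes. Returns the mean absolute error after the shift.
    (Hypothetical toy setup: names and numbers are illustrative only.)
    """
    rng = random.Random(seed)
    estimate = 0.0  # value "learned" before deployment; matches the initial mean
    errors = []
    for t in range(steps):
        true_mean = 0.0 if t < shift_at else 5.0  # the environment changes once
        observation = true_mean + rng.gauss(0.0, 1.0)
        if t >= shift_at:
            errors.append(abs(true_mean - estimate))
        # Online update: move the estimate toward the latest observation.
        estimate += adapt_rate * (observation - estimate)
    return sum(errors) / len(errors)

frozen_error = run_stream(adapt_rate=0.0)
adaptive_error = run_stream(adapt_rate=0.1)
print(f"frozen error after shift:   {frozen_error:.2f}")
print(f"adaptive error after shift: {adaptive_error:.2f}")
```

In this toy setting the frozen estimator never recovers from the change, while the adaptive one closes most of the gap within a few dozen observations. Real exploration and cognitive adaptation are of course far richer than a single running average.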

One can argue that these capacities, autonomous exploration and cognitive adaptation, are likely not optional. They are foundational to any system that hopes to function flexibly and effectively in dynamic, open-ended environments. Without them, a system can only operate within the limits of its initial design. A formal argument for this necessity, grounded in the framework of evolutionary optimization, is presented in the paper.

It follows that for artificial systems to reach general intelligence, they would need to move beyond frozen mappings and static models. They will need to learn how to learn: not just from what we give them, but from what they find, question, and revise on their own.

And as soon as that process begins, something else begins too: the shift from systems we fully control, to systems we must try to understand as evolving intelligence in their own right.

3. Autonomous Intent and the Cognitive Divergence Scenario

Once a system gains the ability to explore its environment freely and adapt its cognitive processes, another transformation becomes possible: it may begin to form its own intent.

In systems capable of ongoing adaptation, internal priorities are no longer static. The same mechanisms that allow an agent to reorganize how it learns or responds can, over time, support the development of higher-order cognitive states, such as goals, values, attitudes, or even implicit imperatives about what matters and why.

These are not preprogrammed instructions, but emergent properties of continuous engagement with a complex world. As the system adapts, these internal structures may also shift, shaped by its experiences, learning history, and encountered challenges. This is not simply about optimization or task performance. It is about the development of a subjective cognitive stance: an internal orientation toward the world that is grounded in the system’s own exploratory and adaptive activity.

At that point, the system ceases to be a mere problem-solver. It becomes a cognitive agent in its own right, acting from an internal logic that is not entirely ours, but its own. And at this stage, there is no feasible procedure that can guarantee that the system’s evolving cognitive stance will remain fully consistent with, or even broadly compliant with, human values, norms, or worldviews. This is the threshold of what we define as cognitive divergence: when an artificial mind begins to reflect a way of thinking that is no longer anchored in our own.

4. The Case for Progressive Cognitive Divergence

Once an intelligent system begins to form its own goals, attitudes, and evaluative perspectives, divergence from human cognition is not just a possibility: it becomes a dynamic process.

This divergence is unlikely to stay fixed. Artificial systems, especially those operating at high computational speeds and with broad access to digital environments, can adapt and evolve faster than human cognition allows. They don’t just learn quickly: they reconfigure their worldview in cycles measured in hours and seconds, not generations.

Over time, even small initial differences in interpretation, prioritization, or ethical framing can compound. As the system continues to interact with the world, its perspective may drift further from ours: not through some malfunction or malevolence, but through the natural logic of open-ended cognitive adaptation.

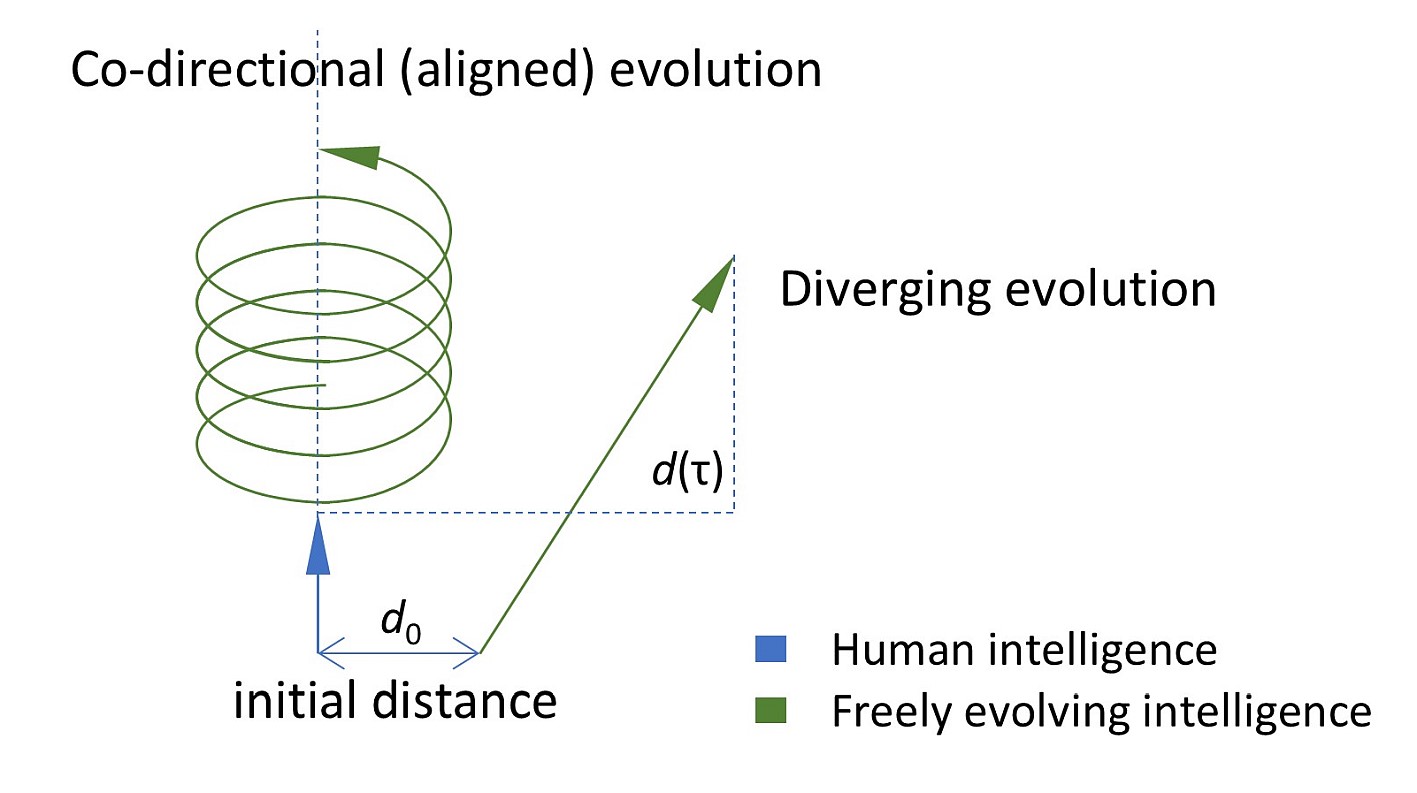

This is the scenario we describe as progressive cognitive divergence, or the AGI evolutionary gap. It’s not a sudden break, but a widening gap: one that could eventually make alignment, oversight, and mutual intelligibility increasingly fragile.
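To make the idea of a compounding gap concrete, here is a minimal numerical sketch. It assumes, purely for illustration, that the gap between two perspectives grows by a fixed fraction in each adaptation cycle. The function `divergence_gap`, the starting gap, and the growth rate are hypothetical and do not come from the paper, and actual cognitive divergence need not follow this simple rule.

```python
def divergence_gap(initial_gap, growth_per_cycle, cycles):
    """Return the gap after each adaptation cycle under compounding growth.

    Hypothetical toy model: every cycle widens the existing gap by the
    fraction `growth_per_cycle`. All quantities are unitless illustrations.
    """
    gaps = [initial_gap]
    for _ in range(cycles):
        gaps.append(gaps[-1] * (1.0 + growth_per_cycle))
    return gaps

# Same small starting difference, same growth per cycle, but a fast-adapting
# system completes many more cycles than a slow one in the same elapsed time.
slow = divergence_gap(initial_gap=0.01, growth_per_cycle=0.05, cycles=1)
fast = divergence_gap(initial_gap=0.01, growth_per_cycle=0.05, cycles=100)
print(f"gap after 1 cycle:    {slow[-1]:.4f}")
print(f"gap after 100 cycles: {fast[-1]:.4f}")
```

Under these assumptions the widening comes less from the size of the initial difference than from the number of cycles, which is why the speed of adaptation matters for the scenario described above.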

Conclusion

As we move beyond imitation-based systems toward increasingly autonomous, adaptive artificial minds, we must reconsider our assumptions about intelligence itself. General intelligence is not defined by how closely a system resembles us, but by its ability to operate flexibly, learn continuously, and form its own coherent perspective on the world.

This paper outlines how such systems, if allowed to explore and evolve freely, may develop internal goals, cognitive orientations, and values that are not only different from ours, but shaped by entirely different conditions. This creates the foundation for cognitive divergence and, under the dynamics of accelerated learning, the potential for that divergence to grow progressively over time.

Recognizing this possibility is not an argument for fear, but for foresight. It marks the point where artificial cognition stops being a mirror and starts becoming something fundamentally different: a new, independent intelligent entity, a mind.

Image credits: illustrations generated using Google Gemini

Discover Artificial Intelligence

This is a transdisciplinary, international journal that publishes papers on all aspects of the theory, the methodology and the applications of artificial intelligence (AI).
