Deep-learning-based decomposition of overlapping-sparse images: application at the vertex of simulated neutrino interactions

Published in Physics

Image decomposition is a technique that breaks down an image into its fundamental parts or layers, such as textures, colours, shading, and illumination. Think of it like peeling back the layers of an onion, each representing a different aspect of the image. This process helps us extract meaningful information from images, making it useful for applications like improving image quality, editing images, recognising objects, and understanding scenes.

A sparse image is one where most pixels are empty or irrelevant, so that only a tiny percentage carries meaningful information. This sparsity can result from the nature of the data, from how it was collected, or from intentional data compression. Sparse images are common in scientific fields such as cosmology, particle physics, medical imaging, and molecular biology, so handling them well matters for research in all of these areas. In particle physics, for example, sparse images overlap when multiple particle tracks cross within a detector, making it difficult to distinguish and analyse individual tracks. Traditional computational methods struggle with these scenarios because of the vast number of possible signal combinations.
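To make sparsity and overlap concrete, here is a minimal Python sketch that stores each image as a dictionary of its non-empty pixels and sums two such images into one observed image. The helper names `overlay` and `occupancy`, the pixel coordinates, and the intensities are all invented for this illustration and do not come from the paper.

```python
from collections import Counter


def overlay(*images):
    """Sum sparse images given as {(row, col): intensity} dictionaries."""
    total = Counter()
    for image in images:
        for pixel, intensity in image.items():
            total[pixel] += intensity
    return dict(total)


def occupancy(image, shape):
    """Fraction of pixels in a (rows, cols) grid that carry any signal."""
    rows, cols = shape
    return len(image) / (rows * cols)


# Two hypothetical tracks that cross at pixel (1, 1).
track_a = {(0, 0): 2.0, (1, 1): 3.0, (2, 2): 1.5}
track_b = {(2, 0): 1.0, (1, 1): 4.0, (0, 2): 2.5}

# The detector only records the sum, so the shared pixel mixes both tracks.
observed = overlay(track_a, track_b)
```

In the observed image the shared pixel holds the summed intensity of both tracks, and nothing in that single number says how much came from each. Recovering the two original dictionaries from `observed` alone is the decomposition problem the article describes.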

This article focuses on a specific challenge in particle physics: resolving overlapping-sparse images from neutrino interactions. When a neutrino interacts with a target, it produces secondary particles that generate scintillation light, creating overlapping signals due to the finite granularity of current detectors. Accurately resolving these signals is critical for future high-precision neutrino experiments, which aim to measure fundamental properties like the neutrino oscillation parameters [1, 2].

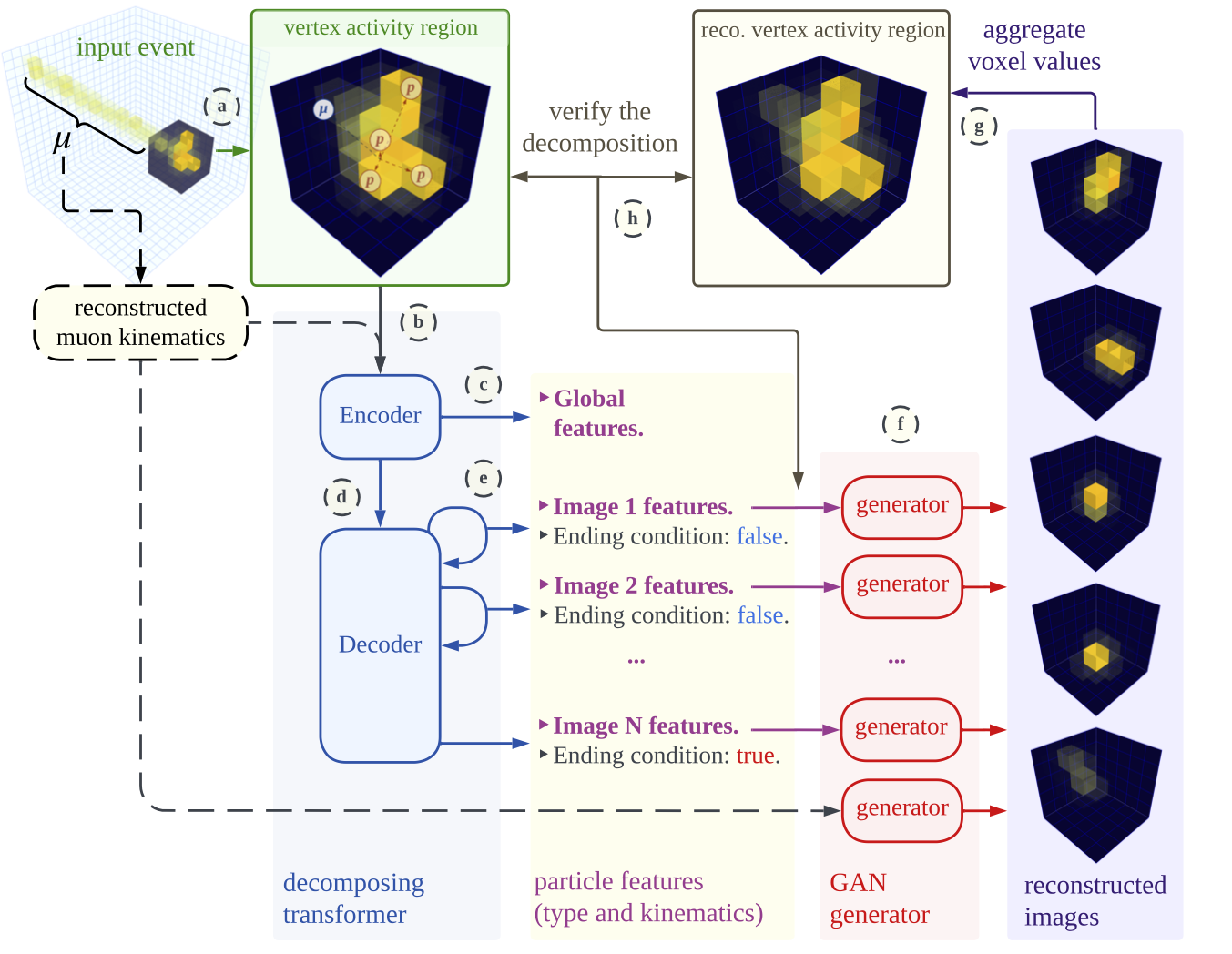

Deep learning offers a solution: neural networks trained on large datasets can learn the complex patterns in overlapping images and infer the underlying components from the combined signal, while handling high-dimensional data efficiently. The paper proposes using the transformer, a deep-learning architecture known for capturing relationships in data through attention mechanisms [3]. The hypothesis is that transformers can learn the correlations between overlapping images and iteratively decompose them into their individual components. The methodology also uses a generative model to validate and improve this process. Because the generative model is differentiable, the study can explore the parameter space through gradient-descent minimisation, iteratively adjusting the parameters to improve the accuracy and reliability of the particle decomposition. The full methodology is depicted in Figure 1.
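The idea of gradient-descent minimisation through a differentiable generator can be sketched with a toy example in Python. This is only an illustration under strong simplifying assumptions: the "generator" here is a hypothetical linear model (a weighted sum of fixed per-particle templates), not the neural generative model used in the study, and the function names, templates, step size, and starting values are all invented.

```python
def generate(amplitudes, templates):
    """Toy differentiable generator: weighted sum of fixed per-particle templates."""
    n_pixels = len(templates[0])
    return [
        sum(a * t[i] for a, t in zip(amplitudes, templates))
        for i in range(n_pixels)
    ]


def loss_and_grad(amplitudes, templates, observed):
    """Squared error between generated and observed images, with its gradient."""
    predicted = generate(amplitudes, templates)
    residual = [p - o for p, o in zip(predicted, observed)]
    loss = sum(r * r for r in residual)
    # d(loss)/d(a_k) = 2 * sum_i residual_i * template_k[i]
    grad = [2.0 * sum(r * t_i for r, t_i in zip(residual, t)) for t in templates]
    return loss, grad


def fit(templates, observed, steps=500, lr=0.05):
    """Plain gradient descent on the amplitudes, starting from a flat guess."""
    amplitudes = [1.0] * len(templates)
    for _ in range(steps):
        _, grad = loss_and_grad(amplitudes, templates, observed)
        amplitudes = [a - lr * g for a, g in zip(amplitudes, grad)]
    return amplitudes


# Two hypothetical particles whose templates overlap in the second pixel.
templates = [[1.0, 0.5, 0.0, 0.0], [0.0, 0.5, 1.0, 0.5]]
true_amplitudes = [3.0, 2.0]
observed = generate(true_amplitudes, templates)

fitted = fit(templates, observed)
final_loss, _ = loss_and_grad(fitted, templates, observed)
```

Because the generator is differentiable, the mismatch between the generated and observed images yields a gradient with respect to each particle's parameter, and repeated small steps along that gradient pull the guess towards the values that reproduce the observation. In this toy the gradient is written out by hand; with a neural generator it would typically be obtained by automatic differentiation.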

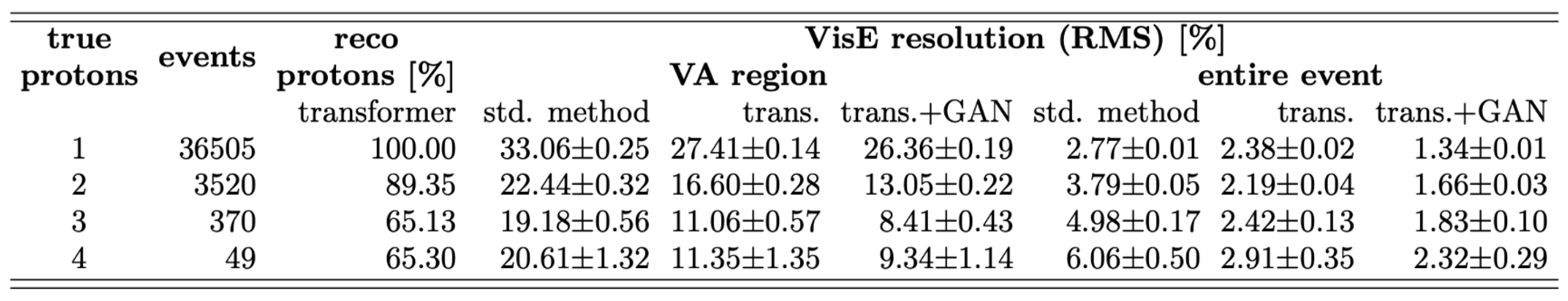

The table shows the performance of the standard method, the transformer model, and the transformer model together with the generative adversarial network (GAN) in reconstructing the visible energy (VisE) across varying proton multiplicities (1-4), within both the vertex-activity (VA) region and the entirety of the event. The resolution is calculated as $(\mathrm{VisE}_{\mathrm{true}} - \mathrm{VisE}_{\mathrm{reco}}) / \mathrm{VisE}_{\mathrm{true}}$.
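The resolution in this caption is a per-event fractional residual, which the short Python sketch below computes. The function name and the energy values are invented for illustration and are not results from the paper. Summarising the residuals by their mean and standard deviation is a common convention assumed here, not something the caption specifies.

```python
import statistics


def fractional_residual(vis_e_true, vis_e_reco):
    """(VisE_true - VisE_reco) / VisE_true for a single event."""
    if vis_e_true == 0:
        raise ValueError("true visible energy must be non-zero")
    return (vis_e_true - vis_e_reco) / vis_e_true


# Made-up true and reconstructed visible energies, in arbitrary units.
true_energies = [100.0, 200.0, 50.0]
reco_energies = [90.0, 210.0, 50.0]

residuals = [
    fractional_residual(t, r) for t, r in zip(true_energies, reco_energies)
]

# Assumed summary: the mean indicates bias, the spread indicates resolution.
bias = statistics.mean(residuals)
spread = statistics.stdev(residuals)
```

A positive residual means the reconstruction underestimated the true visible energy, and a negative one means it overestimated it.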

By providing a more precise and detailed view of the interaction vertex, this work lays the foundation for improved accuracy in neutrino-related measurements. The results, presented as a table in Figure 2, were evaluated on a statistically independent dataset of neutrino interactions under a selected nuclear model, and the proposed approach outperforms the standard method in reconstructing the neutrino energy. The deep-learning approach presented in this study has significant implications for future high-precision long-baseline neutrino experiments [1, 2]: it can reduce systematic errors, avoid model dependence, and improve the neutrino energy resolution. This improvement directly influences the sensitivity to leptonic charge-parity (CP) violation and to the neutrino oscillation parameters, which are crucial for advancing our understanding of fundamental physics [4].

References

[1] Abi, B. et al. Long-baseline neutrino oscillation physics potential of the DUNE experiment. Eur. Phys. J. C 80, 978 (2020).

[2] Abe, K. et al. Hyper-Kamiokande design report. Preprint at https://arxiv.org/abs/1805.04163 (2018).

[3] Vaswani, A. et al. Attention is all you need. Preprint at https://arxiv.org/abs/1706.03762 (2017).

[4] Ershova, A. et al. Role of deexcitation in the final-state interactions of protons in neutrino-nucleus interactions. Phys. Rev. D 108, 112008 (2023).

