Giving autonomous vehicles the ability to hear

Published in Electrical & Electronic Engineering, Computational Sciences, and Mechanical Engineering

How it started: Sound, autonomy, and urban intelligence

When we imagine autonomous vehicles, most of us picture cameras, radars, and LIDAR sensors scanning the road ahead. What we rarely consider is hearing, a crucial sensory modality in human driving. In many situations, human drivers also rely on sounds such as honking, sirens, barking dogs, or children playing to make safe driving decisions.

This project was born from a simple yet powerful question:

Can autonomous vehicles benefit from environmental sound recognition, especially in urban environments within smart cities?

And if so, could we make it work on low-cost embedded systems, like the Raspberry Pi?

The motivation: Why a new dataset was necessary

During my master’s research, we found a clear gap in the available data: while there are many datasets for environmental sound recognition (ESR), none were designed for real-world autonomous vehicle applications.

Most existing datasets were:

- Too general (not vehicle-specific);

- Not representative of urban noise conditions within the context of smart cities;

- Not structured for edge deployment on devices with limited computing power.

At the same time, sound recognition had proven effective in areas like smart homes, healthcare, and wildlife monitoring. It was time to bring hearing to mobility.

Building the US8K_AV dataset

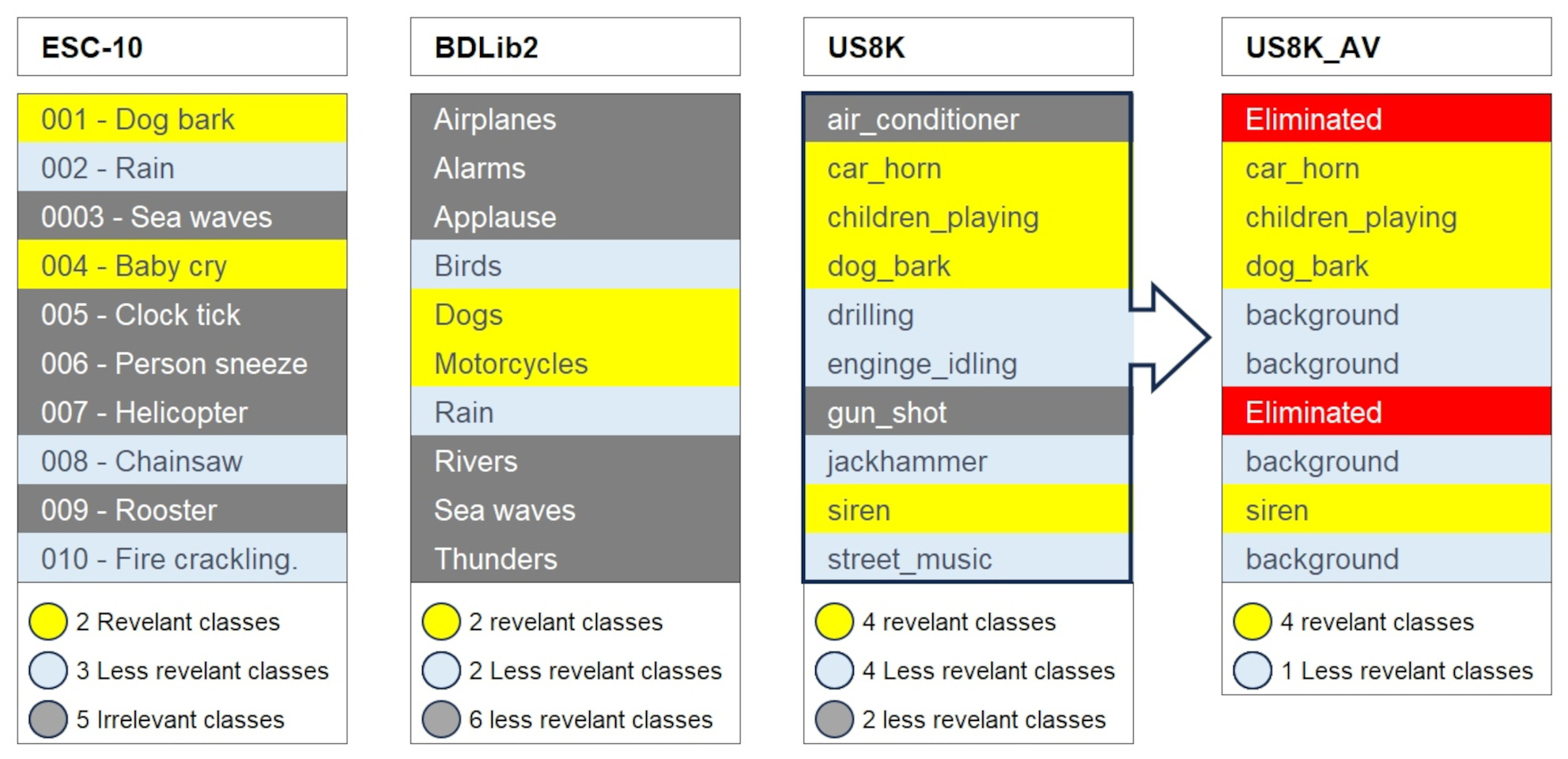

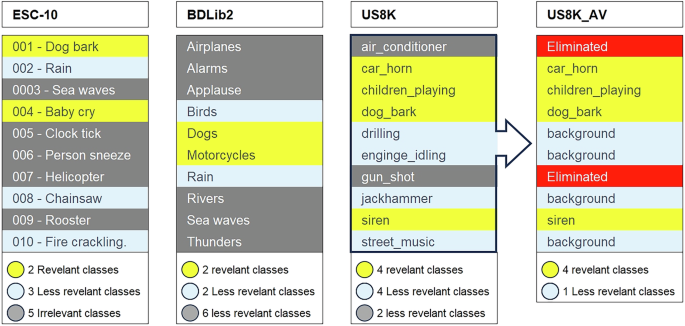

We started by adapting the well-known UrbanSound8K (US8K) dataset — a standard benchmark in ESR — to better fit the needs of autonomous driving.

Step 1: Filtering irrelevant classes

Classes like 'air_conditioner' and 'gun_shot' were considered irrelevant in the context of urban mobility and removed from the dataset.

Step 2: Creating the ‘background’ class

Some classes (e.g., 'drilling', 'engine_idling', 'jackhammer', 'street_music') were merged into a new class called 'background', representing general urban noise.
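Taken together, Steps 1 and 2 amount to a label-remapping pass over the UrbanSound8K metadata. The sketch below is an illustration, not the authors' code. It assumes the ten standard US8K class names and represents each clip as a hypothetical `(filename, class_name)` pair.

```python
from typing import Optional

# Step 1: classes considered irrelevant to urban mobility and dropped.
DROPPED = {"air_conditioner", "gun_shot"}

# Step 2: classes merged into the new 'background' class (general urban noise).
MERGED_INTO_BACKGROUND = {"drilling", "engine_idling", "jackhammer", "street_music"}


def remap_label(us8k_class: str) -> Optional[str]:
    """Map an original US8K class name to its new label, or None if dropped."""
    if us8k_class in DROPPED:
        return None
    if us8k_class in MERGED_INTO_BACKGROUND:
        return "background"
    # Remaining classes (car_horn, children_playing, dog_bark, siren) are kept.
    return us8k_class


def remap_dataset(clips: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Relabel (filename, class_name) pairs, discarding clips of dropped classes."""
    out = []
    for filename, us8k_class in clips:
        label = remap_label(us8k_class)
        if label is not None:
            out.append((filename, label))
    return out


# Example with hypothetical filenames.
print(remap_dataset([("a.wav", "gun_shot"), ("b.wav", "drilling"), ("c.wav", "siren")]))
```

Under these assumptions, the ten US8K classes collapse to five labels (background, car_horn, children_playing, dog_bark, siren). The sixth class, silence, is not produced by remapping. It comes from the new recordings described in Step 3.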

Step 3: Adding a new class — ‘silence’

Instead of treating silence as just low-volume audio, we sourced real-world silence samples from diverse locations, annotated them, and included them as a new class. This allows models to actively recognize and react to quiet environments — useful for event segmentation, baseline calibration, and power-saving mechanisms.

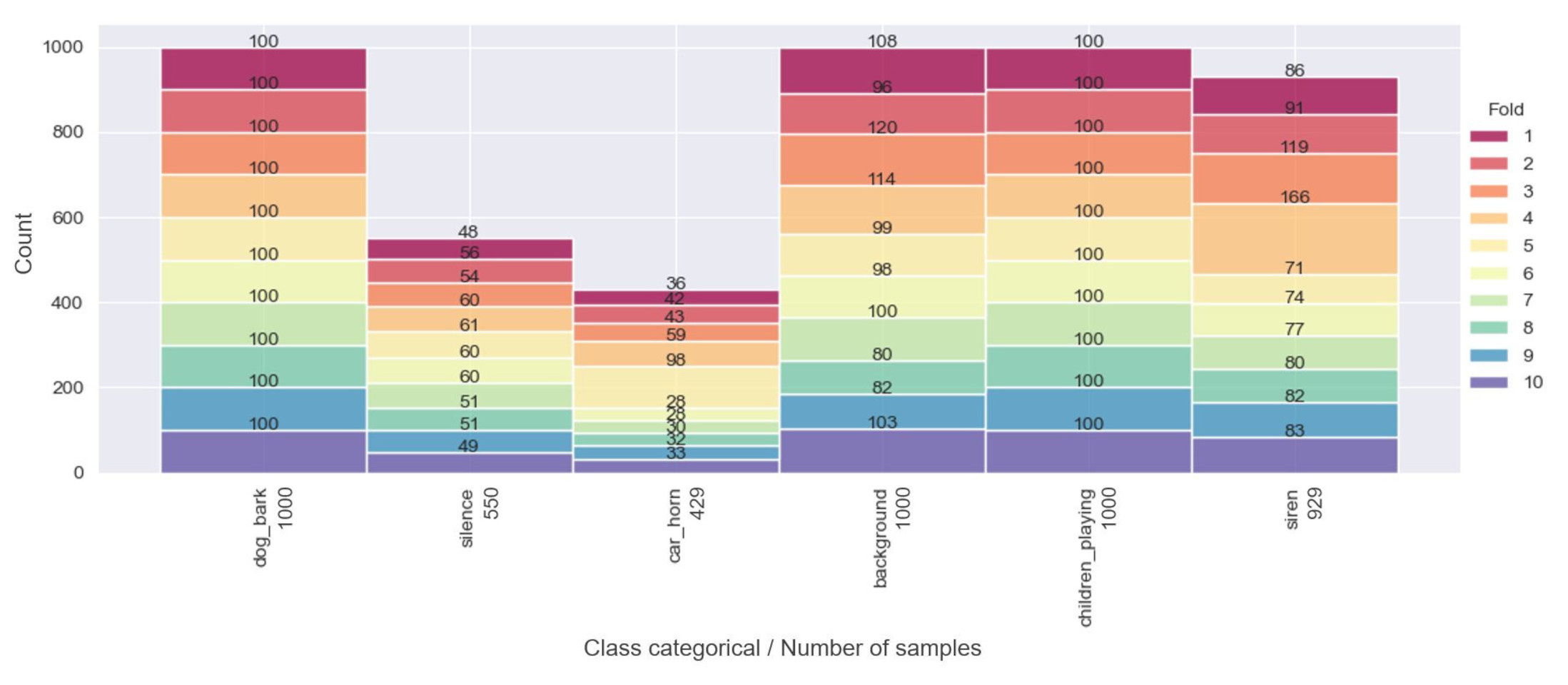

Step 4: Preserving structure and preventing data leakage

The dataset was carefully split into 10 folds, ensuring that all slices from a single audio source were placed in the same fold. This prevents the overly optimistic results that arise when near-identical slices of the same recording appear in both the training and test sets.
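One way to enforce this constraint is to assign whole source recordings to folds, rather than individual slices. The sketch below assumes the UrbanSound8K filename convention `[fsID]-[classID]-[occurrenceID]-[sliceID].wav`, where `fsID` identifies the source recording. It uses a greedy size-balancing heuristic chosen purely for illustration and does not claim to reproduce how the US8K_AV folds were actually built. Folds are numbered from 0 here, unlike the `fold1` to `fold10` directories of US8K.

```python
from collections import defaultdict


def source_id(filename: str) -> str:
    """Return the source-recording ID (fsID), the first field of a US8K-style name."""
    return filename.split("-")[0]


def assign_folds(filenames: list[str], n_folds: int = 10) -> dict[str, int]:
    """Assign each slice to a fold so that all slices of one source share a fold.

    Greedy heuristic: place the largest source groups first, each into the
    currently smallest fold, to keep fold sizes roughly balanced.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for name in filenames:
        groups[source_id(name)].append(name)

    fold_sizes = [0] * n_folds
    assignment: dict[str, int] = {}
    # Largest groups first; ties broken by ID so the result is deterministic.
    for sid, members in sorted(groups.items(), key=lambda kv: (-len(kv[1]), kv[0])):
        fold = min(range(n_folds), key=lambda f: fold_sizes[f])
        for name in members:
            assignment[name] = fold
        fold_sizes[fold] += len(members)
    return assignment
```

Because slices are never split from their source group, a model evaluated on fold k never sees another slice of the same recording during training.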

The result is the US8K_AV dataset:

- 4,908 annotated WAV files;

- 4.94 hours of audio;

- 6 meaningful sound classes;

- Designed for embedded systems and real-world use.
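As a quick consistency check on these figures, the average clip length comes to roughly 3.6 s. This fits UrbanSound8K's slices of at most 4 s. Because 4.94 h is itself a rounded value, the result is approximate:

$$
\bar{d} = \frac{4.94\ \text{h} \times 3600\ \text{s/h}}{4908\ \text{files}} = \frac{17\,784\ \text{s}}{4908} \approx 3.6\ \text{s per file}
$$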

Model results and real-time testing

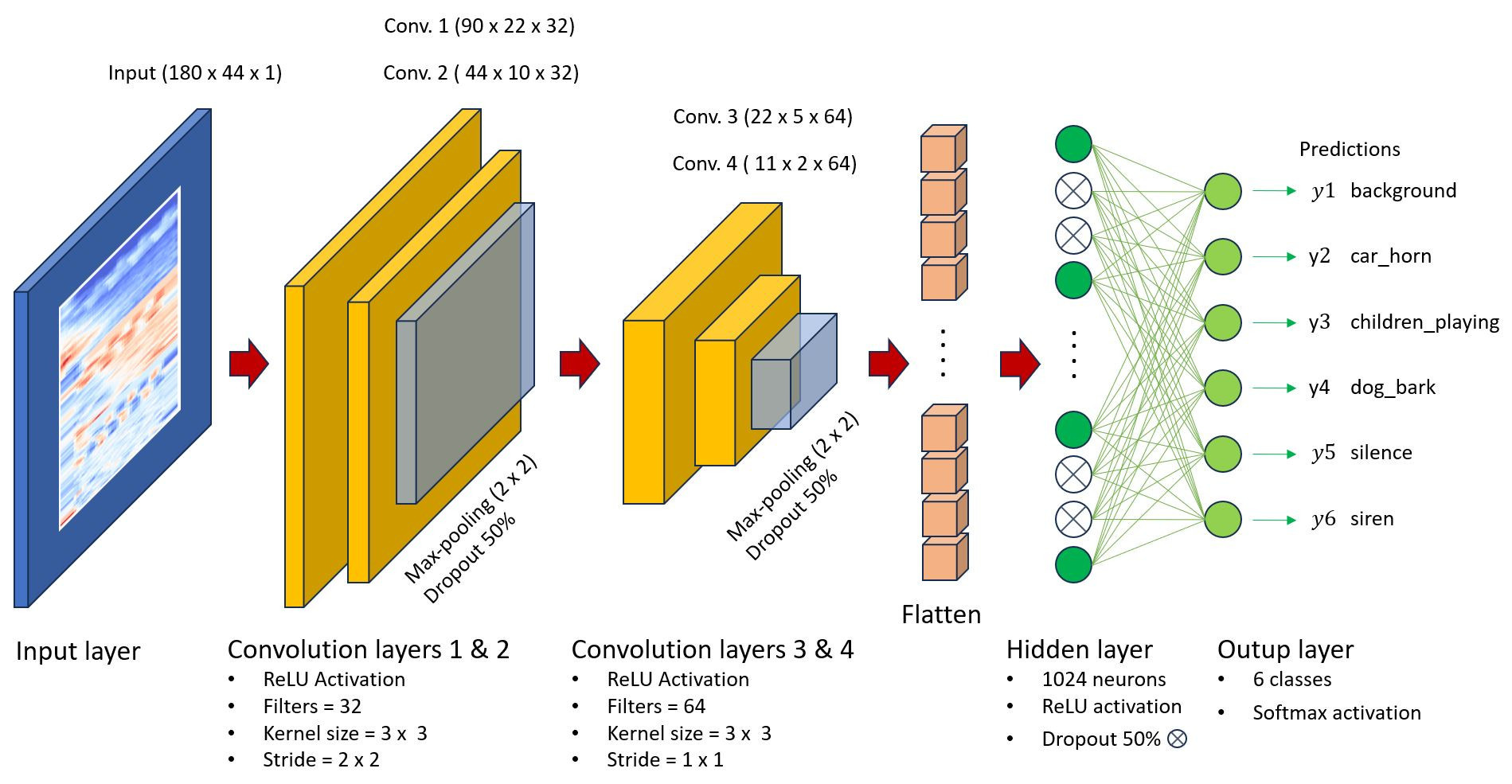

We benchmarked several classifiers — including traditional machine learning algorithms (SVM, Logistic Regression, Random Forest) and deep learning architectures (ANN, CNN 1D, CNN 2D).

We found that a 2D Convolutional Neural Network (CNN 2D) trained on log-mel spectrograms (with their derivatives) yielded the best trade-off between accuracy, memory usage and speed, even when deployed on a Raspberry Pi 4.
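The "derivatives" of a log-mel spectrogram are usually delta features, a regression estimate of how each mel band changes over time. The sketch below is a minimal, dependency-free illustration of the standard delta formula. It assumes a window of N = 2 frames and edge padding. The exact feature configuration used in the thesis (number of mel bands, delta window, whether second-order deltas were included) is not stated here, so the parameters and the three-channel stacking are assumptions.

```python
def deltas(frames: list[list[float]], N: int = 2) -> list[list[float]]:
    """Compute delta features for a (time x bands) log-mel spectrogram.

    Standard regression formula:
        d_t = sum_{n=1..N} n * (c_{t+n} - c_{t-n}) / (2 * sum_{n=1..N} n^2)
    Edge frames are repeated so every frame has N neighbours on each side.
    """
    T = len(frames)
    denom = 2 * sum(n * n for n in range(1, N + 1))

    def frame(t: int) -> list[float]:
        # Clamp the time index to [0, T-1] (edge padding).
        return frames[min(max(t, 0), T - 1)]

    out = []
    for t in range(T):
        row = []
        for b in range(len(frames[t])):
            num = sum(n * (frame(t + n)[b] - frame(t - n)[b]) for n in range(1, N + 1))
            row.append(num / denom)
        out.append(row)
    return out


def stack_channels(log_mel: list[list[float]]) -> list[list[list[float]]]:
    """Stack log-mel, delta and delta-delta as three input channels for a 2D CNN.

    Including delta-delta is an assumption made for illustration.
    """
    d1 = deltas(log_mel)
    d2 = deltas(d1)
    return [log_mel, d1, d2]
```

On a band whose value rises linearly by 1 per frame, the interior deltas equal 1.0, which is the slope. In practice, audio libraries such as librosa provide vectorized delta implementations.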

Key highlights:

- CNN 2D achieved >80% accuracy on real-world data;

- Response time <50 ms on Raspberry Pi;

- Significant F1-score improvements over the original US8K for relevant classes.

👉🏼 So far, performance has improved across all relevant categories, validating our methodology of merging and adding classes.
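Because the comparison with the original US8K is made per class, the per-class F1-score is worth recalling. It is the harmonic mean of precision and recall for one class, treating that class as positive and all others as negative. The minimal sketch below works from two label lists. The example labels are made up for illustration.

```python
def per_class_f1(y_true: list[str], y_pred: list[str]) -> dict[str, float]:
    """Return the one-vs-rest F1-score of every class seen in y_true or y_pred."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    scores = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        # F1 = 2TP / (2TP + FP + FN), equivalent to the harmonic mean of
        # precision TP/(TP+FP) and recall TP/(TP+FN).
        denom = 2 * tp + fp + fn
        scores[cls] = 2 * tp / denom if denom else 0.0
    return scores


# Hypothetical predictions: one siren misclassified as dog_bark.
print(per_class_f1(["siren", "siren", "dog_bark", "silence"],
                   ["siren", "dog_bark", "dog_bark", "silence"]))
```

Here one siren clip is misclassified as dog_bark. This costs siren some recall and dog_bark some precision, so both score 2/3, while silence scores 1.0.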

Real-world applications

This dataset was designed with practical use cases in mind, especially urban autonomous vehicles in smart cities (for example, the Citybot innovation project).

Use cases include:

- 🚸 Children playing behind a fence — a camera can’t see them, but a microphone can hear them;

- 🐕 Dog barking or 🚗 honking — useful when approaching intersections or blind spots;

- 🚨 Siren detection — allows earlier response to emergency vehicles than vision-based sensors alone.

The inclusion of a silence class also enables systems to identify periods of inactivity, helping with energy efficiency and segmentation.
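To illustrate the segmentation use, a stream of per-window predictions can be split into sound events wherever the classifier reports silence. The sketch below only does the grouping. It assumes hypothetical fixed-length, non-overlapping analysis windows of `hop_s` seconds (1 s by default, an arbitrary choice). Any power-saving logic, such as pausing heavier processing during silence, would sit on top of it and is not shown.

```python
def segment_events(predictions: list[str], hop_s: float = 1.0) -> list[tuple[float, float, list[str]]]:
    """Group consecutive non-silence window predictions into events.

    Returns (start_s, end_s, labels) tuples, where labels holds the
    per-window predictions inside each event.
    """
    events = []
    start = None
    for i, label in enumerate(predictions):
        if label != "silence" and start is None:
            start = i  # first non-silence window opens an event
        elif label == "silence" and start is not None:
            events.append((start * hop_s, i * hop_s, predictions[start:i]))
            start = None  # a silence window closes the event
    if start is not None:  # the stream ended in the middle of an event
        events.append((start * hop_s, len(predictions) * hop_s, predictions[start:]))
    return events


print(segment_events(["silence", "siren", "siren", "silence", "dog_bark", "background"]))
```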

Challenges and lessons learned

One unexpected challenge?

Finding real silence...

True silence in urban settings is rare, and collecting well-documented, noise-free recordings took substantial time and curation.

Another lesson: balancing scientific rigor with practical deployment. Our goal was not just to publish another dataset, but to create something usable and replicable — something that can run on a Raspberry Pi and still be meaningful in the real world.

An invitation to the community

We see US8K_AV not as a final product, but as a foundation for future work.

We invite researchers to:

- Add new classes relevant to other vehicle types;

- Expand the dataset with recordings from other regions;

- Use it in different edge-computing environments;

- Explore sensor fusion combining acoustic and visual data.

🔗 The source code, thesis, and dataset are all publicly available:

- 🖥️ GitHub

- 📰 Master's thesis by André Luiz Florentino at Centro Universitário FEI

- 💽 US8K_AV available at Harvard Dataverse

Final thoughts: Why hearing matters

Autonomous vehicles are becoming increasingly capable. But without the ability to hear, they are still missing an essential sense — one that humans use every day to stay safe, avoid accidents, and make informed decisions.

Our hope is that this dataset inspires others to think beyond vision and radar — and consider sound as a rich, underutilized source of environmental context.

Because sometimes...

the most important thing to know... is what you hear!


Scientific Data

A peer-reviewed, open-access journal for descriptions of datasets, and research that advances the sharing and reuse of scientific data.
