Harnessing Intrinsic Noise in Memristor Hopfield Neural Networks for Combinatorial Optimization

Published in Electrical & Electronic Engineering

Solving combinatorial optimization problems, which have broad industry applications, is still very challenging with today’s compute hardware, especially when the problems fall within the NP hard complexity class, where only exponentially expensive solutions are known. So far, different hardware solutions using fully digital, quantum and optical approaches have been proposed, but there is room to improve speed and energy efficiency. Ideally, such hardware solutions would work at room temperature and would be fabricated with today’s technology, in contrast to expensive and cryogenically cooled quantum systems.

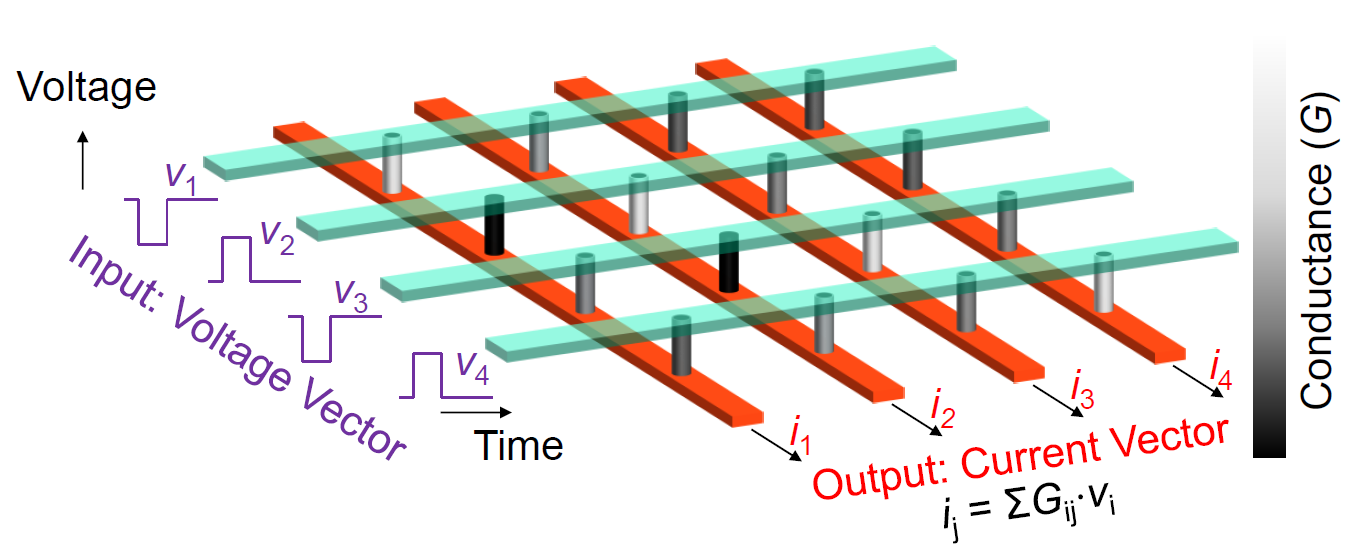

Recently, amongst other applications, memristor-based crossbar arrays have caught great interest for machine learning applications due to the ability to perform highly parallel operations at high throughput and low power consumption. This brought us to the question: could we also use this funky piece of non-volatile hardware to solve combinatorial optimization problems?

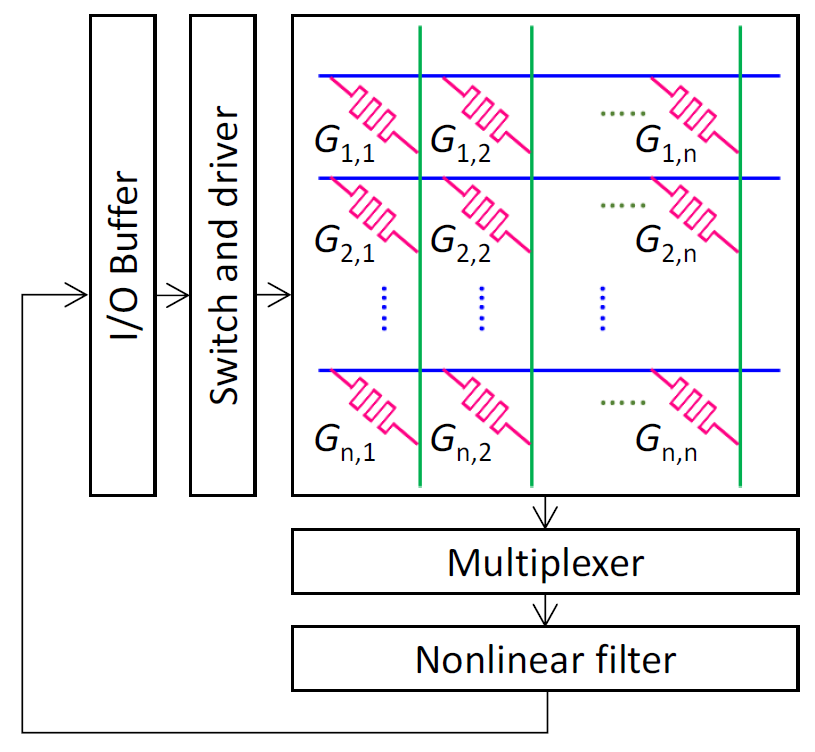

This question kicked off the start of my summer internship project in 2018. I temporarily joined Hewlett Packard Labs’ Rebooting Computing team and started collaborating with an interdisciplinary set of researchers, involving both colleagues in labs and from various universities. After a literature study we quickly figured out that one of the first proposals of a neural network algorithm, the discrete Hopfield Neural Network (HNN), seems to be a perfect match. This algorithm had been proposed by John Hopfield in the 80s, before many of the principal researchers in our team had been born. Similar to the other machine learning applications Labs had been investigating, the updating mechanism of that iterative algorithm can be easily implemented with a memristor crossbar array, and as John Hopfield had pointed out back in the day, it is also an appealing approach to solve combinatorial optimization problems. We baptized our memristor HNN “mem-HNN”, took advantage of a prototype memristor crossbar array fabricated with Foundry CMOS and Labs’ custom memristor material stack, and off we went into the lab.

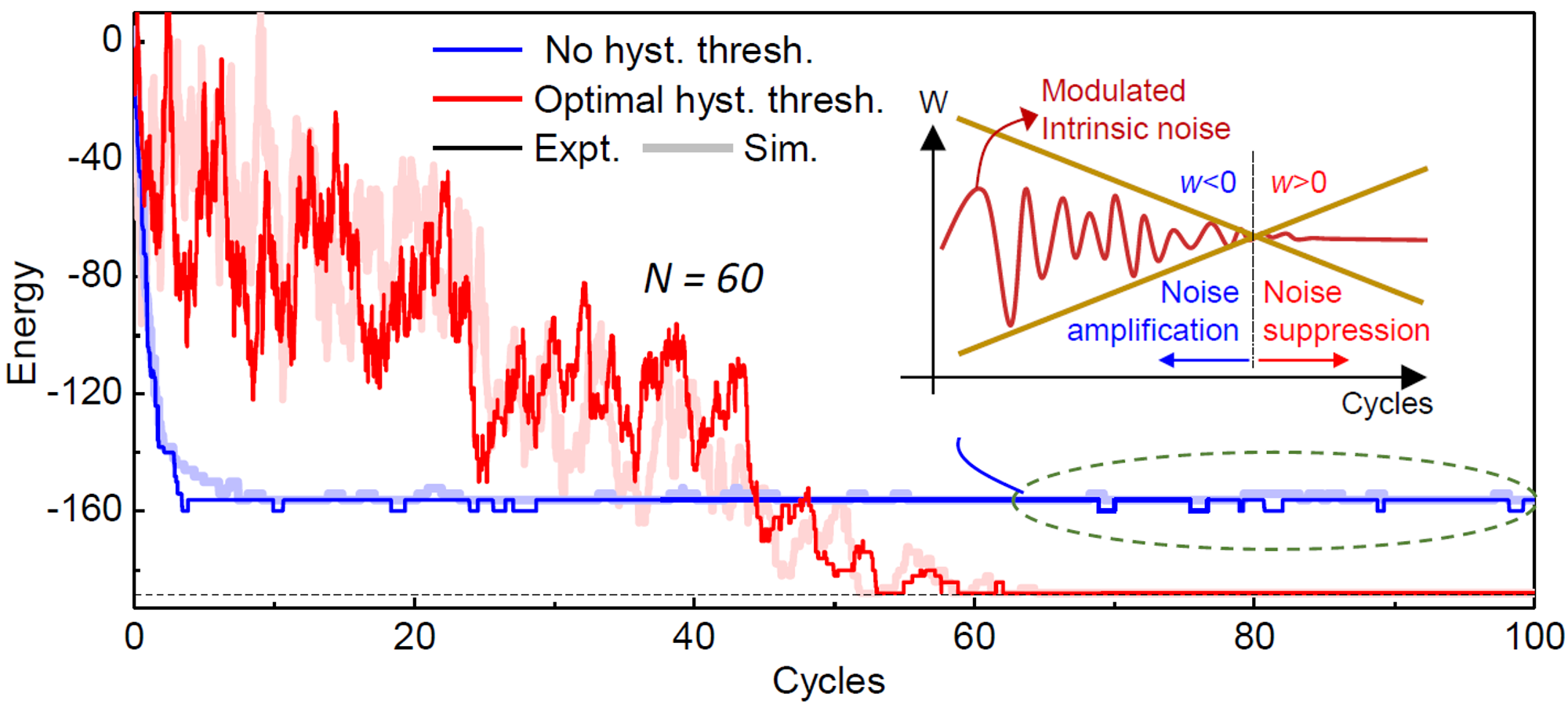

Quickly did we notice we still had some hiccups to solve. Software implementations of HNNs get notoriously stuck in local minima, which often prevents the system from finding the optimal solution we actually care about (the global minimum). In digital algorithms, this bad habit gets solved by inserting a bit of randomness into the system, kicking the system out of undesired local minima. From the hardware perspective, that’s pretty expensive as digital random number generators have a substantial footprint and energy. That’s where nature helped us out with our analog mem-HNN hardware: analog hardware is accurate but not always precise, with noise present from multiple sources. This noise comes for free, doesn’t require any additional hardware real-estate or energy, and after we identified an approach based on a hysteretic threshold function to amplify or dampen the noise as desired, we were able to obtain state-of-the-art performance with our experimental prototype.

We found that using such analog hardware to implement heuristic approaches (like Hopfield networks) to solve combinatorial optimization problems was an excellent fit of technology to algorithm. At scaled CMOS nodes, we estimated that the mem-HNN substantially improves performance and scalability compared to current quantum annealing approaches, fiber-based optical accelerators, or modern digital technologies. In fact, we forecast over four orders of magnitude higher solution throughput per power consumption. All of this while operating close to room temperature and taking advantage of existing semiconductor technology and the rapidly emerging analogue non-volatile memristor technology.

For more details, please see our recent paper in Nature Electronics:

Follow the Topic

-

Nature Electronics

This journal publishes both fundamental and applied research across all areas of electronics, from the study of novel phenomena and devices, to the design, construction and wider application of electronic circuits.

Please sign in or register for FREE

If you are a registered user on Research Communities by Springer Nature, please sign in

Nice work. Very impressive!

Thanks for the comments, Dr. Wang!