Why does it matter?

The quality of surgery has a profound impact on patients’ outcomes and quality of life. However, it remains unclear which specific surgical movements by surgeons have the greatest impact on surgical outcomes, and why some surgeons have better results than others. Understanding these factors may improve surgical outcomes and the quality of care delivery. Our research, which focuses on deconstructing surgery to the granular level of surgical gestures, offers a promising approach to answering these questions.

What are surgical gestures?

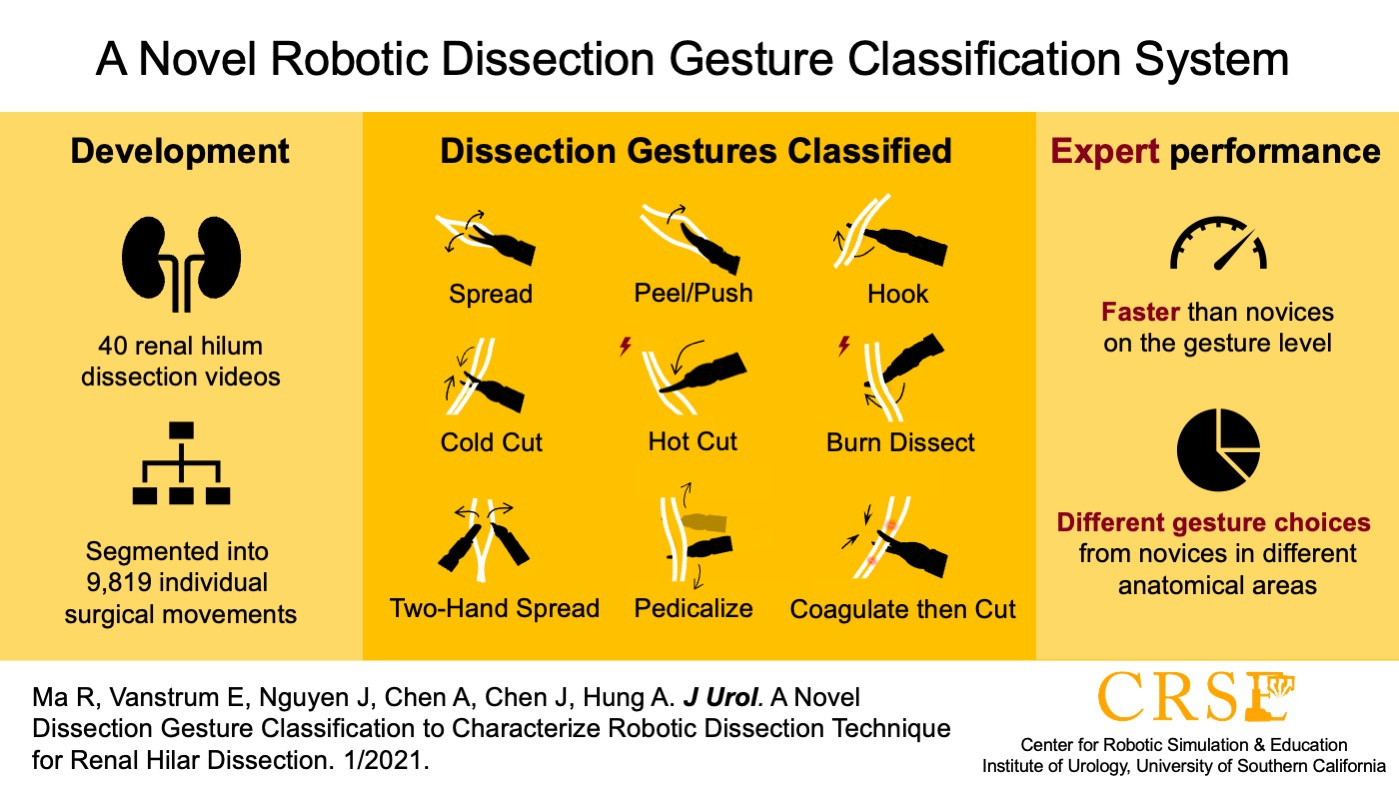

We define surgical gestures as the smallest meaningful interactions of a surgical instrument with human tissue. These gestures typically last only a few seconds and are preceded and followed by a brief period without motion. We developed this concept because we observed that there was no widely accepted terminology describing the different instrument movements during robotic surgery, which hindered understanding, feedback, and improvement in the operating room. By interviewing expert surgeons and analyzing hundreds of surgical videos, we created a system that categorizes surgical movements into nine dissection gestures and four supporting gestures. The classification takes into account factors such as the direction of movement, the application of energy, and the number of instruments used. We initially validated this classification system on 40 surgical videos of renal hilar dissection during robot-assisted partial nephrectomy and found that it could cover all movements in this procedure.1 We also discovered that surgeons of different experience levels use different surgical gestures, an insight that provides actionable teaching points for novices. However, more work was needed to understand how different surgical movements affect surgical outcomes.
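As a hypothetical illustration of the "preceded and followed by a brief period without motion" criterion (not a description of how gestures were annotated in this work), the sketch below assumes a per-frame instrument speed signal is available and splits it into candidate gesture segments wherever the instrument stays still for several consecutive frames. The function name, the input signal, and both thresholds (`motion_threshold`, `min_pause`) are assumptions made for illustration.

```python
from typing import List, Tuple


def segment_by_pauses(
    speeds: List[float], motion_threshold: float = 0.5, min_pause: int = 3
) -> List[Tuple[int, int]]:
    """Split a per-frame instrument speed trace into candidate gesture segments.

    A frame is "moving" when its speed exceeds motion_threshold. A segment is
    closed once at least min_pause consecutive still frames follow it; shorter
    pauses are absorbed into the segment. Returns (start, end) frame indices,
    with end exclusive.
    """
    segments: List[Tuple[int, int]] = []
    start = None  # first moving frame of the open segment, if any
    last_moving = -1  # most recent moving frame of the open segment
    still_run = 0  # consecutive still frames since last_moving
    for i, speed in enumerate(speeds):
        if speed > motion_threshold:
            if start is None:
                start = i
            last_moving = i
            still_run = 0
        elif start is not None:
            still_run += 1
            if still_run >= min_pause:
                segments.append((start, last_moving + 1))
                start, still_run = None, 0
    if start is not None:  # signal ended mid-gesture
        segments.append((start, last_moving + 1))
    return segments


# Hypothetical speed trace: two bursts of motion separated by a long pause.
trace = [0, 0, 1, 1, 1, 0, 0, 0, 2, 2, 0, 0, 0, 0]
print(segment_by_pauses(trace))  # [(2, 5), (8, 10)]
```

Pauses shorter than `min_pause` frames are absorbed into the surrounding segment, so a brief hesitation does not split one gesture into two; in practice the thresholds would need tuning to the frame rate and to whatever kinematic or video-derived signal is actually available.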

What did we find in this study?

Radical prostatectomy is the most common treatment for prostate cancer and an ideal test case for evaluating the relationship between surgical gestures and patient outcomes. This procedure has a concrete functional outcome that is strongly linked to surgical performance: erectile function. Over 60% of men experience erectile dysfunction after surgery due to injury to the nerves that run alongside the prostate.2 During a nerve-sparing procedure, surgeons carefully peel these nerves away from the prostate. Even minute changes in a surgeon's dissection technique can have a significant impact on a patient's erectile function recovery.

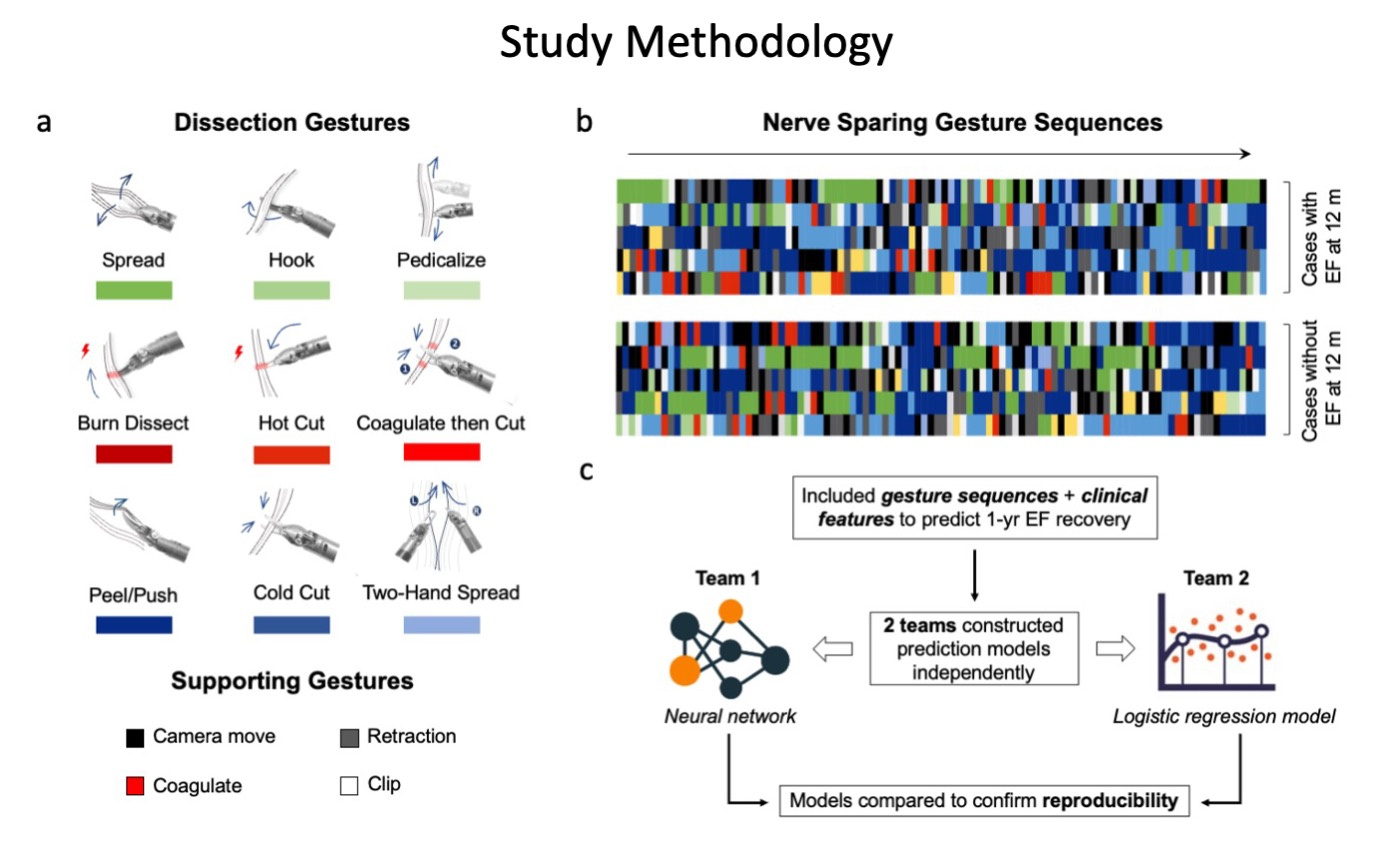

In this study, we evaluated 34,323 individual gestures performed in 80 nerve-sparing procedures at two international medical centers and identified three key findings:

a) Surgical gesture selection impacts surgical outcomes. Less use of hot cut and more use of peel/push were statistically associated with a better chance of erectile function recovery. One plausible explanation is that extensive energy use during the procedure can damage nearby nerves.

b) Surgical gesture sequences can predict surgical outcomes. Two teams independently built distinct machine learning models that use gesture sequences and patient clinical features to predict erectile function recovery. In both models, gesture sequences were able to predict 1-year erectile function recovery with reasonable accuracy. This dual-team approach addresses reproducibility in a way that has rarely been done in the clinical literature, although the machine learning research community has widely advocated it as a means of increasing the robustness of conclusions drawn with machine learning assistance.3

c) Different execution of the same gestures can result in different outcomes. Specifically, given similar surgical gestures, erectile function recovery depended on surgeon experience. This suggests that while gesture selection is important in determining surgical outcomes, skillful execution of those gestures also matters. The finding is consistent with our earlier simulation study, in which the efficacy and error rates of the same gesture types differed between novices, intermediates, and experts.4 It would be valuable to replicate that study in live surgery and observe whether the same differences appear.
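Finding (a) rests on how often each gesture type appears in a case. A minimal sketch of that descriptive step, assuming each case is stored as a list of gesture labels, computes per-case gesture proportions and averages them by outcome group. The toy cases and the catch-all `other` label are hypothetical, not study data, and this is only a descriptive comparison: the statistical association reported in the study requires a proper model and significance testing.

```python
from collections import Counter
from typing import Dict, List


def gesture_proportions(sequence: List[str]) -> Dict[str, float]:
    """Fraction of one case's gestures that belong to each gesture type."""
    if not sequence:
        return {}
    counts = Counter(sequence)
    return {gesture: n / len(sequence) for gesture, n in counts.items()}


def mean_proportion(cases: List[List[str]], gesture: str) -> float:
    """Average share of `gesture` across a group of cases (0 where absent)."""
    if not cases:
        raise ValueError("need at least one case")
    return sum(gesture_proportions(c).get(gesture, 0.0) for c in cases) / len(cases)


# Hypothetical toy cases grouped by outcome; NOT data from the study.
recovered = [
    ["peel/push", "peel/push", "hot cut", "other"],
    ["peel/push", "other", "peel/push", "peel/push"],
]
not_recovered = [
    ["hot cut", "hot cut", "peel/push", "other"],
    ["hot cut", "other", "hot cut", "peel/push"],
]

for g in ("hot cut", "peel/push"):
    print(g, mean_proportion(recovered, g), mean_proportion(not_recovered, g))
# hot cut 0.125 0.5
# peel/push 0.625 0.25
```

Using proportions rather than raw counts keeps longer and shorter dissections comparable, since the number of gestures per case varies.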
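For finding (b), the two teams' model architectures are not described here. One common way to turn a variable-length gesture sequence into fixed-length model input, shown as an assumption rather than either team's approach, is to count n-grams (runs of n consecutive gestures) over a shared vocabulary. The function names and toy sequences below are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

NGram = Tuple[str, ...]


def ngram_features(sequence: List[str], n: int = 2) -> Dict[NGram, int]:
    """Count each run of n consecutive gestures in one case."""
    grams = (tuple(sequence[i : i + n]) for i in range(len(sequence) - n + 1))
    return dict(Counter(grams))


def build_vocab(cases: List[List[str]], n: int = 2) -> List[NGram]:
    """Sorted list of every n-gram seen in the (training) cases."""
    return sorted({gram for case in cases for gram in ngram_features(case, n)})


def to_vector(features: Dict[NGram, int], vocab: List[NGram]) -> List[int]:
    """Project sparse n-gram counts onto the fixed vocabulary order."""
    return [features.get(gram, 0) for gram in vocab]


# Hypothetical gesture sequences; NOT data from the study.
train_cases = [
    ["peel/push", "hot cut", "peel/push", "hot cut"],
    ["hot cut", "hot cut", "peel/push"],
]
vocab = build_vocab(train_cases)
X = [to_vector(ngram_features(case), vocab) for case in train_cases]
print(X)  # [[0, 1, 2], [1, 1, 0]]
```

Each resulting vector could be concatenated with a patient's clinical features and passed to a standard classifier. Bigrams capture which gesture tends to follow which, ordering information that plain proportions discard.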

How can surgical gestures be used to improve surgery in the future?

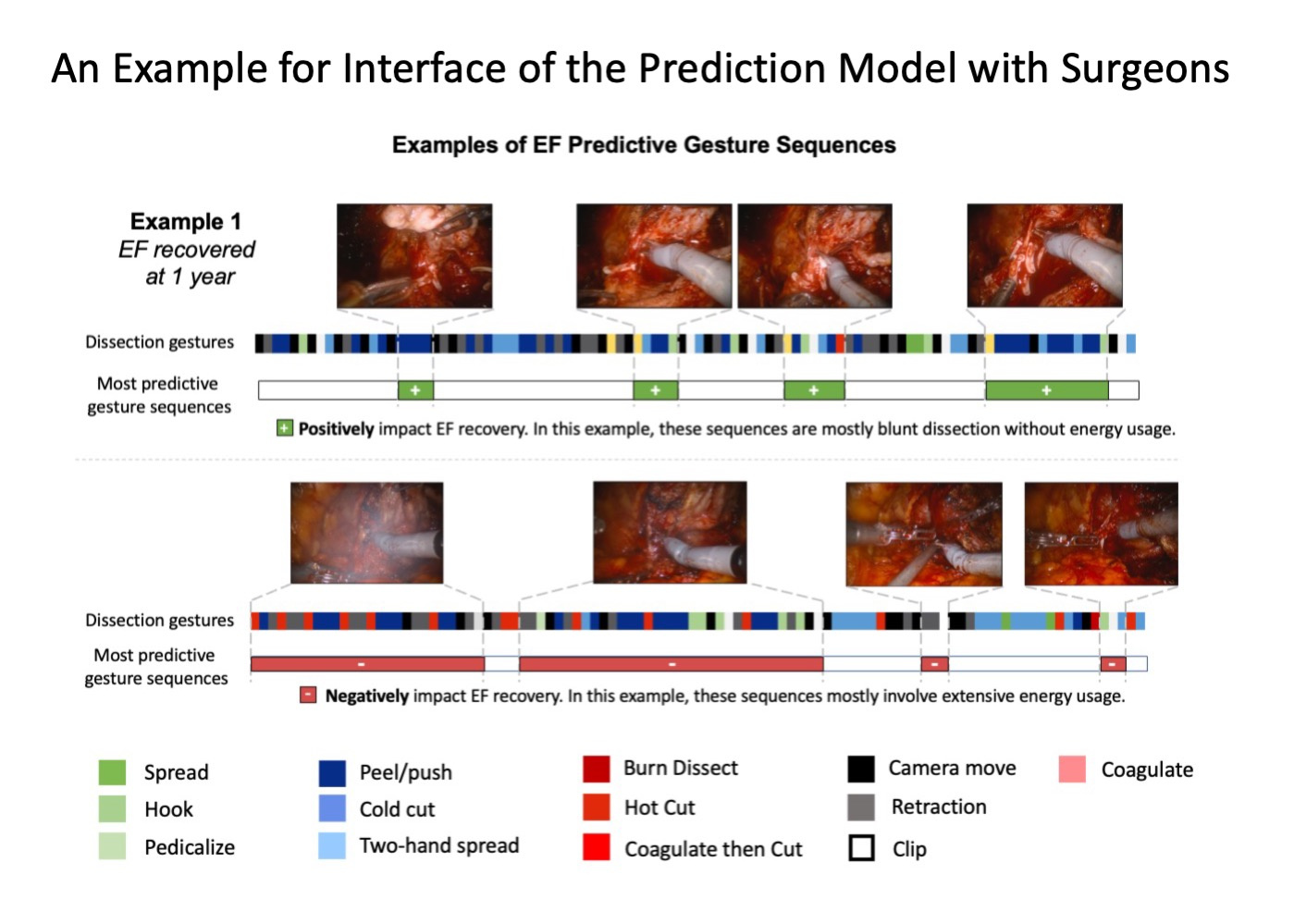

We initiated this study with the eventual aim of improving surgery. In our opinion, three aspects of our study may improve surgery in the future. First, the proposed surgical gesture classification system provides a standardized terminology for communication in surgical education. This is particularly important in the operating room, where clear communication is critical to avoiding mistakes that could harm patients. Second, a trained machine learning model that uses surgical gestures as input can provide feedback to surgeons immediately after surgery. Without such a system, surgeons must wait months or years for a patient’s full functional recovery to become evident, and this lag makes it difficult to assess the direct impact of their actions. In the accompanying figure, we illustrate a possible interface through which the prediction model could communicate with the operating surgeon. Such a setup could highlight the portions of surgical gestures that contribute positively or negatively to the final erectile function recovery, so that surgeons can modify their techniques accordingly. Finally, surgical gestures can be a useful tool for eventually automating robotic surgery. Automation of surgery will not happen overnight, and even the most advanced artificial intelligence (AI) models need to learn from the basics.5 As the smallest unit of surgery, surgical gestures can serve as the building blocks for AI models.
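A feedback interface like the one described above needs a way to attribute a prediction to individual gestures. For a linear model this is straightforward: each gesture type contributes its weight times its count. The sketch below assumes such a linear "recovery score" with made-up weights (`WEIGHTS` is hypothetical, not estimated from our data); a non-linear model would instead need a dedicated attribution method such as SHAP.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical per-occurrence weights for a linear "recovery score";
# illustrative only, NOT estimated from the study.
WEIGHTS: Dict[str, float] = {"peel/push": 0.25, "hot cut": -0.5}


def gesture_contributions(
    sequence: List[str], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Contribution of each gesture type to a linear score: weight * count.

    Positive values push the predicted score toward recovery, negative values
    away from it. Gesture types missing from `weights` contribute 0.0.
    Results are sorted by absolute contribution, largest first.
    """
    counts = Counter(sequence)
    contribs = {g: weights.get(g, 0.0) * n for g, n in counts.items()}
    return sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)


case = ["peel/push", "hot cut", "hot cut", "peel/push", "peel/push"]
for gesture, value in gesture_contributions(case, WEIGHTS):
    print(f"{gesture}: {value:+.2f}")
# hot cut: -1.00
# peel/push: +0.75
```

Sorting by absolute contribution puts the gestures with the largest estimated effect at the top, which is likely the ordering a surgeon-facing display would want.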

Takeaways

- Surgical gestures are the smallest meaningful unit of surgery and can be used to study it.

- Gesture selection and skillful execution are both important in predicting surgical outcomes.

- Gestures are helpful for anticipating surgical outcomes and providing actionable feedback to surgeons. Surgical gestures can also serve as the building blocks for AI-enabled automated surgery.

References

1. Ma, R. et al. A novel dissection gesture classification to characterize robotic dissection technique for renal hilar dissection. J. Urol. 205, 271–275 (2021).

2. US Preventive Services Task Force et al. Screening for prostate cancer: US Preventive Services Task Force recommendation statement. JAMA 319, 1901–1913 (2018).

3. Lambin, P. et al. Radiomics: the bridge between medical imaging and personalized medicine. Nat. Rev. Clin. Oncol. 14, 749–762 (2017).

4. Inouye, D. A. et al. Assessing the efficacy of dissection gestures in robotic surgery. J. Robot. Surg. (2022). doi:10.1007/s11701-022-01458-x.

5. Ma, R., Vanstrum, E. B., Lee, R., Chen, J. & Hung, A. J. Machine learning in the optimization of robotics in the operative field. Curr. Opin. Urol. 30, 808–816 (2020).


npj Digital Medicine

An online open-access journal dedicated to publishing research in all aspects of digital medicine, including the clinical application and implementation of digital and mobile technologies, virtual healthcare, and novel applications of artificial intelligence and informatics.
