In situ training of feed-forward and recurrent convolutional memristor networks

Published in Electrical & Electronic Engineering

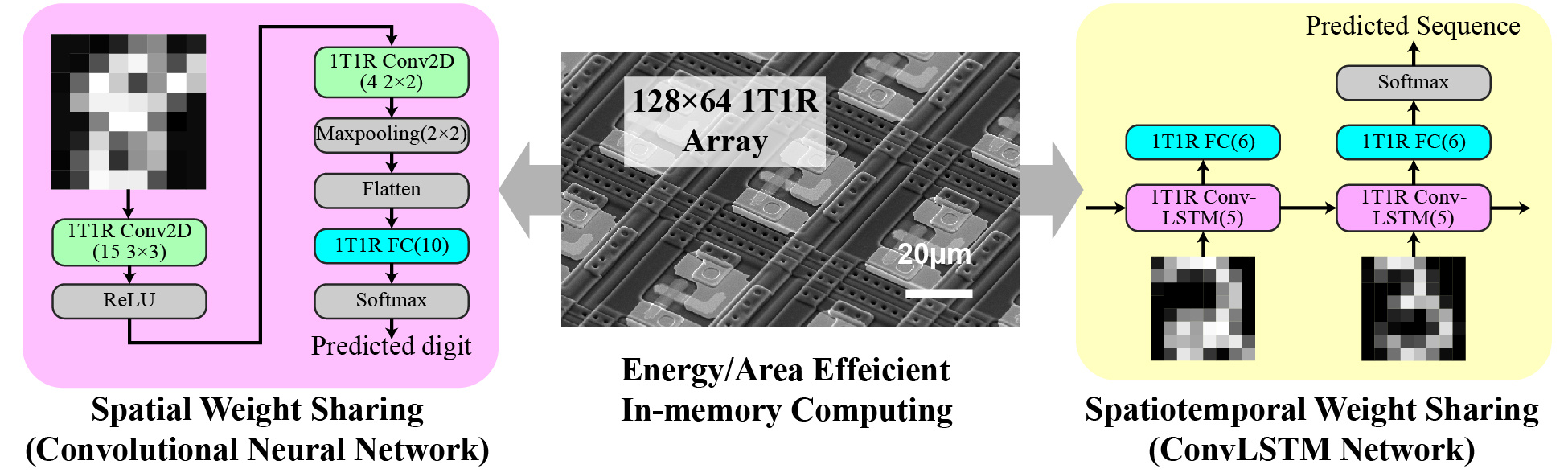

The memristor, an emerging analog memory capable of storing and processing information on the same device (via simple ‘compute-by-physics’), is now actively pursued by computing giants such as IBM, HPE, Intel, Huawei, and TSMC. Memristors have recently been used to physically implement the fully connected layers of neural networks, demonstrating high energy and area efficiency by overcoming the von Neumann bottleneck with processing-in-memory. However, state-of-the-art neural networks almost all benefit from weight sharing, the convolutional neural network (CNN) being the prime example. It had not yet been demonstrated whether memristors, with their intrinsic stochasticity and imperfect device yield, could meet the stringent requirements of networks with spatially, or even spatiotemporally, shared weights. If they could, such networks would combine the architectural advantage of weight sharing with the energy and area efficiency of processing-in-memory.
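The ‘compute-by-physics’ behind processing-in-memory can be illustrated with an idealized crossbar: each input is applied as a row voltage V_i, each device conducts a current V_i × G_ij by Ohm's law, and Kirchhoff's current law sums those currents along column j, so the column currents form a vector-matrix product in a single read. The Python sketch below assumes ideal devices (no wire resistance, read noise, or stuck cells) and represents signed weights with a differential pair of conductances, w ∝ G+ − G−. That mapping, the conductance range `g_min`/`g_max`, and all function names are illustrative assumptions, not the exact scheme used in the paper.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def crossbar_vmm(voltages: List[float], conductances: Matrix) -> List[float]:
    """Idealized crossbar read: column current I_j = sum_i V_i * G_ij."""
    if len(voltages) != len(conductances):
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    # Ohm's law gives each device current V_i * G_ij; Kirchhoff's current
    # law sums those currents on the shared column wire.
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


def to_differential_pair(weights: Matrix, g_min: float, g_max: float,
                         w_max: float) -> Tuple[Matrix, Matrix, float]:
    """Map signed weights onto two non-negative conductance arrays (G+, G-)."""
    scale = (g_max - g_min) / w_max  # siemens per unit of weight (assumed linear)
    g_pos = [[g_min + max(w, 0.0) * scale for w in row] for row in weights]
    g_neg = [[g_min + max(-w, 0.0) * scale for w in row] for row in weights]
    return g_pos, g_neg, scale


def analog_matvec(x: List[float], weights: Matrix, g_min: float = 1e-6,
                  g_max: float = 1e-4,
                  w_max: Optional[float] = None) -> List[float]:
    """Compute x @ weights by reading a differential pair of ideal crossbars."""
    if w_max is None:
        # Use the largest weight magnitude so every weight fits the range.
        w_max = max(abs(w) for row in weights for w in row) or 1.0
    g_pos, g_neg, scale = to_differential_pair(weights, g_min, g_max, w_max)
    i_pos = crossbar_vmm(x, g_pos)
    i_neg = crossbar_vmm(x, g_neg)
    # The shared g_min offset cancels, leaving scale * (x @ weights).
    return [(ip - ineg) / scale for ip, ineg in zip(i_pos, i_neg)]
```

For example, `analog_matvec([0.2, 0.1], [[1.0, -2.0], [0.5, 0.0]])` returns approximately `[0.25, -0.4]`, the same result as the digital product. Subtracting the two column currents cancels the common `g_min` baseline, which is why a differential pair is a convenient way to encode negative weights with strictly positive conductances.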

In this work, researchers from UMass Amherst, Hewlett Packard Labs, Syracuse University, Air Force Research Labs, Binghamton University, Tsinghua University, and Texas A&M University teamed up to implement the first in situ training of a convolutional neural network on a 128 × 64 1-transistor-1-memristor (1T1R) array. We showed that the network could withstand the intrinsic hardware nonidealities using a simple dense mapping of the convolution kernels onto the memristor crossbar. Thanks to spatial weight sharing, the in situ trained memristor-based CNN correctly classified 92.13% of the MNIST handwritten digits using ~1000 weights, only a quarter of the weights needed by a fully connected memristor neural network with similar performance. In addition, we demonstrated that the advantages of weight sharing could be extended further by cascading the convolutional kernels in a recurrent convolutional long short-term memory (ConvLSTM) network. This first demonstration of a memristor-based neural network with intrinsic spatiotemporal weight sharing was trained to classify a synthetic MNIST-sequence dataset with just 850 weights.
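One way to picture a ‘simple dense mapping’ is to flatten each k × k convolution kernel into one crossbar column and to apply every k × k image patch, unrolled in the same order, as the row voltages. A single read then yields every output channel for that patch, and the same columns are reused at each patch position; this reuse is the spatial weight sharing that keeps the weight count low. The sketch below is a hypothetical illustration under those assumptions: it uses ideal devices, treats conductances as signed values for brevity (real hardware would need a differential pair or a similar scheme), and its kernel sizes and function names are not taken from the paper.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_vmm(voltages: List[float], conductances: Matrix) -> List[float]:
    """Idealized crossbar read: column current I_j = sum_i V_i * G_ij."""
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(len(conductances[0]))]


def kernels_to_crossbar(kernels: List[Matrix]) -> Matrix:
    """Assumed dense mapping: kernel n is flattened row-major into column n.

    The result has k*k rows (kernel taps) and one column per kernel.
    """
    k = len(kernels[0])
    flat = [[kern[r][c] for r in range(k) for c in range(k)] for kern in kernels]
    return [[flat[n][i] for n in range(len(kernels))] for i in range(k * k)]


def conv2d_on_crossbar(image: Matrix, kernels: List[Matrix]) -> List[Matrix]:
    """Stride-1, unpadded convolution computed with one crossbar read per patch."""
    k = len(kernels[0])
    crossbar = kernels_to_crossbar(kernels)  # programmed once, reused below
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    outputs = [[[0.0] * out_w for _ in range(out_h)] for _ in kernels]
    for y in range(out_h):
        for x in range(out_w):
            # Unroll the k x k patch in the same row-major order as the kernels
            # and apply it as the row voltages.
            patch = [image[y + r][x + c] for r in range(k) for c in range(k)]
            # Every patch position reuses the same columns: spatial weight sharing.
            for n, current in enumerate(crossbar_vmm(patch, crossbar)):
                outputs[n][y][x] = current
    return outputs


image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
kernels = [[[1.0, 0.0], [0.0, -1.0]], [[0.0, 1.0], [1.0, 0.0]]]
print(conv2d_on_crossbar(image, kernels))
# [[[-4.0, -4.0], [-4.0, -4.0]], [[6.0, 8.0], [12.0, 14.0]]]
```

The crossbar needs only k × k rows and one column per kernel, independent of the image size; for the two 2 × 2 kernels above it is a 4 × 2 array. As in most CNN frameworks, the sketch computes a cross-correlation (the kernel is not flipped).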

The experimental demonstration of memristor-powered CNN and ConvLSTM networks represents an important milestone for analog computing technology. It suggests that hybrid analog-digital systems are not just an emerging lab technology but have promising potential to be deployed in the near future to accelerate real-world machine learning tasks, particularly edge applications. Technically, the memristor-based CNN and ConvLSTM verified that the advantages of weight sharing and processing-in-memory can be garnered simultaneously in the presence of hardware nonidealities, enabled by the robust in situ training method.

Our work is published in Nature Machine Intelligence: https://www.nature.com/articles/s42256-019-0089-1.

