Journals must do more to support misinformation research

Published in Social Sciences

Humanity's social systems have experienced profound changes in the last two decades. The rise of social media platforms transformed our social networks, which now broadcast massive amounts of information without any sort of editorial moderation. Researchers argue that these changes are eroding our most fundamental social institutions. Modern social networks help spread misinformation at a far larger scale than before. They exacerbate political polarization, threatening the integrity of democratic processes. And they hamper responses to global crises such as COVID-19.

These societal challenges require rapid policy responses, backed by robust and timely research. The issue is so urgent that some researchers call for designating the field a "crisis discipline": a mix of complexity science and the social sciences that relies heavily on computational modelling.

Academic journals, too, recognize the value of misinformation research and the weight of its policy implications. But the field and its methods are continually evolving, and misinformation research is uniquely interdisciplinary. Are we doing enough to ensure it achieves the reach and impact it deserves?

I spoke with Dr Rachel Moran and Dr Joe Bak-Coleman, early career researchers at the University of Washington, to discuss their publishing experiences, concerns, and expectations. The conversation has been edited for length and clarity.

AR: You both work at the Center for an Informed Public at UW. What kinds of challenges does the Center aim to address?

RM: Our center’s mission is to “resist strategic misinformation, promote an informed society, and strengthen democratic discourse.” Essentially, we work on all problems around the spread and impact of misinformation online and offline, thinking expansively about what makes information true and trustworthy and why this matters. Our center is a diverse group of scholars from disciplines including information science, biology, ecology, social psychology, journalism and law. We work on everything from COVID-19 and election interference to gender discrimination and reproductive rights. Misinformation stifles our ability to build community, work on large collective problems, and disrupt injustices, so we orient our work toward better understanding how to facilitate the spread of authoritative, useful information.

AR: Your research is broadly interdisciplinary. How do you decide where to publish, and what would be the ideal venue for your work?

RM: Coming from journalism studies, I tended to place my work in journalism and communication journals, but as my research agenda has expanded I feel that I’m far too often “preaching to the choir” by publishing solely in these areas. While they are supportive places, they aren’t widely read by other disciplines that work on similar problems and topics. However, as a mostly qualitative researcher, I find there aren’t many high-impact, widely read journals for my work. This means that while I might read and cite widely when writing about misinformation, I have limited options for placing my own research. I’d love to see high-impact journals embrace more qualitative and mixed-methods research and seek out reviewers who can properly evaluate qualitative methodology. Far too often I’ve been assigned reviewers whose feedback is simply “why didn’t you use X computational approach or run Y analysis?”

JBC: I’ve found it difficult to place my work in specialist journals, as evaluations of “fit” can be at odds with interdisciplinary perspectives and methods. Instead, I tend to submit to interdisciplinary venues. One difficulty is that interdisciplinary venues tend, on average, to be high-impact and therefore quite selective.

It would be fantastic to see a journal focused on stewardship of global collective behavior, examining ways in which large-scale social processes affect human sustainability, equity, and well-being. Ideally such a journal could be broadly focused and methodologically agnostic, open to quantitative and qualitative research as well as policy and ethics work. For such a journal, rapid turnaround times are a must: substantial delays can limit research’s ability to reduce harm.

I would be excited to see something along the lines of “Nature Complexity”, as publishing venues in complex systems are few and far between—with many of the gaps filled by predatory publishers.

AR: What kinds of issues do you run into when submitting and publishing your work? What would you want the editors and reviewers handling your work to know?

RM: Two issues particularly arise when I work on mis/disinformation-related topics: a lack of reviewer expertise in qualitative methods, and shaky ethical considerations around social media data. On the first, when I’ve published in larger and more interdisciplinary venues I’ve often faced a Reviewer 2 who doesn’t value qualitative work and whose feedback is almost always to reposition a qualitative analysis as a more quantitative one. I’d love to have a reviewer who meets the paper where it’s at and suggests changes that strengthen the methods employed, rather than assuming qualitative work is less rigorous.

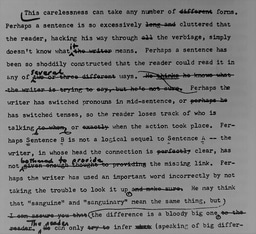

The second issue is harder to handle. Researchers using social media data have to make many ethical decisions to balance research objectives with commitments to privacy, safety, and accountability. For example, a large body of my current work relies on Instagram posts and stories as the primary data source. These posts are publicly available and IRBs deem studying them “not human subjects research”, yet this is real people’s information, so we usually err on the side of fully anonymizing it by blurring images, removing usernames, and so on. However, this comes at an analytical cost, as it often means we can’t show readers the exact visual phenomenon we are referring to. As we grapple with what to make visible and what to keep private, I would love to see some leadership from journals on how to present this research in the most ethical way.

JBC: Perhaps the most common hurdle I run into comes simply from using Bayesian methods, which are essential for accomplishing inferential goals when off-the-shelf models won’t work. In submission, I find myself shoehorning the analysis into author guidelines and reporting summaries that are framed around null-hypothesis significance testing (NHST). Now-common best practices like pre-registration and registered reports are poor fits for Bayesian workflows.

In review, I recall having a reviewer trained in Bayesian methods only once or twice in my career. Standards for evaluating Bayesian and NHST approaches are quite different, and reviewers often look for NHST best practices when evaluating a Bayesian paper. In my work, this is the most common cause of rejection, ranging from skepticism about effects that aren’t “significant”, to dated concerns over the subjectivity of priors, to a feeling that unfamiliar models are uninterpretable or were used because simpler models did not get the “desired result”. In my experience, one review along these lines is all but guaranteed, and two will usually sink the paper.

In extreme cases, I have been accused of questionable research practices for things as mundane as using informative priors or presenting credible intervals other than 95%. Editors understandably reject papers with prejudice in such circumstances. I’ve adopted the strategy that more senior colleagues facing similar challenges recommended to me: developing a robust workflow for reformatting a manuscript to submit elsewhere. This is probably easy enough to solve by flagging the differences for reviewers and editors, so that appropriate standards are applied to a given paper.

Harder problems surround the fragmentation of the social sciences. When writing an interdisciplinary paper, you often wind up having to resolve long-standing conflicts between disparate disciplines. It can be quite hard to write, cite, and revise a paper across methodological divides (quantitative, qualitative, mixed methods, mathematical theory), differing lexicons, and reviewers who disagree about what is “known”. Fortunately, this is a common enough issue that editors often recognize when differences are irreconcilable.

AR: When we last met, you talked about the reproducibility and replication crises and some of the initiatives within the community. Are they a good fit for the misinformation research community?

JBC: In a recent preprint, we propose a model of the replication crisis that highlights how replication can be an unreliable measure of scientific productivity. Quite a few statisticians have raised similar concerns about both our framing of the replication crisis and the approach to reform. Yet these viewpoints have typically been drowned out by replication work in the social sciences (mostly psychology) that proposes to improve science by improving replication, based on fairly restrictive models of the scientific process.

My concern is that these reforms (e.g., pre-registration, registered reports, replication) define truth as the set of empirical investigations that can reliably generate a p-value less than 0.05. We shouldn’t expect a set of reforms derived in psychology—with its unique constraints and opportunities—to be broadly beneficial when applied across the breadth of scientific research.

How would a model of climate change be shoehorned into a pre-registered hypothesis test? Would it benefit our understanding to do so? Should we require a wildlife biologist hoping to learn something about elusive snow leopards to conduct a power analysis? Would a quantitative study of some rare event—implicitly non-replicable—be considered not scientific or only tentatively true? Moreover, should we disregard qualitative research entirely, or force researchers to toss aside their rich understanding in order to code their data into something that can be run through an ANOVA? To the extent that the replication crisis reforms become norms of conducting “good science”, these pathways to knowledge will struggle to persuade reviewers.

The global challenges we face, from climate change and pandemics to social media stewardship, will require quantitative and qualitative investigations of many phenomena that cannot reasonably be studied in an NHST experimental context. We cannot expect this critical research to be consistently pre-registered, with sample sizes determined a priori and knowledge gained solely through significance testing with off-the-shelf models.

Instead, science will likely need to embrace methodologically diverse papers and encourage open-minded review. When reviewers raise concerns derived from open science reforms, these should be weighed against the inferential goals of the paper and the practical constraints of the topic. Badges indicating that a paper has met standards of open science or preregistration may inadvertently serve as a cue that those findings are more reliable or important than other types of research. Perhaps most of all, it is important to avoid interdisciplinary spaces imposing standards derived from single disciplines on the whole of science.

A related concern with replication reforms is that they are sometimes predicated on the notion that a major barrier to generating useful science is the bad behavior of scientists. As we discuss in the preprint, this is likely a flawed assumption and is inconsistent with evidence from registered reports. It is worth considering how norms of open science, alongside a perhaps fraught perception of widespread fraud, impose differential risks on researchers. Some of these issues certainly can’t reasonably be expected to be fixed by journals or editors; they’ll require systemic change. However, it is essential that journal policies account for the drawbacks of replication crisis reforms.

AR: Ultimately, you would want your research to make a difference in policy. Are there specific policies targeting social media companies that you would want to see implemented?

JBC: We’re not going to get anywhere without transparency. This needs to include not only access to data, but full information regarding code and algorithmic design. At a minimum, we need the ability to experimentally probe algorithmic decision-making systems. Relatedly, it would be good to see standards of observability, from academic-facing APIs to design choices that facilitate research.

More generally, we need much stricter laws on the types of data companies are able to collect, how they can be collected, how long they can be stored, and how easy it is for a user to request data deletion. Weighing the benefits of marginally more effective ad sales against the myriad risks, this feels like easy policy. Another bit of low-hanging fruit would be to regulate dark patterns and require periodic affirmative renewal for subscriptions. Similarly, it would be great to see carbon taxes in general, and on cryptocurrency specifically.

RM: I waver on intervention strategies constantly, as my work often captures the unintended negative impacts that changes in policy and design create. Ultimately, there do need to be changes on the data access front, which Joe captures above. I’d also like to see more transparency and accountability in the behind-the-scenes decision-making processes at social media companies. We can have access to all the user data in the world, but that won’t necessarily help us understand why platforms make the decisions they make and what pressure points the public has to alter this decision-making. Sure, I’d love access to more Facebook data, but I’d also love more access to Facebook itself! ◆

[Poster image credit: 86thStreet: Chuck Close, Subway Portraits, MTA, CC BY 2.0]

