Let physics do the heavy lifting in machine learning

Published in Physics

Why machine learning creates a need for more efficient computers

Machine learning is slowly growing into a serious sustainability issue because of the large amount of computational resources it consumes. Today, training a single state-of-the-art machine learning model can require a large cluster of computers running for hours or even days. In the process, it can emit more CO2 than an average car does over its entire lifetime, which makes it a massive ecological challenge. The problem is made worse by the fact that the computing power used to train new machine learning systems currently doubles every 3-4 months. How can we make sure that machine learning does not become a massive sustainability issue in the near future? Many signs indicate that our current digital computers may not be the most efficient way to train neural networks. For instance, while computer clusters can consume several hundred kilowatts of electrical power when training neural networks, the human brain requires only around 20 watts. Inspired by this, researchers have been on the hunt for new, unconventional computing systems that work entirely differently from today’s digital computers and that can solve machine learning problems significantly faster and more energy-efficiently.

How Ising machines exploit physics for highly efficient computation

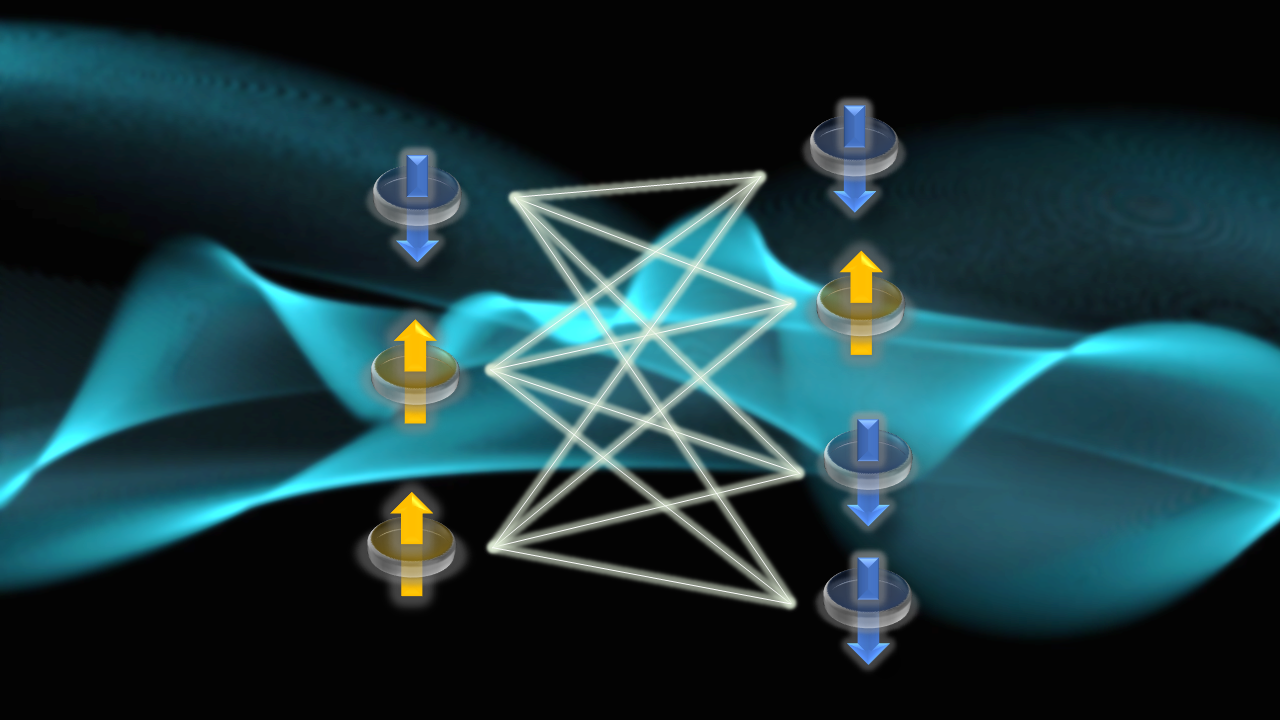

Within this surge of new computing systems, we came across a very intriguing concept that exploits the laws of physics for highly efficient computation: Ising machines. Ising machines solve difficult optimization problems by utilizing the natural tendency of physical systems to evolve to their lowest-energy configuration. For this, they employ artificial magnets, so-called Ising spins, that can point either up or down. Such magnets automatically organize themselves into an energetically optimal configuration, which is equivalent to finding the lowest-energy state (the ground state) of the well-known Ising model. Ising machines exploit this by mapping the cost function of an optimization problem onto such an Ising model. They then use physical systems, such as optical or electrical oscillators, to create networks of artificial magnets that are equivalent to this Ising model. As the system self-organizes into an energetically optimal configuration, it thereby finds a solution to the original optimization problem. Through such physics-based computing, Ising machines have been able to solve optimization problems orders of magnitude faster than digital computers while consuming a fraction of their electrical power.
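To make the mapping step concrete, here is a minimal sketch assuming the standard textbook mapping of the Max-Cut problem onto an Ising model with energy H(s) = -Σ J_ij s_i s_j and spins s_i ∈ {-1, +1}. Max-Cut, the small graph, and all helper names are illustrative choices of ours; the post does not name a specific problem. An Ising machine would reach the ground state physically; the brute-force search below merely stands in for that step and scales as 2^n, so it only works for tiny instances.

```python
import itertools

# Hypothetical 4-node graph for a Max-Cut instance (unit edge weights).
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
N = 4

def ising_energy(spins, couplings):
    """Ising energy H(s) = -sum_{(i,j)} J_ij * s_i * s_j, with s_i in {-1, +1}."""
    return -sum(J * spins[i] * spins[j] for (i, j), J in couplings.items())

# Max-Cut mapping: antiferromagnetic coupling J_ij = -1 on every edge.
# An edge adds +1 to H if its endpoints share a spin and -1 if they differ,
# so H = (uncut edges) - (cut edges) = |E| - 2 * cut.
# Minimizing H therefore maximizes the cut.
COUPLINGS = {edge: -1.0 for edge in EDGES}

def cut_size(spins):
    """Number of edges whose endpoints lie on opposite sides of the partition."""
    return sum(1 for i, j in EDGES if spins[i] != spins[j])

def ground_state(couplings, n):
    """Brute-force stand-in for the Ising machine: check all 2^n configurations."""
    return min(itertools.product((-1, 1), repeat=n),
               key=lambda s: ising_energy(s, couplings))

best = ground_state(COUPLINGS, N)
print(best, ising_energy(best, COUPLINGS), cut_size(best))
```

Because the graph contains triangles, no partition can cut all five edges, and the ground state corresponds to a maximum cut of four.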

Why Ising machines have been inefficient for machine learning so far

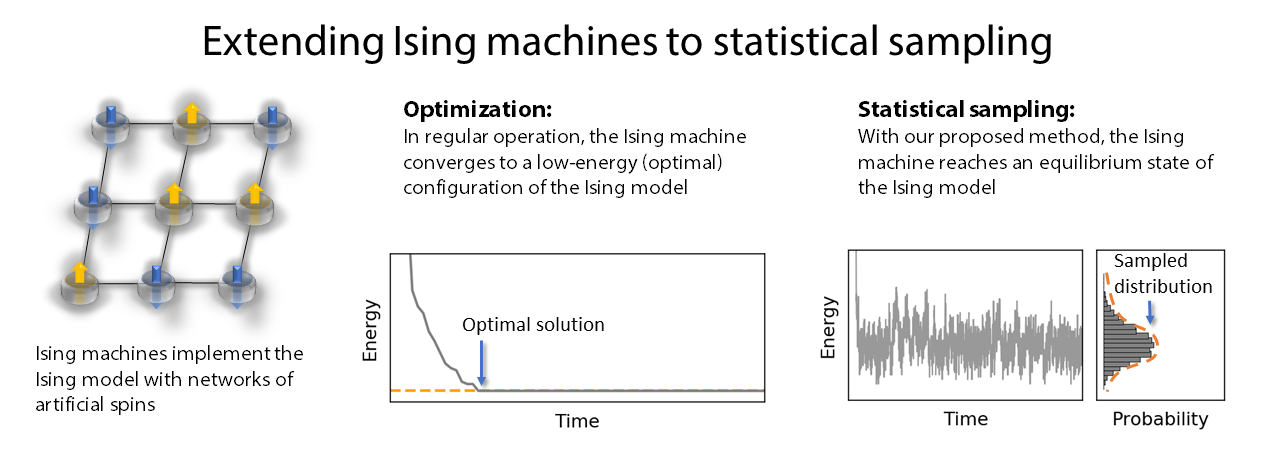

We wanted to show that this inherent speed and efficiency of Ising machines can also be used to address the current inefficiency of machine learning. However, while various machine learning models can be mapped to the Ising model, we found that training them with Ising machines is very inefficient and inaccurate. That is because training these models requires statistical sampling, which is very different from optimization. Statistical sampling aims to measure the probability distribution of artificial neurons in thermal equilibrium, whereas optimization is only concerned with finding the lowest-energy configuration. Therefore, while optimization ideally requires a system at low temperature that converges to low-energy states, sampling requires a system in equilibrium at a finite temperature: two opposing goals. At first, we found that Ising machines inherently behave like low-temperature systems. Our goal was therefore to find a way to emulate temperature in Ising machines so that we could better exploit their inherent speed for statistical sampling, and hence for machine learning.
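The difference between the two goals shows up even in a tiny system. The sketch below assumes a hypothetical 4-spin ferromagnetic ring (J = +1, temperature in units where Boltzmann's constant is 1) and computes the exact Boltzmann distribution P(s) = exp(-H(s)/T) / Z. At low T nearly all probability sits on the two ground states, which is all an optimizer needs; at finite T, a sampler must also reproduce the weights of the excited states.

```python
import itertools
import math

# Hypothetical toy Ising model: 4-spin ring with ferromagnetic couplings J = +1.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
N = 4

def energy(spins):
    # H(s) = -sum_{(i,j)} J * s_i * s_j with J = +1 on every edge
    return -sum(spins[i] * spins[j] for i, j in EDGES)

def boltzmann(temperature):
    """Exact Boltzmann distribution P(s) = exp(-H(s)/T) / Z over all 2^N states."""
    states = list(itertools.product((-1, 1), repeat=N))
    weights = [math.exp(-energy(s) / temperature) for s in states]
    z = sum(weights)  # partition function
    return {s: w / z for s, w in zip(states, weights)}

def ground_state_mass(temperature):
    """Total probability of the two ground states (all spins up / all down)."""
    p = boltzmann(temperature)
    return p[(1,) * N] + p[(-1,) * N]

for t in (0.1, 1.0, 5.0):
    print(f"T={t}: P(ground states) = {ground_state_mass(t):.3f}")
```

A system that always relaxes to its ground state is a good optimizer but reports the wrong distribution at any finite T, which is the mismatch described above.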

How to enable ultrafast statistical sampling with Ising machines

To solve this problem, our idea was to inject a strong noise signal into the Ising machine. Similar to a physical temperature, the noise can act as a randomizing force that prevents the system from converging to low-energy states. Much to our surprise, we found that, when injected with strong noise, the equilibrium statistics of the Ising machine agree very well with those obtained by software-based sampling methods, such as Monte Carlo sampling. By adjusting the noise power, we observed that the equivalent thermodynamic temperature of the Ising model could be easily tuned. This motivated us to go further and compare the overall performance of this noise-induced sampling with that of software-based methods. We found that the injection of noise allows Ising machines to continuously generate samples at rates of billions of samples per second, up to 1000 times faster than current software-based sampling methods.
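Why injected noise behaves like a temperature can be illustrated with a software toy model. This is not a model of the analog oscillators in the paper. It assumes a hypothetical 4-spin ring and discrete threshold updates s_i ← sign(h_i + ξ), where h_i is the local field and ξ is random noise. We choose logistic noise because it makes the correspondence exact: with noise scale T/2, each update draws s_i = +1 with probability 1 / (1 + exp(-2 h_i / T)), which is heat-bath (Gibbs) sampling at temperature T. Other noise distributions, such as Gaussian noise, give only an approximate correspondence. The check compares the sampled mean energy with the exact Boltzmann value.

```python
import itertools
import math
import random

# Hypothetical toy model: 4-spin ring with ferromagnetic couplings J = +1.
N = 4
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
NEIGHBORS = {0: (1, 3), 1: (0, 2), 2: (1, 3), 3: (2, 0)}

def energy(spins):
    return -sum(spins[i] * spins[j] for i, j in EDGES)

def exact_mean_energy(temperature):
    """Reference value <H> under the exact Boltzmann distribution."""
    states = list(itertools.product((-1, 1), repeat=N))
    weights = [math.exp(-energy(s) / temperature) for s in states]
    return sum(w * energy(s) for s, w in zip(states, weights)) / sum(weights)

def logistic_noise(rng, scale):
    u = rng.random()
    while u == 0.0:  # random() may return exactly 0.0; avoid log(0)
        u = rng.random()
    return scale * math.log(u / (1.0 - u))  # inverse CDF of the logistic distribution

def noisy_threshold_sampler(temperature, sweeps, seed=0):
    """Set each spin to sign(local field + noise). Logistic noise with scale T/2
    turns this into exact heat-bath sampling at temperature T, so the noise
    amplitude plays the role of the temperature."""
    rng = random.Random(seed)
    spins = [1] * N  # start in a ground state, the 'noiseless' outcome
    energies = []
    for _ in range(sweeps):
        for i in range(N):
            field = sum(spins[j] for j in NEIGHBORS[i])  # h_i = sum_j J * s_j
            noisy = field + logistic_noise(rng, temperature / 2)
            spins[i] = 1 if noisy > 0 else -1
        energies.append(energy(spins))  # record one sample per sweep
    return energies

T = 2.0
samples = noisy_threshold_sampler(T, sweeps=20000)
print(sum(samples) / len(samples), exact_mean_energy(T))
```

Lowering the noise amplitude (smaller T) makes the sampler stay in the ground state, reproducing the low-temperature behavior described earlier.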

Applying Ising machines to machine learning and beyond

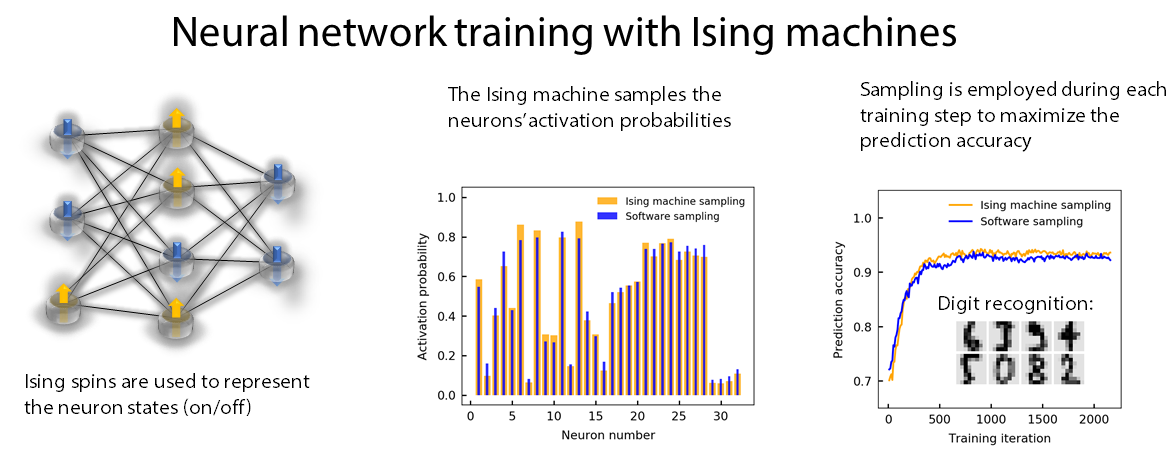

Such ultrafast sampling can be directly applied to the training of neural networks. We were able to use an Ising machine to sample the activation probabilities during the training of a neural network on an image recognition task. The resulting recognition performance matched that of conventional training methods. Since statistical sampling is the most computationally demanding task during the training of such neural networks, the speedup in sampling achieved with Ising machines translates directly into significantly faster training. With our sampling method, Ising machines therefore offer a promising route to alleviating the large computational load of modern machine learning systems. Beyond that, statistical sampling is also a computational challenge in other fields, such as drug design and finance. Going forward, it will be very interesting to see how the speed of Ising machines can benefit these applications as well.
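The post does not say which network was trained. As a hedged sketch, assume a restricted Boltzmann machine (RBM), a neural network whose energy function is an Ising-type model of binary units, trained with one-step contrastive divergence (CD-1). The hypothetical helper `sample_bernoulli` marks the sampling step; in the approach described above, that step would be handed to the Ising machine, while here NumPy's random generator does it in software. The two 6-pixel patterns are made-up toy data, not an image recognition benchmark.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sample_bernoulli(p, rng):
    # Draw binary unit states with the given activation probabilities.
    # This is the sampling step an Ising machine would take over.
    return (rng.random(p.shape) < p).astype(float)

def train_rbm(data, n_hidden=4, lr=0.5, epochs=1000, seed=0):
    """Train a binary RBM with one-step contrastive divergence (CD-1)."""
    rng = np.random.default_rng(seed)
    n_visible = data.shape[1]
    W = 0.01 * rng.standard_normal((n_visible, n_hidden))
    b = np.zeros(n_visible)  # visible biases
    c = np.zeros(n_hidden)   # hidden biases
    errors = []
    for _ in range(epochs):
        # Positive phase: hidden activation probabilities given the data
        ph_data = sigmoid(data @ W + c)
        h = sample_bernoulli(ph_data, rng)
        # Negative phase: one Gibbs step down to the visible layer and back up
        pv = sigmoid(h @ W.T + b)
        v_model = sample_bernoulli(pv, rng)
        ph_model = sigmoid(v_model @ W + c)
        # Gradient estimate: data correlations minus model correlations
        n = len(data)
        W += lr * (data.T @ ph_data - v_model.T @ ph_model) / n
        b += lr * (data - v_model).mean(axis=0)
        c += lr * (ph_data - ph_model).mean(axis=0)
        # Mean squared reconstruction error as a rough progress indicator
        errors.append(float(((data - pv) ** 2).mean()))
    return W, b, c, errors

# Hypothetical toy dataset: two binary 6-pixel patterns, each repeated 10 times
patterns = np.array([[1, 1, 1, 0, 0, 0],
                     [0, 0, 0, 1, 1, 1]], dtype=float)
data = np.repeat(patterns, 10, axis=0)
W, b, c, errors = train_rbm(data)
print(f"reconstruction error: {errors[0]:.3f} -> {errors[-1]:.3f}")
```

Every epoch draws two rounds of samples, so in this kind of training loop the cost of sampling grows directly with the number of training steps, which is why faster sampling matters.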

The machine learning libraries developed for Ising machines are available at DOI: 10.17605/OSF.IO/347XT

The article can be found here: https://www.nature.com/articles/s41467-022-33441-3

Published in Nature Communications