The number of sensory nodes in the Internet of Everything continues to increase rapidly, and these nodes generate massive amounts of data. By 2032, the information generated by sensory nodes is projected to reach about 10²⁰ bit/s, far more than the total collective human sensory throughput. Although 5G and 6G networks can potentially provide high speed and wide bandwidth, it remains challenging to send all of the data produced at sensory terminals to a “cloud” computing center, especially for delay-sensitive applications such as autonomous vehicles. This situation demands a dramatic increase in computation near or inside sensory networks.

Vision sensing is a representative data-intensive application. In our previous work, we demonstrated image preprocessing functions using an optoelectronic resistive switching memory array [1]. Later, Mennel et al. reported more complex image processing by constructing an artificial neural network from two-dimensional material image sensors [2]. These works show that near-sensor and in-sensor computing paradigms require new hardware platforms that deliver new functionalities, high performance, and high energy efficiency at the same or lower power [3]. Given the rapid development of this emerging area, it is now necessary to summarize the existing achievements and offer perspectives on the future development of the field.

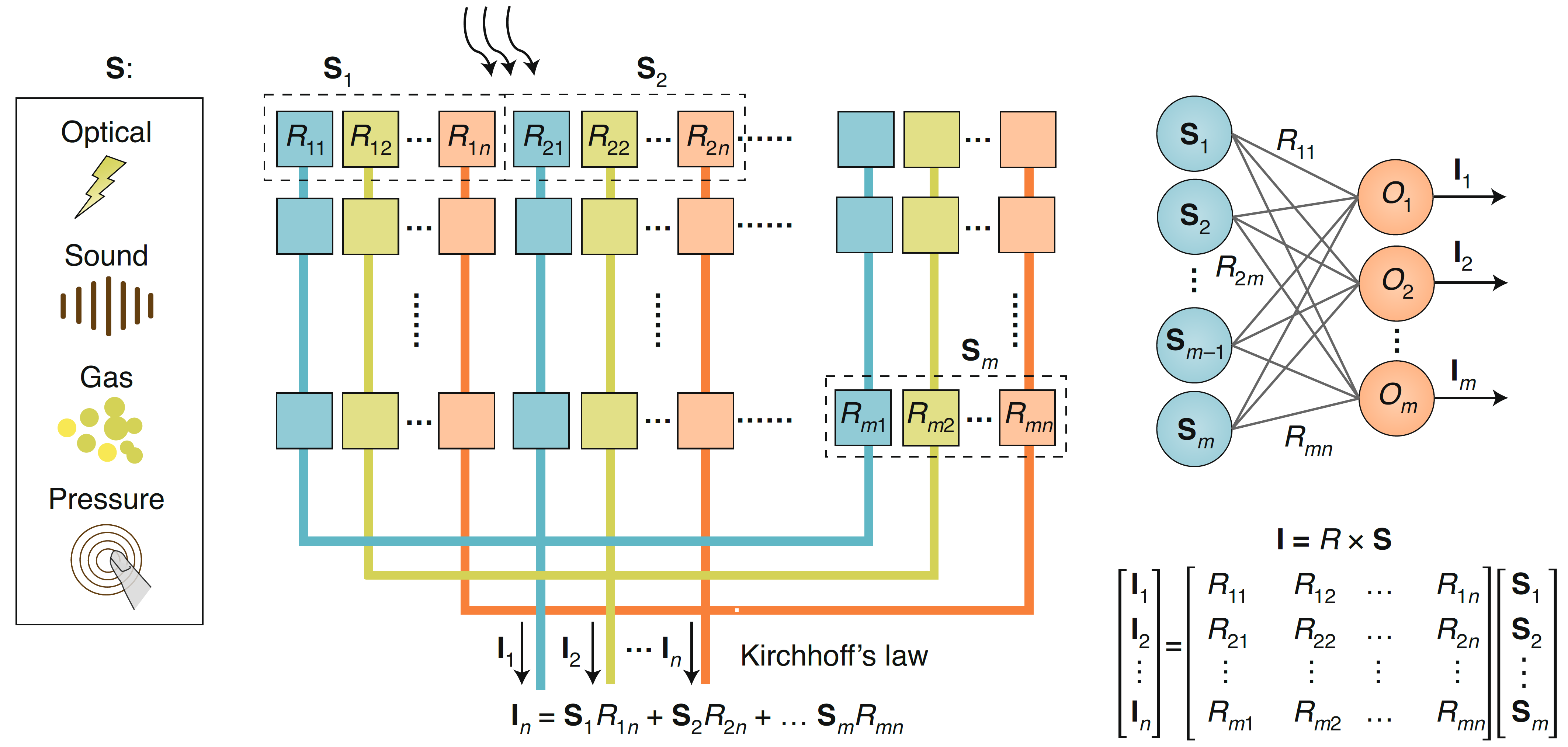

We examine the concepts of near-sensor and in-sensor computing and define them according to the physical placement of the computing units relative to the sensory units. Depending on the information-processing function, we classify sensory computing into low-level processing, whose output is still a representation of the sensory signals, and high-level processing, whose output is an abstract representation. Because sensing takes place in the noisy analog domain, it would be ideal to process sensory data with integrated analog processors and avoid analog-to-digital conversion altogether; such a system could reduce both latency and power consumption. Fig. 1 shows a typical in-sensor computing architecture with reconfigurable sensors, in which each sensor works not only as a sensory device but also as an indispensable element of an artificial neural network, enabling multiply-and-accumulate operations and reducing the amount of intermediate data. Beyond optical signals, this computing paradigm can also be extended to other physical signals, including mechanical, chemical, and electrochemical signals.

Fig. 1 In-sensor computing architecture with reconfigurable sensors for multiply-and-accumulate operations in the neural network.
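To make the multiply-and-accumulate idea behind Fig. 1 concrete, here is a minimal Python/NumPy sketch. It is a hypothetical, idealized model, not our device implementation or that of Mennel et al. [2]: it assumes that each sensor element has a programmable, signed responsivity acting as a network weight, and that photocurrents from elements sharing an output line simply add (Kirchhoff's current law). The function `in_sensor_mac`, the two bar-shaped templates, and the 1 µW illumination scale are all illustrative assumptions.

```python
import numpy as np


def in_sensor_mac(responsivity, irradiance):
    """Idealized (linear, noise-free) in-sensor multiply-and-accumulate.

    responsivity: (M, N) array; entry [m, n] is the hypothetical programmable,
        signed responsivity (A/W) of the element of pixel n wired to output line m.
    irradiance: length-N array of optical power (W) on each pixel.
    Returns the M output currents (A): I[m] = sum_n responsivity[m, n] * irradiance[n].
    """
    r = np.asarray(responsivity, dtype=float)
    p = np.asarray(irradiance, dtype=float)
    if r.ndim != 2 or p.ndim != 1 or r.shape[1] != p.shape[0]:
        raise ValueError("expected an (M, N) responsivity matrix and N pixel powers")
    # Photocurrent of each element = responsivity * optical power (the multiply);
    # currents on a shared output line add by Kirchhoff's current law (the
    # accumulate), so each line yields one dot product before any digitization.
    return r @ p


# Hypothetical 3x3 sensor (N = 9 pixels) with M = 2 output lines, one per class.
vertical = np.array([0, 1, 0,
                     0, 1, 0,
                     0, 1, 0], dtype=float)
horizontal = np.array([0, 0, 0,
                       1, 1, 1,
                       0, 0, 0], dtype=float)

# Weights: +1 A/W on each class template, -1 A/W elsewhere (assumes the sign of
# the responsivity can be programmed, e.g. by a gate bias; idealized here).
R = np.stack([2 * vertical - 1, 2 * horizontal - 1])  # shape (2, 9)

scale = 1e-6  # made-up illumination: 1 uW on each lit pixel
currents = in_sensor_mac(R, vertical * scale)  # two analog outputs instead of nine
label = int(np.argmax(currents))  # 0 -> "vertical", 1 -> "horizontal"
```

Here the nine pixel values are reduced to two analog currents (roughly 3 µA and -1 µA), so only two values would need analog-to-digital conversion, which is the reduction in intermediate data mentioned above; the argmax label is an abstract output, i.e. high-level processing. A physical array would also add noise, nonlinearity, and device-to-device variation, which this sketch ignores.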

Sensory and computing units usually rely on disparate process technology nodes and material systems. Deploying near-sensor and in-sensor computing paradigms in hardware therefore requires seamless integration of the sensory and computing units with minimized interconnect length. Three-dimensional (3D) monolithic integration offers a high-density solution, but its complicated processing technology greatly limits its implementation. An alternative approach for the near-sensor computing paradigm is so-called 2.5D chiplet integration, a cost-effective scheme that relies on advanced packaging techniques and has a relatively short time to market.

You can find more details about this work in our perspective published in Nature Electronics: Zhou, F. C. & Chai, Y. Near-sensor and in-sensor computing. Nat. Electron. 3, 664-671 (2020). https://doi.org/10.1038/s41928-020-00501-9

References:

[1] Feichi Zhou, Zheng Zhou, Jiewei Chen, Tsz Hin Choy, Jingli Wang, Ning Zhang, Ziyuan Lin, Shimeng Yu, Jinfeng Kang, H.-S. Philip Wong and Yang Chai, “Optoelectronic random access memories for neuromorphic vision sensors”, Nature Nanotechnology, 2019, 14, 776-782.

[2] Lukas Mennel, Joanna Symonowicz, Stefan Wachter, Dmitry K. Polyushin, Aday J. Molina-Mendoza and Thomas Mueller, “Ultrafast machine vision with 2D material neural network image sensors”, Nature, 2020, 579, 62-66.

[3] Yang Chai, “In-sensor computing for machine vision”, Nature, 2020, 579, 32-33.
