Neuromorphic electronics mimicking the sensory cue integration in the brain

Published in Electrical & Electronic Engineering

Bumble bees can recognize objects across the visual and tactile modalities. Star-nosed moles perceive their surroundings through touch and smell in lightless underground environments. Many animal species, especially mammals, show multisensory integration: the ability to combine signals from multiple senses. Such sensory cue integration in the brain may help animals complete complex tasks and improve their perceptual performance.

Is it possible to implement the brain's sensory cue integration in an electronic device? Neuromorphic electronics may shed light on this question. Recent studies have reported artificial synapses and artificial sensory nerves that emulate the basic working principles of biological neurons, processing information in a parallel, asynchronous, event-driven manner. However, the fundamental principles of multisensory integration in the brain, namely spatiotemporal recognition, perceptual enhancement, inverse effectiveness, and perceptual weighting, have not been sufficiently investigated in such devices. In addition, multisensory integration needs to be combined with cognitive intelligence before it can be applied in wearable electronics and advanced robotics, where real-time recognition and energy-efficient sensing are required.
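Two of the principles named above, perceptual enhancement and inverse effectiveness, have a simple quantitative form that is widely used in multisensory neuroscience. Enhancement is expressed as the percentage by which the combined response exceeds the strongest single-modality response. Inverse effectiveness is the observation that this percentage tends to be largest when the single-modality responses are weak. The minimal sketch below computes that index. The function name `enhancement_index` and the response values are hypothetical, chosen only for illustration, and are not data from the work described here.

```python
def enhancement_index(combined: float, single_a: float, single_b: float) -> float:
    """Percent multisensory enhancement relative to the strongest single-modality response.

    Follows the common index 100 * (CM - SMmax) / SMmax, where CM is the
    combined (multisensory) response and SMmax is the larger unisensory response.
    """
    best_single = max(single_a, single_b)
    if best_single <= 0:
        raise ValueError("single-modality responses must be positive")
    return 100.0 * (combined - best_single) / best_single


if __name__ == "__main__":
    # Hypothetical response values (e.g. spikes per trial), for illustration only.
    weak = enhancement_index(combined=5.0, single_a=2.0, single_b=1.0)
    strong = enhancement_index(combined=26.0, single_a=20.0, single_b=18.0)
    print(f"weak inputs:   {weak:.0f}% enhancement")    # 150%
    print(f"strong inputs: {strong:.0f}% enhancement")  # 30%
```

In this illustrative example the weak inputs yield 150% enhancement and the strong inputs only 30%, which is the pattern that inverse effectiveness describes.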

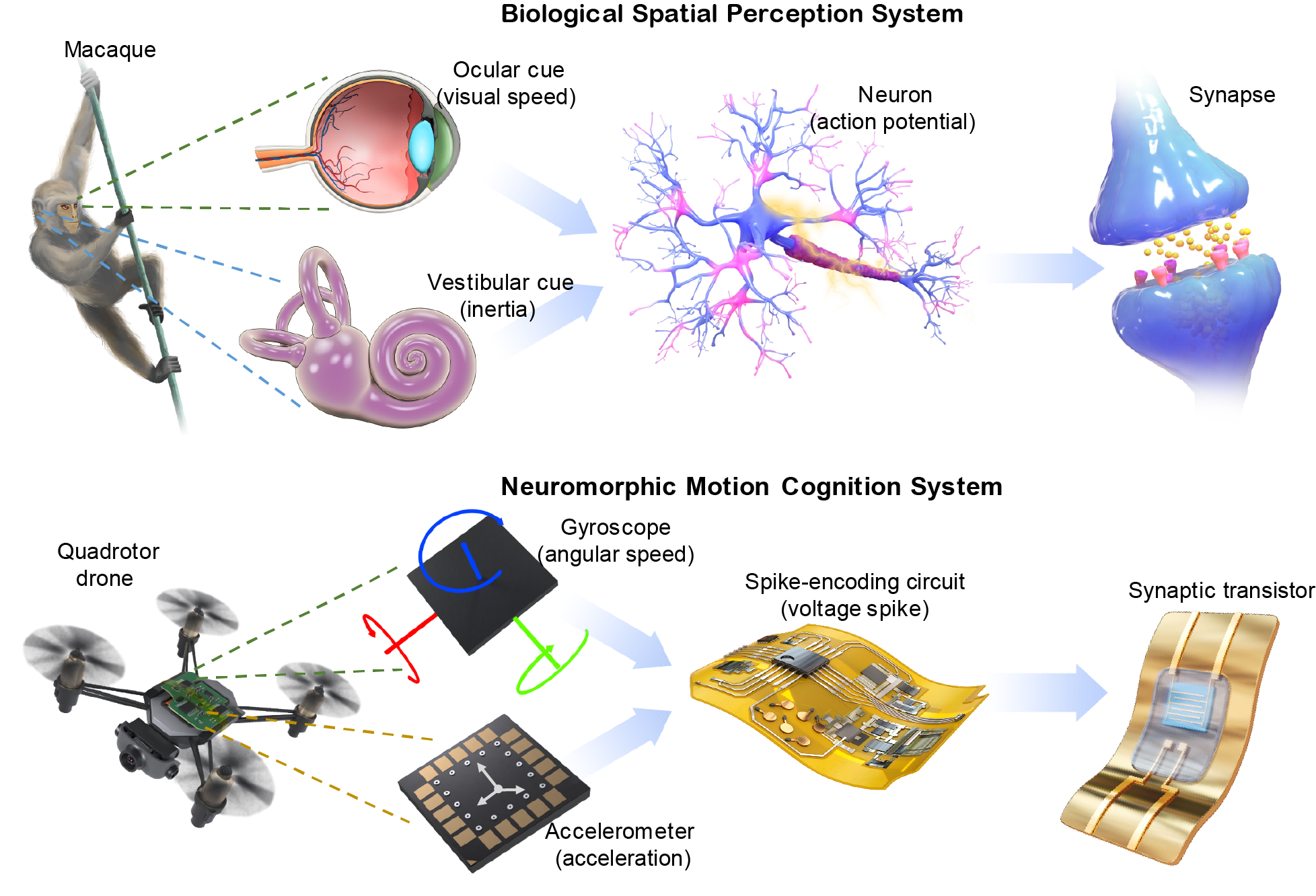

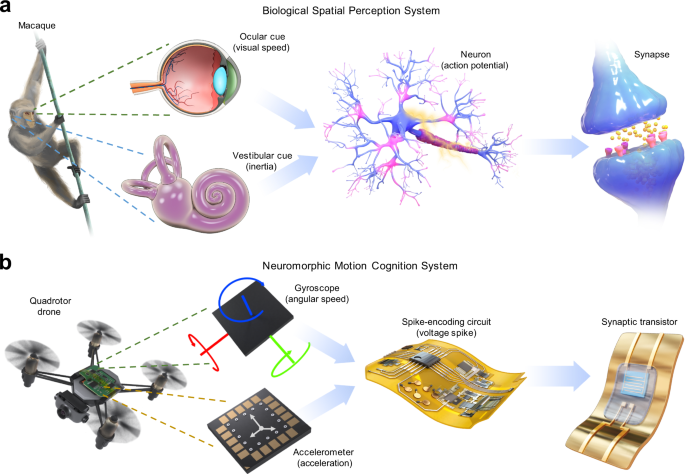

In our recent work published in Nature Communications, we developed a motion-cognition neuromorphic nerve that achieves brain-like multisensory integration. In the macaque monkey, self-motion through the environment produces inertial stimuli in the inner-ear vestibular system and visual stimuli in the retina (Figure 1). The vestibular (inertial) and visual (speed) information is converted into spike trains carrying different spatiotemporal patterns, which are then processed by neural and synaptic networks during sensory perception. Integrating information from these two sensory modalities enhances both neural and behavioral responses in motion and spatial perception. Analogous to the macaque's visual-vestibular system, our system obtains acceleration and angular-speed signals from an accelerometer and a gyroscope (Figure 1). The motion signals are first converted into two temporally correlated spike trains and then processed by a multi-input synaptic device, whose output depends on the temporal pattern of the two spike trains. Motion information is recognized by averaging the spike firing rate and reading the device output in an event-based manner. In this way, the system achieves neuromorphic perception of motion through multisensory cue integration.
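The processing chain described above (sensor signal, then spike train, then multi-input synapse, then rate readout) can be sketched in software. The sketch below is a hypothetical, simplified model, not the device physics or code from the paper. An integrate-and-fire encoder turns each sensor channel into spikes. Each input spike leaves an exponentially decaying trace with time constant `tau`, and a product term between the two traces (weighted by `coupling`) stands in for the device's sensitivity to the relative timing of its inputs. The function names, `tau`, `coupling`, `gain`, and the constant input signals are all assumptions chosen for illustration.

```python
import math


def encode_spikes(signal, gain, dt):
    """Integrate-and-fire rate encoder (hypothetical): larger |signal| gives more spikes.

    Emits at most one spike per time step; returns a list of 0/1 values.
    """
    acc = 0.0
    spikes = []
    for x in signal:
        acc += abs(x) * gain * dt
        if acc >= 1.0:
            spikes.append(1)
            acc -= 1.0
        else:
            spikes.append(0)
    return spikes


def two_input_synapse(spikes_a, spikes_b, dt, tau=0.02, coupling=2.0):
    """Toy two-input synapse (hypothetical model, not the device physics).

    Each input spike adds 1 to an exponentially decaying trace with time
    constant tau. The output sums both traces plus a product term, which is
    only large when both inputs spiked recently, so the response depends on
    the relative timing of the two spike trains.
    """
    decay = math.exp(-dt / tau)
    trace_a = trace_b = 0.0
    response = []
    for sa, sb in zip(spikes_a, spikes_b):
        trace_a = trace_a * decay + sa
        trace_b = trace_b * decay + sb
        response.append(trace_a + trace_b + coupling * trace_a * trace_b)
    return response


def firing_rate(spikes, dt):
    """Average firing rate in spikes per second over the whole window."""
    return sum(spikes) / (len(spikes) * dt)


def mean_response(response):
    """Average synaptic output over the window (a simple rate-style readout)."""
    return sum(response) / len(response)


if __name__ == "__main__":
    dt = 0.001  # 1 ms time step
    n = 1000    # 1 s window
    # Hypothetical constant readings standing in for accelerometer and gyroscope channels.
    accel_spikes = encode_spikes([1.0] * n, gain=250.0, dt=dt)  # one spike every 4 steps
    gyro_spikes = encode_spikes([2.0] * n, gain=250.0, dt=dt)   # one spike every 2 steps
    print("accelerometer rate (Hz):", firing_rate(accel_spikes, dt))
    print("gyroscope rate (Hz):", firing_rate(gyro_spikes, dt))

    # Same spike counts, different relative timing: the coincidence term favors synchrony.
    period = 10
    a = [1 if i % period == 0 else 0 for i in range(n)]
    b_sync = list(a)
    b_offset = [1 if i % period == period // 2 else 0 for i in range(n)]
    print("synchronous:", mean_response(two_input_synapse(a, b_sync, dt)))
    print("offset:     ", mean_response(two_input_synapse(a, b_offset, dt)))
```

The product term is only one simple way to make the output timing-sensitive; a physical multi-input synaptic device would be described by its own measured response rather than by this formula.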

As an extension, we used our system to investigate the integration of sensory cues obtained from entirely different sensors. Building the sensing modules from optical-flow, vibrotactile and inertial sensors allows the system to detect multimodal signals corresponding to visual, tactile and vestibular cues. Thanks to its flexible and portable design, the system can be attached directly to human skin or communicate wirelessly with an aerial robot, and it can then perform motion-perception tasks including human activity recognition and drone flight-mode classification. Compared with unimodal conditions, in which each sensory cue is processed separately, bimodal integration of different cues improves recognition accuracy, demonstrating perceptual enhancement.
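A common textbook account of why bimodal integration can outperform either cue alone is the maximum-likelihood model of cue integration from psychophysics, which also formalizes the idea of perceptual weighting. If two cues give independent, unbiased estimates corrupted by Gaussian noise, the best combined estimate weights each cue by its inverse variance, and the combined variance is smaller than that of either cue. The sketch below is offered only for intuition under those assumptions; it is not the recognition method used in the work described here, and `combine_cues` and the example numbers are hypothetical.

```python
def combine_cues(est_a, var_a, est_b, var_b):
    """Inverse-variance (maximum-likelihood) fusion of two independent Gaussian cues.

    Returns (fused_estimate, fused_variance). Each cue is weighted by its
    reliability 1/variance, and the fused variance is smaller than either
    input variance.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    rel_a, rel_b = 1.0 / var_a, 1.0 / var_b
    w_a = rel_a / (rel_a + rel_b)
    w_b = rel_b / (rel_a + rel_b)
    fused_est = w_a * est_a + w_b * est_b
    fused_var = 1.0 / (rel_a + rel_b)
    return fused_est, fused_var


if __name__ == "__main__":
    # Hypothetical speed estimates: a noisy visual cue and a more reliable inertial cue.
    est, var = combine_cues(est_a=10.0, var_a=4.0, est_b=12.0, var_b=1.0)
    print(f"fused estimate {est:.2f}, fused variance {var:.2f}")  # 11.60, 0.80
```

Because the weights track reliability, the fused estimate lies closer to the more reliable inertial cue, and its variance (0.8) is below the better single-cue variance (1.0).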

Our system serves as a general neuromorphic platform for emulating multisensory neural processing in the brain. It is biologically plausible: it emulates sensory cue integration, realizes cognitive functions, and exhibits perceptual enhancement by multisensory integration consistent with the corresponding biological principle. We envision that our system can provide a new paradigm for combining cognitive neuromorphic intelligence with multisensory perception, with applications in sensory robotics, smart wearables, and human-interactive devices.

The original article can be found here:

Jiang, C. et al. Mammalian-brain-inspired neuromorphic motion-cognition nerve achieves cross-modal perceptual enhancement. Nat Commun 14, 1344 (2023).
