The Story Behind Our Study On BioLogicalNeuron – Bridging Biology and Artificial Neural Networks

Published in Protocols & Methods and Anatomy & Physiology

The challenge of unstable neural networks

Neural networks have revolutionized AI, powering breakthroughs from image recognition to drug discovery. Yet they remain fragile. Train them too long, and neurons can die, stuck at zero output. Feed them noisy data, and performance collapses. Let gradients grow or shrink unchecked, and they explode or vanish, stalling training.

These aren't just technical annoyances—they're fundamental limitations that prevent neural networks from matching the robustness of biological brains. A human neuron operates reliably for decades, self-regulating and self-repairing. An artificial neuron? It needs careful babysitting: dropout, batch normalization, learning rate schedules, early stopping.

We asked: What if artificial neurons could regulate themselves, like real ones?

Learning from biology: calcium as a signal

In biological neurons, calcium is everything. It's a messenger, a regulator, and—critically—a warning signal. When calcium levels rise too high, it's toxic. The neuron must act fast: scale down overactive synapses, reinforce important connections, prune the weak ones.

This homeostatic regulation isn't a backup system. It's how neurons maintain stability while learning throughout life. It's how they balance the competing demands of plasticity (learning new things) and stability (remembering old things).

Most biologically inspired neural networks focus on spiking dynamics or energy efficiency. But they miss the bigger picture: the continuous, proactive self-regulation that keeps biological neurons healthy.

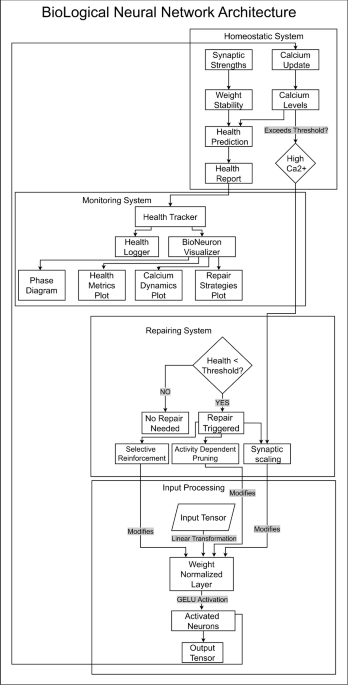

The BioLogicalNeuron layer

1. Calcium-based monitoring: Like biological neurons, our layer tracks calcium levels as a proxy for neural activity. High activity increases calcium; natural decay brings it down. When calcium exceeds safe thresholds, the neuron knows it's in danger.

2. Homeostatic health assessment: The layer continuously computes its own health based on calcium levels and synaptic stability. This isn't a post-hoc metric. It's computed in real time during training, allowing the network to detect problems before they cascade.

3. Adaptive repair mechanisms: When health degrades, the layer activates biologically inspired repair strategies:

- Activity-dependent synaptic scaling: Reduces overactive connections to prevent excitotoxicity

- Selective synaptic reinforcement: Strengthens important pathways

- Activity-dependent pruning: Removes weak, unused connections

These mechanisms don't just react to problems—they prevent them. The layer dynamically adjusts its learning rate based on health, slowing down when unstable and accelerating when healthy.
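As a rough illustration of how the three components fit together, here is a minimal Python sketch. It is not the implementation from the paper: the class `CalciumState`, the function `repair`, the health formula, and every constant (decay, gain, thresholds, scaling factors) are hypothetical choices made only to show the idea of tracking calcium, turning it into a health score, and triggering repair when health drops.

```python
from dataclasses import dataclass


@dataclass
class CalciumState:
    """Hypothetical per-neuron calcium tracker (all constants are illustrative)."""
    decay: float = 0.9       # fraction of calcium retained each step
    gain: float = 0.1        # calcium added per unit of activity
    threshold: float = 1.0   # assumed "safe" calcium ceiling
    level: float = 0.0

    def update(self, activity: float) -> float:
        # Natural decay brings calcium down; activity drives it up.
        self.level = self.decay * self.level + self.gain * abs(activity)
        return self.level

    def health(self, weight_change: float = 0.0) -> float:
        # Assumed score in (0, 1]: 1.0 means calcium is within bounds
        # and the weights are not changing (a stand-in for synaptic stability).
        calcium_stress = max(0.0, self.level - self.threshold) / self.threshold
        return 1.0 / (1.0 + calcium_stress + abs(weight_change))


def repair(weights, importance, health, *, health_floor=0.5,
           overactive_above=1.0, prune_below=1e-3, important_above=0.5,
           scale=0.9, boost=1.05):
    """Apply the three repair strategies only when health is below the floor."""
    if health >= health_floor:
        return list(weights)  # healthy: leave the weights untouched
    repaired = []
    for w, imp in zip(weights, importance):
        if abs(w) > overactive_above:
            repaired.append(w * scale)   # synaptic scaling of overactive weights
        elif abs(w) < prune_below:
            repaired.append(0.0)         # pruning of very weak weights
        elif imp >= important_above:
            repaired.append(w * boost)   # reinforcement of important weights
        else:
            repaired.append(w)
    return repaired


# Sustained high activity pushes calcium past the threshold and lowers health.
state = CalciumState()
for _ in range(50):
    state.update(20.0)
h = state.health()
print(state.level > state.threshold, h < 0.5)
print(repair([2.0, 0.0005, 0.3, 0.1], [0.1, 0.0, 0.9, 0.1], h))
```

In this sketch the `importance` scores are simply passed in; how importance is actually measured in the layer is not specified here.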

What surprised us most

We expected BioLogicalNeuron to improve stability. What surprised us was the performance boost.

On molecular datasets—notoriously noisy and high-dimensional—BioLogicalNeuron achieved state-of-the-art results:

- AIDS dataset: Surpassed previous best performance

- HIV dataset: Demonstrated high accuracy on complex predictions

- COX2 dataset: Strong results on molecular property prediction

But the real surprise was generalization. The same layer that excelled on molecular graphs also worked on:

- Graph-based datasets (Cora, CiteSeer)

- Image datasets (CIFAR-10, MNIST)

- Various neural architectures (GNNs, attention models)

This wasn't task-specific engineering. The biological principles (homeostasis, adaptive repair, health monitoring) turned out to be useful across all of these tasks and architectures.

The power of doing nothing

One insight stood out: sometimes the best intervention is strategic restraint.

When a neuron is unhealthy, conventional wisdom says: push harder, increase gradients, add more training. BioLogicalNeuron does the opposite: it slows down. It reduces the learning rate, giving the neuron time to stabilize before pushing forward.

This mirrors biological systems. Neurons don't learn maximally all the time. They modulate plasticity based on their internal state, learning when healthy and consolidating when stressed.
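To make the idea of slowing down concrete, here is a small sketch of a health-dependent learning rate in plain Python. The function names `health_scaled_lr` and `sgd_step`, the linear interpolation, and the factor bounds are assumptions for illustration, not the rule used in the paper. Health is taken to be a score between 0 (unstable) and 1 (healthy).

```python
def health_scaled_lr(base_lr, health, *, min_factor=0.1, max_factor=1.5):
    """Scale a base learning rate by health (hypothetical linear rule).

    health = 0 gives base_lr * min_factor (slow down when unstable);
    health = 1 gives base_lr * max_factor (speed up when healthy).
    """
    h = min(1.0, max(0.0, health))  # clamp health into [0, 1]
    return base_lr * (min_factor + (max_factor - min_factor) * h)


def sgd_step(weights, grads, base_lr, health):
    """One plain gradient step using the health-scaled learning rate."""
    lr = health_scaled_lr(base_lr, health)
    return [w - lr * g for w, g in zip(weights, grads)]


# The same gradient moves an unhealthy neuron much less than a healthy one.
print(sgd_step([1.0], [1.0], base_lr=0.01, health=0.0))
print(sgd_step([1.0], [1.0], base_lr=0.01, health=1.0))
```

The design choice in this sketch is that an unhealthy neuron never stops learning entirely: `min_factor` keeps a small step size so training can still recover.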

Silences in speech, pauses in learning

This reminded us of a beautiful result in neuroscience: inserting silences into compressed speech improves comprehension. Not because silences add information, but because they give brain rhythms time to resynchronize.

Similarly, BioLogicalNeuron's adaptive learning rates create "pauses" in training—not dead time, but opportunities for neural homeostasis to restore balance. The network learns more by occasionally learning less.

Beyond molecular data

While we focused on molecular datasets, the implications extend further, to any domain with:

- Noisy or incomplete data

- Long training periods

- Risk of catastrophic forgetting

- Need for continual learning

A personal journey

I conducted this research independently as a diploma student, driven by a simple fascination: Why are biological neurons so much more robust than artificial ones?

The journey from that question to a published paper was challenging. Implementing calcium dynamics, debugging homeostatic regulation, tuning repair mechanisms—it required combining insights from neuroscience, computational biology, and deep learning.

But the most rewarding moment wasn't achieving state-of-the-art results. It was watching the health monitoring plots during training: calcium levels rising and falling, repair mechanisms activating precisely when needed, the network self-correcting without human intervention.

It felt alive.

The road ahead

BioLogicalNeuron is a starting point, not a destination. Future work could explore:

- More sophisticated calcium dynamics (buffering, spatial compartments)

- Multiple timescales of homeostasis

- Interaction with other biological mechanisms (neuromodulation, metaplasticity)

- Integration with spiking neural networks

We also see potential for neuromorphic hardware. Self-regulating neurons that adapt their own learning rates could be more energy-efficient than externally controlled systems.

Why this matters

The gap between biological and artificial intelligence remains vast. Biological systems are robust, adaptive, energy-efficient, and capable of lifelong learning. Artificial systems are powerful but brittle.

By translating biological principles into computational mechanisms, we're not just improving neural networks—we're understanding what makes biological intelligence special. Each biologically inspired innovation brings us closer to artificial systems that are as resilient and adaptive as natural ones.

BioLogicalNeuron shows that the brain's self-regulatory mechanisms aren't just biological curiosities—they're computational principles that can transform how we build AI.

We hope this work inspires further research at the intersection of neuroscience and machine learning, and we welcome collaborations from researchers across disciplines.

🔗 Read the full article: https://doi.org/10.1038/s41598-025-09114-8
