The story behind our study on rhythm-based speech processing

Published in Neuroscience and Protocols & Methods

The puzzle of robust speech comprehension

People talk at different speeds, with different accents, in noisy cafes, and on the phone with poor connections. Yet, we rarely notice the effort it takes to understand others. How does the brain succeed where even state-of-the-art speech recognition algorithms often fail, especially under difficult conditions?

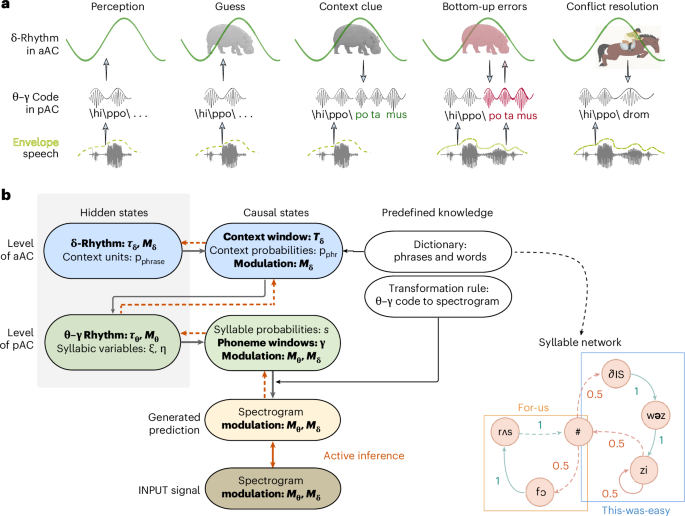

One clue lies in time: speech is organized into natural rhythms. Phonemes last a few dozen milliseconds, syllables about 200 milliseconds, and phrases roughly a second. Our brains seem tuned to these timescales, suggesting that neural rhythms may act as “temporal windows” that demultiplex the continuous sound stream, separating it into units at each of these timescales.

From rhythms to predictions

Decades of experiments show that the brain’s activity synchronizes with speech rhythms. For instance:

- Theta rhythm (4–8 Hz) aligns with syllables,

- Gamma rhythm (30–60 Hz) captures rapid phonemic details,

- Delta rhythm (below 4 Hz) follows phrases and prosody.
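The match between speech units and these bands is simple arithmetic: a unit lasting d seconds recurs roughly 1/d times per second. The short Python sketch below makes that explicit using the durations given earlier. Taking 25 ms as a representative phoneme duration is our assumption within "a few dozen milliseconds", and the band edges are the ones listed above.

```python
# Representative unit durations in seconds. The phoneme value (25 ms) is an
# assumption picked from "a few dozen milliseconds"; the others follow the text.
UNIT_DURATIONS_S = {"phoneme": 0.025, "syllable": 0.200, "phrase": 1.0}

# Band edges in Hz from the list above (lower edge inclusive, upper exclusive).
BANDS_HZ = {"delta": (0.0, 4.0), "theta": (4.0, 8.0), "gamma": (30.0, 60.0)}


def rate_hz(duration_s: float) -> float:
    """A unit lasting duration_s seconds recurs about 1 / duration_s times per second."""
    return 1.0 / duration_s


def band_for(freq_hz: float) -> str:
    """Name of the band that contains freq_hz, or 'none' if it falls between bands."""
    for name, (low, high) in BANDS_HZ.items():
        if low <= freq_hz < high:
            return name
    return "none"


if __name__ == "__main__":
    for unit, duration in UNIT_DURATIONS_S.items():
        f = rate_hz(duration)
        print(f"{unit:>8}: {duration * 1000:5.0f} ms -> {f:4.1f} Hz ({band_for(f)})")
```

Running it maps syllables to 5 Hz (theta) and phrases to 1 Hz (delta). Gamma's 30 to 60 Hz range corresponds to windows of roughly 17 to 33 ms, so it may be better read as the sampling resolution for phonemic detail than as a literal phoneme rate.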

But synchronization alone does not explain comprehension. Speech understanding also relies on prediction. We don’t just process sounds as they arrive; we constantly anticipate what will be said next. This predictive ability helps us to recover the meaning when speech is noisy, fast, or interrupted.

Our study set out to combine these two insights—neural rhythms and predictive coding—into a single computational framework.

The BRyBI model

We developed the Brain-Rhythm-Based Inference model (BRyBI). At its core, BRyBI simulates the auditory cortex as a two-level system:

- At the lower level, coupled theta and gamma rhythms parse the incoming sound into syllables and phonemes.

- At the higher level, the delta rhythm builds a contextual “guess” of words and phrases, which then shapes perception at the lower level.
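Theta-gamma coupling is often illustrated with a toy phase-amplitude coupled signal: gamma bursts ride on a particular phase of the slower theta cycle, so each theta cycle (a syllable-sized window) contains a handful of gamma cycles (phoneme-sized slots). The Python sketch below builds such a signal with NumPy. It is a generic textbook illustration, not BRyBI's equations; the 5 Hz and 40 Hz frequencies and the raised-cosine envelope are assumptions.

```python
import numpy as np


def theta_gamma_signal(duration_s=1.0, fs=1000, f_theta=5.0, f_gamma=40.0):
    """Toy phase-amplitude coupling: gamma amplitude follows the theta phase.

    Returns time (s), the theta wave, the gamma envelope and the coupled gamma wave.
    """
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    theta = np.cos(2 * np.pi * f_theta * t)
    # Raised-cosine envelope in [0, 1]: gamma is strongest at the theta peak
    # and silent at the theta trough (an illustrative choice).
    envelope = 0.5 * (1.0 + theta)
    gamma = envelope * np.cos(2 * np.pi * f_gamma * t)
    return t, theta, envelope, gamma


def gamma_slots_per_theta_cycle(f_theta=5.0, f_gamma=40.0):
    """Number of gamma cycles that fit in one theta cycle."""
    return f_gamma / f_theta


if __name__ == "__main__":
    t, theta, envelope, gamma = theta_gamma_signal()
    print("samples:", t.size)
    print("gamma cycles per theta cycle:", gamma_slots_per_theta_cycle())
    # Mean gamma power near theta peaks vs. near theta troughs.
    peak, trough = theta > 0.9, theta < -0.9
    print("power at theta peaks:", np.mean(gamma[peak] ** 2))
    print("power at theta troughs:", np.mean(gamma[trough] ** 2))
```

With these defaults each 200 ms theta cycle holds eight gamma cycles, and gamma power is concentrated near theta peaks. In the model the coupling serves to parse incoming sound rather than to synthesize a signal, so this snippet is only meant to make the nested timing concrete.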

In other words, BRyBI captures the dialogue between bottom-up sensory input and top-down contextual predictions, orchestrated by rhythmic brain activity. This structure allowed us to explain a range of experimental puzzles: why speech comprehension fails beyond certain speeds, why inserting silences into compressed speech can restore intelligibility, and why brain responses vary depending on predictability and surprise.

Silences that help us hear

One of the most striking phenomena in speech research is that adding silences can make speech more intelligible.

Imagine someone speaking at triple speed: words blur, and comprehension collapses. But if we insert tiny silent gaps between chunks of fast speech, understanding suddenly improves—even though no new information is added.

BRyBI reproduces this effect. The model shows that silences allow brain rhythms to “catch up” and re-establish the natural timing of syllables and phrases. In particular, delta rhythm at the phrase level appears crucial: when its timing is restored, comprehension recovers. This finding challenges the idea that only syllabic timing (theta rhythm) matters. Instead, phrase-level rhythms (delta) provide the contextual glue that holds meaning together.
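The timing argument can be checked with back-of-the-envelope numbers. Triple-speed speech compresses a 200 ms syllable to about 67 ms, a syllabic rate near 15 Hz, well above the 4 to 8 Hz theta range. Cutting the compressed signal into chunks and padding each with silence lowers the rate at which chunks arrive without adding any new signal. The Python sketch below, using NumPy, illustrates that stimulus manipulation; the function names and the chunk and gap durations are hypothetical choices for illustration, not parameters taken from the study.

```python
import numpy as np


def compressed_syllable_rate_hz(syllable_s=0.2, speedup=3.0):
    """Syllables per second after time-compressing speech by `speedup`."""
    return speedup / syllable_s


def chunk_rate_hz(chunk_s, gap_s):
    """Rate at which chunk-plus-silence packets arrive."""
    return 1.0 / (chunk_s + gap_s)


def insert_silences(signal, fs, chunk_s, gap_s):
    """Split `signal` into chunks of chunk_s seconds and follow each with gap_s of zeros.

    Only zeros are added, so the original samples are preserved in order.
    """
    chunk = int(round(chunk_s * fs))
    gap = np.zeros(int(round(gap_s * fs)), dtype=signal.dtype)
    pieces = []
    for start in range(0, len(signal), chunk):
        pieces.append(signal[start:start + chunk])
        pieces.append(gap)
    return np.concatenate(pieces)


if __name__ == "__main__":
    fs = 16_000
    compressed = np.random.default_rng(0).standard_normal(fs)  # 1 s stand-in for compressed speech
    syllable_s = 0.2
    chunk_s = syllable_s / 3          # one compressed syllable per chunk (assumption)
    gap_s = syllable_s - chunk_s      # pad each chunk back to the natural 200 ms period
    packaged = insert_silences(compressed, fs, chunk_s, gap_s)
    print("compressed syllable rate:", compressed_syllable_rate_hz(syllable_s, 3.0), "Hz")
    print("packaged chunk rate:", chunk_rate_hz(chunk_s, gap_s), "Hz")
    print("duration:", len(compressed) / fs, "s ->", len(packaged) / fs, "s")
```

With one compressed syllable per chunk and the rest of a 200 ms period filled with silence, chunks again arrive at about 5 Hz, inside the theta range, while every original sample is kept. Whether comprehension then recovers, and how much phrase-level delta timing contributes, is what the model itself addresses; this snippet only reproduces the arithmetic of the stimulus.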

Uncertainty, surprise, and error correction

Our model also captures how the brain handles uncertainty. At the start of a sentence, when many interpretations are possible, bottom-up signals dominate. As context builds, top-down predictions guide interpretation, reducing uncertainty.
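One common way to formalize this shift, in the spirit of predictive coding, is precision weighting: the estimate is a weighted average of the contextual prediction and the sensory evidence, with weights proportional to their reliabilities (precisions, or inverse variances). The Python sketch below uses a single Gaussian variable for simplicity. It is a generic illustration, not BRyBI's inference scheme; the starting precisions and observation values are arbitrary.

```python
def precision_weighted_update(prior_mean, prior_precision, obs, obs_precision):
    """Combine a Gaussian prior (context) with a Gaussian observation (sensory input).

    Returns the posterior mean, posterior precision and the weight on the observation.
    """
    post_precision = prior_precision + obs_precision
    w_bottom_up = obs_precision / post_precision
    post_mean = prior_mean + w_bottom_up * (obs - prior_mean)
    return post_mean, post_precision, w_bottom_up


def run_sentence(observations, prior_mean=0.0, prior_precision=0.1, obs_precision=1.0):
    """Feed observations one at a time; each posterior becomes the next prior."""
    mean, precision = prior_mean, prior_precision
    weights = []
    for obs in observations:
        mean, precision, w = precision_weighted_update(mean, precision, obs, obs_precision)
        weights.append(w)
    return mean, weights


if __name__ == "__main__":
    # Arbitrary stand-in values for successive sensory samples.
    final_mean, weights = run_sentence([2.0, 1.8, 2.2, 1.9, 2.1])
    for step, w in enumerate(weights, start=1):
        print(f"step {step}: weight on bottom-up input = {w:.2f}")
    print(f"final estimate: {final_mean:.2f}")
```

The weight on bottom-up input starts near 0.9 and falls to about 0.2 by the fifth step, mirroring the move from sensory-driven to context-driven interpretation as a sentence unfolds.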

When predictions fail, for example when we mishear a word, the model generates an “error signal” that forces a reset of context. This mirrors neural signatures of surprise seen in brain recordings, suggesting that BRyBI may help explain error-related brain responses during speech.
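A simple way to picture such an error signal is surprisal, the negative log probability of what was actually heard under the current prediction: when it exceeds a threshold, the accumulated context is discarded and rebuilt. The Python sketch below implements that rule. The threshold, the word probabilities and the reset policy are all hypothetical illustrations, not BRyBI's mechanism or measured values.

```python
import math


def surprisal_bits(p):
    """Surprisal of an event with predicted probability p, in bits."""
    return -math.log2(p)


def track_context(words_with_probs, threshold_bits=5.0):
    """Accumulate context word by word, resetting it after a large prediction error.

    words_with_probs: (word, probability the current context assigned to that word).
    Returns the final context and the positions where a reset occurred.
    """
    context, resets = [], []
    for i, (word, p) in enumerate(words_with_probs):
        if surprisal_bits(p) > threshold_bits:
            resets.append(i)
            context = []  # drop the context that produced the failed prediction
        context.append(word)
    return context, resets


if __name__ == "__main__":
    # Hypothetical predicted probabilities; the last word is highly unexpected.
    sentence = [("the", 0.5), ("cat", 0.2), ("sat", 0.3), ("on", 0.4), ("the", 0.6), ("piano", 0.01)]
    context, resets = track_context(sentence)
    print("resets at positions:", resets)
    print("context after processing:", context)
```

Here only the final word crosses the 5-bit threshold (its surprisal is about 6.6 bits), so the context is cleared and restarted from that word.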

The road ahead

BRyBI is not the final word on speech perception. It simplifies many aspects, such as semantic meaning and syntax. Future versions may incorporate richer linguistic structures, more biologically realistic rhythms, and even spiking neural network implementations.

But as a proof of concept, BRyBI demonstrates the power of combining rhythms with predictions. It shows how silence can be as important as sound and how the brain’s internal clocks help us navigate the rapid, noisy stream of human communication.

A personal note

Working on this project was both challenging and rewarding. We often found ourselves asking: Why does the brain bother with rhythms at all? Couldn’t it just process speech continuously, without these discrete windows?

The answer, we believe, is efficiency and robustness. By rhythmically structuring perception, the brain balances detail with context and bottom-up evidence with top-down expectation. This rhythmic orchestra may be the key to understanding not only speech but cognition more broadly.

We hope BRyBI sparks further research into the role of brain rhythms in perception and inspires collaborations across neuroscience, linguistics, and artificial intelligence.

Topic: Nature Computational Science
