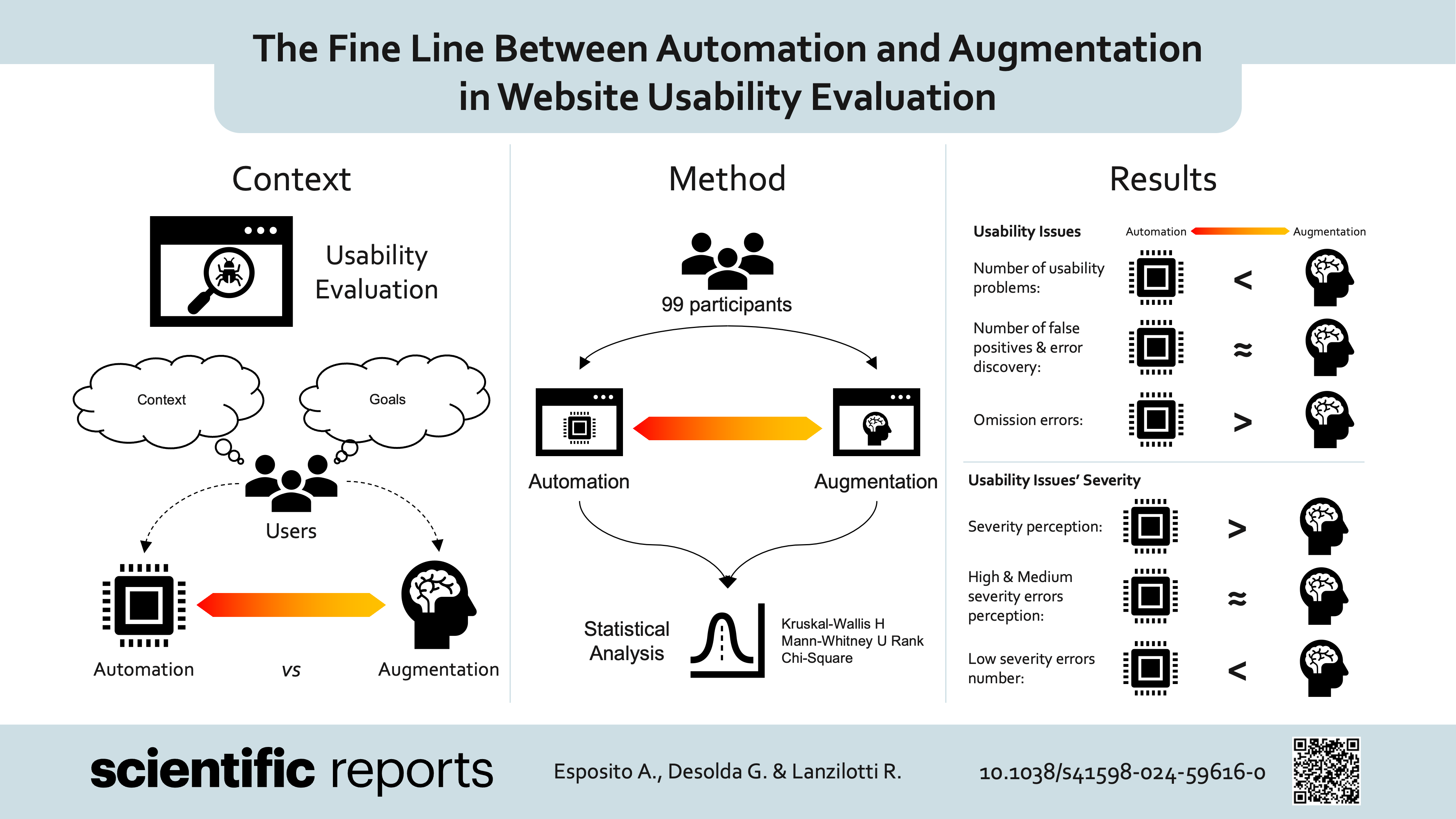

To Automate or To Augment Humans? That is the Question for AI, and The Answer Depends on Users’ Requirements

Published in Computational Sciences

Introduction

In an age where technology reigns supreme, the debate surrounding the integration of artificial intelligence (AI) into various facets of our lives rages on. From streamlining processes to enhancing productivity, AI holds immense promise. But what about its impact on the very essence of our humanity? Are we at risk of being sidelined by the very creations we’ve engineered? Our recent research delved into this question, focusing on the delicate balance between automation and augmentation in the realm of website usability evaluation.

We set out to explore the interplay between usability evaluators and AI tools, seeking to understand how varying levels of automation impact the ability of evaluators to identify usability issues. Our findings shed light on a nuanced landscape where automation isn’t merely a threat to human involvement but can actually enhance it.

Introducing “SERENE”

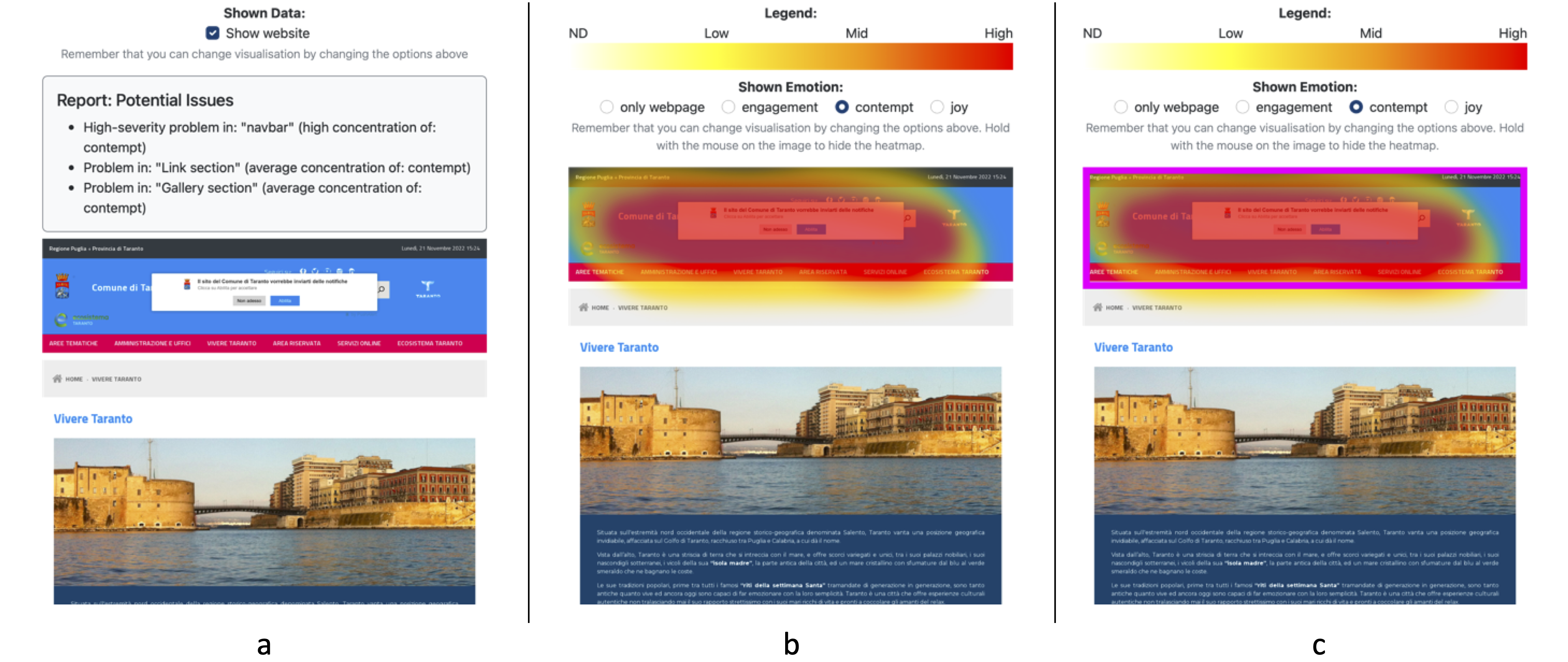

We relied on the innovative SERENE platform to explore the dynamic interplay between human evaluators and AI assistance in website usability evaluation. This tool represents a convergence of two pivotal trends in usability evaluation: the measurement of user emotions and the detection of actual usability problems.

Over recent years, there has been a surge in solutions aimed at automating aspects of usability evaluation. Some rely on affective computing techniques to recognize emotions without linking them to usability, while others provide static analyzers that may not accurately pinpoint usability problems.

Unlike its predecessors, SERENE bridges the gap by measuring the emotions experienced by website visitors during their interactions without requiring additional software or hardware on their devices. SERENE privately records mouse and keyboard activity and feeds it to an AI model based on neural networks, which predicts user emotions moment by moment. From these predictions, SERENE estimates the average emotions experienced during interaction with each user interface (UI) element.

To detect usability problems, SERENE draws on studies linking perceived usability with emotional responses and operates on the premise that poorly designed UI elements evoke negative emotions in users. It flags a potential usability problem wherever the negative emotions associated with a UI element exceed a certain threshold.
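The thresholding idea described above can be sketched in a few lines of Python. This is a minimal illustration, not SERENE's actual implementation: the input format (pairs of UI element and negative-emotion score), the element names, the function name `flag_elements`, and the threshold of 0.6 are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def flag_elements(predictions, threshold=0.6):
    """Average the negative-emotion scores per UI element and keep the
    elements whose average exceeds the threshold.

    `predictions` is a hypothetical list of (element_id, score) pairs,
    one per predicted moment, with scores assumed to lie in [0, 1].
    """
    scores = defaultdict(list)
    for element, score in predictions:
        scores[element].append(score)
    averages = {element: mean(values) for element, values in scores.items()}
    return {el: avg for el, avg in averages.items() if avg > threshold}


# Made-up sample data: moment-by-moment scores for three UI elements.
samples = [
    ("search-box", 0.8), ("search-box", 0.7),
    ("nav-menu", 0.2), ("nav-menu", 0.3),
    ("checkout-button", 0.9), ("checkout-button", 0.5),
]
flagged = flag_elements(samples, threshold=0.6)
print(sorted(flagged))
```

In this toy data, the search box and the checkout button would be suggested as potential usability problems, while the navigation menu stays below the threshold.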

Our Research

In our study, we compared three approaches: full automation, full augmentation, and a middle-ground condition of partial automation.

- Full Automation: This visualization presents users with a textual list of UI elements potentially affected by automatically detected usability problems for each webpage. Additionally, the related website is displayed below the list.

- Full Augmentation: In this visualization, a heatmap is overlaid on each webpage, accompanied by a menu allowing evaluators to switch between three key emotions: contempt, joy, and engagement. This approach empowers evaluators to interpret emotion concentration on UI elements freely.

- Middle-ground: This visualization is a hybrid of the previous ones. As in full augmentation, a heatmap is overlaid on each webpage, allowing evaluators to freely interpret emotions on the UI. In addition, automatically detected usability problems are highlighted by boxes that signal their presence to evaluators.

To conduct our research, we designed a survey-based experiment with usability evaluators as participants. Each participant evaluated two distinct websites, supported by one of three SERENE variants offering different levels of automation. The experiment followed a between-subjects design with the level of automation as the independent variable, so each participant was exposed to only one condition.
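A between-subjects allocation of this kind can be sketched as follows. The study description does not say how participants were allocated, so the balanced random assignment shown here, the condition labels, and the participant IDs are assumptions for illustration only.

```python
import random

# Hypothetical labels for the three levels of automation.
CONDITIONS = ["full_automation", "middle_ground", "full_augmentation"]


def assign_conditions(participant_ids, seed=0):
    """Assign each participant to exactly one condition.

    Participants are shuffled with a seeded generator and then dealt
    round-robin across the conditions, which keeps group sizes balanced.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {
        pid: CONDITIONS[i % len(CONDITIONS)]
        for i, pid in enumerate(shuffled)
    }


# Nine made-up participants, three per condition.
assignment = assign_conditions([f"P{n:02d}" for n in range(1, 10)])
for pid in sorted(assignment):
    print(pid, assignment[pid])
```

Because every participant appears once in the resulting mapping, nobody sees more than one visualization, which is the defining property of a between-subjects design.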

The primary aim of our study was to address the research question: “How does the level of automation affect the identification of usability problems?” To answer this question, we defined eight metrics to quantify the evaluators’ correct decisions, errors, and biases. More precisely, four metrics deal with how evaluators perceive AI suggestions and decisions, while the other four deal with the perceived severity of the problems.

Results

The results were intriguing. A fully automated approach indeed proved adept at identifying significant usability problems, particularly those of medium to high severity. This underscores the efficiency and effectiveness of AI in specific contexts. What truly caught our attention was the potential of augmented approaches: by blending human expertise with AI assistance, we discovered a sweet spot where low-severity usability issues were more readily detected.

Conclusions

So, what does this mean for the future of website usability evaluation?

It’s not a question of humans versus machines but rather a harmonious fusion of both. In other words, the Human-Centred AI framework makes it possible to blend human decision-making and expertise with the capabilities of AI. Our study provides tangible evidence of the need to strike a balance between humans and AI, harnessing the best of both worlds.

In essence, our research shows that we must embrace the symbiotic relationship between humanity and AI without forgetting the need for human-centered approaches that define the requirements and goals of the system. It is these requirements that tip the scale between automation and augmentation.

Scientific Reports

An open access journal publishing original research from across all areas of the natural sciences, psychology, medicine and engineering.
