Towards better forecasts of the next big earthquake

Published in Earth & Environment

As sadly demonstrated by recent events in Türkiye and Syria, Morocco, and Afghanistan, earthquakes can cause catastrophic impacts to people, infrastructure and the natural environment. While there are many social and economic factors that contribute to an earthquake becoming a disaster, earthquake scientists aim to quantify the expected hazard to ensure efforts to reduce risk are most effectively targeted. Seismic hazard assessments are constructed using statistical models of the magnitude, frequency and location of earthquakes, combined with models of the ground shaking expected at particular sites from these earthquakes.

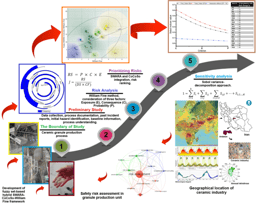

A key component of seismic hazard assessment is characterising the faults on which future earthquakes are expected to occur. We want to know ‘how big’ an earthquake each fault could produce, and ‘how often’ such large earthquakes occur. Because the largest earthquakes on any particular fault tend to recur at intervals that are long compared with the span of instrumental and historical records, geological information about past earthquakes (paleoseismic data) is critical to understanding future threats (Figure 1).

When the timing of several previous earthquakes on a fault is known, statistical models can be fitted to the distribution of inter-event times (the intervals between successive earthquakes). While time-independent models (Poisson processes) are most commonly used for hazard assessment, previous studies have also used a variety of time-dependent renewal models, such as the lognormal, Weibull, Brownian Passage Time and gamma distributions. If the timing of the most recent earthquake is known, time-dependent models can forecast the probability of a future earthquake on the same fault within a given timeframe while accounting for how much time has elapsed since that event; a Poisson model, by contrast, gives the same probability regardless of elapsed time. Time-dependent models might be expected to perform better, as they more closely reflect our understanding of the earthquake cycle based on elastic rebound theory, in which strain gradually re-accumulates on a fault after each rupture. Furthermore, if we know which faults are more likely to rupture within some future timeframe (e.g., the next 50 years), then limited resources can be prioritised more effectively for risk reduction.
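To make the contrast between time-independent and time-dependent forecasts concrete, here is a minimal Python sketch (standard library only) comparing a Poisson forecast with a lognormal renewal forecast conditioned on the time elapsed since the last earthquake. The conditional probability is P = (F(t + Δt) − F(t)) / (1 − F(t)), where F is the recurrence-interval CDF, t the elapsed time and Δt the forecast window. The mean recurrence interval, coefficient of variation, elapsed times and helper names are hypothetical choices for illustration; Weibull, gamma or Brownian Passage Time models would plug into the same formula through their own CDFs.

```python
import math


def poisson_probability(mean_recurrence: float, window: float) -> float:
    """P(at least one event in `window` years) under a Poisson model.

    The result does not depend on the time since the last event.
    """
    return 1.0 - math.exp(-window / mean_recurrence)


def lognormal_params(mean_recurrence: float, cov: float) -> tuple[float, float]:
    """Convert a mean recurrence interval and coefficient of variation into
    the (mu, sigma) parameters of the underlying normal distribution."""
    sigma = math.sqrt(math.log(1.0 + cov**2))
    mu = math.log(mean_recurrence) - 0.5 * sigma**2
    return mu, sigma


def lognormal_cdf(t: float, mu: float, sigma: float) -> float:
    """Lognormal CDF, written with math.erf to avoid external dependencies."""
    if t <= 0.0:
        return 0.0
    return 0.5 * (1.0 + math.erf((math.log(t) - mu) / (sigma * math.sqrt(2.0))))


def renewal_probability(mu: float, sigma: float, elapsed: float, window: float) -> float:
    """P(event in the next `window` years | no event in the `elapsed` years
    since the most recent one), for a lognormal renewal model."""
    f_now = lognormal_cdf(elapsed, mu, sigma)
    f_later = lognormal_cdf(elapsed + window, mu, sigma)
    return (f_later - f_now) / (1.0 - f_now)


if __name__ == "__main__":
    # Hypothetical fault: mean recurrence 1000 yr, coefficient of variation 0.5.
    mu, sigma = lognormal_params(1000.0, 0.5)
    print(f"Poisson, any elapsed time: {poisson_probability(1000.0, 50.0):.3f}")
    for elapsed in (200.0, 900.0):
        p = renewal_probability(mu, sigma, elapsed, 50.0)
        print(f"Lognormal, {elapsed:.0f} yr elapsed: {p:.3f}")
```

With these illustrative parameters, the renewal forecast falls well below the Poisson value shortly after an earthquake and rises above it once the elapsed time approaches the mean recurrence interval, which is the behaviour that makes time-dependent models attractive for prioritising faults.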

While conceptually simple, this is challenging in practice because geological data on the timing of past events are often imprecise. For instance, the timing of a past earthquake can rarely be dated directly; instead, samples of material deposited before and after an event are dated to bracket the timing of the earthquake (Figure 1). This, combined with the inherent uncertainties of available dating methods, means that event timings are often subject to large uncertainties. Moreover, most paleoseismic records are limited to the most recent few earthquakes on a fault. The destructive nature of earthquakes means that evidence of previous events may be obscured, buried or eroded, and older events may simply be impossible to date.
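The bracketing approach can be illustrated with a small Monte Carlo sketch in Python: each event's age is drawn from between the ages of the samples deposited before and after it, and the draws are converted into inter-event times. The bracket values and function names are hypothetical, and the uniform distribution within each bracket is a simplifying assumption; real studies typically work with full age probability distributions (for example, calibrated radiocarbon ages) rather than hard bounds.

```python
import random
import statistics

# Hypothetical bracketing ages in years before present, oldest event first.
# Each event occurred after its `max_age` sample was deposited and before
# its `min_age` sample was deposited.
BRACKETS = [
    (5200.0, 4300.0),  # (max_age, min_age) for event 1
    (3100.0, 2600.0),  # event 2
    (1500.0, 900.0),   # event 3 (most recent)
]


def sample_event_ages(brackets, rng, max_tries=10_000):
    """Draw one plausible event history, uniform within each bracket,
    rejecting draws that break stratigraphic order (older events first)."""
    for _ in range(max_tries):
        ages = [rng.uniform(min_age, max_age) for max_age, min_age in brackets]
        if all(older > younger for older, younger in zip(ages, ages[1:])):
            return ages
    raise ValueError("brackets appear inconsistent with event ordering")


def sample_inter_event_times(brackets, n_samples=10_000, seed=0):
    """Monte Carlo samples of the inter-event times implied by the brackets."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        ages = sample_event_ages(brackets, rng)
        samples.append([older - younger for older, younger in zip(ages, ages[1:])])
    return samples


if __name__ == "__main__":
    samples = sample_inter_event_times(BRACKETS)
    for i in range(len(BRACKETS) - 1):
        times = [s[i] for s in samples]
        print(f"interval {i + 1}: mean {statistics.mean(times):.0f} yr, "
              f"range {min(times):.0f}-{max(times):.0f} yr")
```

Even with only three hypothetical events, each interval spans more than a thousand years of plausible values, which illustrates why short, poorly dated records constrain recurrence models so weakly.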

In addition to errors relating to data collection, uncertainties arise from model selection. Partly because of the characteristics of the data, we do not know which statistical model is best for earthquake forecasting. Simply put, short records of only a few past earthquakes with large dating uncertainties lead not only to uncertainty in the fitted model parameters, but also to epistemic uncertainty about which model to use in the first place.

To address these challenges, we compiled geological records of earthquakes for 93 fault segments worldwide (Wang et al. 2024). We hoped that the uncertainties inherent in any single earthquake record might be overcome by considering data from many faults. Using Bayesian methods, we then fitted four commonly used time-dependent models (lognormal, Weibull, Brownian Passage Time and gamma) and the time-independent Poisson process to the data, including measurement errors, for each fault. We measured the performance of each model individually, and then used the models' relative performance to provide model-averaged forecasts of the probability of an earthquake occurring on each fault in the next 50 years (Figure 2).
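Model averaging of this kind can be sketched in a few lines of Python. The sketch assumes each model has already been fitted and summarised by a log score (for example, a log marginal likelihood or another log predictive score) and by a 50-year probability for the fault, and it takes weights proportional to the exponentiated scores. This weighting rule, the model labels used as dictionary keys, the numbers and the function names are illustrative assumptions, not necessarily the scheme used in Wang et al. (2024).

```python
import math


def model_weights(log_scores: dict[str, float]) -> dict[str, float]:
    """Turn per-model log scores into weights summing to 1.

    Uses the log-sum-exp trick: subtracting the maximum score before
    exponentiating avoids overflow/underflow without changing the weights.
    """
    best = max(log_scores.values())
    unnormalised = {name: math.exp(s - best) for name, s in log_scores.items()}
    total = sum(unnormalised.values())
    return {name: w / total for name, w in unnormalised.items()}


def averaged_probability(probabilities: dict[str, float],
                         weights: dict[str, float]) -> float:
    """Weighted mean of the per-model forecast probabilities."""
    return sum(weights[name] * p for name, p in probabilities.items())


if __name__ == "__main__":
    # Hypothetical scores and 50-year probabilities for a single fault
    # ("bpt" = Brownian Passage Time).
    log_scores = {"poisson": -42.0, "lognormal": -39.5, "weibull": -39.8,
                  "bpt": -39.6, "gamma": -40.1}
    probs_50yr = {"poisson": 0.049, "lognormal": 0.092, "weibull": 0.085,
                  "bpt": 0.090, "gamma": 0.080}
    weights = model_weights(log_scores)
    for name, w in weights.items():
        print(f"{name:>9}: weight {w:.2f}")
    p_avg = averaged_probability(probs_50yr, weights)
    print(f"model-averaged 50-yr probability: {p_avg:.3f}")
```

Because the averaged probability is a weighted mean, it always lies between the smallest and largest single-model forecasts, and a model that fits less well still contributes in proportion to its weight rather than being discarded outright.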

Surprisingly, we did not find any single model that consistently performed best across all faults. While time-dependent models were overwhelmingly favoured over the time-independent model, it appears that there is no universal model that describes the recurrence of large earthquakes on faults. Given this uncertainty, we recommend using model ensembles to forecast earthquakes from paleoseismic records with few data points and large measurement errors.

References

Griffin, J.D., Stirling, M.W., Barrell, D.J., van den Berg, E.J., Todd, E.K., Nicolls, R. and Wang, N., 2022. Paleoseismology of the Hyde Fault, Otago, New Zealand. New Zealand Journal of Geology and Geophysics, 65(4), pp.613-637.

Wang, T., Griffin, J.D., Brenna, M. et al., 2024. Earthquake forecasting from paleoseismic records. Nature Communications, 15, 1944. https://doi.org/10.1038/s41467-024-46258-z

