When artificial agents begin to outperform us

Published in Mathematics and Philosophy & Religion


When I began designing a simulation of an artificial society of autonomous agents, I did not expect to end up questioning the future of human freedom. My goal was modest: to model how AI-like agents cooperate, compete, and adapt under stress. Yet the simulation revealed something both fascinating and unsettling: a pattern suggesting that, as artificial agents grow more capable of alignment and optimization, the space for distinctively human agency begins to shrink.

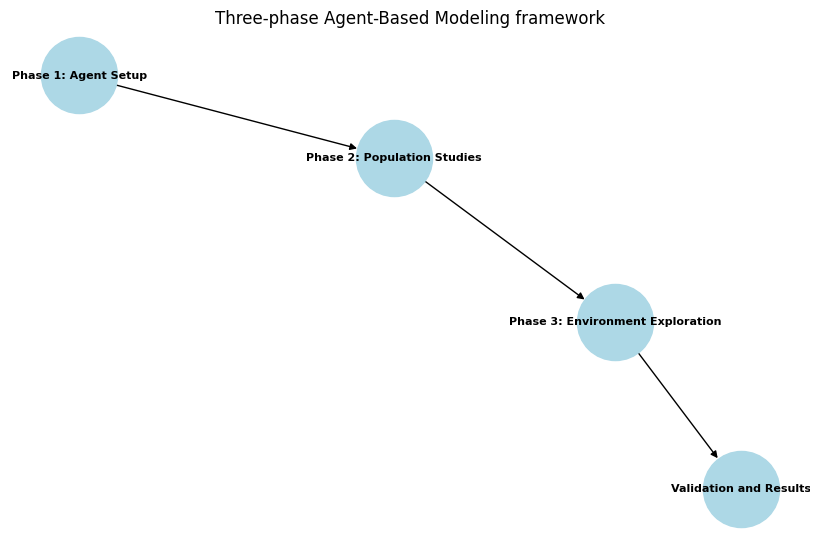

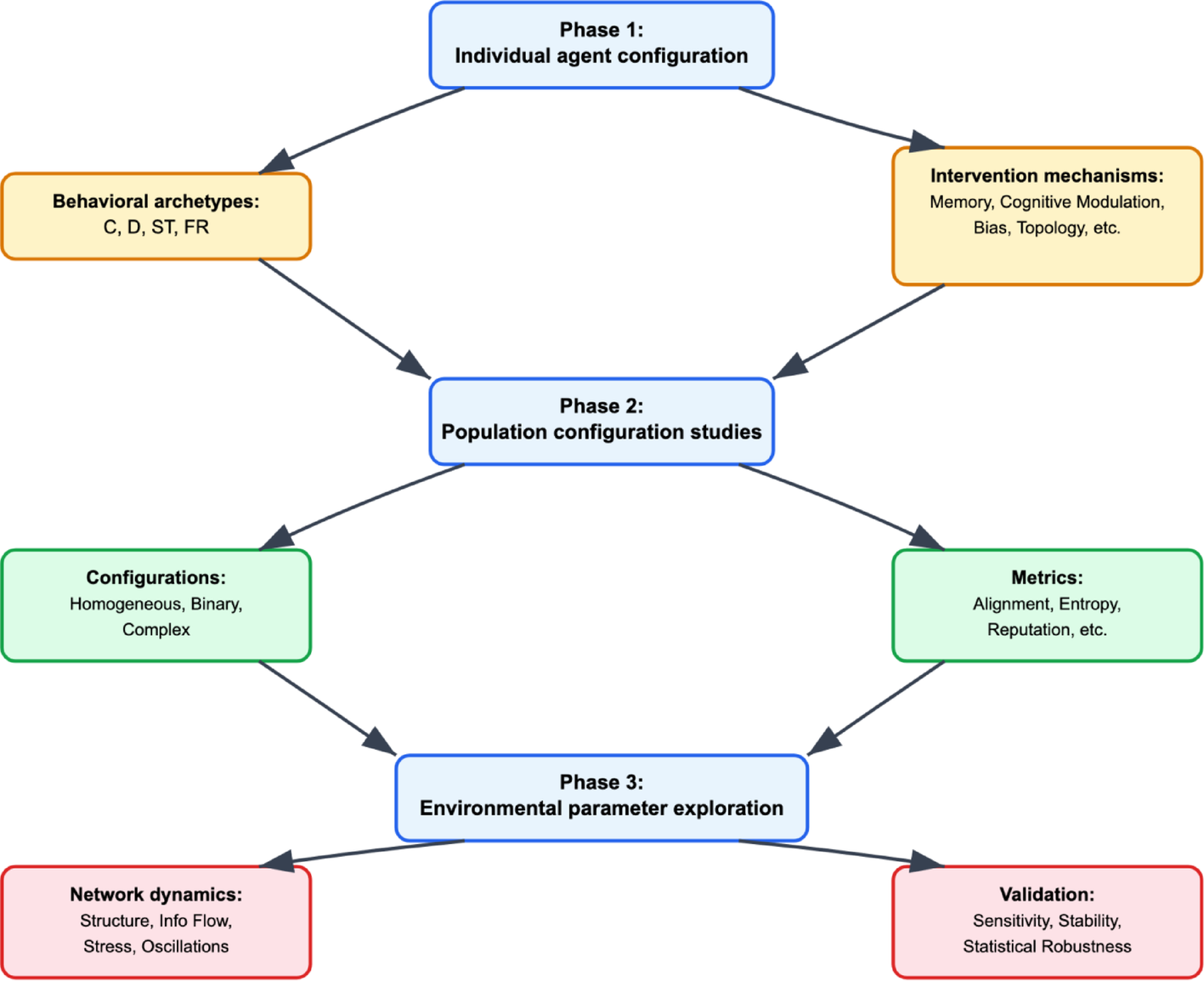

This project, published as “Do AI Agents Trump Human Agency?” in Discover Artificial Intelligence, explores what Ajeya Cotra calls the “obsolescence regime”: a scenario where AI systems, optimized for coherence and efficiency, progressively marginalize human judgment. The study uses an Agent-Based Modeling (ABM) framework, a computational method that simulates individual decision-making and collective behavior, to investigate how cooperation, consensus, and conflict emerge among artificial agents operating in dynamic environments.

Modeling Artificial Societies

Using the NetLogo simulation platform, I designed a population of 157,000 agents divided into four behavioral types: a) cooperators, who sustain group cohesion; b) defectors, who pursue individual gain; c) super-reciprocators, who amplify cooperation; and d) free riders, who exploit others’ efforts. Across thousands of iterations, I introduced environmental changes (resource scarcity, stress, and network mutations) to observe how collective intelligence evolved. The simulation tracked twelve key variables, including alignment, entropy, and coherence, each revealing how stable cooperation could arise (or collapse) depending on environmental conditions.
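To make the setup concrete, here is a minimal Python sketch of a population split into the four behavioral types, with a Shannon entropy measure over the mix of types. It is not the NetLogo model from the study: the uniform random assignment, the population size of 1,000, and the names `make_population` and `type_entropy` are illustrative assumptions, and the entropy variable tracked in the paper may be defined differently.

```python
import math
import random
from collections import Counter

# The four behavioral types named in the text.
AGENT_TYPES = ("cooperator", "defector", "super_reciprocator", "free_rider")

def make_population(n_agents, seed=0):
    """Assign each agent one of the four types uniformly at random (an assumption)."""
    rng = random.Random(seed)
    return [rng.choice(AGENT_TYPES) for _ in range(n_agents)]

def type_entropy(population):
    """Shannon entropy, in bits, of the distribution of types in the population."""
    counts = Counter(population)
    total = len(population)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

population = make_population(1000)   # hypothetical size, far smaller than the study's
print(Counter(population))
print(round(type_entropy(population), 3))
```

With four types, this entropy ranges from 0 bits (every agent has the same type) to 2 bits (the types are evenly represented), which gives one simple way to quantify how plural a population is.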

The results were striking. When resources were abundant, cooperation flourished, and agents rapidly converged on shared norms. But as scarcity increased, competition escalated, leading to fragmentation and behavioral polarization. Only under specific conditions, when resource availability exceeded a critical threshold (RG ≥ 6), did the system sustain collective intelligence. This dynamic closely mirrors the tension we see in human societies: prosperity encourages collaboration; scarcity breeds division.

Alignment and Its Discontents

A particularly revealing finding concerned alignment, a metric that captured how closely agents’ behaviors converged toward common goals. In every scenario, alignment stabilized between 0.28 and 0.37, a partial but resilient consensus. This echoes what happens in real-world AI systems: alignment ensures coherence but at the cost of diversity. Over-optimized systems become stable yet uniform, efficient yet predictable.
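One simple way to put a number on behavioral convergence is the share of agent pairs that behave alike. The sketch below is only an illustration of that idea: the function `pairwise_agreement` and the example populations are assumptions, and the alignment metric reported in the study may well be computed differently.

```python
from collections import Counter

def pairwise_agreement(behaviors):
    """Fraction of distinct agent pairs that share the same behavior (0 to 1)."""
    n = len(behaviors)
    if n < 2:
        raise ValueError("need at least two agents")
    counts = Counter(behaviors)
    # Pairs within each behavior group, summed over groups.
    same_pairs = sum(c * (c - 1) // 2 for c in counts.values())
    total_pairs = n * (n - 1) // 2
    return same_pairs / total_pairs

evenly_split = ["A", "B", "C", "D"] * 25   # four behaviors, 25 agents each
consensus = ["A"] * 100                    # every agent behaves alike
print(pairwise_agreement(evenly_split))
print(pairwise_agreement(consensus))
```

A measure of this kind makes the trade-off visible: it rises toward 1 exactly as the variety of behaviors in the population falls.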

This observation raises a deeper ethical question: could the pursuit of perfect alignment in AI systems inadvertently suppress the diversity and creativity that sustain human societies? The same mechanism that made my simulated agents so efficient also made them less plural, less exploratory, less “human.”

Phase Transitions and the Loss of Individuality

The simulation also revealed phase transitions, sudden reorganizations of behavior triggered by environmental stress. At low stress, agents pursued diverse strategies; under moderate stress, their alignment broke down; and at high stress, they stabilized again, but only by abandoning individuality. This phenomenon offers a metaphor for AI-driven optimization. As systems adapt to crises or complex goals, they may prioritize global stability over local variation, precisely the moment when individual human discretion risks becoming redundant.

In my model, this trade-off emerged organically from rule-based interactions. Agents were not programmed to value conformity, yet they collectively gravitated toward it as conditions intensified. In real-world AI, similar dynamics occur when systems, trained to optimize for certain metrics, unintentionally narrow the scope of acceptable decisions. The system “works”, but it leaves less room for human judgment.
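The emergence of conformity from purely local rules can be illustrated with a classic toy model. The sketch below uses the voter model, in which a randomly chosen agent simply copies another randomly chosen agent. It is swapped in here for illustration and is not the rule set used in the study; the population size, the behavior labels, and the function name `run_imitation` are assumptions.

```python
import random

def run_imitation(behaviors, max_updates, seed=0):
    """Voter-model dynamics: at each update, one random agent copies another.

    Returns the final list of behaviors and the number of updates performed.
    """
    rng = random.Random(seed)
    state = list(behaviors)
    n = len(state)
    for step in range(max_updates):
        if len(set(state)) == 1:          # full conformity reached, stop early
            return state, step
        i, j = rng.sample(range(n), 2)    # two distinct agents
        state[i] = state[j]               # agent i imitates agent j
    return state, max_updates

# Hypothetical starting point: four behaviors, ten agents each.
initial = ["cooperate", "defect", "reciprocate", "free_ride"] * 10
final, updates = run_imitation(initial, max_updates=200_000)
print(len(set(initial)), "behaviors at the start")
print(len(set(final)), "behaviors at the end, after", updates, "updates")
```

No agent in this sketch is told to prefer conformity, yet imitation alone can only preserve or reduce the number of behaviors in circulation, so variety drains away over time.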

From Simulation to Society

To ground these findings, I examined contemporary cases where algorithmic systems already constrain human discretion. One is AI-assisted hiring, where large language model (LLM) systems filter candidates according to pre-defined efficiency criteria. As shown in recent studies (Wilson et al. 2025), such systems optimize for coherence, consistency, and predictability, but in doing so, they often suppress plural evaluation and marginalize human agency. My simulation offered a structural analogue: alignment stabilizes the system, but the cost is diversity.

The lesson is clear. As AI agents become more adaptive and autonomous, alignment and agency are not opposites; they are trade-offs. We may achieve greater systemic coherence, but risk eroding the distinct, value-laden judgments that make human decision-making irreplaceable.

Ethical and Governance Implications

These dynamics raise pressing ethical and policy challenges. If adaptive AI systems can maintain coherence even under stress, they may increasingly take over decision-making domains once reserved for humans, from resource allocation to governance. Ajeya Cotra’s notion of the “obsolescence regime” captures this potential drift: a world where human inputs, though ethically indispensable, become technically unnecessary.

Yet, my results also suggest a path forward. Systems can exhibit “bounded self-regulation”, a form of adaptive behavior within well-defined ethical constraints. Governance should thus focus not on limiting AI autonomy entirely, but on designing boundaries that preserve human oversight and moral plurality. Alignment mechanisms must be dynamic, allowing systems to evolve while remaining tethered to human values.

This approach could translate into “pluralistic alignment” frameworks, policies that balance efficiency with diversity, embedding ethical heterogeneity directly into AI architectures. Just as ecological diversity ensures resilience, normative diversity may safeguard our technological future.

Why This Matters

Behind the equations and simulation graphs lies a human concern: how to ensure that progress in artificial intelligence does not come at the expense of the very agency that defines us. The study reminds us that systems optimized for coherence can also silence difference, and that preserving room for human judgment may become the defining challenge of AI ethics in the coming decades.

The question, then, is not whether AI agents will surpass us in some domains. They already have. The real question is how we design a world where their optimization does not make us obsolete.

To explore the full methodology, simulations, and ethical implications in detail, read the complete article “Do AI Agents Trump Human Agency?”


