A statistically validated stacking ensemble of CNNs and vision transformer for robust maize disease classification

Inspiration

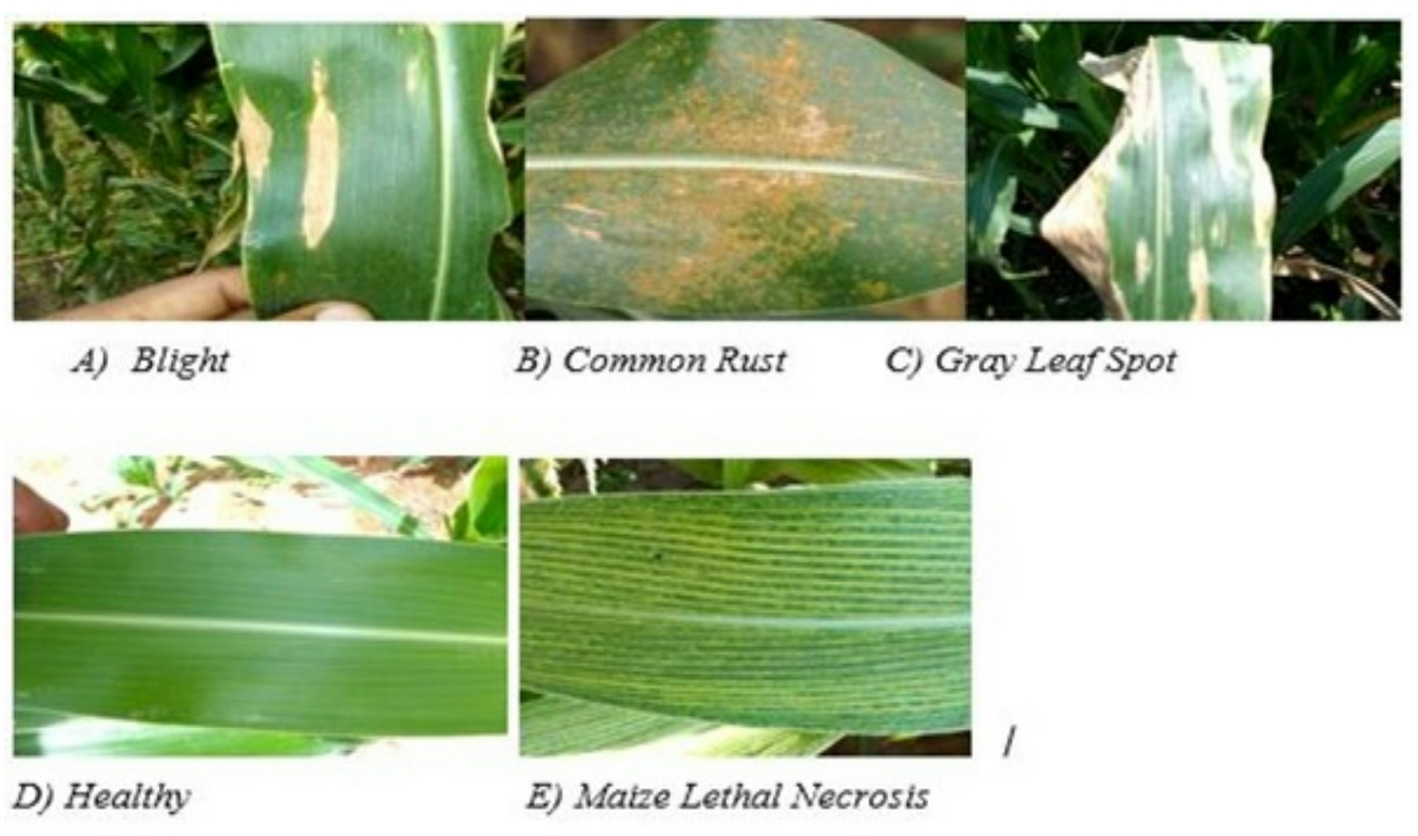

Global food security is under increasing pressure from crop diseases. Maize is a staple crop in many countries, including Ethiopia, where yield losses from foliar diseases such as Turcicum Leaf Blight, Common Rust, Gray Leaf Spot and Maize Lethal Necrosis are a serious threat. Traditional visual inspection by farmers or extension agents is labor-intensive, subjective and prone to misdiagnosis. Deep learning and transformer-based models have shown promise in image-based plant disease diagnosis, but gaps remain. We therefore set out to build a robust disease classification model for maize leaves that can:

(1) Apply multiple architectures (CNNs + Vision Transformer) in a heterogeneous stacking ensemble

(2) Provide statistical validation (K-fold cross-validation, paired t-test) to ensure performance gains are real

(3) Take into account real-world field data (rather than only curated lab images)

(4) Assess computational cost, so that the solution can be realistically applied (e.g., under Ethiopian farming conditions).

Actions

First, we collected a large dataset of maize-leaf images: 15,995 images, combining in-field smartphone photos from Ethiopia with a public Kaggle dataset. Then, we preprocessed the images (resizing to 224×224, normalization, median filtering, and K-means segmentation to isolate leaf regions) to reduce noise and background variation. Next, we selected several pre-trained CNN architectures (DenseNet201, InceptionV3, NASNetMobile, VGG19) and a Vision Transformer (ViT) to act as base learners. We then built a stacking ensemble: every base model extracts features, and a meta-learner (a fully connected network) takes the concatenated feature vectors and learns how best to combine them. We ran stratified five-fold cross-validation on the training/validation split and held out an independent test set (~15% of the data). We also applied a paired t-test comparing the ensemble with the best single model to check statistical significance (p < 0.05). Finally, we analyzed computational cost (training time, inference speed) and deployment scenarios.
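The splitting scheme described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper name `stratified_split`, the toy label counts, and the random seed are hypothetical, and the post does not say which tooling was actually used.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.15, n_folds=5, seed=0):
    """Return (test_indices, folds), where folds is a list of index lists.

    Each class is shuffled and split separately, so the held-out test set
    and every fold keep roughly the class proportions of the full dataset.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    test_indices = []
    folds = [[] for _ in range(n_folds)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_indices.extend(indices[:n_test])
        # Deal the remaining samples of this class round-robin into the folds.
        for position, index in enumerate(indices[n_test:]):
            folds[position % n_folds].append(index)
    return test_indices, folds


# Toy labels: class names come from the post, the counts are made up.
labels = (["common_rust"] * 100
          + ["gray_leaf_spot"] * 60
          + ["turcicum_leaf_blight"] * 40)
test_indices, folds = stratified_split(labels)

for k, validation in enumerate(folds):
    # Fold k validates on `validation` and trains on the other four folds.
    train = [i for j, fold in enumerate(folds) if j != k for i in fold]
    print(f"fold {k}: {len(train)} train, {len(validation)} validation")
```

In practice a library routine such as scikit-learn's `StratifiedKFold` would normally replace the hand-written helper; the point here is only that the test set is set aside once and never enters any fold.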

Outcomes

First, on the hold-out test set, the stacking ensemble achieved 99.15% accuracy. Second, in the five-fold cross-validation, the mean validation accuracy was 99.13% with a very low standard deviation (about ±0.14), indicating high stability. Third, the improvement over the best single model (DenseNet201) was statistically significant (paired t-test, p < 0.05). Fourth, although it performs well, the ensemble comes at the cost of higher inference time (~5× slower than a single DenseNet201) and heavier computational requirements (training on a Tesla P100 GPU took ~6.5 h). Fifth, we emphasize that this cost determines which deployment scenarios the solution suits.
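To make the significance check concrete, here is a minimal sketch of a paired t-test over per-fold accuracies. It assumes the pairing is done fold by fold, which the post does not state explicitly. The accuracy values are hypothetical placeholders, not the paper's numbers, and `paired_t_statistic` is an illustrative helper name.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for two paired samples of equal length."""
    differences = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(differences)
    mean_difference = statistics.mean(differences)
    # Standard error of the mean difference (sample standard deviation / sqrt(n)).
    standard_error = statistics.stdev(differences) / math.sqrt(n)
    return mean_difference / standard_error, n - 1


# Hypothetical per-fold validation accuracies (%), NOT the paper's results.
ensemble = [99.0, 99.2, 99.1, 99.3, 99.0]
densenet201 = [98.5, 98.8, 98.4, 98.9, 98.7]

t, df = paired_t_statistic(ensemble, densenet201)

# Two-sided critical value of Student's t for alpha = 0.05 and df = 4.
T_CRITICAL_DF4 = 2.776
significant = abs(t) > T_CRITICAL_DF4

print(f"ensemble mean = {statistics.mean(ensemble):.2f}, "
      f"std = {statistics.stdev(ensemble):.2f}")
print(f"t = {t:.2f}, df = {df}, significant at 0.05: {significant}")
```

With only five folds the test has four degrees of freedom, so the difference must be consistent across folds, not just large on average, to clear the threshold.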

The heterogeneous stacking ensemble combining multiple CNNs and a Vision Transformer is, to our knowledge, novel in the maize-leaf disease domain. The methodological approach is also rigorous: stratified K-fold cross-validation, a paired t-test, and comparative benchmarking of heavy versus light models. Finally, the field-collected data from Ethiopian farms adds real-world variation (lighting, backgrounds, smartphone cameras), improving generalizability.

The research dataset is available at: https://doi.org/10.57760/sciencedb.28532

Influence

For practitioners (agronomists, extension agents, farmers), this model offers a high-accuracy tool for diagnosing maize leaf diseases in real-world settings, potentially leading to earlier intervention, fewer misdiagnoses, and savings in yield and input costs.

Because the research was done in Ethiopia, it is particularly relevant for sub-Saharan Africa and smallholder farming contexts, where smartphone penetration is growing and crop disease diagnosis remains a challenge.

From a research perspective, the work sets a new baseline (99.15% accuracy) for maize-leaf disease classification with field images, and outlines a methodological standard that others can follow or build upon.

Insights

It’s very encouraging to see research addressing a locally relevant, globally important problem (maize disease) with modern AI methods. The fact that we collected field images makes the work more meaningful and practicable for African agriculture.

The emphasis on statistical validation (paired t-tests) is a welcome step: many papers report high accuracy but don’t show that the improvement is statistically significant or that results are stable across folds. Showing both builds trust.

One thing to watch is how well the model generalizes when the maize variety, disease presentation and background vary more widely than they do in the dataset. We note this. For a farmer, tools that “fail” outside their training domain can still harm trust. What matters even more is real-world usability. We deliver a prototype, which is a good start.


Discover Artificial Intelligence

This is a transdisciplinary, international journal that publishes papers on all aspects of the theory, the methodology and the applications of artificial intelligence (AI).
