Black Phosphorous-based Human-Machine Communication Interface

Published in Materials

According to a World Health Organization (WHO) report, approximately 15% of the global population lives with a disability affecting vision, hearing, speech, or other functions.1 People with disabilities may face a variety of inconveniences and restrictions in their daily lives. Many types of assistive devices have been developed to support hearing, interaction, speaking, touching, and health care.2,3 However, such devices still face several constraints in practical use, including portability, size, flexibility, cost, and ease of fabrication. For visually impaired people who are typing or reading, auditory feedback is a more reliable way of accessing information.4,5 There has been recent interest in human-machine interface (HMI) techniques that use touch sensing to establish real-time, synchronized communication between humans and machines.6,7 Although an HMI needs to combine touch sensing with auditory feedback, the major emphasis so far has been on touch sensing, and research on the various types of sensing feedback is still limited. A few studies have demonstrated realistic visual evaluation of electrical signals acquired under real-time applied pressure, with visual feedback transferred to a smartphone or the cloud via wireless communication.8,9 However, only a limited number of studies have demonstrated the use of touch feedback in visual interpretation, which is the focus of this work.

Many tactile sensors based on two-dimensional materials, including graphene and MXene, have been reported.10,11 Most of them demonstrated applications in monitoring routine human vital signs and activity, such as pulse detection, tactile perception, voice monitoring, blood pressure monitoring, and gait analysis. This is the first time black phosphorus has been used in a tactile sensor for a human-machine communication interface. The fabricated BP@PANI-based piezoresistive tactile sensor shows high sensitivity, a reasonable response time, and high stability.

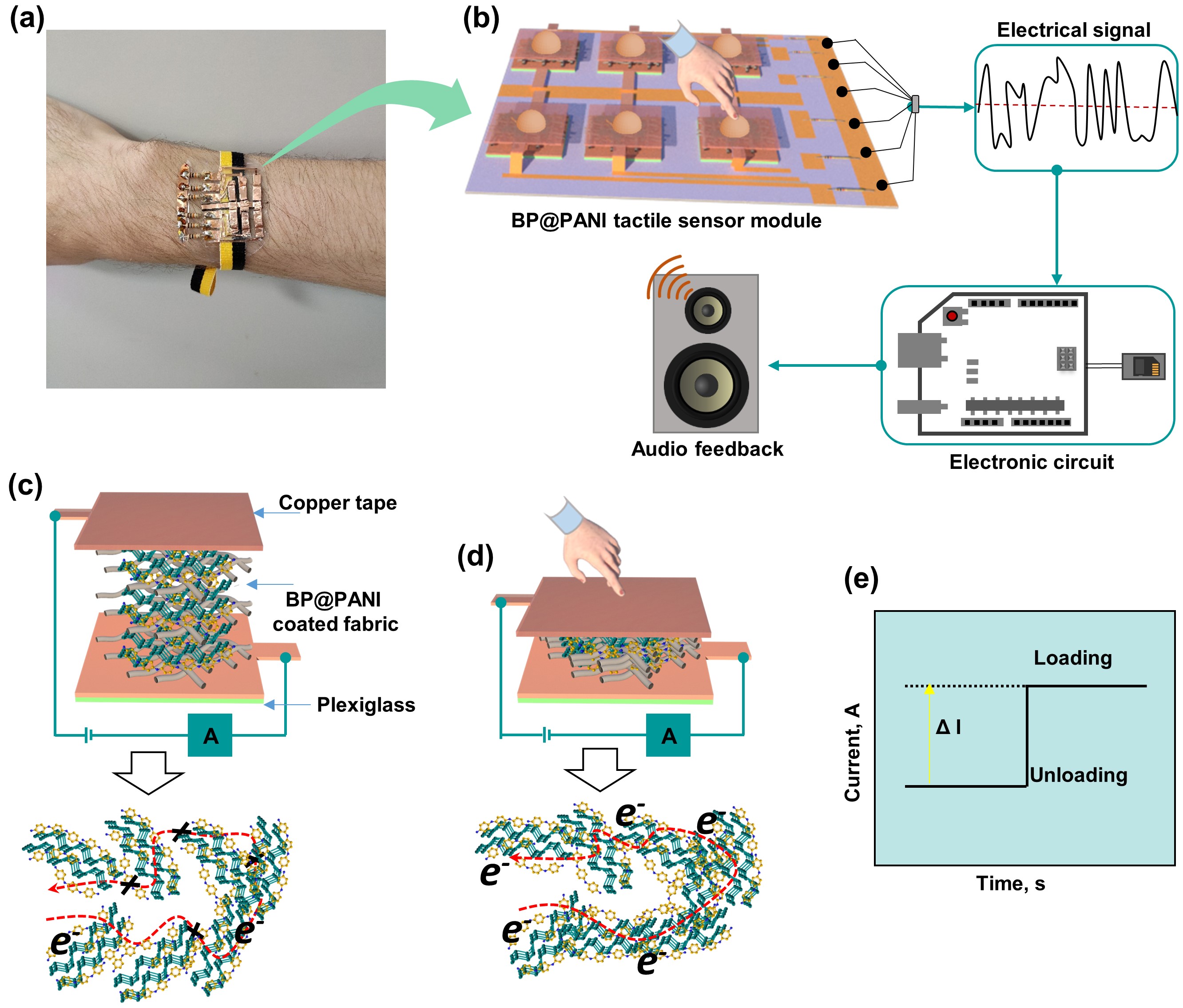

Here, we demonstrate a proof-of-concept auditory feedback system based on a black phosphorus/polyaniline (BP@PANI) tactile sensor. The system functions bidirectionally, providing both touch sensing and feedback. The touch-to-audio conversion targets human-machine communication interfaces for visually impaired users: the prototype employs a six-pixel BP@PANI tactile sensor, whose pixels correspond to the six dots of a braille writing slate, to convert touches in various braille patterns into an audio signal that speaks the relevant word (see Figure 1). Finally, we demonstrated real touch-to-audio conversion by pressing the braille letters A to G, by pronouncing the single word “nanomaterials,” and by carrying out a typical conversation between a human and the machine (see Movie 1).
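
To make the touch-to-text step of this pipeline concrete, the following is a minimal Python sketch of how six pixel readings could be decoded into a braille letter. It is an illustration under stated assumptions, not the implementation used in the paper: the function names (`pressed_dots`, `decode_cell`, `decode_word`), the normalized signal scale, and the `0.5` threshold are all hypothetical. Only the dot patterns for A to G are standard braille. The final speech step is omitted; in a full system the decoded string would be passed to a text-to-speech engine.

```python
from typing import Dict, FrozenSet, Iterable, Sequence

# Standard six-dot braille cells for the letters A-G.
# Dots are numbered 1-3 down the left column and 4-6 down the right column.
BRAILLE_TO_LETTER: Dict[FrozenSet[int], str] = {
    frozenset({1}): "A",
    frozenset({1, 2}): "B",
    frozenset({1, 4}): "C",
    frozenset({1, 4, 5}): "D",
    frozenset({1, 5}): "E",
    frozenset({1, 2, 4}): "F",
    frozenset({1, 2, 4, 5}): "G",
}


def pressed_dots(signals: Sequence[float], threshold: float = 0.5) -> FrozenSet[int]:
    """Return the dot numbers (1-6) whose reading exceeds the threshold.

    `signals` holds one normalized reading per pixel, ordered dot 1 to dot 6.
    The normalization and the threshold value are assumptions for illustration.
    """
    if len(signals) != 6:
        raise ValueError("expected exactly six pixel readings")
    return frozenset(i + 1 for i, s in enumerate(signals) if s > threshold)


def decode_cell(signals: Sequence[float], threshold: float = 0.5) -> str:
    """Map one set of six pixel readings to a letter, or '?' if unrecognized."""
    return BRAILLE_TO_LETTER.get(pressed_dots(signals, threshold), "?")


def decode_word(frames: Iterable[Sequence[float]], threshold: float = 0.5) -> str:
    """Decode a sequence of six-pixel frames into a string of letters."""
    return "".join(decode_cell(frame, threshold) for frame in frames)


if __name__ == "__main__":
    # Three hypothetical frames: dots {1,2}, {1}, and {1,4,5}.
    frames = [
        [0.9, 0.8, 0.0, 0.1, 0.0, 0.0],
        [0.9, 0.0, 0.1, 0.0, 0.0, 0.0],
        [0.8, 0.1, 0.0, 0.9, 0.7, 0.0],
    ]
    print(decode_word(frames))
```

A lookup table keyed by the set of pressed dots keeps the decoding independent of how the pixel signals are acquired, so the thresholding step could be replaced by whatever signal processing a real sensor requires.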

For further information, please read our paper “Black Phosphorous-based Human-Machine Communication Interface,” published in Nature Communications, https://doi.org/10.1038/s41467-022-34482-4

References

- World Report on Disability 2011, Switzerland, Published date 14th December 2011.

- Qu, X. et al. Assistive devices for the people with disabilities enabled by triboelectric nanogenerators. J. Phys. Mater. 4, 034015 (2021).

- Billah, S. M. et al. Accessible gesture typing for non-visual text entry on smartphones. Proc SIGCHI Conf Hum Factor Comput Syst. 376, 1-12 (2019).

- Monticelli, C. et al. Text vocalizing desktop scanner for visually impaired people. In International Conference on Human-Computer Interaction (pp. 62-67). Springer, Cham (2018).

- Lu, L. et al. Flexible noncontact sensing for human-machine interaction. Adv. Mater. 33, 2100218 (2021).

- Wang, H., Ma, X. & Hao, Y. Electronic devices for human-machine interfaces. Adv. Mater. Interfaces 4, 1600709 (2017).

- Xu, K., Lu, Y. & Takei, K. Flexible hybrid sensor systems with feedback functions. Adv. Funct. Mater. 31, 2007436 (2021).

- Kim, J. et al. 2D materials for skin-mountable electronic devices. Adv. Mater. 33, 2005858 (2021).

- Chen, S. et al. Recent developments in graphene-based tactile sensors and e-skins. Adv. Mater. Technol. 3, 1700248 (2018).
